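In the previous chapters, ffplay.c Source Code Analysis [1] and ffplay.c Source Code Analysis [2], ffplay decoded packets into frames and stored them in the corresponding queues. This article covers audio output, video output, and audio-video synchronization.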

Audio Output

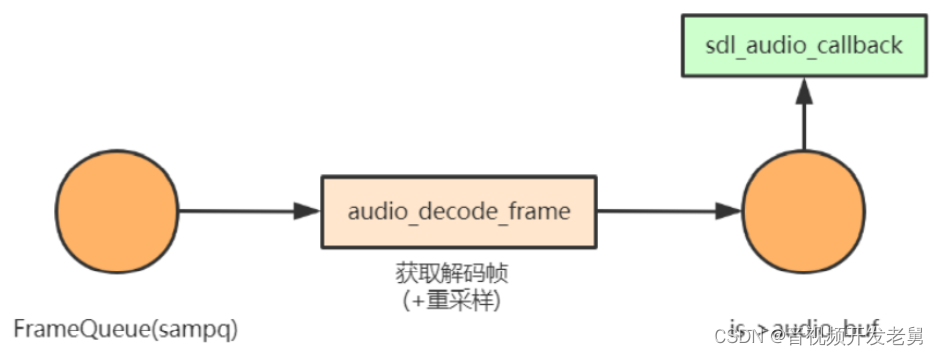

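ffplay's audio output is implemented mainly with SDL, an open-source, cross-platform multimedia development library. Once ffplay has opened SDL audio, SDL calls a callback function whenever it needs more data and tells the application how much to supply. This creates a problem. After FFmpeg decodes an audio AVPacket into an AVFrame, the size of that AVFrame is not necessarily equal to the amount SDL is asking for. If variable-speed playback is implemented, each AVFrame's size after the speed change will also differ from what the SDL callback requests. An extra level of buffering solves this: a Frame read from the FrameQueue is first cached in a buffer, and the SDL callback then reads from that buffer.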


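SDL requests audio data from ffplay through sdl_audio_callback. ffplay takes data out of sampq with audio_decode_frame, stores it in is->audio_buf, and hands it to SDL. On later callbacks, data is taken from audio_buf first. When audio_buf runs short, audio_decode_frame is called again to refill it.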

```c
static int audio_open(void *opaque, int64_t wanted_channel_layout, int wanted_nb_channels, int wanted_sample_rate, struct AudioParams *audio_hw_params)
{
    SDL_AudioSpec wanted_spec, spec;
    const char *env;
    static const int next_nb_channels[] = {0, 0, 1, 6, 2, 6, 4, 6};
    static const int next_sample_rates[] = {0, 44100, 48000, 96000, 192000};
    int next_sample_rate_idx = FF_ARRAY_ELEMS(next_sample_rates) - 1;

    env = SDL_getenv("SDL_AUDIO_CHANNELS");
    if (env) { // if the environment variable is set, it takes priority for the channel count and channel layout
        wanted_nb_channels = atoi(env);
        wanted_channel_layout = av_get_default_channel_layout(wanted_nb_channels);
    }
    if (!wanted_channel_layout || wanted_nb_channels != av_get_channel_layout_nb_channels(wanted_channel_layout)) {
        wanted_channel_layout = av_get_default_channel_layout(wanted_nb_channels);
        wanted_channel_layout &= ~AV_CH_LAYOUT_STEREO_DOWNMIX;
    }
    // derive nb_channels from channel_layout; a mismatched wanted_nb_channels argument is corrected here
    wanted_nb_channels = av_get_channel_layout_nb_channels(wanted_channel_layout);
    wanted_spec.channels = wanted_nb_channels;
    wanted_spec.freq = wanted_sample_rate;
    if (wanted_spec.freq <= 0 || wanted_spec.channels <= 0) {
        av_log(NULL, AV_LOG_ERROR, "Invalid sample rate or channel count!\n");
        return -1;
    }
    while (next_sample_rate_idx && next_sample_rates[next_sample_rate_idx] >= wanted_spec.freq)
        next_sample_rate_idx--;
    wanted_spec.format = AUDIO_S16SYS;
    wanted_spec.silence = 0;
    // SDL_AUDIO_MAX_CALLBACKS_PER_SEC: maximum number of callbacks per second, to avoid calling back too often
    wanted_spec.samples = FFMAX(SDL_AUDIO_MIN_BUFFER_SIZE, 2 << av_log2(wanted_spec.freq / SDL_AUDIO_MAX_CALLBACKS_PER_SEC));
    wanted_spec.callback = sdl_audio_callback; // set the callback
    wanted_spec.userdata = opaque;
    // if opening fails, try other channel counts first, then lower standard sample rates
    while (!(audio_dev = SDL_OpenAudioDevice(NULL, 0, &wanted_spec, &spec, SDL_AUDIO_ALLOW_FREQUENCY_CHANGE | SDL_AUDIO_ALLOW_CHANNELS_CHANGE))) {
        av_log(NULL, AV_LOG_WARNING, "SDL_OpenAudio (%d channels, %d Hz): %s\n",
               wanted_spec.channels, wanted_spec.freq, SDL_GetError());
        wanted_spec.channels = next_nb_channels[FFMIN(7, wanted_spec.channels)];
        if (!wanted_spec.channels) {
            wanted_spec.freq = next_sample_rates[next_sample_rate_idx--];
            wanted_spec.channels = wanted_nb_channels;
            if (!wanted_spec.freq) {
                av_log(NULL, AV_LOG_ERROR,
                       "No more combinations to try, audio open failed\n");
                return -1;
            }
        }
        wanted_channel_layout = av_get_default_channel_layout(wanted_spec.channels);
    }
    // check the actual parameters of the opened device: sample format
    if (spec.format != AUDIO_S16SYS) {
        av_log(NULL, AV_LOG_ERROR,
               "SDL advised audio format %d is not supported!\n", spec.format);
        return -1;
    }
    // channel count: spec.channels is what was obtained, wanted_spec.channels what was requested
    if (spec.channels != wanted_spec.channels) {
        wanted_channel_layout = av_get_default_channel_layout(spec.channels);
        if (!wanted_channel_layout) {
            av_log(NULL, AV_LOG_ERROR,
                   "SDL advised channel count %d is not supported!\n", spec.channels);
            return -1;
        }
    }

    audio_hw_params->fmt = AV_SAMPLE_FMT_S16;
    audio_hw_params->freq = spec.freq;
    audio_hw_params->channel_layout = wanted_channel_layout;
    audio_hw_params->channels = spec.channels;
    // frame_size: bytes occupied by one sample point across all channels
    audio_hw_params->frame_size = av_samples_get_buffer_size(NULL, audio_hw_params->channels, 1, audio_hw_params->fmt, 1);
    audio_hw_params->bytes_per_sec = av_samples_get_buffer_size(NULL, audio_hw_params->channels, audio_hw_params->freq, audio_hw_params->fmt, 1);
    if (audio_hw_params->bytes_per_sec <= 0 || audio_hw_params->frame_size <= 0) {
        av_log(NULL, AV_LOG_ERROR, "av_samples_get_buffer_size failed\n");
        return -1;
    }
    return spec.size; // size of the hardware audio buffer in bytes: samples * channels * bytes_per_sample
}
```

Next, the implementation of sdl_audio_callback:

```c
static void sdl_audio_callback(void *opaque, Uint8 *stream, int len)
{
    VideoState *is = opaque;
    int audio_size, len1;

    audio_callback_time = av_gettime_relative();

    // loop until enough data has been supplied
    while (len > 0) {
        if (is->audio_buf_index >= is->audio_buf_size) { // buffer used up: fetch more with audio_decode_frame
            audio_size = audio_decode_frame(is); // read data and resample
            if (audio_size < 0) {
                /* if error, just output silence */
                is->audio_buf = NULL;
                is->audio_buf_size = SDL_AUDIO_MIN_BUFFER_SIZE / is->audio_tgt.frame_size * is->audio_tgt.frame_size;
            } else {
                if (is->show_mode != SHOW_MODE_VIDEO)
                    update_sample_display(is, (int16_t *)is->audio_buf, audio_size);
                is->audio_buf_size = audio_size;
            }
            is->audio_buf_index = 0;
        }
        // copy only as much as remains in the buffer
        len1 = is->audio_buf_size - is->audio_buf_index;
        if (len1 > len)
            len1 = len;
        // how audio_buf is output depends on audio_volume:
        // if not muted and at maximum volume, copy the data directly
        if (!is->muted && is->audio_buf && is->audio_volume == SDL_MIX_MAXVOLUME)
            memcpy(stream, (uint8_t *)is->audio_buf + is->audio_buf_index, len1);
        else {
            memset(stream, 0, len1); // start from silence; when muted, stream simply stays zero
            if (!is->muted && is->audio_buf) // not muted, volume in (0, SDL_MIX_MAXVOLUME): adjust volume and mix with SDL_MixAudioFormat
                SDL_MixAudioFormat(stream, (uint8_t *)is->audio_buf + is->audio_buf_index, AUDIO_S16SYS, len1, is->audio_volume);
        }
        len -= len1;
        stream += len1;
        // advance audio_buf_index to the first byte of audio_buf not yet copied to stream
        is->audio_buf_index += len1;
    }
    is->audio_write_buf_size = is->audio_buf_size - is->audio_buf_index;
    /* Let's assume the audio driver that is used by SDL has two periods. */
    if (!isnan(is->audio_clock)) {
        set_clock_at(&is->audclk, is->audio_clock - (double)(2 * is->audio_hw_buf_size + is->audio_write_buf_size) / is->audio_tgt.bytes_per_sec, is->audio_clock_serial, audio_callback_time / 1000000.0);
        sync_clock_to_slave(&is->extclk, &is->audclk);
    }
}
```

The callback outputs audio_buf to stream. If audio_volume is at maximum, a plain memcpy into stream is enough. Otherwise, for example after the user has changed the volume, SDL_MixAudioFormat is called to adjust it. Once audio_buf is used up, audio_decode_frame is called to refill it. audio_decode_frame is analyzed next.

```c
static int audio_decode_frame(VideoState *is)
{
    int data_size, resampled_data_size;
    int64_t dec_channel_layout;
    av_unused double audio_clock0;
    int wanted_nb_samples;
    Frame *af;

    if (is->paused) // paused: return without taking frames from the queue, so no audio is played
        return -1;

    do { // 1. take a frame from sampq; after a seek the serial is discontinuous and stale frames are dropped
#if defined(_WIN32)
        while (frame_queue_nb_remaining(&is->sampq) == 0) {
            if ((av_gettime_relative() - audio_callback_time) > 1000000LL * is->audio_hw_buf_size / is->audio_tgt.bytes_per_sec / 2)
                return -1;
            av_usleep (1000);
        }
#endif
        if (!(af = frame_queue_peek_readable(&is->sampq))) // wait until a frame is readable, then peek at the head of the queue
            return -1;
        frame_queue_next(&is->sampq); // advance the read index: only now does the Frame leave the queue (dropped if stale)
    } while (af->serial != is->audioq.serial); // is the serial still current?

    // number of bytes occupied by this frame
    data_size = av_samples_get_buffer_size(NULL, af->frame->channels,
                                           af->frame->nb_samples,
                                           af->frame->format, 1);

    dec_channel_layout =
        (af->frame->channel_layout && af->frame->channels == av_get_channel_layout_nb_channels(af->frame->channel_layout)) ?
        af->frame->channel_layout : av_get_default_channel_layout(af->frame->channels);
    wanted_nb_samples = synchronize_audio(is, af->frame->nb_samples);

    // decide whether the resampler needs to be (re)initialized
    if (af->frame->format != is->audio_src.fmt ||
        dec_channel_layout != is->audio_src.channel_layout ||
        af->frame->sample_rate != is->audio_src.freq ||
        (wanted_nb_samples != af->frame->nb_samples && !is->swr_ctx)) {
        swr_free(&is->swr_ctx);
        // set the output (target) and input (source) parameters
        is->swr_ctx = swr_alloc_set_opts(NULL,
                                         is->audio_tgt.channel_layout, is->audio_tgt.fmt, is->audio_tgt.freq,
                                         dec_channel_layout, af->frame->format, af->frame->sample_rate,
                                         0, NULL);
        if (!is->swr_ctx || swr_init(is->swr_ctx) < 0) {
            av_log(NULL, AV_LOG_ERROR,
                   "Cannot create sample rate converter for conversion of %d Hz %s %d channels to %d Hz %s %d channels!\n",
                   af->frame->sample_rate, av_get_sample_fmt_name(af->frame->format), af->frame->channels,
                   is->audio_tgt.freq, av_get_sample_fmt_name(is->audio_tgt.fmt), is->audio_tgt.channels);
            swr_free(&is->swr_ctx);
            return -1;
        }
        is->audio_src.channel_layout = dec_channel_layout;
        is->audio_src.channels = af->frame->channels;
        is->audio_src.freq = af->frame->sample_rate;
        is->audio_src.fmt = af->frame->format;
    }

    // a resampler has been set up
    if (is->swr_ctx) {
        const uint8_t **in = (const uint8_t **)af->frame->extended_data;
        uint8_t **out = &is->audio_buf1;
        int out_count = (int64_t)wanted_nb_samples * is->audio_tgt.freq / af->frame->sample_rate + 256;
        int out_size  = av_samples_get_buffer_size(NULL, is->audio_tgt.channels, out_count, is->audio_tgt.fmt, 0);
        int len2;
        if (out_size < 0) {
            av_log(NULL, AV_LOG_ERROR, "av_samples_get_buffer_size() failed\n");
            return -1;
        }
        if (wanted_nb_samples != af->frame->nb_samples) {
            if (swr_set_compensation(is->swr_ctx, (wanted_nb_samples - af->frame->nb_samples) * is->audio_tgt.freq / af->frame->sample_rate,
                                     wanted_nb_samples * is->audio_tgt.freq / af->frame->sample_rate) < 0) {
                av_log(NULL, AV_LOG_ERROR, "swr_set_compensation() failed\n");
                return -1;
            }
        }
        av_fast_malloc(&is->audio_buf1, &is->audio_buf1_size, out_size);
        if (!is->audio_buf1)
            return AVERROR(ENOMEM);
        // resample: the return value is the number of output samples per channel
        len2 = swr_convert(is->swr_ctx, out, out_count, in, af->frame->nb_samples);
        if (len2 < 0) {
            av_log(NULL, AV_LOG_ERROR, "swr_convert() failed\n");
            return -1;
        }
        if (len2 == out_count) {
            av_log(NULL, AV_LOG_WARNING, "audio buffer is probably too small\n");
            if (swr_init(is->swr_ctx) < 0)
                swr_free(&is->swr_ctx);
        }
        is->audio_buf = is->audio_buf1;
        resampled_data_size = len2 * is->audio_tgt.channels * av_get_bytes_per_sample(is->audio_tgt.fmt);
    } else {
        // no resampling needed: point directly at the audio data in the frame
        is->audio_buf = af->frame->data[0];
        resampled_data_size = data_size;
    }

    audio_clock0 = is->audio_clock;
    /* update the audio clock with the pts */
    if (!isnan(af->pts))
        is->audio_clock = af->pts + (double) af->frame->nb_samples / af->frame->sample_rate;
    else
        is->audio_clock = NAN;
    is->audio_clock_serial = af->serial;
#ifdef DEBUG
    {
        static double last_clock;
        printf("audio: delay=%0.3f clock=%0.3f clock0=%0.3f\n",
               is->audio_clock - last_clock,
               is->audio_clock, audio_clock0);
        last_clock = is->audio_clock;
    }
#endif
    return resampled_data_size; // size of the data in audio_buf
}
```

The function works in three steps:

1. It reads a frame from sampq and checks whether its serial is still current. If it is not, for example after a seek, the frame is dropped.
2. It computes the frame's size in bytes with av_samples_get_buffer_size.
3. It resamples when needed. The format of a decoded audio frame is not necessarily supported by SDL, so the frame may have to be converted into an audio format SDL supports. The resampler is also used when synchronize_audio asks for a different number of samples than the frame contains.

Video Output

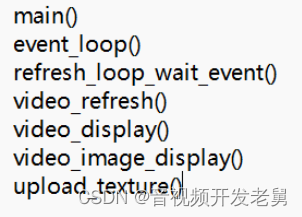

ffplay uses SDL for video output, and video display is driven from the main function.

```c
int main(int argc, char **argv)
{
    /* ... */
    flags = SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER;
    if (audio_disable)
        flags &= ~SDL_INIT_AUDIO;
    else {
        /* Try to work around an occasional ALSA buffer underflow issue when the
         * period size is NPOT due to ALSA resampling by forcing the buffer size. */
        if (!SDL_getenv("SDL_AUDIO_ALSA_SET_BUFFER_SIZE"))
            SDL_setenv("SDL_AUDIO_ALSA_SET_BUFFER_SIZE","1", 1);
    }
    if (display_disable)
        flags &= ~SDL_INIT_VIDEO;
    // 1. initialize SDL
    if (SDL_Init (flags)) {
        av_log(NULL, AV_LOG_FATAL, "Could not initialize SDL - %s\n", SDL_GetError());
        av_log(NULL, AV_LOG_FATAL, "(Did you set the DISPLAY variable?)\n");
        exit(1);
    }

    SDL_EventState(SDL_SYSWMEVENT, SDL_IGNORE);
    SDL_EventState(SDL_USEREVENT, SDL_IGNORE);

    av_init_packet(&flush_pkt);
    flush_pkt.data = (uint8_t *)&flush_pkt;

    if (!display_disable) {
        int flags = SDL_WINDOW_HIDDEN;
        if (alwaysontop)
#if SDL_VERSION_ATLEAST(2,0,5)
            flags |= SDL_WINDOW_ALWAYS_ON_TOP;
#else
            av_log(NULL, AV_LOG_WARNING, "Your SDL version doesn't support SDL_WINDOW_ALWAYS_ON_TOP. Feature will be inactive.\n");
#endif
        if (borderless)
            flags |= SDL_WINDOW_BORDERLESS;
        else
            flags |= SDL_WINDOW_RESIZABLE;
        // 2. create the window
        window = SDL_CreateWindow(program_name, SDL_WINDOWPOS_UNDEFINED, SDL_WINDOWPOS_UNDEFINED, default_width, default_height, flags);
        SDL_SetHint(SDL_HINT_RENDER_SCALE_QUALITY, "linear");
        if (window) {
            renderer = SDL_CreateRenderer(window, -1, SDL_RENDERER_ACCELERATED | SDL_RENDERER_PRESENTVSYNC);
            if (!renderer) {
                av_log(NULL, AV_LOG_WARNING, "Failed to initialize a hardware accelerated renderer: %s\n", SDL_GetError());
                renderer = SDL_CreateRenderer(window, -1, 0);
            }
            if (renderer) {
                if (!SDL_GetRendererInfo(renderer, &renderer_info))
                    av_log(NULL, AV_LOG_VERBOSE, "Initialized %s renderer.\n", renderer_info.name);
            }
        }
        if (!window || !renderer || !renderer_info.num_texture_formats) {
            av_log(NULL, AV_LOG_FATAL, "Failed to create window or renderer: %s", SDL_GetError());
            do_exit(NULL);
        }
    }

    // 3. initialize the queues and create the data reading thread read_thread
    is = stream_open(input_filename, file_iformat);
    if (!is) {
        av_log(NULL, AV_LOG_FATAL, "Failed to initialize VideoState!\n");
        do_exit(NULL);
    }

    // 4. event handling
    event_loop(is);

    /* never returns */

    return 0;
}
```

Essentially all window handling happens in main. After SDL creates the main window, a renderer is created for rendering output. event_loop then polls for playback-control events and also drives video display.

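With a breakpoint set in the video output code, the call stack is event_loop -> refresh_loop_wait_event -> video_refresh -> video_display -> video_image_display -> upload_texture.

event_loop starts processing SDL events: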

```c
static void event_loop(VideoState *cur_stream)
{
    SDL_Event event;
    double incr, pos, frac;

    for (;;) {
        double x;
        refresh_loop_wait_event(cur_stream, &event); // video display
        switch (event.type) {
        // key events
        /* ... */
        }
    }
}
```

Video display happens mainly in refresh_loop_wait_event:

```c
static void refresh_loop_wait_event(VideoState *is, SDL_Event *event) {
    double remaining_time = 0.0;
    SDL_PumpEvents();
    while (!SDL_PeepEvents(event, 1, SDL_GETEVENT, SDL_FIRSTEVENT, SDL_LASTEVENT)) {
        if (!cursor_hidden && av_gettime_relative() - cursor_last_shown > CURSOR_HIDE_DELAY) {
            SDL_ShowCursor(0);
            cursor_hidden = 1;
        }
        if (remaining_time > 0.0)
            av_usleep((int64_t)(remaining_time * 1000000.0));
        remaining_time = REFRESH_RATE;
        if (is->show_mode != SHOW_MODE_NONE && (!is->paused || is->force_refresh))
            video_refresh(is, &remaining_time); // show the picture; remaining_time: how long to sleep before the next round, to keep the output steady
        SDL_PumpEvents();
    }
}
```

video_refresh is implemented as follows:

static void video_refresh(void *opaque, double *remaining_time)

{

VideoState *is = opaque;

double time;

Frame *sp, *sp2;

if (!is->paused && get_master_sync_type(is) == AV_SYNC_EXTERNAL_CLOCK && is->realtime)

check_external_clock_speed(is);

if (!display_disable && is->show_mode != SHOW_MODE_VIDEO && is->audio_st) {

time = av_gettime_relative() / 1000000.0;

if (is->force_refresh || is->last_vis_time + rdftspeed < time) {

video_display(is);

is->last_vis_time = time;

}

*remaining_time = FFMIN(*remaining_time, is->last_vis_time + rdftspeed - time);

}

if (is->video_st) {

retry:

if (frame_queue_nb_remaining(&is->pictq) == 0) {//判断队列是否为空

// nothing to do, no picture to display in the queue

} else {

double last_duration, duration, delay;

Frame *vp, *lastvp;

/* dequeue the picture */

lastvp = frame_queue_peek_last(&is->pictq);//获取上一帧,已在显示

vp = frame_queue_peek(&is->pictq);//获取待显示帧

if (vp->serial != is->videoq.serial) {//如果序列不连续(比如seek),则将其出队列,以尽快读取最新的序列

frame_queue_next(&is->pictq);//丢帧

goto retry;

}

if (lastvp->serial != vp->serial)//新的播放序列重置当前时间

is->frame_timer = av_gettime_relative() / 1000000.0;//frame_timer自己独立的时间轴

if (is->paused)//暂停状态,一直显示上一帧

goto display;

/* compute nominal last_duration */

last_duration = vp_duration(is, lastvp, vp);//计算相邻两帧间隔时长

delay = compute_target_delay(last_duration, is);//计算上一帧lastvp还需要播放时间

time= av_gettime_relative()/1000000.0;//当前时间

if (time < is->frame_timer + delay) {//如果还没有到播放时间,说明当前显示帧还需要继续显示

*remaining_time = FFMIN(is->frame_timer + delay - time, *remaining_time);//延迟的时间,继续显示时间长

goto display;

}

//该到vp播放了

is->frame_timer += delay;

if (delay > 0 && time - is->frame_timer > AV_SYNC_THRESHOLD_MAX)

is->frame_timer = time;

SDL_LockMutex(is->pictq.mutex);

if (!isnan(vp->pts))

update_video_pts(is, vp->pts, vp->pos, vp->serial);

SDL_UnlockMutex(is->pictq.mutex);

//判断丢帧处理

if (frame_queue_nb_remaining(&is->pictq) > 1) {//队列至少有2帧

Frame *nextvp = frame_queue_peek_next(&is->pictq);//取下一帧

duration = vp_duration(is, vp, nextvp);

if(!is->step && //非逐帧播放才检测是否需要丢帧

(framedrop>0 //framedrop = 0,不开启丢帧

|| (framedrop && get_master_sync_type(is) != AV_SYNC_VIDEO_MASTER)) && //如果同步时钟以视频为基准不需要丢帧,非视频时钟为基准,才需要丢帧

time > is->frame_timer + duration){//落后,也需要丢帧

is->frame_drops_late++;//累计丢帧

frame_queue_next(&is->pictq);

goto retry;

}

}

//字幕显示

if (is->subtitle_st) {

........

}

frame_queue_next(&is->pictq);

is->force_refresh = 1;

if (is->step && !is->paused)

stream_toggle_pause(is);

}

display:

/* display picture */

if (!display_disable && is->force_refresh && is->show_mode == SHOW_MODE_VIDEO && is->pictq.rindex_shown)

video_display(is);//显示图像

}

is->force_refresh = 0;

if (show_status) {

AVBPrint buf;

static int64_t last_time;

int64_t cur_time;

int aqsize, vqsize, sqsize;

double av_diff;

cur_time = av_gettime_relative();

if (!last_time || (cur_time - last_time) >= 30000) {

aqsize = 0;

vqsize = 0;

sqsize = 0;

if (is->audio_st)

aqsize = is->audioq.size;

if (is->video_st)

vqsize = is->videoq.size;

if (is->subtitle_st)

sqsize = is->subtitleq.size;

av_diff = 0;

if (is->audio_st && is->video_st)

av_diff = get_clock(&is->audclk) - get_clock(&is->vidclk);

else if (is->video_st)

av_diff = get_master_clock(is) - get_clock(&is->vidclk);

else if (is->audio_st)

av_diff = get_master_clock(is) - get_clock(&is->audclk);

av_bprint_init(&buf, 0, AV_BPRINT_SIZE_AUTOMATIC);

av_bprintf(&buf,

"%7.2f %s:%7.3f fd=%4d aq=%5dKB vq=%5dKB sq=%5dB f=%"PRId64"/%"PRId64" \r",

get_master_clock(is),

(is->audio_st && is->video_st) ? "A-V" : (is->video_st ? "M-V" : (is->audio_st ? "M-A" : " ")),

av_diff,

is->frame_drops_early + is->frame_drops_late,

aqsize / 1024,

vqsize / 1024,

sqsize,

is->video_st ? is->viddec.avctx->pts_correction_num_faulty_dts : 0,

is->video_st ? is->viddec.avctx->pts_correction_num_faulty_pts : 0);

if (show_status == 1 && AV_LOG_INFO > av_log_get_level())

fprintf(stderr, "%s", buf.str);

else

av_log(NULL, AV_LOG_INFO, "%s", buf.str);

fflush(stderr);

av_bprint_finalize(&buf, NULL);

last_time = cur_time;

}

}

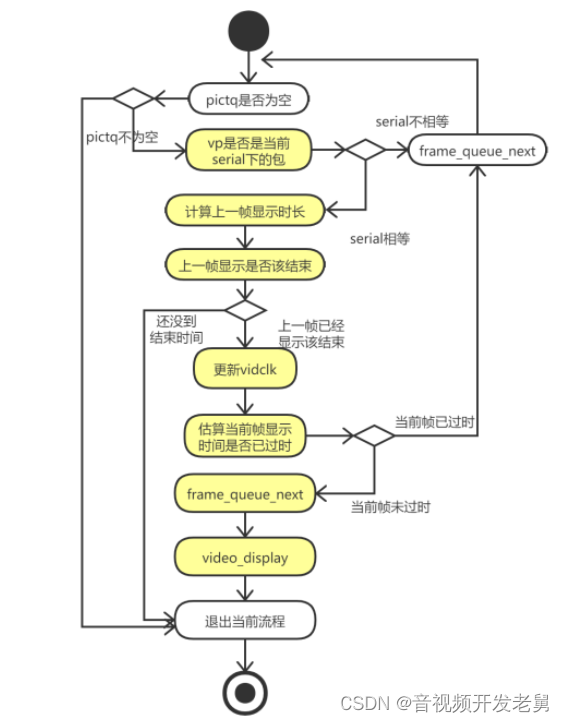

} 其处理流程:


```c
static void video_display(VideoState *is)
{
    if (!is->width)
        video_open(is);

    SDL_SetRenderDrawColor(renderer, 0, 0, 0, 255);
    SDL_RenderClear(renderer); // clear the renderer
    if (is->audio_st && is->show_mode != SHOW_MODE_VIDEO)
        video_audio_display(is); // audio visualization (used when show_mode is not SHOW_MODE_VIDEO)
    else if (is->video_st)
        video_image_display(is); // image display
    SDL_RenderPresent(renderer);
}
```

video_image_display works in three steps:

1. frame_queue_peek_last takes the frame to be displayed.
2. upload_texture copies it into an SDL_Texture.
3. SDL_RenderCopyEx copies the texture to the renderer for display.

```c
static void video_image_display(VideoState *is)
{
    Frame *vp;
    Frame *sp = NULL;
    SDL_Rect rect;

    vp = frame_queue_peek_last(&is->pictq); // the video frame to display
    if (is->subtitle_st) { // subtitle display
        if (frame_queue_nb_remaining(&is->subpq) > 0) {
            sp = frame_queue_peek(&is->subpq);

            if (vp->pts >= sp->pts + ((float) sp->sub.start_display_time / 1000)) {
                if (!sp->uploaded) {
                    uint8_t* pixels[4];
                    int pitch[4];
                    int i;
                    if (!sp->width || !sp->height) {
                        sp->width = vp->width;
                        sp->height = vp->height;
                    }
                    if (realloc_texture(&is->sub_texture, SDL_PIXELFORMAT_ARGB8888, sp->width, sp->height, SDL_BLENDMODE_BLEND, 1) < 0)
                        return;

                    for (i = 0; i < sp->sub.num_rects; i++) {
                        AVSubtitleRect *sub_rect = sp->sub.rects[i];

                        sub_rect->x = av_clip(sub_rect->x, 0, sp->width );
                        sub_rect->y = av_clip(sub_rect->y, 0, sp->height);
                        sub_rect->w = av_clip(sub_rect->w, 0, sp->width  - sub_rect->x);
                        sub_rect->h = av_clip(sub_rect->h, 0, sp->height - sub_rect->y);

                        is->sub_convert_ctx = sws_getCachedContext(is->sub_convert_ctx,
                            sub_rect->w, sub_rect->h, AV_PIX_FMT_PAL8,
                            sub_rect->w, sub_rect->h, AV_PIX_FMT_BGRA,
                            0, NULL, NULL, NULL);
                        if (!is->sub_convert_ctx) {
                            av_log(NULL, AV_LOG_FATAL, "Cannot initialize the conversion context\n");
                            return;
                        }
                        if (!SDL_LockTexture(is->sub_texture, (SDL_Rect *)sub_rect, (void **)pixels, pitch)) {
                            sws_scale(is->sub_convert_ctx, (const uint8_t * const *)sub_rect->data, sub_rect->linesize,
                                      0, sub_rect->h, pixels, pitch);
                            SDL_UnlockTexture(is->sub_texture);
                        }
                    }
                    sp->uploaded = 1;
                }
            } else
                sp = NULL;
        }
    }

    // the display rectangle is recomputed on every display
    calculate_display_rect(&rect, is->xleft, is->ytop, is->width, is->height, vp->width, vp->height, vp->sar);

    if (!vp->uploaded) { // has vp already been uploaded to the texture?
        if (upload_texture(&is->vid_texture, vp->frame, &is->img_convert_ctx) < 0)
            return;
        vp->uploaded = 1;
        vp->flip_v = vp->frame->linesize[0] < 0;
    }

    set_sdl_yuv_conversion_mode(vp->frame);
    SDL_RenderCopyEx(renderer, is->vid_texture, NULL, &rect, 0, NULL, vp->flip_v ? SDL_FLIP_VERTICAL : 0); // copy the texture to the renderer
    set_sdl_yuv_conversion_mode(NULL);
    if (sp) { // subtitle rendering
#if USE_ONEPASS_SUBTITLE_RENDER
        SDL_RenderCopy(renderer, is->sub_texture, NULL, &rect);
#else
        int i;
        double xratio = (double)rect.w / (double)sp->width;
        double yratio = (double)rect.h / (double)sp->height;
        for (i = 0; i < sp->sub.num_rects; i++) {
            SDL_Rect *sub_rect = (SDL_Rect*)sp->sub.rects[i];
            SDL_Rect target = {.x = rect.x + sub_rect->x * xratio,
                               .y = rect.y + sub_rect->y * yratio,
                               .w = sub_rect->w * xratio,
                               .h = sub_rect->h * yratio};
            SDL_RenderCopy(renderer, is->sub_texture, sub_rect, &target);
        }
#endif
    }
}
```

upload_texture transfers the AVFrame's image data into an SDL texture:

```c
static int upload_texture(SDL_Texture **tex, AVFrame *frame, struct SwsContext **img_convert_ctx) {
    int ret = 0;
    Uint32 sdl_pix_fmt;
    SDL_BlendMode sdl_blendmode;
    // map the frame's pixel format to the corresponding SDL pixel format
    get_sdl_pix_fmt_and_blendmode(frame->format, &sdl_pix_fmt, &sdl_blendmode);
    // realloc_texture: (re)allocate the texture (*tex, i.e. &is->vid_texture) for the new SDL pixel format
    if (realloc_texture(tex, sdl_pix_fmt == SDL_PIXELFORMAT_UNKNOWN ? SDL_PIXELFORMAT_ARGB8888 : sdl_pix_fmt, frame->width, frame->height, sdl_blendmode, 0) < 0)
        return -1;
    switch (sdl_pix_fmt) {
        case SDL_PIXELFORMAT_UNKNOWN: // SDL does not support the frame's format: convert it to AV_PIX_FMT_BGRA, which corresponds to SDL_PIXELFORMAT_BGRA32
            /* This should only happen if we are not using avfilter... */
            *img_convert_ctx = sws_getCachedContext(*img_convert_ctx,
                frame->width, frame->height, frame->format, frame->width, frame->height,
                AV_PIX_FMT_BGRA, sws_flags, NULL, NULL, NULL);
            if (*img_convert_ctx != NULL) {
                uint8_t *pixels[4];
                int pitch[4];
                if (!SDL_LockTexture(*tex, NULL, (void **)pixels, pitch)) {
                    // pixel format (and resolution) conversion, e.g. scaling; libyuv or a shader would be more efficient
                    sws_scale(*img_convert_ctx, (const uint8_t * const *)frame->data, frame->linesize,
                              0, frame->height, pixels, pitch);
                    SDL_UnlockTexture(*tex);
                }
            } else {
                av_log(NULL, AV_LOG_FATAL, "Cannot initialize the conversion context\n");
                ret = -1;
            }
            break;
        case SDL_PIXELFORMAT_IYUV: // the frame's format maps to SDL_PIXELFORMAT_IYUV: no conversion needed, update the texture with SDL_UpdateYUVTexture
            if (frame->linesize[0] > 0 && frame->linesize[1] > 0 && frame->linesize[2] > 0) {
                ret = SDL_UpdateYUVTexture(*tex, NULL, frame->data[0], frame->linesize[0],
                                                       frame->data[1], frame->linesize[1],
                                                       frame->data[2], frame->linesize[2]);
            } else if (frame->linesize[0] < 0 && frame->linesize[1] < 0 && frame->linesize[2] < 0) {
                ret = SDL_UpdateYUVTexture(*tex, NULL, frame->data[0] + frame->linesize[0] * (frame->height                    - 1), -frame->linesize[0],
                                                       frame->data[1] + frame->linesize[1] * (AV_CEIL_RSHIFT(frame->height, 1) - 1), -frame->linesize[1],
                                                       frame->data[2] + frame->linesize[2] * (AV_CEIL_RSHIFT(frame->height, 1) - 1), -frame->linesize[2]);
            } else {
                av_log(NULL, AV_LOG_ERROR, "Mixed negative and positive linesizes are not supported.\n");
                return -1;
            }
            break;
        // the frame's format maps to another SDL format: no conversion needed, update the texture with SDL_UpdateTexture
        default:
            if (frame->linesize[0] < 0) {
                ret = SDL_UpdateTexture(*tex, NULL, frame->data[0] + frame->linesize[0] * (frame->height - 1), -frame->linesize[0]);
            } else {
                ret = SDL_UpdateTexture(*tex, NULL, frame->data[0], frame->linesize[0]);
            }
            break;
    }
    return ret;
}
```

Audio-Video Synchronization

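Why is audio-video synchronization needed at all? As the audio and video output sections above show, audio and video are output on different threads. Audio and video frames with the same pts are therefore not necessarily decoded at the same moment, and sometimes the pts values are even discontinuous because of how the stream was encoded or muxed. If the streams were played back without any handling, sound and picture would drift apart. Playback speed and timing of both streams therefore have to be controlled to keep them in sync.

ffplay has three synchronization strategies: sync to audio, sync to video, and sync to an external clock. The human ear is more sensitive to changes in sound than the eye is to changes in the picture. The usual strategy is therefore to sync to audio: video is synchronized to audio, and frames are dropped or repeated as needed to catch up with or wait for the audio. ffplay also syncs to the audio clock by default.

Synchronization relies on the notion of a "clock". Audio, video, and the external clock each have their own independent clock, and each sets its own. Whichever one is chosen as the reference (the master), the others only read that clock to synchronize against it. The clock structure is defined as follows: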

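```c
typedef struct Clock {
    double pts;           // clock base: pts of the current (to-be-played) frame; once played, it becomes the previous frame
    double pts_drift;     // difference between the current pts and the current system time; audio and video each have their own
    double last_updated;  // system time of the last update
    double speed;         // clock speed, used to control playback speed
    int serial;           // playback serial: a continuous stretch of playback; each seek starts a new serial
    int paused;           // 1 means paused
    int *queue_serial;    // points to the packet queue's serial (packet_serial)
} Clock;
```

A clock has to be "set" over and over. set_clock_at(Clock *c, double pts, int serial, double time) sets it from a pts, a serial, and time (the system time). The value read back is an estimate, derived from the pts_drift recorded at the last set.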

Syncing to the Audio Clock

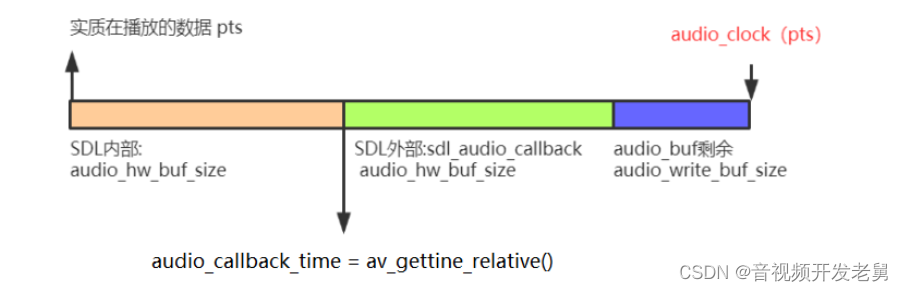

By default, ffplay synchronizes to the audio clock. The audio clock is set in sdl_audio_callback:

```c
static void sdl_audio_callback(void *opaque, Uint8 *stream, int len)
{
    audio_callback_time = av_gettime_relative();
    /* ... */
    /* Let's assume the audio driver that is used by SDL has two periods. */
    if (!isnan(is->audio_clock)) {
        set_clock_at(&is->audclk, is->audio_clock - (double)(2 * is->audio_hw_buf_size + is->audio_write_buf_size) / is->audio_tgt.bytes_per_sec, is->audio_clock_serial, audio_callback_time / 1000000.0);
        sync_clock_to_slave(&is->extclk, &is->audclk);
    }
}
```

is->audio_clock is assigned in audio_decode_frame:

static int audio_decode_frame(VideoState *is)

{

........................................................

/* update the audio clock with the pts */

if (!isnan(af->pts))

is->audio_clock = af->pts + (double) af->frame->nb_samples / af->frame->sample_rate;

else

is->audio_clock = NAN;

is->audio_clock_serial = af->serial;

................................................

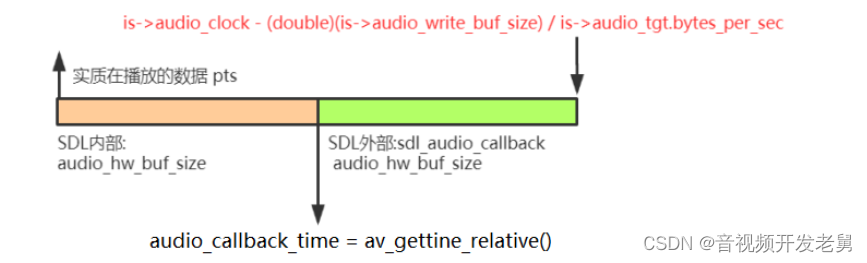

}从这⾥可以看出来,这⾥的时间戳是audio_buf结束位置的时间戳,⽽不是audio_buf起始位置的时间戳, 所以当audio_buf有剩余时(剩余的⻓度记录在audio_write_buf_size),那实际数据的pts就变成is- >audio_clock - (double)(is->audio_write_buf_size) / is->audio_tgt.bytes_per_sec。

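In addition, the 2 * audio_hw_buf_size bytes already handed to SDL have not actually been played yet either, since the code assumes the audio driver used by SDL has two periods. So the clock value becomes:

```c
is->audio_clock - (double)(2 * is->audio_hw_buf_size + is->audio_write_buf_size) / is->audio_tgt.bytes_per_sec
```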

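The corrected value is what sdl_audio_callback passes to set_clock_at:

```c
set_clock_at(&is->audclk, is->audio_clock - (double)(2 * is->audio_hw_buf_size + is->audio_write_buf_size) / is->audio_tgt.bytes_per_sec, is->audio_clock_serial, audio_callback_time / 1000000.0);
```

Next, video is synchronized to the audio clock. If video is playing too fast, the previous frame is shown again so that video waits for audio. If video is playing too slowly, frames are dropped so that it catches up. This logic lives in video_refresh: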

```c
static void video_refresh(void *opaque, double *remaining_time)
{
    /* ... */
            /* compute nominal last_duration */
            last_duration = vp_duration(is, lastvp, vp);     // interval between two adjacent frames
            delay = compute_target_delay(last_duration, is); // how much longer lastvp should stay on screen

            time= av_gettime_relative()/1000000.0; // current time
            if (time < is->frame_timer + delay) { // not yet time for vp: the frame on screen must stay
                *remaining_time = FFMIN(is->frame_timer + delay - time, *remaining_time); // how long to wait before checking again
                goto display;
            }

            // time to display vp
            is->frame_timer += delay;
            if (delay > 0 && time - is->frame_timer > AV_SYNC_THRESHOLD_MAX)
                is->frame_timer = time;

            SDL_LockMutex(is->pictq.mutex);
            if (!isnan(vp->pts))
                update_video_pts(is, vp->pts, vp->pos, vp->serial);
            SDL_UnlockMutex(is->pictq.mutex);

            if (frame_queue_nb_remaining(&is->pictq) > 1) { // at least 2 frames in the queue
                Frame *nextvp = frame_queue_peek_next(&is->pictq); // peek at the next frame
                duration = vp_duration(is, vp, nextvp);
                if(!is->step && // only consider dropping when not stepping frame by frame
                   (framedrop>0 || // framedrop > 0: always allowed; framedrop == 0: dropping disabled
                    (framedrop && get_master_sync_type(is) != AV_SYNC_VIDEO_MASTER)) && // otherwise drop only when video is not the master clock
                   time > is->frame_timer + duration){ // and vp's display slot has already passed
                    is->frame_drops_late++; // count dropped frames
                    frame_queue_next(&is->pictq);
                    goto retry;
                }
            }
    /* ... */
}
```

This introduces another concept, frame_timer, which can be read as the moment a frame starts being displayed. Before the update, it is the display time of the previous frame lastvp. After the update (is->frame_timer += delay), it is the display time of the current frame vp. The previous frame's display time plus delay (how much longer it should stay on screen, including the frame's own duration) is the moment the previous frame should stop being displayed.

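time1: the system time is earlier than the moment lastvp should stop being displayed (frame_timer + delay), the dashed circle in the figure. lastvp should keep being displayed.

time2: the system time is past the end of lastvp's display but earlier than the end of vp's display (vp's display starts at the dashed circle and ends at the black circle). lastvp is not repeated and vp is not dropped; vp should be displayed.

time3: the system time is past the end of vp's display, the black circle, which is also the expected display start of nextvp. vp should be dropped.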

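How is lastvp's display duration delay computed? That happens in compute_target_delay. The larger its return value, the slower the picture advances, that is, the longer the previous frame stays on screen.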

```c
static double compute_target_delay(double delay, VideoState *is)
{
    double sync_threshold, diff = 0;

    /* update delay to follow master synchronisation source */
    if (get_master_sync_type(is) != AV_SYNC_VIDEO_MASTER) {
        /* if video is slave, we try to correct big delays by
           duplicating or deleting a frame */
        diff = get_clock(&is->vidclk) - get_master_clock(is);

        /* skip or repeat frame. We take into account the
           delay to compute the threshold. I still don't know
           if it is the best guess */
        sync_threshold = FFMAX(AV_SYNC_THRESHOLD_MIN, FFMIN(AV_SYNC_THRESHOLD_MAX, delay));
        if (!isnan(diff) && fabs(diff) < is->max_frame_duration) {
            if (diff <= -sync_threshold)
                delay = FFMAX(0, delay + diff);
            else if (diff >= sync_threshold && delay > AV_SYNC_FRAMEDUP_THRESHOLD)
                delay = delay + diff;
            else if (diff >= sync_threshold)
                delay = 2 * delay;
        }
    }

    av_log(NULL, AV_LOG_TRACE, "video: delay=%0.3f A-V=%f\n",
            delay, -diff);

    return delay;
}
```

(1) diff <= -sync_threshold: video is playing too slowly. delay is shortened to FFMAX(0, delay + diff) so it can catch up, and frames may be dropped;

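(2) diff >= sync_threshold && delay > AV_SYNC_FRAMEDUP_THRESHOLD: return delay + diff, so the actual diff decides how much longer to display. This branch applies when the frame duration itself is long, where doubling it would wait much longer than the measured drift;

(3) diff >= sync_threshold: video is playing too fast, so lastvp should be repeated. Return 2 * delay, twice lastvp's display duration, so that lastvp stays on screen for one more frame duration;

(4) -sync_threshold < diff < sync_threshold: within tolerance. Video is displayed at the nominal frame duration and delay is returned unchanged.

So synchronization is not enforced at every instant. There is a tolerance band inside which the streams are treated as being in sync.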

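Syncing to the Video Clock

When audio is synchronized to video, applying the same drop/repeat scheme to audio does not work well. Audio is far more continuous than video, and repeating or discarding a few hundred samples to stay in sync would produce clearly audible glitches. ffplay instead compensates through resampling:

- When audio lags behind, the resampled output contains fewer samples than normal, so playback moves on to the next frame sooner.
- When audio runs ahead, the resampled output contains more samples than normal, so it plays back more slowly.

On the video side, video_refresh() -> update_video_pts() simply plays frames at their frame intervals and keeps re-correcting the video clock. The interesting part is audio playback, mainly in audio_decode_frame: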

```c
static int audio_decode_frame(VideoState *is)
{
    /* ... */
    // 1. compute the number of samples to output from the difference with the video clock
    wanted_nb_samples = synchronize_audio(is, af->frame->nb_samples);
    // 2. decide whether the resampler needs to be (re)initialized
    if (af->frame->format != is->audio_src.fmt ||
        dec_channel_layout != is->audio_src.channel_layout ||
        af->frame->sample_rate != is->audio_src.freq ||
        (wanted_nb_samples != af->frame->nb_samples && !is->swr_ctx)) {
        swr_free(&is->swr_ctx);
        // set the output (target) and input (source) parameters
        is->swr_ctx = swr_alloc_set_opts(NULL,
                                         is->audio_tgt.channel_layout, is->audio_tgt.fmt, is->audio_tgt.freq,
                                         dec_channel_layout, af->frame->format, af->frame->sample_rate,
                                         0, NULL);
        if (!is->swr_ctx || swr_init(is->swr_ctx) < 0) {
            av_log(NULL, AV_LOG_ERROR,
                   "Cannot create sample rate converter for conversion of %d Hz %s %d channels to %d Hz %s %d channels!\n",
                   af->frame->sample_rate, av_get_sample_fmt_name(af->frame->format), af->frame->channels,
                   is->audio_tgt.freq, av_get_sample_fmt_name(is->audio_tgt.fmt), is->audio_tgt.channels);
            swr_free(&is->swr_ctx);
            return -1;
        }
        // save the latest parameters
        is->audio_src.channel_layout = dec_channel_layout;
        is->audio_src.channels = af->frame->channels;
        is->audio_src.freq = af->frame->sample_rate;
        is->audio_src.fmt = af->frame->format;
    }

    // 3. resample, using the resampler to add or remove samples
    if (is->swr_ctx) {
        const uint8_t **in = (const uint8_t **)af->frame->extended_data;
        uint8_t **out = &is->audio_buf1;
        int out_count = (int64_t)wanted_nb_samples * is->audio_tgt.freq / af->frame->sample_rate + 256;
        int out_size  = av_samples_get_buffer_size(NULL, is->audio_tgt.channels, out_count, is->audio_tgt.fmt, 0);
        int len2;
        if (out_size < 0) {
            av_log(NULL, AV_LOG_ERROR, "av_samples_get_buffer_size() failed\n");
            return -1;
        }
        if (wanted_nb_samples != af->frame->nb_samples) {
            if (swr_set_compensation(is->swr_ctx, (wanted_nb_samples - af->frame->nb_samples) * is->audio_tgt.freq / af->frame->sample_rate,
                                     wanted_nb_samples * is->audio_tgt.freq / af->frame->sample_rate) < 0) {
                av_log(NULL, AV_LOG_ERROR, "swr_set_compensation() failed\n");
                return -1;
            }
        }
        av_fast_malloc(&is->audio_buf1, &is->audio_buf1_size, out_size);
        if (!is->audio_buf1)
            return AVERROR(ENOMEM);
        // resample: the return value is the number of output samples per channel
        len2 = swr_convert(is->swr_ctx, out, out_count, in, af->frame->nb_samples);
        if (len2 < 0) {
            av_log(NULL, AV_LOG_ERROR, "swr_convert() failed\n");
            return -1;
        }
        if (len2 == out_count) {
            av_log(NULL, AV_LOG_WARNING, "audio buffer is probably too small\n");
            if (swr_init(is->swr_ctx) < 0)
                swr_free(&is->swr_ctx);
        }
        is->audio_buf = is->audio_buf1;
        resampled_data_size = len2 * is->audio_tgt.channels * av_get_bytes_per_sample(is->audio_tgt.fmt);
    } else {
        // no resampling needed: point directly at the audio data in the frame
        is->audio_buf = af->frame->data[0];
        resampled_data_size = data_size;
    }
    /* ... */
    return resampled_data_size; // size of the data in audio_buf
}
```

(1) Compute the number of samples to output from the difference with the video clock. This is done by synchronize_audio: if audio lags behind, the sample count is reduced; if audio runs ahead, it is increased;

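(2) Decide whether resampling is needed: if the number of samples to output differs from the frame's sample count, samples must be added or removed;

(3) Use the resampling library to add or remove samples smoothly. Once the target sample count wanted_nb_samples is known, resampling is done with the combination of swr_set_compensation and swr_convert.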
