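Problem description:

During image segmentation, imperfect results can leave an unsegmented gap between two adjacent regions that belong together. Merging their boundingBoxes would inevitably pull in more irrelevant area and make the subsequent segmentation harder. This article therefore connects the two regions and takes the convex hull of the connected result, which merges the adjacent regions and simplifies later processing.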

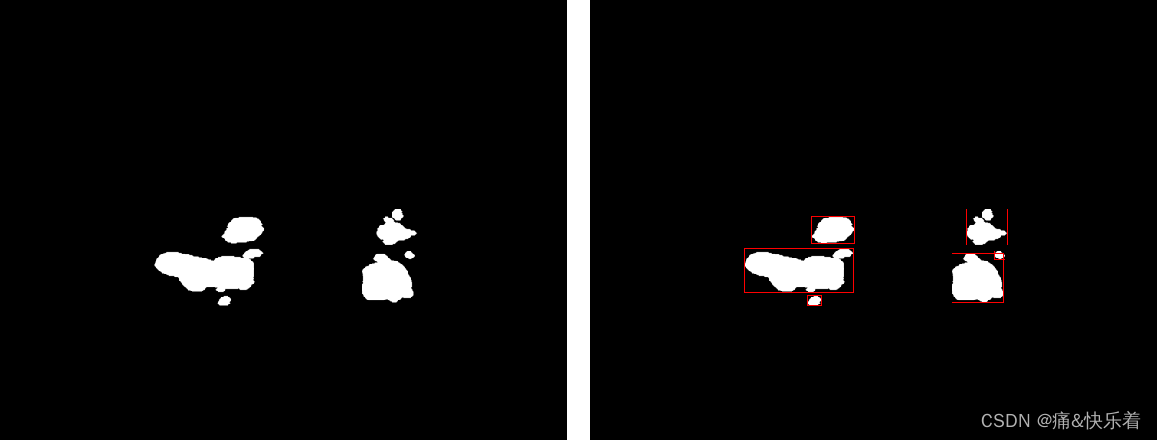

Figure 1. Original image and its boundingBox

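Algorithm outline:

Extract contours -> compute the distance between contours -> connect any two contours closer than a threshold T -> find the convex hull and fill it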
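The distance and threshold steps can be sketched without OpenCV. This is a minimal illustration rather than the implementation used in this article: `Pt` is a stand-in for `cv::Point`, and `closestPair` and `shouldConnect` are hypothetical helper names. It searches for the closest pair of points between two contours and compares squared distances against T, which avoids the square root.

```cpp
#include <cstddef>
#include <limits>
#include <vector>

// Stand-in for cv::Point so the sketch compiles without OpenCV (assumption).
struct Pt { int x; int y; };

// Result of a closest-pair search between two contours.
struct ClosestPair {
    std::size_t indexA;  // index into the first contour
    std::size_t indexB;  // index into the second contour
    long long distSq;    // squared Euclidean distance between the two points
};

// Brute-force search over all point pairs; returns the pair with the
// smallest squared distance. Both contours are assumed to be non-empty.
ClosestPair closestPair(const std::vector<Pt>& a, const std::vector<Pt>& b) {
    ClosestPair best{0, 0, std::numeric_limits<long long>::max()};
    for (std::size_t i = 0; i < a.size(); ++i) {
        for (std::size_t j = 0; j < b.size(); ++j) {
            const long long dx = a[i].x - b[j].x;
            const long long dy = a[i].y - b[j].y;
            const long long d = dx * dx + dy * dy;
            if (d < best.distSq) best = {i, j, d};
        }
    }
    return best;
}

// Two contours are connected when their closest points are less than T apart.
bool shouldConnect(const std::vector<Pt>& a, const std::vector<Pt>& b, double T) {
    return static_cast<double>(closestPair(a, b).distSq) < T * T;
}
```

Connecting the closest pair keeps the joining line as short as possible, at the cost of always scanning every pair of points.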

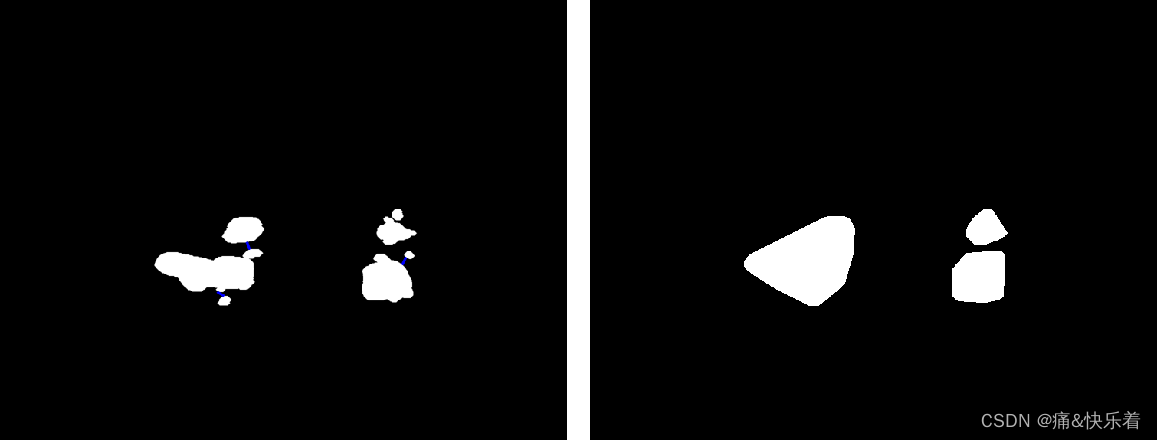

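Figure 2. Connecting adjacent regions (blue line) and the resulting convex hull

As Figure 2 shows, this method includes less irrelevant area than merging the boundingBoxes.

The complete implementation is as follows: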
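A small worked example makes the area difference concrete. The sketch below is an illustration under assumptions, not part of the original code: `Pt` stands in for `cv::Point`, the hull is built with Andrew's monotone chain instead of `cv::convexHull`, and all function names are hypothetical. For two 4x4 squares placed diagonally, with corners (0,0)-(4,4) and (6,6)-(10,10), the merged bounding box covers 100 square units while the convex hull covers 64.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdlib>
#include <vector>

// Stand-in for cv::Point (assumption); long long keeps the products exact.
struct Pt { long long x; long long y; };

// Cross product of (a - o) and (b - o); positive for a counter-clockwise turn.
long long cross(const Pt& o, const Pt& a, const Pt& b) {
    return (a.x - o.x) * (b.y - o.y) - (a.y - o.y) * (b.x - o.x);
}

// Convex hull via Andrew's monotone chain, vertices in counter-clockwise order.
std::vector<Pt> monotoneChainHull(std::vector<Pt> pts) {
    std::sort(pts.begin(), pts.end(), [](const Pt& a, const Pt& b) {
        return a.x < b.x || (a.x == b.x && a.y < b.y);
    });
    if (pts.size() < 3) return pts;
    std::vector<Pt> hull;
    // Lower hull: drop points that would create a clockwise or straight turn.
    for (const Pt& p : pts) {
        while (hull.size() >= 2 &&
               cross(hull[hull.size() - 2], hull.back(), p) <= 0)
            hull.pop_back();
        hull.push_back(p);
    }
    // Upper hull: walk the sorted points backwards, skipping the last one.
    const std::size_t lowerSize = hull.size() + 1;
    for (auto it = pts.rbegin() + 1; it != pts.rend(); ++it) {
        while (hull.size() >= lowerSize &&
               cross(hull[hull.size() - 2], hull.back(), *it) <= 0)
            hull.pop_back();
        hull.push_back(*it);
    }
    hull.pop_back();  // the last point repeats the first one
    return hull;
}

// Twice the polygon area (shoelace formula), kept as an integer.
long long twiceArea(const std::vector<Pt>& poly) {
    long long s = 0;
    for (std::size_t i = 0; i < poly.size(); ++i) {
        const Pt& p = poly[i];
        const Pt& q = poly[(i + 1) % poly.size()];
        s += p.x * q.y - q.x * p.y;
    }
    return std::llabs(s);
}

// Area of the axis-aligned bounding box of a non-empty point set.
long long boundingBoxArea(const std::vector<Pt>& pts) {
    long long minX = pts[0].x, maxX = pts[0].x;
    long long minY = pts[0].y, maxY = pts[0].y;
    for (const Pt& p : pts) {
        minX = std::min(minX, p.x);
        maxX = std::max(maxX, p.x);
        minY = std::min(minY, p.y);
        maxY = std::max(maxY, p.y);
    }
    return (maxX - minX) * (maxY - minY);
}
```

Passing the eight corner points of the two squares to `boundingBoxArea` gives 100, and `twiceArea(monotoneChainHull(...))` gives 128, that is, a hull area of 64. The two squares themselves cover 32, so the hull adds 32 units of irrelevant area where the merged box adds 68.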

#include <opencv2/opencv.hpp>
#include <cmath>
#include <vector>

using namespace cv;
using namespace std;

// Returns the midpoints of the four sides of the contour's bounding rectangle,
// in the order: top, bottom, left, right.
vector<Point> GetPoint(const vector<Point>& contour)
{
    cv::Rect rect = cv::boundingRect(contour);
    Point up, down, left, right;
    vector<Point> p1;

    up.x = rect.x + rect.width / 2;
    up.y = rect.y;
    p1.push_back(up);

    down.x = rect.x + rect.width / 2;
    down.y = rect.y + rect.height;
    p1.push_back(down);

    left.x = rect.x;
    left.y = rect.y + rect.height / 2;
    p1.push_back(left);

    right.x = rect.x + rect.width;
    right.y = rect.y + rect.height / 2;
    p1.push_back(right);

    return p1;
}

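void ConnectNearRegion()
{
    Mat src = imread("D:\\PyProject01\\images\\metal.png");
    if (src.empty())
        return;  // the image could not be read

    Mat gray, imageBw;
    cvtColor(src, gray, COLOR_BGR2GRAY);
    // With a threshold of 0, every non-zero pixel becomes foreground (255).
    threshold(gray, imageBw, 0, 255, THRESH_BINARY);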

    // Erosion (optional, currently disabled)
    /*cv::Mat element = cv::getStructuringElement(MORPH_RECT, Size(3, 3));
    Mat metalMask;
    cv::erode(imageBw, metalMask, element);*/

    vector<vector<Point>> boundingPoints;
    vector<vector<Point>> contours;
    vector<Vec4i> hierarchy;
    findContours(imageBw, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

    // Reduce each contour to four points: a cheaper alternative input
    // for the distance computation that follows.
    for (size_t i = 0; i < contours.size(); ++i)
    {
        vector<Point> p1 = GetPoint(contours[i]);
        boundingPoints.push_back(p1);
    }

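    // Compute the distance between every pair of contours
    for (size_t i = 0; i < contours.size(); ++i)
    {
        cv::Rect rect = cv::boundingRect(contours[i]);
        //rectangle(imageBw, rect, Scalar(200,0,255), 1);
        const vector<Point>& p1 = contours[i]; // or boundingPoints[i]
        for (size_t i1 = i + 1; i1 < contours.size(); ++i1)
        {
            const vector<Point>& p2 = contours[i1]; // or boundingPoints[i1]
            // Compare every point of p1 with every point of p2
            // (only four points each when boundingPoints is used).
            float T = 10;   // distance threshold in pixels
            int flag = 0;   // set once the two contours have been connected
            for (size_t i2 = 0; i2 < p1.size(); i2++)
            {
                for (size_t i3 = 0; i3 < p2.size(); i3++)
                {
                    float dx = float(p1[i2].x - p2[i3].x);
                    float dy = float(p1[i2].y - p2[i3].y);
                    float d = std::sqrt(dx * dx + dy * dy);
                    if (d < T)
                    {
                        // Connect the first pair of points closer than T, then stop.
                        // imageBw has one channel, so only the first Scalar value (255) is used.
                        line(imageBw, p1[i2], p2[i3], Scalar(255, 0, 0), 1, LINE_AA, 0);
                        flag = 1;
                        break;
                    }
                }
                if (flag)
                    break;
            }
        }
    }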

    imshow("", imageBw);
    waitKey();

    // Find the convex hulls
    vector<vector<Point>> contours2;
    vector<Vec4i> hierarchy2;
    // Extract contours again; regions joined by a line now form a single contour
    findContours(imageBw, contours2, hierarchy2, RETR_TREE, CHAIN_APPROX_SIMPLE, Point());
    vector<vector<Point>> hull2(contours2.size());

    // Compute each convex hull and fill it
    Mat filling = Mat::zeros(imageBw.size(), CV_8UC1);
    for (size_t i = 0; i < contours2.size(); i++)
    {
        convexHull(contours2[i], hull2[i], false);
        fillPoly(filling, hull2[i], Scalar(255));
    }

    imshow("", filling);
    waitKey();
}