Monitoring the Kubernetes control plane with the Prometheus plugin

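Suppose, for example, that you built your Kubernetes cluster with kubeadm.

In that case, kube-controller-manager, kube-scheduler, and etcd need some extra work before they can be discovered.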

Create a Service for kube-controller-manager

1. kubectl apply -f controller-service.yaml

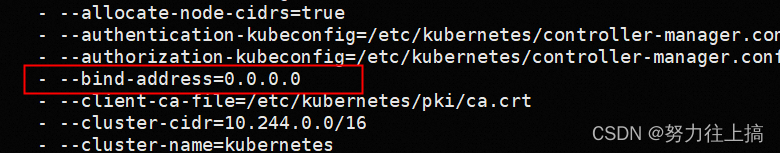

2. edit /etc/kubernetes/manifests/kube-controller-manager.yaml , modify or add one line - --bind-address=0.0.0.0

3. wait kube-controller-manager to restart
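Step 2 is a plain text edit of the static Pod manifest. Below is a minimal Python sketch of that edit, assuming kubeadm's one-flag-per-line `- --name=value` layout; `set_flag` is a hypothetical helper, not part of kubeadm or kubectl. Back up the manifest before writing the result back, because the kubelet reacts to every change.

```python
import re


def set_flag(manifest: str, flag: str, value: str) -> str:
    """Set `- --<flag>=<value>` in a kubeadm static Pod manifest (hypothetical helper).

    An existing flag line is rewritten in place; otherwise a new line is inserted
    before the first `- --...` line, reusing that line's indentation.
    """
    lines = manifest.splitlines()
    this_flag = re.compile(r"^(\s*)- --" + re.escape(flag) + r"(=.*)?$")
    any_flag = re.compile(r"^(\s*)- --")

    def render(indent: str) -> str:
        return f"{indent}- --{flag}={value}"

    # Case 1: the flag is already present, so replace its value.
    for i, line in enumerate(lines):
        m = this_flag.match(line)
        if m:
            lines[i] = render(m.group(1))
            return "\n".join(lines) + "\n"

    # Case 2: add the flag next to the other flags (argument order does not matter).
    for i, line in enumerate(lines):
        m = any_flag.match(line)
        if m:
            lines.insert(i, render(m.group(1)))
            return "\n".join(lines) + "\n"

    raise ValueError("no '- --flag' lines found; is this a static Pod manifest?")


manifest = """\
spec:
  containers:
  - command:
    - kube-controller-manager
    - --bind-address=127.0.0.1
    - --leader-elect=true
"""
print(set_flag(manifest, "bind-address", "0.0.0.0"))
```

The same helper would cover the kube-scheduler step and the etcd `listen-metrics-urls` change.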

If your environment has no corresponding endpoint, you can create the Service manually: applying controller-service.yaml below is all it takes. If controller-manager was installed with kubeadm, also remember to edit /etc/kubernetes/manifests/kube-controller-manager.yaml and set the startup flag `--bind-address=0.0.0.0`.

controller-service.yaml:

    apiVersion: v1
    kind: Service
    metadata:
      namespace: kube-system
      name: kube-controller-manager
      labels:
        k8s-app: kube-controller-manager
    spec:
      selector:
        component: kube-controller-manager
      type: ClusterIP
      clusterIP: None
      ports:
        - name: https
          port: 10257
          targetPort: 10257
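The Services for kube-controller-manager, kube-scheduler and etcd all follow the same headless pattern: `clusterIP: None` plus a selector on the `component` label that kubeadm puts on its static Pods. The result is that the Endpoints object points straight at the node running the component. Here is a minimal Python sketch that builds such a manifest as a dict and prints it as JSON, which `kubectl apply -f` also accepts. `headless_service` is a hypothetical helper name.

```python
import json


def headless_service(component: str, port: int, port_name: str,
                     namespace: str = "kube-system") -> dict:
    """Build a headless Service selecting a kubeadm static Pod by its `component` label."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "namespace": namespace,
            "name": component,
            "labels": {"k8s-app": component},
        },
        "spec": {
            "selector": {"component": component},
            "type": "ClusterIP",
            "clusterIP": "None",  # headless: no virtual IP, endpoints are the Pod addresses
            "ports": [{"name": port_name, "port": port, "targetPort": port}],
        },
    }


# Equivalent to controller-service.yaml above.
print(json.dumps(headless_service("kube-controller-manager", 10257, "https"), indent=2))
```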

Prometheus configuration:

    - job_name: 'controller-manager'
      kubernetes_sd_configs:
        - role: endpoints
      scheme: https
      tls_config:
        insecure_skip_verify: true
      authorization:
        credentials_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
        - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
          action: keep
          regex: kube-system;kube-controller-manager;https
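The `keep` regex is just the namespace, Service name and port name joined with `;`, the default relabel separator. Only those three values (and the scheme) change between components, so the jobs can be generated. Below is a minimal Python sketch; `scrape_job` is a hypothetical helper. The token path and the `insecure_skip_verify` setting are copied from the configuration above. The output is JSON, which YAML parsers generally accept.

```python
import json

SA_TOKEN = "/var/run/secrets/kubernetes.io/serviceaccount/token"


def scrape_job(job_name, namespace, service, port_name, scheme="https"):
    """Build one endpoints-based scrape job that keeps a single Service port."""
    job = {
        "job_name": job_name,
        "kubernetes_sd_configs": [{"role": "endpoints"}],
        "scheme": scheme,
        "relabel_configs": [{
            "source_labels": [
                "__meta_kubernetes_namespace",
                "__meta_kubernetes_service_name",
                "__meta_kubernetes_endpoint_port_name",
            ],
            "action": "keep",
            # Source label values are joined with ";" before the regex is applied.
            "regex": ";".join([namespace, service, port_name]),
        }],
    }
    if scheme == "https":
        # Same TLS and bearer-token settings as the hand-written jobs.
        job["tls_config"] = {"insecure_skip_verify": True}
        job["authorization"] = {"credentials_file": SA_TOKEN}
    return job


jobs = [
    scrape_job("controller-manager", "kube-system", "kube-controller-manager", "https"),
    scrape_job("kube-scheduler", "kube-system", "kube-scheduler", "https"),
    scrape_job("etcd", "kube-system", "etcd", "http", scheme="http"),
]
print(json.dumps({"scrape_configs": jobs}, indent=2))
```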

Create a Service for kube-scheduler

1. kubectl apply -f scheduler-service.yaml

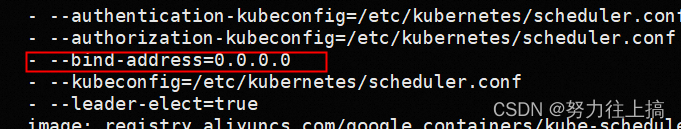

2. edit /etc/kubernetes/manifests/kube-scheduler.yaml , modify or add one line - --bind-address=0.0.0.0

3. wait kube-scheduler to restart

scheduler-service.yaml:

    apiVersion: v1
    kind: Service
    metadata:
      namespace: kube-system
      name: kube-scheduler
      labels:
        k8s-app: kube-scheduler
    spec:
      selector:
        component: kube-scheduler
      type: ClusterIP
      clusterIP: None
      ports:
        - name: https
          port: 10259
          targetPort: 10259

Prometheus configuration:

    - job_name: 'kube-scheduler'
      kubernetes_sd_configs:
        - role: endpoints
      scheme: https
      tls_config:
        insecure_skip_verify: true
      authorization:
        credentials_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
        - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
          action: keep
          regex: kube-system;kube-scheduler;https

Create a Service for etcd

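Being such a cloud-native component, etcd naturally has built-in support for a /metrics endpoint. etcd is strict about security, though: access to its default client port 2379 requires a client certificate. Let's test that first: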

    $ curl -k https://localhost:2379/metrics
    curl: (35) error:14094412:SSL routines:ssl3_read_bytes:sslv3 alert bad certificate
    $ ls /etc/kubernetes/pki/etcd
    ca.crt  ca.key  healthcheck-client.crt  healthcheck-client.key  peer.crt  peer.key  server.crt  server.key
    $ curl -s --cacert /etc/kubernetes/pki/etcd/ca.crt --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key https://localhost:2379/metrics | head -n 6
    # HELP etcd_cluster_version Which version is running. 1 for 'cluster_version' label with current cluster version
    # TYPE etcd_cluster_version gauge
    etcd_cluster_version{cluster_version="3.5"} 1
    # HELP etcd_debugging_auth_revision The current revision of auth store.
    # TYPE etcd_debugging_auth_revision gauge
    etcd_debugging_auth_revision 1

In a Kubernetes cluster installed with kubeadm, the etcd certificates are in /etc/kubernetes/pki/etcd. Once curl is given these certificates, the request succeeds.

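etcd does, however, provide a second port for fetching metrics that bypasses this certificate-based authentication. To find it, look at etcd's startup command. In Kubernetes, simply dump the YAML of the etcd Pod:

    kubectl get pod -n kube-system etcd-10.206.0.16 -o yaml

In the example above, etcd-10.206.0.16 is the name of my etcd Pod. Yours may differ, so substitute your own. The output includes etcd's startup command: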

    spec:
      containers:
      - command:
        - etcd
        - --advertise-client-urls=https://10.206.0.16:2379
        - --cert-file=/etc/kubernetes/pki/etcd/server.crt
        - --client-cert-auth=true
        - --data-dir=/var/lib/etcd
        - --initial-advertise-peer-urls=https://10.206.0.16:2380
        - --initial-cluster=10.206.0.16=https://10.206.0.16:2380
        - --key-file=/etc/kubernetes/pki/etcd/server.key
        - --listen-client-urls=https://127.0.0.1:2379,https://10.206.0.16:2379
        - --listen-metrics-urls=http://0.0.0.0:2381
        - --listen-peer-urls=https://10.206.0.16:2380
        - --name=10.206.0.16
        - --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt
        - --peer-client-cert-auth=true
        - --peer-key-file=/etc/kubernetes/pki/etcd/peer.key
        - --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
        - --snapshot-count=10000
        - --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
        image: registry.aliyuncs.com/google_containers/etcd:3.5.1-0

Note the line `--listen-metrics-urls=http://0.0.0.0:2381`. It means that metrics can be fetched directly from port 2381 over plain HTTP, with no client certificate. Let's test it:

    $ curl -s localhost:2381/metrics | head -n 6
    # HELP etcd_cluster_version Which version is running. 1 for 'cluster_version' label with current cluster version
    # TYPE etcd_cluster_version gauge
    etcd_cluster_version{cluster_version="3.5"} 1
    # HELP etcd_debugging_auth_revision The current revision of auth store.
    # TYPE etcd_debugging_auth_revision gauge
    etcd_debugging_auth_revision 1

From here on, we simply fetch the data through this endpoint.
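Both responses use the Prometheus text exposition format. `# HELP` and `# TYPE` comment lines are followed by samples of the form `name{labels} value`. Below is a simplified Python parser for output like the sample above. It is only a sketch: it ignores timestamps and does not handle escaped quotes or commas inside label values. For real use, a client library parser such as `prometheus_client.parser.text_string_to_metric_families` is the safer choice.

```python
import re

# metric name, optional {label block}, then the sample value
SAMPLE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)')
LABEL = re.compile(r'(\w+)="([^"]*)"')


def parse_metrics(text):
    """Return ({metric: type}, [(name, labels, value), ...]) from exposition text."""
    types, samples = {}, []
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("# TYPE "):
            _, _, name, mtype = line.split(maxsplit=3)
            types[name] = mtype
        elif line and not line.startswith("#"):  # skip blank lines and # HELP
            m = SAMPLE.match(line)
            if m:
                name, raw_labels, value = m.groups()
                labels = dict(LABEL.findall(raw_labels or ""))
                samples.append((name, labels, float(value)))
    return types, samples


text = """\
# HELP etcd_cluster_version Which version is running. 1 for 'cluster_version' label with current cluster version
# TYPE etcd_cluster_version gauge
etcd_cluster_version{cluster_version="3.5"} 1
# HELP etcd_debugging_auth_revision The current revision of auth store.
# TYPE etcd_debugging_auth_revision gauge
etcd_debugging_auth_revision 1
"""
types, samples = parse_metrics(text)
print(types)
print(samples)
```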

1. Apply the Service: `kubectl apply -f etcd-service-http.yaml`

2. Edit `/etc/kubernetes/manifests/etcd.yaml` and change `- --listen-metrics-urls=http://127.0.0.1:2381` to `- --listen-metrics-urls=http://0.0.0.0:2381`.

3. Wait for etcd to restart.
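To confirm that step 2 took effect, look at the host part of `--listen-metrics-urls` in the etcd Pod's command, as printed by the `kubectl get pod ... -o yaml` command earlier. 127.0.0.1 accepts only local connections. 0.0.0.0 listens on all interfaces, so it can be reached through the Service. Below is a Python sketch over that command list; `metrics_listeners` is a hypothetical helper.

```python
from urllib.parse import urlsplit

LOOPBACK = ("127.0.0.1", "localhost", "::1")


def metrics_listeners(command):
    """Return (url, reachable_remotely) for each --listen-metrics-urls entry."""
    result = []
    for arg in command:
        if arg.startswith("--listen-metrics-urls="):
            for url in arg.split("=", 1)[1].split(","):
                host = urlsplit(url).hostname
                # A loopback listener cannot be scraped from another Pod or node.
                result.append((url, host not in LOOPBACK))
    return result


command = [
    "etcd",
    "--listen-client-urls=https://127.0.0.1:2379,https://10.206.0.16:2379",
    "--listen-metrics-urls=http://0.0.0.0:2381",
]
print(metrics_listeners(command))  # [('http://0.0.0.0:2381', True)]
```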

k8s/etcd-service-http.yaml (exposes the plain-HTTP metrics port 2381):

    apiVersion: v1
    kind: Service
    metadata:
      namespace: kube-system
      name: etcd
      labels:
        k8s-app: etcd
    spec:
      selector:
        component: etcd
      type: ClusterIP
      clusterIP: None
      ports:
        - name: http
          port: 2381
          targetPort: 2381
          protocol: TCP

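Alternatively, to scrape the TLS client port 2379, use k8s/etcd-service-https.yaml. Both manifests create a Service named etcd in kube-system, so apply only one of them: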

    apiVersion: v1
    kind: Service
    metadata:
      namespace: kube-system
      name: etcd
      labels:
        k8s-app: etcd
    spec:
      selector:
        component: etcd
      type: ClusterIP
      clusterIP: None
      ports:
        - name: https
          port: 2379
          targetPort: 2379
          protocol: TCP

Prometheus configuration for the HTTP variant:

    - job_name: 'etcd'
      kubernetes_sd_configs:
        - role: endpoints
      scheme: http
      relabel_configs:
        - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
          action: keep
          regex: kube-system;etcd;http

The complete in-cluster scrape configuration is packaged as a ConfigMap (`scrape-config`, key `in_cluster_scrape.yaml`). It also covers the apiserver and CoreDNS:

    kind: ConfigMap
    metadata:
      name: scrape-config
    apiVersion: v1
    data:
      in_cluster_scrape.yaml: |
        global:
          scrape_interval: 15s
          #external_labels:
          #  cluster: test
          #  replica: 0
        scrape_configs:
          - job_name: "apiserver"
            metrics_path: "/metrics"
            kubernetes_sd_configs:
              - role: endpoints
            scheme: https
            tls_config:
              insecure_skip_verify: true
            authorization:
              credentials_file: /var/run/secrets/kubernetes.io/serviceaccount/token
            relabel_configs:
              - source_labels:
                  [
                    __meta_kubernetes_namespace,
                    __meta_kubernetes_service_name,
                    __meta_kubernetes_endpoint_port_name,
                  ]
                action: keep
                regex: default;kubernetes;https
          - job_name: "controller-manager"
            metrics_path: "/metrics"
            kubernetes_sd_configs:
              - role: endpoints
            scheme: https
            tls_config:
              insecure_skip_verify: true
            authorization:
              credentials_file: /var/run/secrets/kubernetes.io/serviceaccount/token
            relabel_configs:
              - source_labels:
                  [
                    __meta_kubernetes_namespace,
                    __meta_kubernetes_service_name,
                    __meta_kubernetes_endpoint_port_name,
                  ]
                action: keep
                regex: kube-system;kube-controller-manager;https
          - job_name: "scheduler"
            metrics_path: "/metrics"
            kubernetes_sd_configs:
              - role: endpoints
            scheme: https
            tls_config:
              insecure_skip_verify: true
            authorization:
              credentials_file: /var/run/secrets/kubernetes.io/serviceaccount/token
            relabel_configs:
              - source_labels:
                  [
                    __meta_kubernetes_namespace,
                    __meta_kubernetes_service_name,
                    __meta_kubernetes_endpoint_port_name,
                  ]
                action: keep
                regex: kube-system;kube-scheduler;https
          # Keep only the etcd job that matches the etcd Service you created.
          # job_name must be unique, so the HTTPS variant is named etcd-https.
          - job_name: 'etcd'
            kubernetes_sd_configs:
              - role: endpoints
            scheme: http
            relabel_configs:
              - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
                action: keep
                regex: kube-system;etcd;http
          - job_name: "etcd-https"
            metrics_path: "/metrics"
            kubernetes_sd_configs:
              - role: endpoints
            scheme: https
            tls_config:
              ca_file: /opt/categraf/pki/etcd/ca.crt
              cert_file: /opt/categraf/pki/etcd/client.crt
              key_file: /opt/categraf/pki/etcd/client.key
              insecure_skip_verify: true
            relabel_configs:
              - source_labels:
                  [
                    __meta_kubernetes_namespace,
                    __meta_kubernetes_service_name,
                    __meta_kubernetes_endpoint_port_name,
                  ]
                action: keep
                regex: kube-system;etcd;https
          - job_name: "coredns"
            metrics_path: "/metrics"
            kubernetes_sd_configs:
              - role: endpoints
            scheme: http
            relabel_configs:
              - source_labels:
                  [
                    __meta_kubernetes_namespace,
                    __meta_kubernetes_service_name,
                    __meta_kubernetes_endpoint_port_name,
                  ]
                action: keep
                regex: kube-system;kube-dns;metrics
        # remote_write:
        # - url: 'http://${NSERVER_SERVICE_WITH_PORT}/prometheus/v1/write'
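Prometheus refuses to load a configuration in which two scrape jobs share the same `job_name`. This means the HTTP and HTTPS etcd jobs cannot both be called etcd. A quick pre-flight check can catch such collisions before the ConfigMap is applied. This Python sketch works on job names you have already extracted, for example with a YAML parser. `duplicate_job_names` is a hypothetical helper.

```python
from collections import Counter


def duplicate_job_names(job_names):
    """Return job names that appear more than once, in first-seen order."""
    counts = Counter(job_names)
    duplicates = []
    for name in job_names:
        if counts[name] > 1 and name not in duplicates:
            duplicates.append(name)
    return duplicates


names = ["apiserver", "controller-manager", "scheduler", "etcd", "etcd", "coredns"]
print(duplicate_job_names(names))  # ['etcd']
```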

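Notes:

A target is kept only if it satisfies all three matching rules, which are implemented with the `keep` action in `relabel_configs`. Using the controller-manager job as an example:

- `__meta_kubernetes_namespace`: the endpoint's namespace must be kube-system.
- `__meta_kubernetes_service_name`: the Service name must be kube-controller-manager.
- `__meta_kubernetes_endpoint_port_name`: the endpoint's port name must be https.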
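In other words, `keep` joins the values of the three source labels with `;` and keeps the target only if the whole string matches the regex. Prometheus anchors relabel regexes at both ends. Below is a Python sketch of that check. The label values form a hypothetical discovered endpoint, and `keep` is an illustrative function, not a Prometheus API.

```python
import re


def keep(target_labels, source_labels, regex, separator=";"):
    """Emulate a relabel rule with action: keep (the regex must match the whole string)."""
    # Missing labels count as empty strings, as in Prometheus.
    value = separator.join(target_labels.get(name, "") for name in source_labels)
    return re.fullmatch(regex, value) is not None


SOURCES = [
    "__meta_kubernetes_namespace",
    "__meta_kubernetes_service_name",
    "__meta_kubernetes_endpoint_port_name",
]
target = {
    "__meta_kubernetes_namespace": "kube-system",
    "__meta_kubernetes_service_name": "kube-controller-manager",
    "__meta_kubernetes_endpoint_port_name": "https",
}
print(keep(target, SOURCES, "kube-system;kube-controller-manager;https"))  # True
print(keep(target, SOURCES, "kube-system;kube-scheduler;https"))  # False
```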

If scraping does not succeed, check whether the endpoint exists:

    $ kubectl get endpoints -n kube-system
    NAME                      ENDPOINTS                                                  AGE
    kube-controller-manager   192.168.200.156:10257                                      151m
    kube-dns                  10.244.0.11:53,10.244.0.13:53,10.244.0.11:53 + 3 more...   20d
    kube-scheduler            192.168.200.156:10259                                      136m
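To automate that check, you can parse the `kubectl get endpoints -n kube-system` output. The goal is to report which Services the scrape jobs expect but cannot find, or find without addresses (kubectl prints `<none>` in that case). Below is a Python sketch. It relies on the default column layout shown above, with NAME first and ENDPOINTS second. `missing_endpoints` is a hypothetical helper.

```python
def missing_endpoints(kubectl_output, expected):
    """Return expected Service names that are absent or have no endpoint addresses."""
    found = {}
    for line in kubectl_output.strip().splitlines()[1:]:  # skip the header row
        cols = line.split()
        if len(cols) >= 2:
            found[cols[0]] = cols[1]
    return [name for name in expected if found.get(name, "<none>") == "<none>"]


output = """\
NAME                      ENDPOINTS                                                  AGE
kube-controller-manager   192.168.200.156:10257                                      151m
kube-dns                  10.244.0.11:53,10.244.0.13:53,10.244.0.11:53 + 3 more...   20d
kube-scheduler            192.168.200.156:10259                                      136m
"""
print(missing_endpoints(output, ["kube-controller-manager", "kube-scheduler", "etcd"]))  # ['etcd']
```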

Metrics

What the key controller-manager metrics mean:

    # HELP rest_client_request_duration_seconds [ALPHA] Request latency in seconds. Broken down by verb and URL.
    # TYPE rest_client_request_duration_seconds histogram

Latency distribution of requests to the apiserver, broken down by URL and verb.

    # HELP cronjob_controller_cronjob_job_creation_skew_duration_seconds [ALPHA] Time between when a cronjob is scheduled to be run, and when the corresponding job is created
    # TYPE cronjob_controller_cronjob_job_creation_skew_duration_seconds histogram

Distribution of the delay between a CronJob's scheduled run time and the creation of the corresponding Job.

    # HELP leader_election_master_status [ALPHA] Gauge of if the reporting system is master of the relevant lease, 0 indicates backup, 1 indicates master. 'name' is the string used to identify the lease. Please make sure to group by name.
    # TYPE leader_election_master_status gauge

Leader-election status of the controller: 0 means backup, 1 means master. Group by the `name` label, which identifies the lease.
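In an HA control plane, every controller-manager replica exports `leader_election_master_status`, and normally only the leader reports 1 for a given lease name. Below is a Python sketch that picks the current leader per lease from scraped samples. The samples and instance addresses are made up for illustration, and `leaders` is a hypothetical helper.

```python
from collections import defaultdict


def leaders(samples):
    """Map each lease name to the instances reporting leader_election_master_status == 1."""
    result = defaultdict(list)
    for labels, value in samples:
        if value == 1:
            result[labels["name"]].append(labels["instance"])
    return dict(result)


samples = [
    ({"name": "kube-controller-manager", "instance": "10.0.0.1:10257"}, 1),
    ({"name": "kube-controller-manager", "instance": "10.0.0.2:10257"}, 0),
    ({"name": "kube-controller-manager", "instance": "10.0.0.3:10257"}, 0),
]
print(leaders(samples))  # {'kube-controller-manager': ['10.0.0.1:10257']}
```

Two cases are worth alerting on: a lease missing from the result, which has no leader, and a lease listing more than one instance.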

    # HELP node_collector_zone_health [ALPHA] Gauge measuring percentage of healthy nodes per zone.
    # TYPE node_collector_zone_health gauge

Percentage of healthy nodes in each zone.

    # HELP node_collector_zone_size [ALPHA] Gauge measuring number of registered Nodes per zones.
    # TYPE node_collector_zone_size gauge

Number of registered nodes in each zone.

    # HELP process_cpu_seconds_total Total user and system CPU time spent in seconds.
    # TYPE process_cpu_seconds_total counter

Cumulative CPU time consumed by the process. Its per-second rate gives the CPU utilization.
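Because `process_cpu_seconds_total` is a cumulative counter, utilization comes from its rate of change: CPU-seconds consumed divided by wall-clock seconds elapsed. This is roughly what PromQL's `rate()` computes. Below is a Python sketch over two hypothetical samples. It ignores `rate()`'s extrapolation at window edges but handles a counter reset caused by a process restart. `cpu_cores_used` is a hypothetical helper.

```python
def cpu_cores_used(t1, v1, t2, v2):
    """Average CPU cores used between two process_cpu_seconds_total samples.

    t1, t2: sample timestamps in seconds; v1, v2: counter values in CPU-seconds.
    If the counter went down, the process restarted; assume it restarted from zero.
    """
    if t2 <= t1:
        raise ValueError("samples must be in increasing time order")
    increase = v2 - v1 if v2 >= v1 else v2
    return increase / (t2 - t1)


# 6 CPU-seconds consumed over 60 s: 0.1 cores, i.e. 10% of one core.
print(cpu_cores_used(0, 120.0, 60, 126.0))
# Counter reset after a restart: only the 3 CPU-seconds since the restart count.
print(cpu_cores_used(0, 500.0, 60, 3.0))
```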

    # HELP process_open_fds Number of open file descriptors.
    # TYPE process_open_fds gauge

Number of file descriptors opened by the controller manager.

    # HELP pv_collector_bound_pv_count [ALPHA] Gauge measuring number of persistent volume currently bound
    # TYPE pv_collector_bound_pv_count gauge

Number of PVs currently bound.

    # HELP pv_collector_unbound_pvc_count [ALPHA] Gauge measuring number of persistent volume claim currently unbound
    # TYPE pv_collector_unbound_pvc_count gauge

Number of PVCs currently unbound.

    # HELP pv_collector_bound_pvc_count [ALPHA] Gauge measuring number of persistent volume claim currently bound
    # TYPE pv_collector_bound_pvc_count gauge

Number of PVCs currently bound.

    # HELP pv_collector_total_pv_count [ALPHA] Gauge measuring total number of persistent volumes
    # TYPE pv_collector_total_pv_count gauge

Total number of PVs.

    # HELP workqueue_adds_total [ALPHA] Total number of adds handled by workqueue
    # TYPE workqueue_adds_total counter

Total number of items each controller's queue has accepted. Similar to the apiserver's `workqueue_adds_total` metric.

    # HELP workqueue_depth [ALPHA] Current depth of workqueue
    # TYPE workqueue_depth gauge

Queue depth of each controller, that is, the number of items waiting in that controller's queue. Similar to the apiserver's `workqueue_depth`; lower is better.

    # HELP workqueue_queue_duration_seconds [ALPHA] How long in seconds an item stays in workqueue before being requested.
    # TYPE workqueue_queue_duration_seconds histogram

Time items wait in the queue before being picked up, per controller.

    # HELP workqueue_work_duration_seconds [ALPHA] How long in seconds processing an item from workqueue takes.
    # TYPE workqueue_work_duration_seconds histogram

Time from an item leaving the queue until its processing completes, per controller.
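`workqueue_queue_duration_seconds` and `workqueue_work_duration_seconds` are histograms, like `rest_client_request_duration_seconds`. Percentiles therefore have to be estimated from the cumulative `_bucket` counters, usually with PromQL's `histogram_quantile()`. The Python sketch below shows the idea: find the bucket containing the target rank, then interpolate linearly inside it. The bucket counts are made up. The sketch simplifies Prometheus' implementation; for example, it does not special-case a first bucket with a non-positive upper bound.

```python
import math


def histogram_quantile(q, buckets):
    """Estimate the q-quantile (0 < q <= 1) from cumulative histogram buckets.

    `buckets` is a list of (upper_bound, cumulative_count) pairs sorted by upper
    bound and ending with the +Inf bucket, like the `le` series of a *_bucket metric.
    """
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    if len(buckets) < 2 or not math.isinf(buckets[-1][0]):
        raise ValueError("need at least one finite bucket plus the +Inf bucket")
    total = buckets[-1][1]
    if total == 0:
        return math.nan  # no observations yet
    rank = q * total
    # Index of the first finite bucket whose cumulative count reaches the rank.
    b = next((i for i, (_, c) in enumerate(buckets[:-1]) if c >= rank),
             len(buckets) - 1)
    if b == len(buckets) - 1:
        # The rank lies in the +Inf bucket: the best guess is the highest finite bound.
        return buckets[-2][0]
    start, prev = (0.0, 0) if b == 0 else buckets[b - 1]
    end, count = buckets[b]
    return start + (end - start) * (rank - prev) / (count - prev)


# Hypothetical workqueue_queue_duration_seconds buckets for one controller.
buckets = [(0.001, 50), (0.01, 90), (0.1, 99), (1.0, 100), (math.inf, 100)]
print(histogram_quantile(0.5, buckets))
print(histogram_quantile(0.95, buckets))
```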

    # HELP workqueue_retries_total [ALPHA] Total number of retries handled by workqueue
    # TYPE workqueue_retries_total counter

Number of times items were put back into the queue for a retry.

    # HELP workqueue_longest_running_processor_seconds [ALPHA] How many seconds has the longest running processor for workqueue been running.
    # TYPE workqueue_longest_running_processor_seconds gauge

Among the items currently being processed, how long the longest-running one has been running.

    # HELP endpoint_slice_controller_syncs [ALPHA] Number of EndpointSlice syncs
    # TYPE endpoint_slice_controller_syncs counter

Number of EndpointSlice syncs (Kubernetes 1.20 and later).

    # HELP get_token_fail_count [ALPHA] Counter of failed Token() requests to the alternate token source
    # TYPE get_token_fail_count counter

Number of failed attempts to obtain a token.

    # HELP go_memstats_gc_cpu_fraction The fraction of this program's available CPU time used by the GC since the program started.
    # TYPE go_memstats_gc_cpu_fraction gauge

Fraction of the controller manager's available CPU time spent on garbage collection since the process started.

References:

- https://flashcat.cloud/blog/kubernetes-monitoring-09-etcd/
- https://flashcat.cloud/blog/kubernetes-monitoring-08-scheduler/
- https://flashcat.cloud/blog/kubernetes-monitoring-07-controller-manager/
- https://github.com/flashcatcloud/categraf/tree/main/k8s