Deploying a 6-node Redis cluster on k8s (3 masters, 3 replicas)

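Generally speaking, Redis can be deployed in one of three modes:

- Standalone (single-instance) mode, generally used for test environments
- Sentinel mode
- Cluster mode

The latter two are used for production deployments.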

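Sentinel mode

Before Redis 3.0, high availability was usually achieved with Sentinel, a separate process that monitors the state of the master node.

If the master fails, Sentinel performs a failover and promotes one of the replicas to master.

The extra Sentinel nodes make deployment more complex and maintenance more costly. Because all writes still go to a single master, this mode also does not scale write throughput or capacity beyond one node.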

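Cluster mode

Redis Cluster is the distributed solution introduced in Redis 3.0.

Masters serve reads and writes. By default replicas act as standbys: they do not serve client requests and exist only for failover.

If a master fails, the cluster also fails over automatically and promotes one of its replicas to master.

Overall, cluster mode is usually the better choice over Sentinel mode.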

I. Building the Redis cluster

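RC (ReplicationController), Deployment, and DaemonSet are all designed for stateless services: the IPs, names, and start/stop order of the Pods they manage are arbitrary. StatefulSet, as the name suggests, is a set with state; it manages stateful services such as MySQL or MongoDB clusters.

StatefulSet is essentially a variant of Deployment and became GA in Kubernetes v1.9. To support stateful services, the Pods it manages have fixed names and a defined start/stop order. In a StatefulSet the Pod name serves as the network identity (hostname), and each Pod is normally paired with persistent storage of its own.

A Deployment is usually fronted by a regular Service, whereas a StatefulSet is paired with a headless Service. The difference from a regular Service is that a headless Service has no Cluster IP; resolving its name returns the endpoints of all the Pods behind it.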

In addition, on top of the headless Service, each Pod replica controlled by the StatefulSet gets its own DNS name, in the following format:

$(pod name).$(headless service name).$(namespace).svc.cluster.local
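To make the naming scheme concrete, here is a minimal shell sketch that prints the per-Pod DNS names for the StatefulSet used later in this article. The function name pod_fqdn is made up for illustration, and the "default" namespace and the "cluster.local" cluster domain are assumptions; adjust them to your cluster.

```shell
#!/usr/bin/env bash
# Print the stable DNS name of one StatefulSet Pod.
# Usage: pod_fqdn <statefulset> <ordinal> <headless-service> <namespace>
pod_fqdn() {
  # Pod names are <statefulset>-<ordinal>; cluster.local is the assumed cluster domain.
  printf '%s-%s.%s.%s.svc.cluster.local\n' "$1" "$2" "$3" "$4"
}

# List the six names for the redis-cluster StatefulSet
# (the namespace "default" is an assumption).
for i in 0 1 2 3 4 5; do
  pod_fqdn redis-cluster "$i" redis-cluster-service default
done
```

These names stay the same when a Pod is rescheduled, while the Pod IP may change.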

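In other words, for stateful services it is best to identify nodes by a fixed network identity (such as a domain name). This also requires support from the application (ZooKeeper, for example, allows host names in its configuration file).

Based on the headless Service (a Service without a Cluster IP), StatefulSet gives Pods a stable network identity (the Pod hostname and DNS records) that stays the same after the Pod is rescheduled. Combined with PV/PVC, StatefulSet also provides stable persistent storage: a rescheduled Pod can still access its original data.

In the architecture used here to deploy Redis with a StatefulSet, every node, master or replica, is one replica of the StatefulSet. Its data is persisted through a PV, and the set is exposed as a Service that accepts client requests.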

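1. redis.conf reference

Note: Redis installed from distribution packages typically uses /var/lib/redis/ and /etc/redis/, while the official Redis image uses /data as its default working directory. To keep the layout tidy, this article mounts redis.conf under /etc/redis/ and puts everything else (log file, data files) under /data.

# [root@master redis]# vi redis.conf    # write a redis.conf configuration file

# [root@master redis]# grep -Ev '^$|^#' redis.conf    # the resulting redis.conf is shown below

# Note: redis.conf only recognizes comments on their own line. Text after a directive, including a trailing "# ...", is parsed as extra arguments.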

bind 0.0.0.0

protected-mode yes

# Redis port (6379 is the default)
port 6379

tcp-backlog 511

timeout 0

tcp-keepalive 300

# Whether Redis runs in the background; must be "no" inside a container
daemonize no

supervised no

# Redis pid file, kept under /data
pidfile /data/redis.pid

loglevel notice

# Redis log file, kept under /data
logfile /data/redis_log

databases 16

always-show-logo yes

save 900 1

save 300 10

save 60 10000

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

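# RDB file name; the file is written to the directory set by "dir" below
dbfilename dump.rdb

# Data directory
dir /data

# Password the cluster nodes use to authenticate to each other; must match requirepass below
masterauth iloveyou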

replica-serve-stale-data yes

replica-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-disable-tcp-nodelay no

replica-priority 100

# Redis password
requirepass iloveyou

lazyfree-lazy-eviction no

lazyfree-lazy-expire no

lazyfree-lazy-server-del no

replica-lazy-flush no

appendonly no

# AOF file name; the file is written to the directory set by "dir" (/data)
appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

aof-use-rdb-preamble yes

lua-time-limit 5000

# Enable cluster mode; this line must be uncommented and set to yes
cluster-enabled yes

# Cluster state file; written to the directory set by "dir" (/data)
cluster-config-file nodes.conf

cluster-node-timeout 15000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

stream-node-max-bytes 4096

stream-node-max-entries 100

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit replica 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

dynamic-hz yes

aof-rewrite-incremental-fsync yes

rdb-save-incremental-fsync yes
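Since Redis rejects a directive that carries a trailing comment, it can help to scan a config file before mounting it. The following is a rough sketch, not an official tool; the function name find_trailing_comments is made up here, and quoted values that legitimately contain "#" would also be reported, so treat the hits as candidates to review.

```shell
#!/usr/bin/env bash
# Report directive lines that carry a trailing "# ..." comment.
# Usage: find_trailing_comments <path-to-redis.conf>
find_trailing_comments() {
  # Match lines that start with a directive (not '#', not blank) and later
  # contain whitespace followed by '#'.
  grep -nE '^[[:space:]]*[^#[:space:]][^#]*[[:space:]]#' "$1"
}

# Example against a small throwaway file.
tmp_conf="$(mktemp)"
printf '%s\n' '# full-line comment' 'port 6379 # trailing comment' 'dir /data' > "$tmp_conf"
find_trailing_comments "$tmp_conf"
rm -f "$tmp_conf"
```

For the throwaway file above, only the second line is reported, prefixed with its line number.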

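2. Creating the StatefulSet

A Redis cluster can be run with either a Deployment or a StatefulSet; here a StatefulSet is used. A StatefulSet needs a headless Service, and this one also mounts a ConfigMap volume and uses dynamically provisioned PVs to persist the Redis data.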

cat > 03-redis-cluster-sts.yaml << 'eof'
apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-cluster-config
data:
  redis-cluster.conf: |
    daemonize no
    supervised no
    protected-mode no
    bind 0.0.0.0
    port 6379
    cluster-announce-bus-port 16379
    cluster-enabled yes
    appendonly yes
    cluster-node-timeout 5000
    dir /data
    cluster-config-file /data/nodes.conf
    requirepass iloveyou
    masterauth iloveyou
    pidfile /data/redis.pid
    loglevel notice
    logfile /data/redis_log
---
apiVersion: v1
kind: Service
metadata:
  name: redis-cluster-service
spec:
  selector:
    app: redis-cluster
  clusterIP: None
  ports:
    - name: redis-6379
      port: 6379
    - name: redis-16379
      port: 16379
---
apiVersion: v1
kind: Service
metadata:
  name: redis-cluster-service-access
spec:
  selector:
    app: redis-cluster
  type: NodePort
  sessionAffinity: None
  ports:
    - name: redis-6379
      port: 6379
      targetPort: 6379
      nodePort: 30202
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: redis-cluster
  name: redis-cluster
spec:
  serviceName: redis-cluster-service
  replicas: 6
  selector:
    matchLabels:
      app: redis-cluster
  template:
    metadata:
      labels:
        app: redis-cluster
    spec:
      terminationGracePeriodSeconds: 30
      containers:
        - name: redis
          image: redis:6.2.6
          imagePullPolicy: IfNotPresent
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          command: [ "redis-server", "/etc/redis/redis-cluster.conf" ]
          args:
            - "--cluster-announce-ip"
            - "$(POD_IP)"
          env:
            - name: HOST_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.hostIP
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: TZ
              value: "Asia/Shanghai"
          ports:
            - name: redis
              containerPort: 6379
              protocol: TCP
            - name: cluster
              containerPort: 16379
              protocol: TCP
          volumeMounts:
            - name: redis-conf
              mountPath: /etc/redis
            - name: pvc-data
              mountPath: /data
      volumes:
        # This volume is defined but not mounted by the container above.
        - name: timezone
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai
        - name: redis-conf
          configMap:
            name: redis-cluster-config
            items:
              - key: redis-cluster.conf
                path: redis-cluster.conf
  volumeClaimTemplates:
    - metadata:
        name: pvc-data
      spec:
        # Access mode is RWO
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 200M
        # Storage class to use, which enables dynamic PV provisioning
        storageClassName: "nfs-boge"
eof

kubectl apply -f 03-redis-cluster-sts.yaml
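Before forming the cluster, all six Pods should be ready. The sketch below wraps two standard kubectl commands in helper functions; the function names and the KUBECTL override variable are illustrative choices of mine, not part of the original setup.

```shell
#!/usr/bin/env bash
# KUBECTL can be overridden, for example KUBECTL=echo for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# Block until every replica of the StatefulSet is ready, or the timeout expires.
# Usage: wait_for_redis_pods [timeout]   (default 300s)
wait_for_redis_pods() {
  "$KUBECTL" rollout status statefulset/redis-cluster --timeout="${1:-300s}"
}

# List the Pods together with their IPs and nodes.
show_redis_pods() {
  "$KUBECTL" get pods -l app=redis-cluster -o wide
}
```

A typical call would be: wait_for_redis_pods 120s && show_redis_pods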

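II. Forming the 3-master, 3-replica cluster

The 6 pods have been created and are all in the Running state. Next, join them into a Redis cluster with 3 masters and 3 replicas.

Command notes:

- --cluster-replicas 1 (--replicas 1 in the older redis-trib.rb tool): sets the number of replicas per master to 1. The number of masters is then the total node count ÷ (replicas + 1). Generally the first n nodes in the list become masters and the remaining nodes are assigned to them as replicas.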
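The arithmetic in the note above can be checked with a few lines of shell. This is only an illustration of the formula; master_count is a name made up here.

```shell
#!/usr/bin/env bash
# Number of masters for a given node count and --cluster-replicas value:
# nodes / (replicas + 1), using integer division.
master_count() {
  echo $(( $1 / ($2 + 1) ))
}

master_count 6 1   # the layout used in this article: 3 masters
master_count 6 2   # only 2 masters; cluster creation needs at least 3
```

With 6 nodes, a replica count of 1 is therefore the largest value that still leaves the 3 masters a Redis cluster needs.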

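# Run the initialization command from any one of the Redis pods; you can exec into the pod first or run it from outside as shown here.

# To collect the IP of every pod, use:

# kubectl get pods -l app=redis-cluster -o jsonpath='{range .items[*]}{.status.podIP}:6379 {end}'

# The cluster is created automatically here: we do not choose which nodes are masters and which are replicas, and let Redis assign the roles. This is also the recommended approach for production.

[root@master redis]# kubectl exec -it redis-cluster-0 -- redis-cli -a iloveyou --cluster create --cluster-replicas 1 $(kubectl get pods -l app=redis-cluster -o jsonpath='{range .items[*]}{.status.podIP}:6379 {end}')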

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 10.244.2.22:6379 to 10.244.2.20:6379

Adding replica 10.244.1.20:6379 to 10.244.1.18:6379

Adding replica 10.244.2.21:6379 to 10.244.1.19:6379

M: 972b376e8cc658b8bf5f2a1a3294cbe2c84ee852 10.244.2.20:6379

slots:[0-5460] (5461 slots) master

M: ac6cc9dd3a86cf370333d36933c99df5f13f42ab 10.244.1.18:6379

slots:[5461-10922] (5462 slots) master

M: 18b4ceacd3222e546ab59e041e4ae50e736c5c26 10.244.1.19:6379

slots:[10923-16383] (5461 slots) master

S: 8394ceff0b32fc7119b65704ea78e9b5bbc2fbd7 10.244.2.21:6379

replicates 18b4ceacd3222e546ab59e041e4ae50e736c5c26

S: 565f9f9931323f8ac0376b7a7ec701f0a2955e8b 10.244.2.22:6379

replicates 972b376e8cc658b8bf5f2a1a3294cbe2c84ee852

S: 5c1270743b6a5f81003da4402f39c360631a2d0f 10.244.1.20:6379

replicates ac6cc9dd3a86cf370333d36933c99df5f13f42ab

Can I set the above configuration? (type 'yes' to accept): yes    # type yes to accept the slot allocation and the master/replica roles proposed by redis-cli

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

..

>>> Performing Cluster Check (using node 10.244.2.20:6379)

M: 972b376e8cc658b8bf5f2a1a3294cbe2c84ee852 10.244.2.20:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 5c1270743b6a5f81003da4402f39c360631a2d0f 10.244.1.20:6379

slots: (0 slots) slave

replicates ac6cc9dd3a86cf370333d36933c99df5f13f42ab

M: 18b4ceacd3222e546ab59e041e4ae50e736c5c26 10.244.1.19:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: ac6cc9dd3a86cf370333d36933c99df5f13f42ab 10.244.1.18:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 565f9f9931323f8ac0376b7a7ec701f0a2955e8b 10.244.2.22:6379

slots: (0 slots) slave

replicates 972b376e8cc658b8bf5f2a1a3294cbe2c84ee852

S: 8394ceff0b32fc7119b65704ea78e9b5bbc2fbd7 10.244.2.21:6379

slots: (0 slots) slave

replicates 18b4ceacd3222e546ab59e041e4ae50e736c5c26

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@master redis]#
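The three slot ranges in the output above come from dividing the 16384 hash slots as evenly as possible. The sketch below reproduces that split by rounding cumulative boundaries. It is my own reconstruction that matches the output shown, not code taken from redis-cli, and slot_ranges is a made-up name.

```shell
#!/usr/bin/env bash
# Split the 16384 hash slots across N masters by rounding cumulative boundaries.
# Usage: slot_ranges <number-of-masters>
slot_ranges() {
  awk -v masters="$1" 'BEGIN {
    total = 16384
    per_master = total / masters          # fractional slots per master
    first = 0
    cursor = 0.0
    for (i = 0; i < masters; i++) {
      last = int(cursor + per_master - 1 + 0.5)   # round to nearest integer
      if (i == masters - 1 || last > total - 1) last = total - 1
      printf "Master[%d] -> Slots %d - %d\n", i, first, last
      first = last + 1
      cursor += per_master
    }
  }'
}

slot_ranges 3
```

For 3 masters this prints the same ranges as the redis-cli output: 0 - 5460, 5461 - 10922, and 10923 - 16383.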

1. Verifying Redis

# Write one hundred entries into the cluster.

for line in {1..100}; do kubectl exec -it redis-cluster-0 -- redis-cli -c -p 6379 -a iloveyou set ops_${line} ${line}; done

# Read them back to check that the data is readable.

for line in {1..100}; do kubectl exec -it redis-cluster-1 -- redis-cli -c -p 6379 -a iloveyou get ops_${line}; done
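The loops above start one kubectl exec per key, which is slow. As an alternative sketch, the commands can be generated first and piped into a single redis-cli session, since redis-cli reads commands from standard input when it is not attached to a terminal. The function names and the KUBECTL override are illustrative assumptions, and redirect handling with -c in piped mode should be verified in your environment.

```shell
#!/usr/bin/env bash
# KUBECTL can be overridden, for example KUBECTL=echo for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# Emit one "SET ops_<n> <n>" command per line for n in [first, last].
# Usage: gen_set_cmds <first> <last>
gen_set_cmds() {
  n="$1"
  while [ "$n" -le "$2" ]; do
    printf 'SET ops_%d %d\n' "$n" "$n"
    n=$((n + 1))
  done
}

# Send the commands to redis-cli inside a Pod over a single exec session.
# -i keeps stdin open; -c lets redis-cli follow cluster redirects.
# Usage: write_keys <pod> <first> <last>
write_keys() {
  gen_set_cmds "$2" "$3" |
    "$KUBECTL" exec -i "$1" -- redis-cli -c -p 6379 -a iloveyou --no-auth-warning
}
```

For example, write_keys redis-cluster-0 1 100 would write the same hundred keys as the first loop.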

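# Log in to Redis

kubectl exec -it redis-cluster-0 -- redis-cli -c -p 6379 -a iloveyou

# Show the cluster status

127.0.0.1:6379> cluster info

# Show the cluster's node list

127.0.0.1:6379> cluster nodes

# Insert a key

127.0.0.1:6379> set num 111

# List all keys (KEYS only returns the keys stored on the node you are connected to)

127.0.0.1:6379> keys *

# Delete the key

127.0.0.1:6379> del num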

#登入redis0

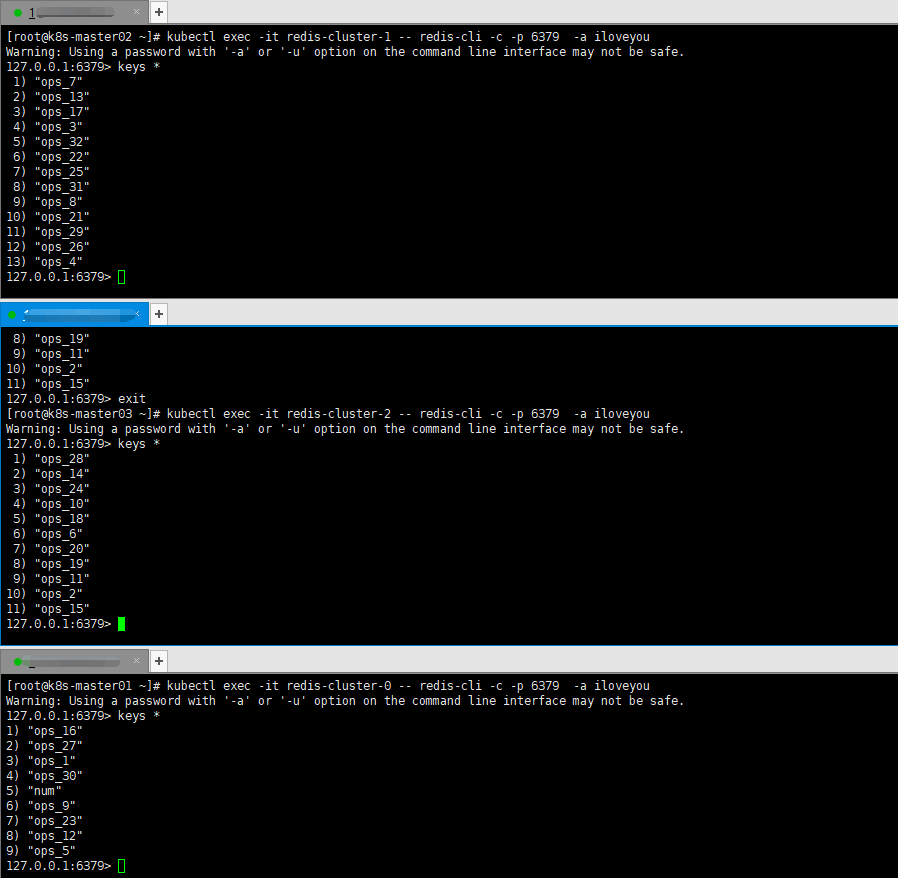

kubectl exec -it redis-cluster-0 -- redis-cli -c -p 6379 -a iloveyou

#遍历所有的key

127.0.0.1:6379> keys *

#登入redis1

kubectl exec -it redis-cluster-1 -- redis-cli -c -p 6379 -a iloveyou

#遍历所有的key

127.0.0.1:6379> keys *

#登入redis2

kubectl exec -it redis-cluster-2 -- redis-cli -c -p 6379 -a iloveyou

#遍历所有的key

127.0.0.1:6379> keys *

2. Verifying by deleting a pod

2.1 Checking the cluster state

[root@k8s-master01 redis]# kubectl get po -owide

NAME              READY   STATUS    RESTARTS   AGE   IP               NODE         NOMINATED NODE   READINESS GATES
redis-cluster-0   1/1     Running   0          61m   100.125.152.57   k8s-node02   <none>           <none>
redis-cluster-1   1/1     Running   0          33m   100.97.125.39    k8s-node01   <none>           <none>
redis-cluster-2   1/1     Running   0          60m   100.125.152.22   k8s-node02   <none>           <none>
redis-cluster-3   1/1     Running   0          60m   100.97.125.10    k8s-node01   <none>           <none>
redis-cluster-4   1/1     Running   0          60m   100.125.152.41   k8s-node02   <none>           <none>
redis-cluster-5   1/1     Running   0          60m   100.97.125.57    k8s-node01   <none>           <none>

[root@k8s-master01 redis]#

# Log in to Redis

kubectl exec -it redis-cluster-0 -- redis-cli -c -p 6379 -a iloveyou

# Show the cluster's node list

127.0.0.1:6379> cluster nodes

e4189ba4094320d4f379fb0cbc78cac6cdb3abe6 100.97.125.10:6379@16379 slave 0e3cae56f05f211c35b4401f6a0e61454ca4f64a 0 1693891070028 3 connected

9cdff4df51646dd440e5005bac7f0cb5f4470d9b 100.125.152.41:6379@16379 slave 0a228f6fbd7fc75247180ac31516146e8abd0466 0 1693891069927 1 connected

93ccc4c795f74da6acf84821bf372a2414375f55 100.97.125.39:6379@16379 master - 0 1693891070430 2 connected 5461-10922

ff960d1a1aca448a391b69da155947b4c627677e 100.97.125.57:6379@16379 slave 93ccc4c795f74da6acf84821bf372a2414375f55 0 1693891069000 2 connected

0e3cae56f05f211c35b4401f6a0e61454ca4f64a 100.125.152.22:6379@16379 master - 0 1693891069426 3 connected 10923-16383

0a228f6fbd7fc75247180ac31516146e8abd0466 100.125.152.57:6379@16379 myself,master - 0 1693891069000 1 connected 0-5460
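Reading the roles off six long lines by eye is error-prone, so a small filter can count them instead. This sketch reads "cluster nodes" output on standard input and relies on the third field holding the comma-separated flags, as in the listing above; count_roles is a made-up name.

```shell
#!/usr/bin/env bash
# Count masters and replicas in "cluster nodes" output read from stdin.
# Field 3 holds comma-separated flags such as "myself,master" or "slave".
count_roles() {
  awk '
    $3 ~ /(^|,)master(,|$)/ { masters++ }
    $3 ~ /(^|,)slave(,|$)/  { replicas++ }
    END { printf "masters=%d replicas=%d\n", masters, replicas }
  '
}

# Example with two lines copied from the output above.
count_roles <<'EOF'
9cdff4df51646dd440e5005bac7f0cb5f4470d9b 100.125.152.41:6379@16379 slave 0a228f6fbd7fc75247180ac31516146e8abd0466 0 1693891069927 1 connected
0a228f6fbd7fc75247180ac31516146e8abd0466 100.125.152.57:6379@16379 myself,master - 0 1693891069000 1 connected 0-5460
EOF
```

Against the live cluster, the output of redis-cli cluster nodes can be piped into count_roles; a healthy cluster from this article should report 3 masters and 3 replicas.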

2.2 Deleting a pod

[root@k8s-master01 redis]# kubectl get po -owide

NAME              READY   STATUS    RESTARTS   AGE   IP               NODE         NOMINATED NODE   READINESS GATES
redis-cluster-0   1/1     Running   0          61m   100.125.152.57   k8s-node02   <none>           <none>
redis-cluster-1   1/1     Running   0          33m   100.97.125.39    k8s-node01   <none>           <none>
redis-cluster-2   1/1     Running   0          60m   100.125.152.22   k8s-node02   <none>           <none>
redis-cluster-3   1/1     Running   0          60m   100.97.125.10    k8s-node01   <none>           <none>
redis-cluster-4   1/1     Running   0          60m   100.125.152.41   k8s-node02   <none>           <none>
redis-cluster-5   1/1     Running   0          60m   100.97.125.57    k8s-node01   <none>           <none>

[root@k8s-master01 redis]# kubectl delete po redis-cluster-5 --force

warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.

pod "redis-cluster-5" force deleted

[root@k8s-master01 redis]# kubectl get po -owide

NAME              READY   STATUS    RESTARTS   AGE   IP               NODE         NOMINATED NODE   READINESS GATES
redis-cluster-0   1/1     Running   0          62m   100.125.152.57   k8s-node02   <none>           <none>
redis-cluster-1   1/1     Running   0          35m   100.97.125.39    k8s-node01   <none>           <none>
redis-cluster-2   1/1     Running   0          62m   100.125.152.22   k8s-node02   <none>           <none>
redis-cluster-3   1/1     Running   0          62m   100.97.125.10    k8s-node01   <none>           <none>
redis-cluster-4   1/1     Running   0          61m   100.125.152.41   k8s-node02   <none>           <none>
redis-cluster-5   1/1     Running   0          6s    100.97.125.37    k8s-node01   <none>           <none>

[root@k8s-master01 redis]#

2.3 Verifying the Redis cluster

[root@k8s-master01 redis]# kubectl exec -it redis-cluster-1 -- redis-cli -c -p 6379 -a iloveyou

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:6379> cluster nodes

e4189ba4094320d4f379fb0cbc78cac6cdb3abe6 100.97.125.10:6379@16379 slave 0e3cae56f05f211c35b4401f6a0e61454ca4f64a 0 1693891297573 3 connected

9cdff4df51646dd440e5005bac7f0cb5f4470d9b 100.125.152.41:6379@16379 slave 0a228f6fbd7fc75247180ac31516146e8abd0466 0 1693891297073 1 connected

93ccc4c795f74da6acf84821bf372a2414375f55 100.97.125.39:6379@16379 myself,master - 0 1693891297000 2 connected 5461-10922

0e3cae56f05f211c35b4401f6a0e61454ca4f64a 100.125.152.22:6379@16379 master - 0 1693891297573 3 connected 10923-16383

ff960d1a1aca448a391b69da155947b4c627677e 100.97.125.37:6379@16379 slave 93ccc4c795f74da6acf84821bf372a2414375f55 0 1693891297073 2 connected

0a228f6fbd7fc75247180ac31516146e8abd0466 100.125.152.57:6379@16379 master - 0 1693891296571 1 connected 0-5460

127.0.0.1:6379>
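Comparing the two node lists shows that the recreated pod kept its node ID while its IP changed from 100.97.125.57 to 100.97.125.37. My reading is that the ID survives because nodes.conf lives under /data on the pod's PVC, and the new address is picked up through the --cluster-announce-ip argument; treat that explanation as an assumption rather than something this test proves. The sketch below extracts the ID and IP pairs so the two states can be compared; node_addrs is a made-up name.

```shell
#!/usr/bin/env bash
# Print "<node-id> <ip>" for each line of "cluster nodes" output on stdin.
node_addrs() {
  awk '{ split($2, addr, ":"); print $1, addr[1] }' | sort
}

# The same replica as it appears before and after the pod was deleted
# (lines copied from the two listings in this article).
before='ff960d1a1aca448a391b69da155947b4c627677e 100.97.125.57:6379@16379 slave 93ccc4c795f74da6acf84821bf372a2414375f55 0 1693891069000 2 connected'
after='ff960d1a1aca448a391b69da155947b4c627677e 100.97.125.37:6379@16379 slave 93ccc4c795f74da6acf84821bf372a2414375f55 0 1693891297073 2 connected'

echo "before: $(printf '%s\n' "$before" | node_addrs)"
echo "after:  $(printf '%s\n' "$after" | node_addrs)"
```

The node ID is identical on both lines and only the IP differs.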

Verifying reads and writes

del num

# Read the data back to check that it is still readable.

for line in {1..100}; do kubectl exec -it redis-cluster-4 -- redis-cli -c -p 6379 -a iloveyou get ops_${line}; done

# Write 200 entries into the cluster.

for line in {1..200}; do kubectl exec -it redis-cluster-5 -- redis-cli -c -p 6379 -a iloveyou set ops_${line} ${line}; done

# Delete the 200 entries again.

for line in {1..200}; do kubectl exec -it redis-cluster-2 -- redis-cli -c -p 6379 -a iloveyou del ops_${line}; done

# Write 32 entries into the cluster.

for line in {1..32}; do kubectl exec -it redis-cluster-5 -- redis-cli -c -p 6379 -a iloveyou set ops_${line} ${line}; done