First, a note: the canal version used here is 1.1.5.

Before describing the problem, it helps to have a basic understanding of canal's architecture.

How canal works

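- canal emulates the MySQL slave interaction protocol, disguises itself as a MySQL slave, and sends a dump request to the MySQL master

- The MySQL master receives the dump request and starts pushing the binary log to the slave (i.e. canal)

- canal parses the binary log objects (originally a raw byte stream)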
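To make the last bullet concrete, here is a minimal Python sketch of the very first step of that parsing: decoding the 19-byte common header that every MySQL binlog v4 event starts with (timestamp, type code, server id, event length, next position, flags, all little-endian). It is an illustration only, not canal's own parser (canal does this in Java). It assumes the bytes are raw events with any network packet framing already stripped, and the event-type table covers just a handful of codes.

```python
import struct
from dataclasses import dataclass
from typing import Iterator

# Common header of a binlog v4 event: 4+1+4+4+4+2 = 19 bytes, little-endian, no padding.
HEADER = struct.Struct("<IBIIIH")

# A few well-known event type codes (not an exhaustive list).
EVENT_TYPES = {
    2: "QUERY_EVENT",
    4: "ROTATE_EVENT",
    15: "FORMAT_DESCRIPTION_EVENT",
    16: "XID_EVENT",
    19: "TABLE_MAP_EVENT",
    30: "WRITE_ROWS_EVENT",
    31: "UPDATE_ROWS_EVENT",
    32: "DELETE_ROWS_EVENT",
}


@dataclass
class EventHeader:
    timestamp: int      # seconds since the epoch
    type_code: int
    server_id: int      # server that originally wrote the event
    event_length: int   # header + body, in bytes
    next_position: int  # offset of the next event in the binlog file
    flags: int


def parse_header(buf: bytes, offset: int = 0) -> EventHeader:
    """Decode the event header that starts at `offset`."""
    if len(buf) - offset < HEADER.size:
        raise ValueError("a binlog v4 event header needs 19 bytes")
    return EventHeader(*HEADER.unpack_from(buf, offset))


def iter_headers(buf: bytes) -> Iterator[EventHeader]:
    """Walk a buffer of back-to-back events, jumping by each event_length."""
    offset = 0
    while offset < len(buf):
        header = parse_header(buf, offset)
        if header.event_length < HEADER.size:
            raise ValueError(f"corrupt event_length {header.event_length} at offset {offset}")
        yield header
        offset += header.event_length
```

A real parser would then dispatch on `type_code` to decode the event body, which is where table metadata comes into play for row events.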

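Components of a canal environment

canal-server (canal-deployer): listens to the MySQL binlog directly by posing as a MySQL replica; it only receives the data and does not process it.

canal-adapter: acts as canal's client; it fetches data from canal-server and synchronizes it to stores such as MySQL, Elasticsearch, and HBase.

canal-admin: provides ops-oriented features for canal, such as centralized configuration management and node operations, through a relatively friendly web UI, so that more users can operate canal quickly and safely.

canal cluster architecture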

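There are several articles on setting up a cluster, for example:

Canal Admin High-Availability Cluster Tutorial (Tencent Cloud Developer Community)

From these you can get a rough idea of the cluster environment, the components it needs, and what each one does.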

Could not find first log file name in binary log index file

2023-09-07 16:15:57.322 [destination = example , address = /192.168.6.168:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - ---> find start position successfully, EntryPosition[included=false,journalName=mysql-bin.000192,position=270118817,serverId=101,gtid=,timestamp=1662998460000] cost : 394ms , the next step is binlog dump

2023-09-07 16:15:57.334 [destination = example , address = /192.168.6.168:3306 , EventParser] ERROR c.a.o.canal.parse.inbound.mysql.dbsync.DirectLogFetcher - I/O error while reading from client socket

java.io.IOException: Received error packet: errno = 1236, sqlstate = HY000 errmsg = Could not find first log file name in binary log index file

at com.alibaba.otter.canal.parse.inbound.mysql.dbsync.DirectLogFetcher.fetch(DirectLogFetcher.java:102) ~[canal.parse-1.1.5.jar:na]

at com.alibaba.otter.canal.parse.inbound.mysql.MysqlConnection.dump(MysqlConnection.java:238) [canal.parse-1.1.5.jar:na]

at com.alibaba.otter.canal.parse.inbound.AbstractEventParser$1.run(AbstractEventParser.java:262) [canal.parse-1.1.5.jar:na]

at java.lang.Thread.run(Thread.java:748) [na:1.8.0_181]

2023-09-07 16:15:57.335 [destination = example , address = /192.168.6.168:3306 , EventParser] ERROR c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - dump address /192.168.6.168:3306 has an error, retrying. caused by

java.io.IOException: Received error packet: errno = 1236, sqlstate = HY000 errmsg = Could not find first log file name in binary log index file

at com.alibaba.otter.canal.parse.inbound.mysql.dbsync.DirectLogFetcher.fetch(DirectLogFetcher.java:102) ~[canal.parse-1.1.5.jar:na]

at com.alibaba.otter.canal.parse.inbound.mysql.MysqlConnection.dump(MysqlConnection.java:238) ~[canal.parse-1.1.5.jar:na]

at com.alibaba.otter.canal.parse.inbound.AbstractEventParser$1.run(AbstractEventParser.java:262) ~[canal.parse-1.1.5.jar:na]

at java.lang.Thread.run(Thread.java:748) [na:1.8.0_181]

2023-09-07 16:15:57.336 [destination = example , address = /192.168.6.168:3306 , EventParser] ERROR com.alibaba.otter.canal.common.alarm.LogAlarmHandler - destination:example[java.io.IOException: Received error packet: errno = 1236, sqlstate = HY000 errmsg = Could not find first log file name in binary log index file

at com.alibaba.otter.canal.parse.inbound.mysql.dbsync.DirectLogFetcher.fetch(DirectLogFetcher.java:102)

at com.alibaba.otter.canal.parse.inbound.mysql.MysqlConnection.dump(MysqlConnection.java:238)

at com.alibaba.otter.canal.parse.inbound.AbstractEventParser$1.run(AbstractEventParser.java:262)

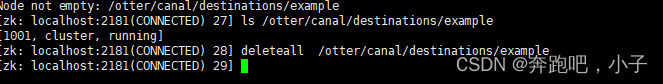

at java.lang.Thread.run(Thread.java:748)因为集群中环境中,canal server会在zookeeper中记录下当前最后一次消费成功的binlog位点,所以我们需要删除zookeeper的节点信息,客户端连接zookeeper删除指定目录数据
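Before deleting anything, it can be worth confirming that diagnosis. The following Python sketch pulls journalName, position, and timestamp out of canal's "find start position successfully" log line and compares the file name with the binlog list reported by the master (for example the Log_name column of SHOW BINARY LOGS). The function names and the sample file list are hypothetical; the comparison assumes all binlog files share the same base name and zero-padded numeric suffix.

```python
import re
from datetime import datetime, timezone


def parse_start_position(log_line: str) -> dict:
    """Extract journalName/position/timestamp from canal's EntryPosition[...] text."""
    fields = {}
    for key in ("journalName", "position", "timestamp"):
        match = re.search(rf"\b{key}=([^,\]]*)", log_line)
        if match is None:
            raise ValueError(f"{key}= not found in log line")
        fields[key] = match.group(1)
    return {
        "journalName": fields["journalName"],
        "position": int(fields["position"]),
        # canal logs the timestamp in milliseconds
        "saved_at": datetime.fromtimestamp(int(fields["timestamp"]) / 1000, tz=timezone.utc),
    }


def binlog_seq(name: str) -> int:
    """mysql-bin.000192 -> 192"""
    return int(name.rsplit(".", 1)[1])


def diagnose(journal_name: str, available: list) -> str:
    """Classify a saved journalName against the binlog files the server still has."""
    if journal_name in available:
        return "present"
    if not available:
        return "no binlogs listed"
    oldest = min(available, key=binlog_seq)
    if binlog_seq(journal_name) < binlog_seq(oldest):
        return "purged: saved position is older than the oldest binlog on the server"
    return "missing: check that canal points at the right MySQL server"
```

If the result starts with "purged", resetting the stored position, as described next, is the way out; the old file cannot be replayed.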

/otter/canal/destinations/{instance name}/1001/cursor
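The path is a template: the instance (destination) name, then the client id, then `cursor`. A minimal Python sketch that builds it, assuming the client id is 1001 as in the path above (it may differ if your consumer registers another id). Assuming the cursor node has no children, zkCli.sh's `delete` command is enough to remove it.

```python
def cursor_path(destination: str, client_id: int = 1001) -> str:
    """ZooKeeper node holding the last acknowledged binlog position for one client."""
    if not destination or "/" in destination:
        raise ValueError("destination must be a non-empty instance name without '/'")
    return f"/otter/canal/destinations/{destination}/{client_id}/cursor"


if __name__ == "__main__":
    # Prints the zkCli.sh command that removes the cursor of the "example" instance.
    print(f"delete {cursor_path('example')}")
```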

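Then restart canal and check whether table synchronization has recovered.

After deleting the node, I checked the Instance in canal-admin, and its log now showed a different error: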

com.alibaba.otter.canal.parse.exception.CanalParseException: column size is not match for table

When this error occurs, the parser thread gets stuck: no further binlog events are received or parsed.

Caused by: com.alibaba.otter.canal.parse.exception.CanalParseException: column size is not match for table:mx_oms.om_logistics_task_header,138 vs 132

2023-09-07 17:38:46.312 [destination = example , address = /192.168.6.168:3306 , EventParser] ERROR com.alibaba.otter.canal.common.alarm.LogAlarmHandler - destination:example[com.alibaba.otter.canal.parse.exception.CanalParseException: com.alibaba.otter.canal.parse.exception.CanalParseException: parse row data failed.

Caused by: com.alibaba.otter.canal.parse.exception.CanalParseException: parse row data failed.

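Caused by: com.alibaba.otter.canal.parse.exception.CanalParseException: column size is not match for table:mx_oms.om_logistics_task_header,138 vs 132

My first reaction was that this was related to our table metadata: the row event in the binlog carries 138 columns, while the table structure canal has cached for mx_oms.om_logistics_task_header has only 132. I also remembered that table metadata had been configured somewhere earlier.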
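A simplified Python model of the check behind this message, assuming the first number is the column count in the binlog row event and the second the column count of the cached table metadata. It is not canal's code, and the function names are hypothetical, but it shows why restarting alone does not help: the parser resumes at the same event, meets the same stale metadata, and fails again until that metadata is refreshed.

```python
class ColumnSizeMismatch(Exception):
    """Raised when a row event has more columns than the cached table metadata."""


def check_row_columns(full_name: str, binlog_columns: int, cached_columns: list) -> None:
    # Same wording as the log message: "<binlog columns> vs <cached metadata columns>"
    if binlog_columns > len(cached_columns):
        raise ColumnSizeMismatch(
            f"column size is not match for table:{full_name},"
            f"{binlog_columns} vs {len(cached_columns)}"
        )


def replay(full_name: str, binlog_columns: int, cached_columns: list, attempts: int) -> int:
    """Retry the same event `attempts` times; return how many attempts failed."""
    failures = 0
    for _ in range(attempts):
        try:
            check_row_columns(full_name, binlog_columns, cached_columns)
            return failures
        except ColumnSizeMismatch:
            failures += 1  # the cached metadata never changes, so neither does the outcome
    return failures
```

With 132 cached columns every retry fails; once the metadata is rebuilt with 138 columns the same event passes on the first try.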

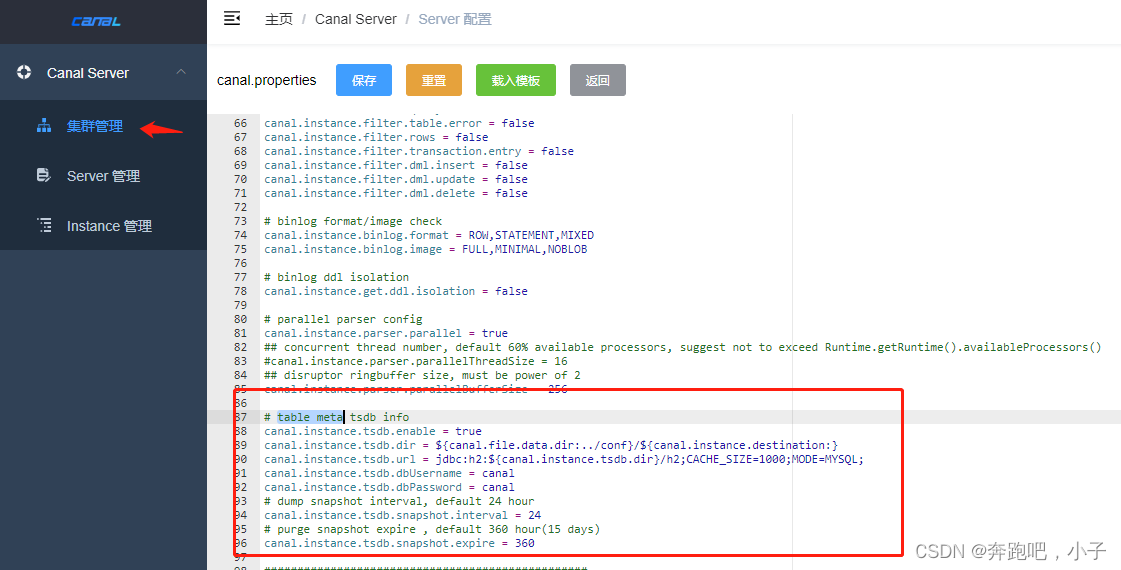

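Logging in to canal-admin, you can see that H2, an open-source lightweight database, stores the table-structure data.

This feature was introduced to fix the table-structure consistency problem of earlier canal versions.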
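The idea behind that store is "time travel" for table structures: keep the DDL history together with the binlog position at which each change happened, so that a row event can be decoded with the schema that was valid at its position rather than the current one. A conceptual, in-memory Python sketch of that lookup follows; the class and method names are hypothetical, and canal itself persists this data in H2 or MySQL and also takes periodic snapshots.

```python
import bisect
from typing import Dict, List, Tuple

# A binlog position: (journal file name, byte offset). Tuples compare the file first,
# which works as long as file names share a prefix and a zero-padded suffix.
Position = Tuple[str, int]


class TableMetaHistory:
    def __init__(self) -> None:
        self._versions: Dict[str, List[Tuple[Position, Tuple[str, ...]]]] = {}

    def apply_ddl(self, table: str, position: Position, columns: List[str]) -> None:
        """Record the column list that is valid from `position` onwards."""
        versions = self._versions.setdefault(table, [])
        if versions and position <= versions[-1][0]:
            raise ValueError("DDL positions must be strictly increasing")
        versions.append((position, tuple(columns)))

    def columns_at(self, table: str, position: Position) -> Tuple[str, ...]:
        """Column list of `table` as of `position` (latest DDL at or before it)."""
        versions = self._versions.get(table, [])
        index = bisect.bisect_right([pos for pos, _ in versions], position) - 1
        if index < 0:
            raise KeyError(f"no metadata for {table} at {position}")
        return versions[index][1]
```

If the history is missing a DDL, for instance because the store on a node never saw it, the lookup silently returns the older column list, which would produce a mismatch like the 138 vs 132 above.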

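One thing worth pointing out: if you run a canal cluster, the tsdb that keeps table structures consistent across DDL changes must be stored in MySQL. The H2 files sit on the local disk of each canal-server node and are not shared when an instance moves to another node. I mention this because, surprisingly, the cluster I was troubleshooting had this MySQL configuration disabled.

For example, a tsdb configuration like the following: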

canal.instance.tsdb.enable=true

canal.instance.tsdb.url=jdbc:mysql://192.168.6.168:3306/canal_manager

canal.instance.tsdb.dbUsername=xxxxx

canal.instance.tsdb.dbPassword=xxxxx

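canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml

Back to the fix: go to the instance directory under the canal-server installation directory; inside the Docker container, that is /home/admin/canal-server/conf/example.

Delete the files there whose names start with h2., then restart canal-server.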
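If you prefer to script this step, here is a minimal Python sketch. The function name is hypothetical; it only matches regular files whose names start with `h2.` in the given instance directory, and it defaults to a dry run that just returns what it would delete.

```python
from pathlib import Path
from typing import List


def remove_h2_files(instance_dir: str, dry_run: bool = True) -> List[Path]:
    """Delete (or, with dry_run=True, only list) the h2.* files of one canal instance."""
    root = Path(instance_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"{root} is not a directory")
    # Only files directly inside the instance directory; other config files are left alone.
    targets = sorted(p for p in root.glob("h2.*") if p.is_file())
    if not dry_run:
        for path in targets:
            path.unlink()
    return targets
```

For example, call `remove_h2_files("/home/admin/canal-server/conf/example")` inside the container to review the list, then repeat with `dry_run=False` before restarting canal-server.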

I tested table synchronization once more, and this time the data finally synced successfully.