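I have recently been working on a project that uses Spring Boot, MyBatis and Druid with multiple data sources. The catch is that the number and names of the data sources are not known in advance: they are declared in application.properties and selected dynamically at runtime according to some condition.

This rules out the approach described in the Druid documentation, where each DruidDataSource is created with its own @ConfigurationProperties annotation. That approach is hard-coded: every additional data source needs additional code. What I wanted was a single piece of DataSource-building code, so that adding or removing a data source only requires a change to the configuration file.

Spring's AbstractRoutingDataSource can switch between DataSources at runtime. However, the DataSourceBuilder usually used to create its target DataSources only produces a basic Spring JDBC DataSource, not the fully configured DruidDataSource we need, so the targets have to be built by hand.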
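The routing idea can be pictured without Spring at all. The following is a minimal sketch in plain Java, not Spring's actual implementation: `RoutingSketch`, `use`, `clear` and `resolve` are hypothetical names, and the fallback to the default target for a missing or unknown key is a simplification of what AbstractRoutingDataSource does with its default settings.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical stand-in for the routing idea; this is not Spring's class.
class RoutingSketch<T> {
    // Per-thread lookup key, playing the role of determineCurrentLookupKey().
    private static final ThreadLocal<String> CURRENT_KEY = new ThreadLocal<>();

    private final Map<String, T> targets = new LinkedHashMap<>();
    private final T defaultTarget;

    RoutingSketch(Map<String, T> targets, T defaultTarget) {
        this.targets.putAll(targets);
        this.defaultTarget = defaultTarget;
    }

    // Select a target for the calling thread only.
    static void use(String key) {
        CURRENT_KEY.set(key);
    }

    // Forget the selection made by the calling thread.
    static void clear() {
        CURRENT_KEY.remove();
    }

    // Resolve the target for the calling thread; fall back to the default
    // when no key is set or the key is unknown.
    T resolve() {
        String key = CURRENT_KEY.get();
        T target = (key == null) ? null : targets.get(key);
        return (target != null) ? target : defaultTarget;
    }
}
```

Because the key lives in a ThreadLocal, two requests handled on different threads can point at different targets at the same time without interfering with each other.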

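I used the following article as a reference:

http://412887952-qq-com.iteye.com/blog/2303075

It explains how to build multiple data sources with AbstractRoutingDataSource, but it has two shortcomings:

1) The DataSources placed in targetDataSources only carry basic properties such as driverClassName, username, password and url. For a DataSource as feature-rich as DruidDataSource, setting only these properties is not enough.

2) The AbstractRoutingDataSource is registered through an ImportBeanDefinitionRegistrar, which is not very intuitive.

My solution is a modified version of that one.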

On my local MySQL server I created three databases, testdb_1, testdb_2 and testdb_3, each containing one student table.

The table is created with the following statement:

CREATE TABLE `student` (
    `ID` int(11) NOT NULL AUTO_INCREMENT,
    `NAME` varchar(20) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,
    `CLASS_NAME` varchar(30) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,
    `CREATE_DATE` timestamp(0) NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP(0),
    `UPDATE_DATE` timestamp(0) NOT NULL ON UPDATE CURRENT_TIMESTAMP(0),
    PRIMARY KEY (`ID`) USING BTREE
) ENGINE = InnoDB AUTO_INCREMENT = 0 CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;

I also created three users, appuser_1, appuser_2 and appuser_3, one for each database (each user must be granted the privileges needed to access its database).

We then create a Spring Boot project named SpringBootDruidMultiDB and import mybatis-spring-boot-starter, spring-boot-starter-web and spring-boot-starter-test. To make Druid easier to use, the project also imports druid-spring-boot-starter. Because logging is done with Log4j2, the libraries Log4j2 needs are added as well. The dependencies in the pom file are as follows:

<dependencies>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid-spring-boot-starter</artifactId>

<version>1.1.6</version>

</dependency>

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>1.3.1</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-aop</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

<version>2.10.0</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>2.10.0</version>

</dependency>

<dependency>

<groupId>com.lmax</groupId>

<artifactId>disruptor</artifactId>

<version>3.3.7</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.45</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.43</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>3.7</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-collections4</artifactId>

<version>4.1</version>

</dependency>

<dependency>

<groupId>commons-logging</groupId>

<artifactId>commons-logging</artifactId>

<version>1.2</version>

</dependency>

</dependencies>

Edit application.properties under src/main/resources and add the connection details of each data source:

spring.custom.datasource.name=db1,db2,db3

spring.custom.datasource.db1.name=db1

spring.custom.datasource.db1.type=com.alibaba.druid.pool.DruidDataSource

spring.custom.datasource.db1.driver-class-name=com.mysql.jdbc.Driver

spring.custom.datasource.db1.url=jdbc:mysql://localhost:3306/testdb_1?characterEncoding=utf8&autoReconnect=true&useSSL=false&useAffectedRows=true

spring.custom.datasource.db1.username=appuser1

spring.custom.datasource.db1.password=admin

spring.custom.datasource.db2.name=db2

spring.custom.datasource.db2.type=com.alibaba.druid.pool.DruidDataSource

spring.custom.datasource.db2.driver-class-name=com.mysql.jdbc.Driver

spring.custom.datasource.db2.url=jdbc:mysql://localhost:3306/testdb_2?characterEncoding=utf8&autoReconnect=true&useSSL=false&useAffectedRows=true

spring.custom.datasource.db2.username=appuser2

spring.custom.datasource.db2.password=admin

spring.custom.datasource.db3.name=db3

spring.custom.datasource.db3.type=com.alibaba.druid.pool.DruidDataSource

spring.custom.datasource.db3.driver-class-name=com.mysql.jdbc.Driver

spring.custom.datasource.db3.url=jdbc:mysql://localhost:3306/testdb_3?characterEncoding=utf8&autoReconnect=true&useSSL=false&useAffectedRows=true

spring.custom.datasource.db3.username=appuser3

spring.custom.datasource.db3.password=admin

Then add the DruidDataSource properties:

spring.datasource.druid.initial-size=5

spring.datasource.druid.min-idle=5

spring.datasource.druid.async-init=true

spring.datasource.druid.async-close-connection-enable=true

spring.datasource.druid.max-active=20

spring.datasource.druid.max-wait=60000

spring.datasource.druid.time-between-eviction-runs-millis=60000

spring.datasource.druid.min-evictable-idle-time-millis=30000

spring.datasource.druid.validation-query=SELECT 1 FROM DUAL

spring.datasource.druid.test-while-idle=true

spring.datasource.druid.test-on-borrow=false

spring.datasource.druid.test-on-return=false

spring.datasource.druid.pool-prepared-statements=true

spring.datasource.druid.max-pool-prepared-statement-per-connection-size=20

spring.datasource.druid.filters=stat,wall,log4j2

spring.datasource.druid.connectionProperties=druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000

spring.datasource.druid.web-stat-filter.enabled=true

spring.datasource.druid.web-stat-filter.url-pattern=/*

spring.datasource.druid.web-stat-filter.exclusions=*.js,*.gif,*.jpg,*.png,*.css,*.ico,/druid/*

spring.datasource.druid.web-stat-filter.session-stat-enable=true

spring.datasource.druid.web-stat-filter.profile-enable=true

spring.datasource.druid.stat-view-servlet.enabled=true

spring.datasource.druid.stat-view-servlet.url-pattern=/druid/*

spring.datasource.druid.stat-view-servlet.login-username=admin

spring.datasource.druid.stat-view-servlet.login-password=admin

spring.datasource.druid.stat-view-servlet.reset-enable=false

spring.datasource.druid.stat-view-servlet.allow=127.0.0.1

spring.datasource.druid.filter.wall.enabled=true

spring.datasource.druid.filter.wall.db-type=mysql

spring.datasource.druid.filter.wall.config.alter-table-allow=false

spring.datasource.druid.filter.wall.config.truncate-allow=false

spring.datasource.druid.filter.wall.config.drop-table-allow=false

spring.datasource.druid.filter.wall.config.none-base-statement-allow=false

spring.datasource.druid.filter.wall.config.update-where-none-check=true

spring.datasource.druid.filter.wall.config.select-into-outfile-allow=false

spring.datasource.druid.filter.wall.config.metadata-allow=true

spring.datasource.druid.filter.wall.log-violation=true

spring.datasource.druid.filter.wall.throw-exception=true

spring.datasource.druid.filter.stat.log-slow-sql= true

spring.datasource.druid.filter.stat.slow-sql-millis=1000

spring.datasource.druid.filter.stat.merge-sql=true

spring.datasource.druid.filter.stat.db-type=mysql

spring.datasource.druid.filter.stat.enabled=true

spring.datasource.druid.filter.log4j2.enabled=true

spring.datasource.druid.filter.log4j2.connection-log-enabled=true

spring.datasource.druid.filter.log4j2.connection-close-after-log-enabled=true

spring.datasource.druid.filter.log4j2.connection-commit-after-log-enabled=true

spring.datasource.druid.filter.log4j2.connection-connect-after-log-enabled=true

spring.datasource.druid.filter.log4j2.connection-connect-before-log-enabled=true

spring.datasource.druid.filter.log4j2.connection-log-error-enabled=true

spring.datasource.druid.filter.log4j2.data-source-log-enabled=true

spring.datasource.druid.filter.log4j2.result-set-log-enabled=true

spring.datasource.druid.filter.log4j2.statement-log-enabled=true

Add a log4j2.xml file under the src/main/resources directory:

<?xml version="1.0" encoding="UTF-8"?>

<Configuration status="OFF">

<properties>

<property name="logPath">./logs/</property>

</properties>

<Appenders>

<Console name="Console" target="SYSTEM_OUT" ignoreExceptions="false">

<PatternLayout pattern="%d [%t] %-5p %c - %m%n"/>

<ThresholdFilter level="trace" onMatch="ACCEPT" onMismatch="DENY"/>

</Console>

<RollingFile name="infoLog" fileName="${logPath}/multidb_info.log"

filePattern="${logPath}/multidb_info-%d{yyyy-MM-dd}.log" append="true" immediateFlush="true">

<PatternLayout pattern="%d [%t] %-5p %c - %m%n" />

<TimeBasedTriggeringPolicy />

<Policies>

<SizeBasedTriggeringPolicy size="10 MB"/>

</Policies>

<DefaultRolloverStrategy max="30"/>

<Filters>

<ThresholdFilter level="error" onMatch="DENY" onMismatch="NEUTRAL"/>

<ThresholdFilter level="trace" onMatch="ACCEPT" onMismatch="DENY"/>

</Filters>

</RollingFile>

<RollingFile name="errorLog" fileName="${logPath}/multidb_error.log"

filePattern="${logPath}/multidb_error-%d{yyyy-MM-dd}.log" append="true" immediateFlush="true">

<PatternLayout pattern="%d [%t] %-5p %c - %m%n" />

<TimeBasedTriggeringPolicy />

<Policies>

<SizeBasedTriggeringPolicy size="10 MB"/>

</Policies>

<DefaultRolloverStrategy max="30"/>

<Filters>

<ThresholdFilter level="error" onMatch="ACCEPT" onMismatch="DENY"/>

</Filters>

</RollingFile>

</Appenders>

<Loggers>

<AsyncLogger name="org.springframework.*" level="INFO"/>

<AsyncLogger name="com.rick" level="INFO" additivity="false">

<AppenderRef ref="infoLog" />

<AppenderRef ref="errorLog" />

<AppenderRef ref="Console" />

</AsyncLogger>

<Root level="INFO">

<AppenderRef ref="Console"/>

</Root>

</Loggers>

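</Configuration>

At first I followed the single-data-source approach and tried to build each DruidDataSource like this:

public DataSource createDataSource(Environment environment, String prefix) {
    return DruidDataSourceBuilder.create().build(environment, prefix);
}

Here prefix is something like spring.custom.datasource.db1. When I ran it, however, the basic properties of the resulting DruidDataSource (driverClassName, url, username and password) had not been assigned, so an exception was thrown as soon as a connection was created. Only a DruidDataSource built with the @ConfigurationProperties annotation gets these values assigned correctly, as in the code below.

@Bean("db1")
@ConfigurationProperties(prefix = "spring.custom.datasource.db1")
public DataSource dataSource(Environment environment) {
    DruidDataSource ds = DruidDataSourceBuilder.create().build();
    return ds;
}

Building a DruidDataSource this way is very concise, but it is hard-coded: the number of DruidDataSources is fixed and they cannot be built dynamically, so it does not meet our needs. The article linked above builds its data sources as follows: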

public DataSource buildDataSource(Map<String, Object> dsMap) {
    Object type = dsMap.get("type");
    if (type == null) {
        type = DATASOURCE_TYPE_DEFAULT; // fall back to the default DataSource type
    }
    Class<? extends DataSource> dataSourceType;
    try {
        dataSourceType = (Class<? extends DataSource>) Class.forName((String) type);
        String driverClassName = dsMap.get("driverClassName").toString();
        String url = dsMap.get("url").toString();
        String username = dsMap.get("username").toString();
        String password = dsMap.get("password").toString();
        DataSourceBuilder factory = DataSourceBuilder.create().driverClassName(driverClassName).url(url).username(username).password(password).type(dataSourceType);
        return factory.build();
    } catch (ClassNotFoundException e) {
        e.printStackTrace();
    }
    return null;
}

This builds the most basic kind of DataSource, with driverClassName, url, username and password set, but properties such as initial-size and maxActive are never assigned, so it does not meet our requirements either.

Drawing on that article and on the code druid-spring-boot-starter uses to build a DruidDataSource, we build a DynamicDataSource containing several DruidDataSources as described below.

We first create DynamicDataSource, together with the ContextHolder that stores which DataSource is currently selected:

public class DynamicDataSource extends AbstractRoutingDataSource {

    /**
     * Returns the lookup key of the data source selected for the current thread.
     */
    @Override
    protected Object determineCurrentLookupKey() {
        return DynamicDataSourceContextHolder.getDateSoureType();
    }
}

public class DynamicDataSourceContextHolder {

    /*
     * The selected key is kept in a ThreadLocal. Each thread that uses the variable
     * gets its own independent copy, so a thread can change its copy without
     * affecting the copies held by other threads.
     */
    private static final ThreadLocal<String> CONTEXT_HOLDER = new ThreadLocal<String>();

    /*
     * Ids of all registered data sources, used to check whether a requested id exists.
     */
    public static List<String> datasourceId = new ArrayList<String>();

    /**
     * Sets the data source id for the current thread.
     *
     * @param dateSoureType the data source id
     */
    public static void setDateSoureType(String dateSoureType) {
        CONTEXT_HOLDER.set(dateSoureType);
    }

    /**
     * Returns the data source id of the current thread.
     *
     * @return the data source id, or null if none has been set
     */
    public static String getDateSoureType() {
        return CONTEXT_HOLDER.get();
    }

    /**
     * Clears the data source id of the current thread.
     */
    public static void clearDateSoureType() {
        CONTEXT_HOLDER.remove();
    }

    /**
     * Checks whether a data source with the given id has been registered.
     *
     * @param dateSoureType the data source id
     * @return true if the data source exists
     */
    public static boolean existDateSoure(String dateSoureType) {
        return datasourceId.contains(dateSoureType);
    }
}

I then defined DataSourceAnnotation, which marks the methods that need a data source switch, and the AOP aspect that performs the switch:

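@Retention(RetentionPolicy.RUNTIME)
@Target({ElementType.METHOD, ElementType.TYPE})
@Documented
public @interface DataSourceAnnotation {
}

@Aspect
@Order(-1)
@Component
public class DynamicDataSourceAspect {

    private static final Logger logger = LogManager.getLogger(DynamicDataSourceAspect.class);

    /**
     * Switches the data source before the annotated method runs.
     * The data source id is read from the last argument of the method.
     *
     * @param point the join point of the annotated method
     * @param dataSourceAnnotation the annotation found on the method
     */
    @Before("@annotation(dataSourceAnnotation)")
    public void changeDataSource(JoinPoint point, DataSourceAnnotation dataSourceAnnotation) {
        Object[] methodArgs = point.getArgs();
        // Guard against methods without arguments or with a null id.
        if (methodArgs.length == 0 || methodArgs[methodArgs.length - 1] == null) {
            logger.error("No data source id was passed as the last argument.");
            return;
        }
        String dsId = methodArgs[methodArgs.length - 1].toString();
        if (!DynamicDataSourceContextHolder.existDateSoure(dsId)) {
            // Unknown id: leave the key unset so the default data source is used.
            logger.error("No data source found ...【" + dsId + "】");
            return;
        }
        DynamicDataSourceContextHolder.setDateSoureType(dsId);
    }

    /**
     * Clears the selected data source after the annotated method has finished.
     *
     * @param point the join point of the annotated method
     * @param dataSourceAnnotation the annotation found on the method
     */
    @After("@annotation(dataSourceAnnotation)")
    public void destroyDataSource(JoinPoint point, DataSourceAnnotation dataSourceAnnotation) {
        DynamicDataSourceContextHolder.clearDateSoureType();
    }
}

In the linked article, the data source is switched according to the value attribute of a TargetDataSource annotation placed on the method, as in the service method below: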

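/**
 * Uses a fixed data source.
 *
 * @return the list read from ds1
 */
@TargetDataSource("ds1")
public List<Demo> getListByDs1() {
    return testDao.getListByDs1();
}

Switching this way is very clumsy. With five data sources you need five getList methods; add the methods for inserting and updating data and the service ends up with dozens of interface methods whose logic is identical and which differ only in the annotation value. That is a considerable amount of redundant code.

My approach is to add a data source parameter, dsId, to every method that needs to switch data sources. The changeDataSource method of DynamicDataSourceAspect reads it and switches the data source according to its value. The only requirement is that these service methods carry the DataSourceAnnotation annotation. A method looks like this: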

@Service("studentService")
@Transactional
public class StudentServiceImpl implements StudentService {

    @Autowired
    private StudentMapper studentMapper;

    /**
     * Inserts a student.
     *
     * @param student the student to insert
     * @param dsId the id of the data source to use
     * @return the id of the inserted student
     */
    @Override
    @DataSourceAnnotation
    public int insertStudent(Student student, String dsId) {
        studentMapper.insertStudent(student);
        return student.getId();
    }
}

Note that this way of switching data sources is based purely on my own requirements; readers can adapt it to theirs.
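What the aspect does around each annotated call (validate the id, select it for the current thread, clear it afterwards) can be sketched in plain Java as a scoped helper. This is only an illustration under assumptions: `DataSourceScope`, `register`, `current` and `with` are hypothetical names that do not appear in the project, and unlike the aspect above, which logs an error and lets the default data source be used, this sketch rejects an unknown id with an exception.

```java
import java.util.Set;
import java.util.concurrent.CopyOnWriteArraySet;
import java.util.function.Supplier;

// Hypothetical helper mirroring the before/after logic of the aspect.
class DataSourceScope {
    private static final ThreadLocal<String> CURRENT = new ThreadLocal<>();
    private static final Set<String> KNOWN_IDS = new CopyOnWriteArraySet<>();

    // Make an id known, as the configuration class does for each data source it builds.
    static void register(String dsId) {
        KNOWN_IDS.add(dsId);
    }

    // The id selected for the calling thread, or null if none is selected.
    static String current() {
        return CURRENT.get();
    }

    // Run the action with dsId selected; the selection is always cleared afterwards.
    static <R> R with(String dsId, Supplier<R> action) {
        if (!KNOWN_IDS.contains(dsId)) {
            // Assumption: fail fast instead of silently using the default.
            throw new IllegalArgumentException("Unknown data source: " + dsId);
        }
        CURRENT.set(dsId);
        try {
            return action.get();
        } finally {
            CURRENT.remove();
        }
    }
}
```

Clearing the selection in a finally block matters on pooled threads such as servlet worker threads: a key left behind by one request would otherwise be picked up by the next request served on the same thread.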

Next comes the Druid configuration class, DruidConfig:

@Configuration
@EnableTransactionManagement
public class DruidConfig implements EnvironmentAware {

    private List<String> customDataSourceNames = new ArrayList<>();

    private Environment environment;

    /**
     * @param environment the environment to set
     */
    @Override
    public void setEnvironment(Environment environment) {
        this.environment = environment;
    }

The Environment object is what we use to read the values in application.properties.

Building the DynamicDataSource:

@Bean(name = "dataSource")
@Primary
public AbstractRoutingDataSource dataSource() {
    DynamicDataSource dynamicDataSource = new DynamicDataSource();
    // LinkedHashMap keeps the data sources in the order in which they were built.
    LinkedHashMap<Object, Object> targetDatasources = new LinkedHashMap<>();
    initCustomDataSources(targetDatasources);
    // The first data source that was built becomes the default.
    dynamicDataSource.setDefaultTargetDataSource(targetDatasources.get(customDataSourceNames.get(0)));
    dynamicDataSource.setTargetDataSources(targetDatasources);
    dynamicDataSource.afterPropertiesSet();
    return dynamicDataSource;
}

The target data sources of the DynamicDataSource are stored in a LinkedHashMap so that the Druid monitoring page lists them in the order in which they were built. The initCustomDataSources method builds each DruidDataSource object; its code is as follows:

private void initCustomDataSources(LinkedHashMap<Object, Object> targetDataResources) {
    RelaxedPropertyResolver property =
            new RelaxedPropertyResolver(environment, DATA_SOURCE_PREfIX_CUSTOM);
    String dataSourceNames = property.getProperty(DATA_SOURCE_CUSTOM_NAME);
    if (StringUtils.isEmpty(dataSourceNames)) {
        logger.error("The multiple data source list are empty.");
    } else {
        // Collect the shared spring.datasource.druid.* properties.
        RelaxedPropertyResolver springDataSourceProperty =
                new RelaxedPropertyResolver(environment, "spring.datasource.");
        Map<String, Object> druidPropertiesMaps = springDataSourceProperty.getSubProperties("druid.");
        Map<String, Object> druidValuesMaps = new HashMap<>();
        for (String key : druidPropertiesMaps.keySet()) {
            String druidKey = AppConstants.DRUID_SOURCE_PREFIX + key;
            druidValuesMaps.put(druidKey, druidPropertiesMaps.get(key));
        }
        MutablePropertyValues dataSourcePropertyValue = new MutablePropertyValues(druidValuesMaps);
        for (String dataSourceName : dataSourceNames.split(SEP)) {
            try {
                // Add the properties of this data source under the Druid prefix.
                Map<String, Object> dsMaps = property.getSubProperties(dataSourceName + ".");
                for (String dsKey : dsMaps.keySet()) {
                    if (dsKey.equals("type")) {
                        dataSourcePropertyValue.addPropertyValue("spring.datasource.type", dsMaps.get(dsKey));
                    } else {
                        String druidKey = DRUID_SOURCE_PREFIX + dsKey;
                        dataSourcePropertyValue.addPropertyValue(druidKey, dsMaps.get(dsKey));
                    }
                }
                DataSource ds = dataSourcebuild(dataSourcePropertyValue);
                if (null != ds) {
                    if (ds instanceof DruidDataSource) {
                        DruidDataSource druidDataSource = (DruidDataSource) ds;
                        druidDataSource.setName(dataSourceName);
                        initDruidFilters(druidDataSource);
                    }
                    customDataSourceNames.add(dataSourceName);
                    DynamicDataSourceContextHolder.datasourceId.add(dataSourceName);
                    targetDataResources.put(dataSourceName, ds);
                    // Report success only when a data source was actually built.
                    logger.info("Data source initialization 【" + dataSourceName + "】 successfully ...");
                }
            } catch (Exception e) {
                logger.error("Data source initialization【" + dataSourceName + "】 failed ...", e);
            }
        }
    }
}

The method works in the following steps:

1) Read the value of spring.custom.datasource.name (the list of data sources) from application.properties to find out whether any data sources are configured and what they are called. If the list is empty, no data source is built and the method returns.

2) Read the properties in application.properties that start with spring.datasource.druid. (in this project, mainly those from spring.datasource.druid.initial-size to spring.datasource.druid.connectionProperties) and put them into a MutablePropertyValues object, dataSourcePropertyValue. Inside dataSourcePropertyValue, the key of each Druid property is the same as in application.properties.

3) Split the list read in step 1 on commas to get the data source names, then loop over them. On each iteration, read the properties that start with the prefix spring.custom.datasource.[data source name]. The type property is mapped to spring.datasource.type; every other property is mapped to spring.datasource.druid. + [property name] and added to dataSourcePropertyValue. (Only DruidDataSource is handled here; adjust this if you need to build other kinds of DataSource.)

4) Call the dataSourcebuild method to build the DataSource. The dataSourcebuild method is shown below:

/**
 * Creates a data source.
 *
 * @param dataSourcePropertyValue the properties needed to create the data source
 * @return the created DataSource, or null if none could be created
 */
public DataSource dataSourcebuild(MutablePropertyValues dataSourcePropertyValue) {
    DataSource ds = null;
    if (dataSourcePropertyValue.isEmpty()) {
        return ds;
    }
    String type = dataSourcePropertyValue.get("spring.datasource.type").toString();
    if (StringUtils.isNotEmpty(type)) {
        if (StringUtils.equals(type, DruidDataSource.class.getTypeName())) {
            ds = new DruidDataSource();
            // Bind every spring.datasource.druid.* value to the matching DruidDataSource property.
            RelaxedDataBinder dataBinder = new RelaxedDataBinder(ds, DRUID_SOURCE_PREFIX);
            dataBinder.setConversionService(conversionService);
            dataBinder.setIgnoreInvalidFields(false);
            dataBinder.setIgnoreNestedProperties(false);
            dataBinder.setIgnoreUnknownFields(true);
            dataBinder.bind(dataSourcePropertyValue);
        }
    }
    return ds;
}

The dataSourcebuild method creates the DataSource object that corresponds to the value of spring.datasource.type in dataSourcePropertyValue. (Only DruidDataSource is handled here; add further branches if you need other data sources.) It then binds the new DruidDataSource object to dataSourcePropertyValue using the spring.datasource.druid prefix, and RelaxedDataBinder automatically copies the Druid properties in dataSourcePropertyValue onto the DruidDataSource object (through RelaxedDataBinder's applyPropertyValues method).

In addition, DruidDataSource builds its own list of Filter objects from the value of the spring.datasource.druid.filters property; see the setFilters method of DruidAbstractDataSource:

public abstract class DruidAbstractDataSource extends WrapperAdapter implements DruidAbstractDataSourceMBean, DataSource, DataSourceProxy, Serializable {

...................

public void setFilters(String filters) throws SQLException {

if (filters != null && filters.startsWith("!")) {

filters = filters.substring(1);

this.clearFilters();

}

this.addFilters(filters);

}

public void addFilters(String filters) throws SQLException {

if (filters == null || filters.length() == 0) {

return;

}

String[] filterArray = filters.split("\\,");

for (String item : filterArray) {

FilterManager.loadFilter(this.filters, item.trim());

}

}

public class FilterManager {

.........

public static void loadFilter(List<Filter> filters, String filterName) throws SQLException {

if (filterName.length() == 0) {

return;

}

String filterClassNames = getFilter(filterName);

if (filterClassNames != null) {

for (String filterClassName : filterClassNames.split(",")) {

if (existsFilter(filters, filterClassName)) {

continue;

}

Class<?> filterClass = Utils.loadClass(filterClassName);

if (filterClass == null) {

LOG.error("load filter error, filter not found : " + filterClassName);

continue;

}

Filter filter;

try {

filter = (Filter) filterClass.newInstance();

} catch (ClassCastException e) {

LOG.error("load filter error.", e);

continue;

} catch (InstantiationException e) {

throw new SQLException("load managed jdbc driver event listener error. " + filterName, e);

} catch (IllegalAccessException e) {

throw new SQLException("load managed jdbc driver event listener error. " + filterName, e);

}

filters.add(filter);

}

return;

}

if (existsFilter(filters, filterName)) {

return;

}

Class<?> filterClass = Utils.loadClass(filterName);

if (filterClass == null) {

LOG.error("load filter error, filter not found : " + filterName);

return;

}

try {

Filter filter = (Filter) filterClass.newInstance();

filters.add(filter);

} catch (Exception e) {

throw new SQLException("load managed jdbc driver event listener error. " + filterName, e);

}

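}

Once the properties of the DruidDataSource object have been assigned, its filters property holds the list of Filter objects that corresponds to spring.datasource.druid.filters. In this project the list contains a StatFilter, a WallFilter and a Log4j2Filter. These objects, however, have not yet been bound to the filter properties in application.properties; we have to bind them ourselves.

5) After dataSourcebuild returns the DruidDataSource object, initCustomDataSources sets its name property to the data source name (so that the data sources are easy to tell apart on the Druid monitoring page) and calls initDruidFilters to assign values to the filters of the DruidDataSource built in step 4. The code of initDruidFilters is as follows: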

private void initDruidFilters(DruidDataSource druidDataSource) {
    List<Filter> filters = druidDataSource.getProxyFilters();
    RelaxedPropertyResolver filterProperty =
            new RelaxedPropertyResolver(environment, "spring.datasource.druid.filter.");
    String filterNames = environment.getProperty("spring.datasource.druid.filters");
    String[] filterNameArray = filterNames.split("\\,");
    for (int i = 0; i < filterNameArray.length; i++) {
        String filterName = filterNameArray[i];
        // The filters are assumed to be in the same order as the names in spring.datasource.druid.filters.
        Filter filter = filters.get(i);
        Map<String, Object> filterValueMap = filterProperty.getSubProperties(filterName + ".");
        Object filterEnabled = filterValueMap.get(ENABLED_ATTRIBUTE_NAME);
        // Bind the properties only when the filter is explicitly enabled.
        if (filterEnabled != null && "true".equals(filterEnabled.toString())) {
            MutablePropertyValues propertyValues = new MutablePropertyValues(filterValueMap);
            RelaxedDataBinder dataBinder = new RelaxedDataBinder(filter);
            dataBinder.bind(propertyValues);
        }
    }
}

The initDruidFilters method reads the list of filter names, filterNames, from the spring.datasource.druid.filters property and loops over it. For each filter it reads the corresponding property values, builds a MutablePropertyValues object from them and binds it to the matching Filter object, which assigns the values.

6) Finally, the DruidDataSource object is put into targetDataResources and the data source name is added to the id list of DynamicDataSourceContextHolder, where it is used for the check made when switching data sources.
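Steps 1 to 3 above amount to reading a name list and remapping keys from one prefix to another. The sketch below shows that remapping with java.util.Properties only, so it runs without Spring. It is an illustration, not the project's code: `DataSourcePropertyMapper` and its `map` method are hypothetical names, and unlike initCustomDataSources, which reuses one MutablePropertyValues object across iterations, it builds a separate map for every data source.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Properties;

// Hypothetical helper; illustrates the key remapping only.
class DataSourcePropertyMapper {
    static final String CUSTOM_PREFIX = "spring.custom.datasource.";
    static final String DRUID_PREFIX = "spring.datasource.druid.";

    // Returns one merged key/value map per data source name, in declaration order.
    static Map<String, Map<String, String>> map(Properties props) {
        Map<String, Map<String, String>> result = new LinkedHashMap<>();
        // Step 1: read the comma-separated list of data source names.
        String names = props.getProperty(CUSTOM_PREFIX + "name");
        if (names == null || names.trim().isEmpty()) {
            return result;
        }
        // Step 2: collect the shared Druid settings, keys unchanged.
        Map<String, String> shared = new LinkedHashMap<>();
        for (String key : props.stringPropertyNames()) {
            if (key.startsWith(DRUID_PREFIX)) {
                shared.put(key, props.getProperty(key));
            }
        }
        // Step 3: remap the keys of each data source onto the Druid prefix.
        for (String rawName : names.split(",")) {
            String name = rawName.trim();
            if (name.isEmpty()) {
                continue;
            }
            Map<String, String> merged = new LinkedHashMap<>(shared);
            String ownPrefix = CUSTOM_PREFIX + name + ".";
            for (String key : props.stringPropertyNames()) {
                if (!key.startsWith(ownPrefix)) {
                    continue;
                }
                String suffix = key.substring(ownPrefix.length());
                String target = suffix.equals("type")
                        ? "spring.datasource.type"
                        : DRUID_PREFIX + suffix;
                merged.put(target, props.getProperty(key));
            }
            result.put(name, merged);
        }
        return result;
    }
}
```

Building a fresh map per data source means a property set only for db1 (for example its type) cannot leak into db2; with a shared object, values from an earlier iteration stay in place unless the next data source overwrites them.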

Once initCustomDataSources has built the data sources, the dataSource() method uses the first DruidDataSource as the default DataSource, which completes the construction of the DynamicDataSource.
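The binding performed in steps 4 and 5 (property keys such as max-active ending up in setters such as setMaxActive) can be pictured with a few lines of reflection. This is a deliberately simplified sketch and not how RelaxedDataBinder is implemented: `RelaxedBinderSketch` and `PoolSettings` are hypothetical classes, only String, int, long and boolean setters are handled, and keys without a matching setter are skipped, similar in spirit to setIgnoreUnknownFields(true).

```java
import java.lang.reflect.Method;
import java.util.Map;

// Hypothetical, simplified binder; not Spring Boot's RelaxedDataBinder.
class RelaxedBinderSketch {

    // Converts "max-active" to "maxActive".
    static String toCamelCase(String kebab) {
        StringBuilder sb = new StringBuilder();
        boolean upperNext = false;
        for (char c : kebab.toCharArray()) {
            if (c == '-') {
                upperNext = true;
            } else if (upperNext) {
                sb.append(Character.toUpperCase(c));
                upperNext = false;
            } else {
                sb.append(c);
            }
        }
        return sb.toString();
    }

    // Binds every value whose key starts with prefix to a matching setter; returns the number bound.
    static int bind(Object target, String prefix, Map<String, String> values) {
        int bound = 0;
        for (Map.Entry<String, String> entry : values.entrySet()) {
            if (!entry.getKey().startsWith(prefix)) continue;
            String property = toCamelCase(entry.getKey().substring(prefix.length()));
            if (property.isEmpty()) continue;
            String setter = "set" + Character.toUpperCase(property.charAt(0)) + property.substring(1);
            for (Method method : target.getClass().getMethods()) {
                if (!method.getName().equals(setter) || method.getParameterCount() != 1) continue;
                Object converted = convert(entry.getValue(), method.getParameterTypes()[0]);
                if (converted == null) continue; // unsupported parameter type
                try {
                    method.invoke(target, converted);
                } catch (ReflectiveOperationException ex) {
                    throw new IllegalStateException("Cannot bind " + entry.getKey(), ex);
                }
                bound++;
                break;
            }
        }
        return bound;
    }

    // Converts the raw text to the setter's parameter type, or returns null if unsupported.
    static Object convert(String raw, Class<?> type) {
        if (type == String.class) return raw;
        if (type == int.class || type == Integer.class) return Integer.valueOf(raw.trim());
        if (type == long.class || type == Long.class) return Long.valueOf(raw.trim());
        if (type == boolean.class || type == Boolean.class) return Boolean.valueOf(raw.trim());
        return null;
    }
}

// Hypothetical target object standing in for a connection pool.
class PoolSettings {
    private int maxActive;
    private boolean testWhileIdle;
    private String url;

    public void setMaxActive(int maxActive) { this.maxActive = maxActive; }
    public void setTestWhileIdle(boolean testWhileIdle) { this.testWhileIdle = testWhileIdle; }
    public void setUrl(String url) { this.url = url; }
    public int getMaxActive() { return maxActive; }
    public boolean isTestWhileIdle() { return testWhileIdle; }
    public String getUrl() { return url; }
}
```

The same mechanism explains why the filters need a second binding pass: the filter objects are separate beans with their own setters, so binding the data source does not reach them.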

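The StatViewServlet and the Web Stat Filter do not have to be built by hand. DruidWebStatFilterConfiguration and DruidStatViewServletConfiguration in druid-spring-boot-starter pick up the corresponding prefixes and build them automatically; all that is needed is to set spring.datasource.druid.web-stat-filter.enabled and spring.datasource.druid.stat-view-servlet.enabled to true in application.properties.

With the Druid configuration class in place, we add the configuration class for the SessionFactory, SessionFactoryConfig: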

@Configuration
@EnableTransactionManagement
@MapperScan("com.rick.mappers")
public class SessionFactoryConfig {

    private static Logger logger = LogManager.getLogger(SessionFactoryConfig.class);

    @Autowired
    private DataSource dataSource;

    private String typeAliasPackage = "com.rick.entities";

    /**
     * Creates the SqlSessionFactoryBean instance,
     * sets the mapper locations and the type alias package,
     * and sets the data source.
     *
     * @return the SqlSessionFactoryBean, or null if the mapper files cannot be read
     */
    @Bean(name = "sqlSessionFactory")
    public SqlSessionFactoryBean createSqlSessionFactoryBean() {
        logger.info("createSqlSessionFactoryBean method");
        try {
            ResourcePatternResolver resolver = new PathMatchingResourcePatternResolver();
            SqlSessionFactoryBean sqlSessionFactoryBean = new SqlSessionFactoryBean();
            sqlSessionFactoryBean.setDataSource(dataSource);
            sqlSessionFactoryBean.setMapperLocations(resolver.getResources("classpath:com/rick/mappers/*.xml"));
            sqlSessionFactoryBean.setTypeAliasesPackage(typeAliasPackage);
            return sqlSessionFactoryBean;
        } catch (IOException ex) {
            logger.error("Error happens when getting config files." + ExceptionUtils.getMessage(ex));
        }
        return null;
    }

    @Bean
    public SqlSessionTemplate sqlSessionTemplate(SqlSessionFactory sqlSessionFactory) {
        return new SqlSessionTemplate(sqlSessionFactory);
    }

    @Bean
    public PlatformTransactionManager annotationDrivenTransactionManager() {
        return new DataSourceTransactionManager(dataSource);
    }
}

SessionFactoryConfig is essentially the same as the SessionFactory configuration for a single data source, except that the DynamicDataSource takes the place of the single data source.

To test the multi-data-source integration, I wrote a service that inserts Student records and a REST API on top of it. The code of StudentService is as follows:

public interface StudentService {

    /**
     * Inserts a student.
     *
     * @param student the student to insert
     * @param dsId the data source id
     * @return the id of the inserted student
     */
    int insertStudent(Student student, String dsId);

    /**
     * Finds a student by id.
     *
     * @param id the student id
     * @param dsId the data source id
     * @return the student if it exists, otherwise null
     */
    Student findStudentById(int id, String dsId);
}

@Service("studentService")
@Transactional
public class StudentServiceImpl implements StudentService {

    @Autowired
    private StudentMapper studentMapper;

    /**
     * Inserts a student.
     *
     * @param student the student to insert
     * @param dsId the data source id
     * @return the id of the inserted student
     */
    @Override
    @DataSourceAnnotation
    public int insertStudent(Student student, String dsId) {
        studentMapper.insertStudent(student);
        return student.getId();
    }

    /**
     * Finds a student by id.
     *
     * @param id the student id
     * @param dsId the data source id
     * @return the student if it exists, otherwise null
     */
    @Override
    @DataSourceAnnotation
    public Student findStudentById(int id, String dsId) {
        return studentMapper.findStudentById(id);
    }
}

The code of the REST API is as follows:

@RestController
public class ApiController {

    @Autowired
    private StudentService studentService;

    /**
     * Adds a student and then reads it back.
     *
     * @param params the JSON body describing the student to add
     * @return Success and the stored student on success, Fail and an error message on failure
     */
    @RequestMapping(value = "/api/addStudent", produces = "application/json", method = RequestMethod.POST)
    public ResponseEntity<String> addStudent(@RequestBody String params) {
        ResponseEntity<String> response = null;
        HttpHeaders headers = new HttpHeaders();
        headers.setContentType(MediaType.APPLICATION_JSON_UTF8);
        Map<String, Object> resultMap = new HashMap<>();
        try {
            JSONObject paramJsonObj = JSON.parseObject(params);
            Student student = new Student();
            student.setName(paramJsonObj.getString(NAME_ATTRIBUTE_NAME));
            student.setClassName(paramJsonObj.getString(CLASS_ATTRIBUTE_NAME));
            String dbType = paramJsonObj.getString(DB_TYPE);
            // Fall back to db1 when the request does not name a data source.
            if (StringUtils.isEmpty(dbType)) {
                dbType = "db1";
            }
            int id = studentService.insertStudent(student, dbType);
            if (id <= 0) {
                String errorMessage = "Error happens when insert student, the insert operation failed.";
                logger.error(errorMessage);
                resultMap.put(RESULT_CONSTANTS, FAIL_RESULT);
                resultMap.put(MESSAGE_CONSTANTS, errorMessage);
                String resultStr = JSON.toJSONString(resultMap);
                return new ResponseEntity<>(resultStr, headers, HttpStatus.INTERNAL_SERVER_ERROR);
            } else {
                resultMap.put(RESULT_CONSTANTS, SUCCESS_RESULT);
                // Read the student back from the same data source.
                Student newStudent = studentService.findStudentById(id, dbType);
                Map<String, String> studentMap = new HashMap<>();
                studentMap.put(ID_ATTRIBUTE_NAME, Integer.toString(id));
                studentMap.put(NAME_ATTRIBUTE_NAME, newStudent.getName());
                studentMap.put(CLASS_ATTRIBUTE_NAME, newStudent.getClassName());
                studentMap.put(CRAETE_DATE_ATTRIBUTE_NAME, sdf.format(newStudent.getCreateDate()));
                studentMap.put(UPDATE_DATE_ATTRIBUTE_NAME, sdf.format(newStudent.getUpdateDate()));
                resultMap.put(STUDENT_NODE_NAME, studentMap);
                String resultStr = JSON.toJSONString(resultMap);
                response = new ResponseEntity<>(resultStr, headers, HttpStatus.OK);
            }
        } catch (Exception ex) {
            response = processException("insert student", ex, headers);
        }
        return response;
    }

The addStudent method uses the dbType value in the body of the POST request to decide which data source to switch to. (In a real application the target data source could be derived from the request data.) The method first inserts a student record and then looks the student up by the id generated during the insert.

Add the corresponding mapper interface, StudentMapper, and the studentMapper.xml file:

@Mapper
public interface StudentMapper {

    Student findStudentById(int id);

    int insertStudent(Student student);
}

<!DOCTYPE mapper
    PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN"
    "http://mybatis.org/dtd/mybatis-3-mapper.dtd">
<mapper namespace="com.rick.mappers.StudentMapper">
    <resultMap id="studentResultMap" type="com.rick.entities.Student">
        <id column="ID" property="id" />
        <result column="NAME" property="name" />
        <result column="CLASS_NAME" property="className" />
        <result column="CREATE_DATE" property="createDate" />
        <result column="UPDATE_DATE" property="updateDate" />
    </resultMap>
    <select id="findStudentById" resultMap="studentResultMap">
        select ID,NAME,CLASS_NAME,CREATE_DATE,UPDATE_DATE from student WHERE ID=#{id}
    </select>
    <insert id="insertStudent" parameterType="com.rick.entities.Student" useGeneratedKeys="true" keyProperty="id">
        insert into student(NAME,CLASS_NAME,CREATE_DATE,UPDATE_DATE) values(#{name},#{className},CURRENT_TIMESTAMP(),CURRENT_TIMESTAMP())
    </insert>
</mapper>

Modify pom.xml so that the mapper XML files are included in the build:

<build>

......

<resources>

<resource>

<directory>src/main/java</directory>

<includes>

<include>**/*.xml</include>

</includes>

</resource>

......

</resources>

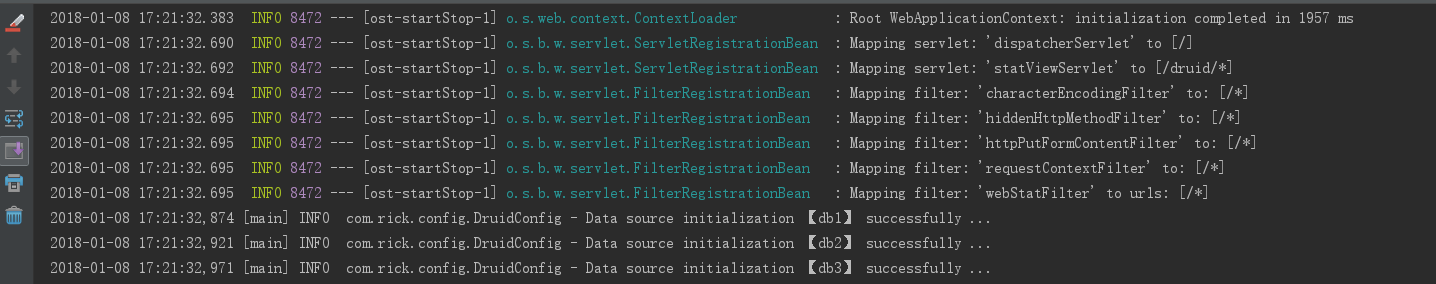

</build>启动SpringBoot项目,从启动日志中我们可以看到db1,db2,db3 DataSource都成功构建

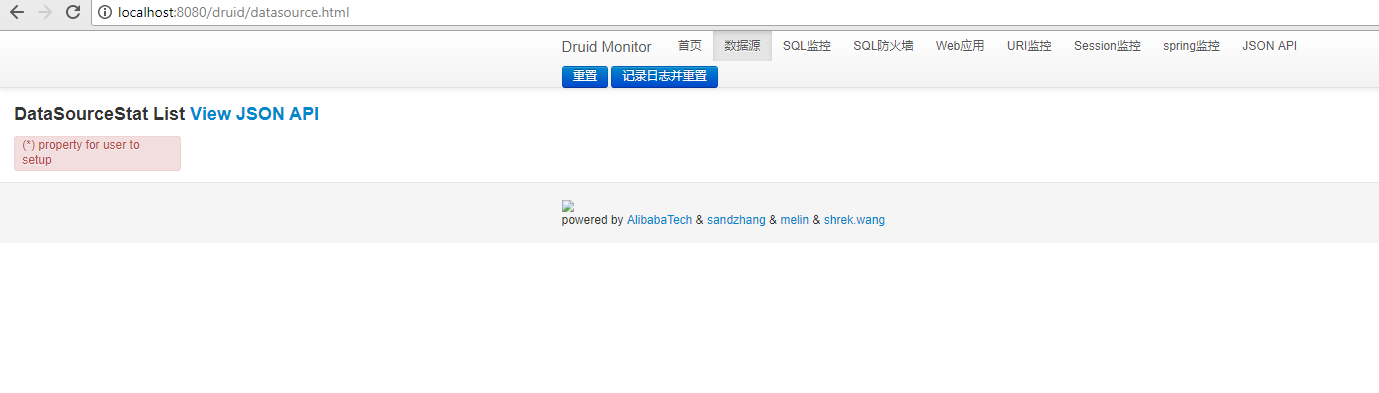

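Open the Druid monitoring page (http://localhost:8080/druid). The data source page does not list any data source.

This is because the data source information shown by the Druid monitor comes from the data sources registered with the MBean server, and that registration only happens the first time a data source is used, when a JDBC Connection is obtained. See the code below: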

public class DruidDataSource extends DruidAbstractDataSource implements DruidDataSourceMBean, ManagedDataSource, Referenceable, Closeable, Cloneable, ConnectionPoolDataSource, MBeanRegistration {

..........

public DruidPooledConnection getConnection(long maxWaitMillis) throws SQLException {

init();

.......

}

public void init() throws SQLException {

.........

registerMbean();

.........

}

public void registerMbean() {

if (!mbeanRegistered) {

AccessController.doPrivileged(new PrivilegedAction<Object>() {

@Override

public Object run() {

ObjectName objectName = DruidDataSourceStatManager.addDataSource(DruidDataSource.this,

DruidDataSource.this.name);

DruidDataSource.this.setObjectName(objectName);

DruidDataSource.this.mbeanRegistered = true;

return null;

}

});

}

}

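Because the Spring Boot application does not access any database while it starts, no Connection is created, so no data source is registered and the monitoring page shows none.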
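The effect can be reproduced in miniature. The sketch below is a plain-Java illustration of lazy registration, not Druid code: `LazyPoolSketch` and its static `REGISTRY` list are hypothetical stand-ins for DruidDataSource and for the registry the monitoring page reads from.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a pool that registers itself lazily.
class LazyPoolSketch {
    // Stand-in for the registry that the monitoring page reads from.
    static final List<String> REGISTRY = new ArrayList<>();

    private final String name;
    private boolean initialized;

    LazyPoolSketch(String name) {
        this.name = name;
    }

    // Registers the pool once; later calls do nothing.
    synchronized void init() {
        if (initialized) {
            return;
        }
        REGISTRY.add(name);
        initialized = true;
    }

    // The first call triggers init(), and with it the registration.
    String getConnection() {
        init();
        return "connection from " + name;
    }
}
```

In the excerpt above, DruidDataSource.init() is a public method, so if the data sources should appear on the monitoring page right after startup, one option would be to call init() on each DruidDataSource once it has been built. That is a suggestion rather than something the project does.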

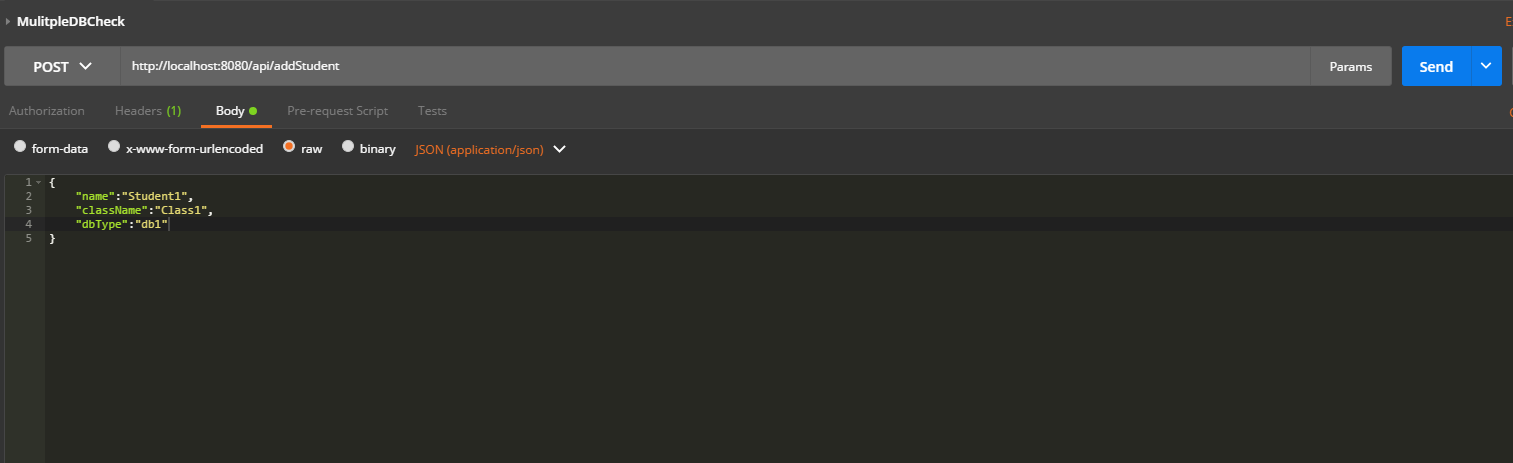

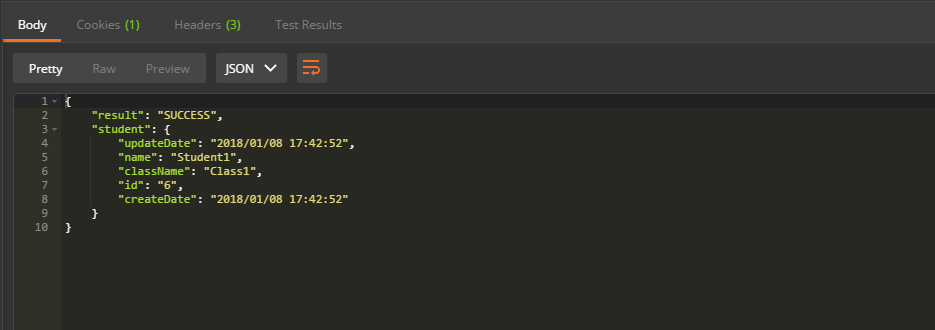

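We now insert student records three times, using a different data source each time.

The requests for the second and third operations are similar to the first: the student names are Student2 and Student3, the class names are Class2 and Class3, and dbType is db2 and db3 respectively. The results are shown below.

The responses to the second and third operations are similar to the first.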

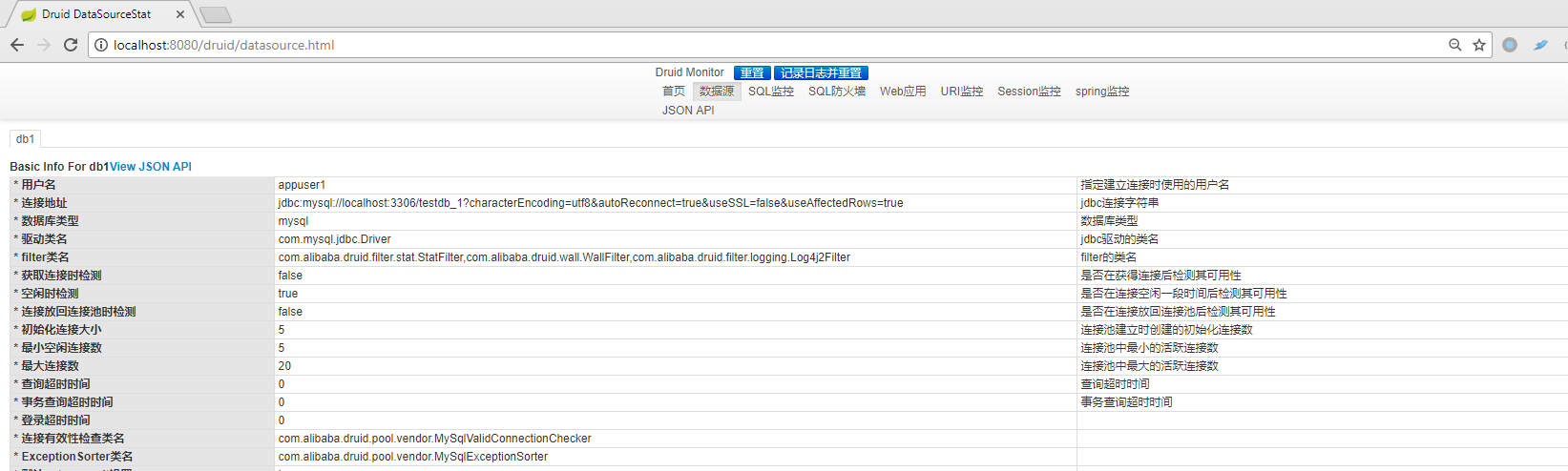

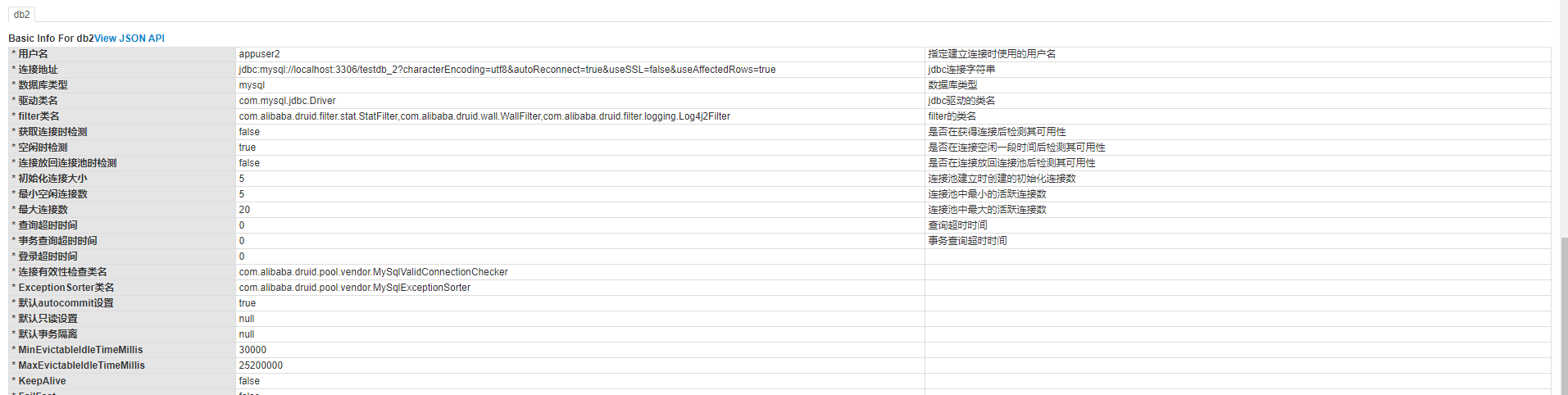

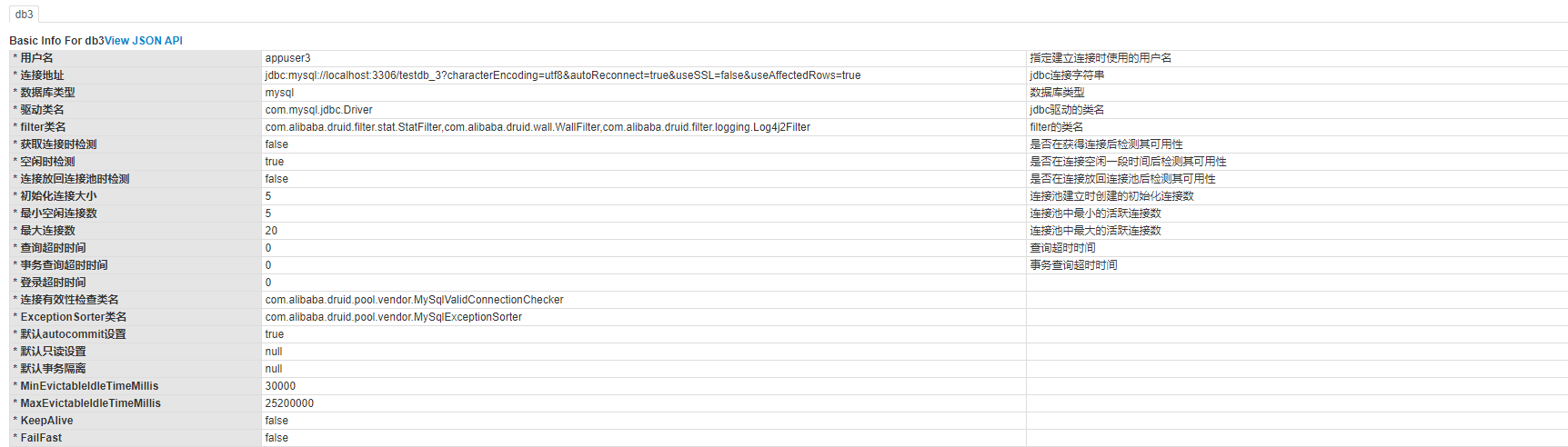

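If we look at the Druid data source monitoring page again, all three data sources have now been registered.

The name on each data source tab is the value of the name property we set on the DruidDataSource object.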

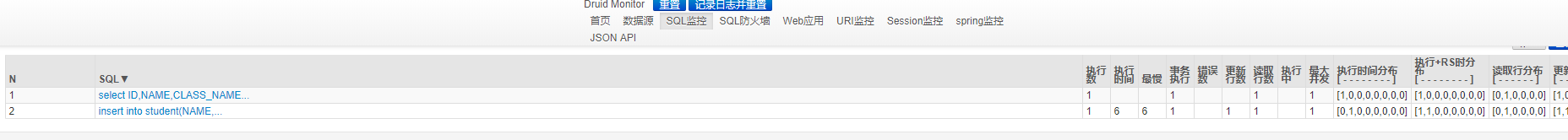

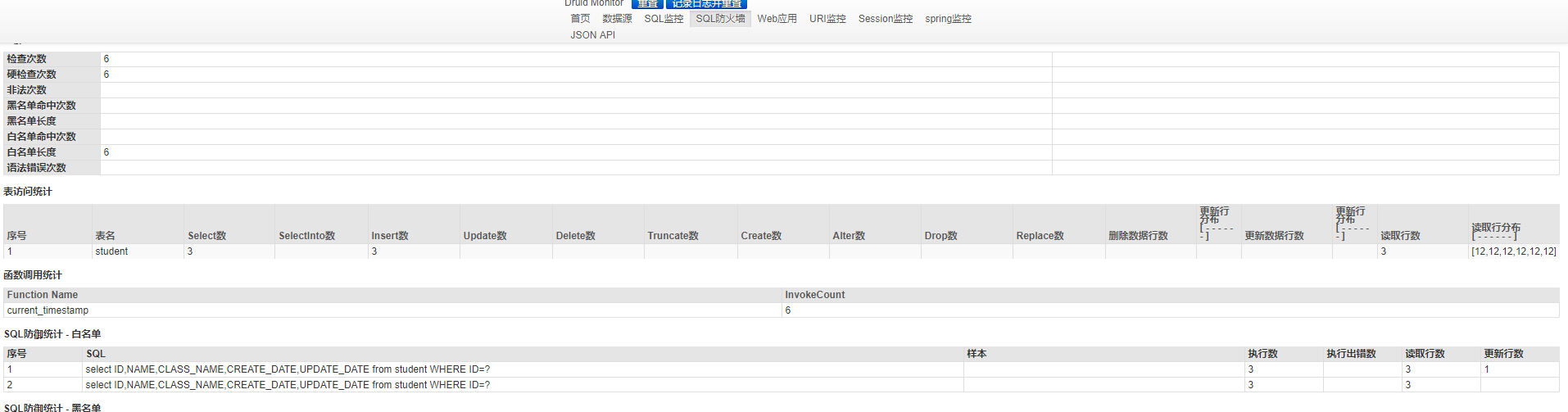

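Click the [View] button in the [sql列表] (SQL list) row of any data source to see the SQL statements executed by the API calls.

Open [SQL防火墙] (SQL firewall) to see the combined statistics for the three data sources.

All of the project code has been uploaded to GitHub at

https://github.com/yosaku01/SpringBootDruidMultiDB

Readers who find any part of this post unclear can download the code and have a look.

Finally, this project is only a simple demo of integrating Spring Boot, MyBatis and Druid with multiple data sources. Some of my understanding may be inaccurate and some of the practices may be wrong; if you have comments, please point them out. I would be very grateful.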