System

[root@kubernetes-build etcd]# cat /etc/redhat-release

CentOS Linux release 7.4.1708 (Core)

Hosts

10.39.47.28 slave-28

10.39.47.23 slave-23

10.39.47.27 master-27

Set the hostnames

hostnamectl set-hostname master-27

hostnamectl set-hostname slave-23

hostnamectl set-hostname slave-28

Install Docker

yum install docker -y

swapoff -a

setenforce 0

[root@slave-28 ~]# cat /etc/docker/daemon.json

{"registry-mirrors": ["http://579fe187.m.daocloud.io","https://pee6w651.mirror.aliyuncs.com"],"insecure-registries": ["10.39.47.22"]}

systemctl start docker

Note: docker login must be run on every machine.

[root@slave-28 ~]# docker login 10.39.47.22

Username: admin

Password:

Login Succeeded

Create the certificates

Install cfssl

mkdir -p /opt/local/cfssl

cd /opt/local/cfssl

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

mv cfssl_linux-amd64 cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

mv cfssljson_linux-amd64 cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 cfssl-certinfo

chmod +x *

[root@master-27 cfssl]# ls -l

total 18808

-rwxr-xr-x 1 root root 10376657 Mar 30 2016 cfssl

-rwxr-xr-x 1 root root 6595195 Mar 30 2016 cfssl-certinfo

-rwxr-xr-x 1 root root 2277873 Mar 30 2016 cfssljson

Create the CA certificate configuration

mkdir /opt/ssl

cd /opt/ssl

# config.json file

vi config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

# csr.json file

vi csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShenZhen",

"L": "ShenZhen",

"O": "k8s",

"OU": "System"

}

]

}

Generate the CA certificate and private key

cd /opt/ssl/

/opt/local/cfssl/cfssl gencert -initca csr.json | /opt/local/cfssl/cfssljson -bare ca

[root@master-27 ssl]# /opt/local/cfssl/cfssl gencert -initca csr.json | /opt/local/cfssl/cfssljson -bare ca

2018/06/16 15:00:33 [INFO] generating a new CA key and certificate from CSR

2018/06/16 15:00:33 [INFO] generate received request

2018/06/16 15:00:33 [INFO] received CSR

2018/06/16 15:00:33 [INFO] generating key: rsa-2048

2018/06/16 15:00:33 [INFO] encoded CSR

2018/06/16 15:00:33 [INFO] signed certificate with serial number 159485179627734853801933465778297599203045299990

[root@master-27 ssl]# ls -l

total 20

-rw-r--r-- 1 root root 1005 Jun 16 15:00 ca.csr

-rw------- 1 root root 1679 Jun 16 15:00 ca-key.pem

-rw-r--r-- 1 root root 1363 Jun 16 15:00 ca.pem

-rw-r--r-- 1 root root 292 Jun 16 14:59 config.json

-rw-r--r-- 1 root root 210 Jun 16 15:00 csr.json

Distribute the certificates

mkdir -p /etc/kubernetes/ssl/

[root@master-27 ssl]# cp * /etc/kubernetes/ssl/

[root@master-27 ssl]# ls /etc/kubernetes/ssl

ca.csr ca-key.pem ca.pem config.json csr.json

[root@master-27 ssl]# scp * root@10.39.47.28:/etc/kubernetes/ssl/

Warning: Permanently added '10.39.47.28' (ECDSA) to the list of known hosts.

root@10.39.47.28's password:

ca.csr 100% 1005 2.5MB/s 00:00

ca-key.pem 100% 1679 6.1MB/s 00:00

ca.pem 100% 1363 5.4MB/s 00:00

config.json 100% 292 1.4MB/s 00:00

csr.json 100% 210 1.0MB/s 00:00

[root@master-27 ssl]# scp * root@10.39.47.23:/etc/kubernetes/ssl/

Warning: Permanently added '10.39.47.23' (ECDSA) to the list of known hosts.

root@10.39.47.23's password:

ca.csr 100% 1005 2.1MB/s 00:00

ca-key.pem 100% 1679 5.0MB/s 00:00

ca.pem 100% 1363 4.8MB/s 00:00

config.json 100% 292 1.2MB/s 00:00

csr.json

Generate the etcd cluster certificate

cd /opt/ssl/

vi etcd-csr.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"10.39.47.28",

"10.39.47.27",

"10.39.47.23"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShenZhen",

"L": "ShenZhen",

"O": "k8s",

"OU": "System"

}

]

}

# Generate the etcd certificate and private key

/opt/local/cfssl/cfssl gencert -ca=/opt/ssl/ca.pem \

-ca-key=/opt/ssl/ca-key.pem \

-config=/opt/ssl/config.json \

-profile=kubernetes etcd-csr.json | /opt/local/cfssl/cfssljson -bare etcd

[root@master-27 ssl]# ls -l etcd*

-rw-r--r-- 1 root root 1066 Jun 16 15:07 etcd.csr

-rw-r--r-- 1 root root 295 Jun 16 15:07 etcd-csr.json

-rw------- 1 root root 1675 Jun 16 15:07 etcd-key.pem

-rw-r--r-- 1 root root 1440 Jun 16 15:07 etcd.pem

cp etcd* /etc/kubernetes/ssl/

Distribute the certificates

[root@master-27 ssl]# scp * root@10.39.47.23:/etc/kubernetes/ssl/

Warning: Permanently added '10.39.47.23' (ECDSA) to the list of known hosts.

root@10.39.47.23's password:

ca.csr 100% 1005 3.2MB/s 00:00

ca-key.pem 100% 1679 6.0MB/s 00:00

ca.pem 100% 1363 5.6MB/s 00:00

config.json 100% 292 26.0KB/s 00:00

csr.json 100% 210 893.4KB/s 00:00

etcd.csr 100% 1066 4.0MB/s 00:00

etcd-csr.json 100% 295 1.3MB/s 00:00

etcd-key.pem 100% 1675 7.0MB/s 00:00

etcd.pem 100% 1440 6.7MB/s 00:00

[root@master-27 ssl]# scp * root@10.39.47.28:/etc/kubernetes/ssl/

Warning: Permanently added '10.39.47.28' (ECDSA) to the list of known hosts.

root@10.39.47.28's password:

ca.csr 100% 1005 2.4MB/s 00:00

ca-key.pem 100% 1679 5.5MB/s 00:00

ca.pem 100% 1363 4.9MB/s 00:00

config.json 100% 292 1.3MB/s 00:00

csr.json 100% 210 1.0MB/s 00:00

etcd.csr 100% 1066 4.5MB/s 00:00

etcd-csr.json 100% 295 1.3MB/s 00:00

etcd-key.pem 100% 1675 7.1MB/s 00:00

etcd.pem 100% 1440 6.3MB/s 00:00

Create the admin certificate

kubectl talks to kube-apiserver over its secure port, so it needs a TLS certificate and private key for that connection.

cd /opt/ssl/

vi admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShenZhen",

"L": "ShenZhen",

"O": "system:masters",

"OU": "System"

}

]

}

# Generate the admin certificate and private key

cd /opt/ssl/

/opt/local/cfssl/cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/opt/ssl/config.json \

-profile=kubernetes admin-csr.json | /opt/local/cfssl/cfssljson -bare admin

# Check the generated files

[root@master-27 ssl]# ls -l

total 52

-rw-r--r-- 1 root root 1013 Jun 16 16:23 admin.csr

-rw-r--r-- 1 root root 231 Jun 16 16:22 admin-csr.json

-rw------- 1 root root 1675 Jun 16 16:23 admin-key.pem

-rw-r--r-- 1 root root 1407 Jun 16 16:23 admin.pem

-rw-r--r-- 1 root root 1005 Jun 16 15:00 ca.csr

-rw------- 1 root root 1679 Jun 16 15:00 ca-key.pem

-rw-r--r-- 1 root root 1363 Jun 16 15:00 ca.pem

-rw-r--r-- 1 root root 292 Jun 16 14:59 config.json

-rw-r--r-- 1 root root 210 Jun 16 15:00 csr.json

-rw-r--r-- 1 root root 1066 Jun 16 15:07 etcd.csr

-rw-r--r-- 1 root root 295 Jun 16 15:07 etcd-csr.json

-rw------- 1 root root 1675 Jun 16 15:07 etcd-key.pem

-rw-r--r-- 1 root root 1440 Jun 16 15:07 etcd.pem

cp admin*.pem /etc/kubernetes/ssl/

Configure the kubectl kubeconfig file

scp kubelet kubectl [email protected]:/root

# Configure the kubernetes cluster

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://10.39.47.27:6443

# Configure client authentication

kubectl config set-credentials admin \

--client-certificate=/etc/kubernetes/ssl/admin.pem \

--embed-certs=true \

--client-key=/etc/kubernetes/ssl/admin-key.pem

kubectl config set-context kubernetes \

--cluster=kubernetes \

--user=admin

kubectl config use-context kubernetes

Create the kubernetes certificate

cd /opt/ssl

vi kubernetes-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"10.39.47.27",

"10.39.47.23",

"10.39.47.28",

"10.0.0.1",

"10.254.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShenZhen",

"L": "ShenZhen",

"O": "k8s",

"OU": "System"

}

]

}

/opt/local/cfssl/cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/opt/ssl/config.json \

-profile=kubernetes kubernetes-csr.json | /opt/local/cfssl/cfssljson -bare kubernetes

[root@master-27 ssl]# ls -l kubernetes*

-rw-r--r-- 1 root root 1261 Jun 16 16:32 kubernetes.csr

-rw-r--r-- 1 root root 475 Jun 16 16:31 kubernetes-csr.json

-rw------- 1 root root 1679 Jun 16 16:32 kubernetes-key.pem

-rw-r--r-- 1 root root 1635 Jun 16 16:32 kubernetes.pem

# Copy into the certificate directory

cp kubernetes*.pem /etc/kubernetes/ssl/

Create etcd.json

cd /etc/kubernetes

mkdir manifests

cd manifests/

[root@master-27 manifests]# cat etcd.json

{

"kind": "Pod",

"apiVersion": "v1",

"metadata": {

"name": "etcd",

"namespace": "kube-system",

"labels": {

"component": "etcd",

"tier": "control-plane"

}

},

"spec": {

"volumes": [

{

"name": "certs",

"hostPath": {

"path": "/etc/ssl/certs"

}

},

{

"name": "etcd",

"hostPath": {

"path": "/var/lib/etcd"

}

},

{

"name": "pki",

"hostPath": {

"path": "/etc/kubernetes"

}

}

],

"containers": [

{

"name": "etcd",

"image": "10.39.47.22/qinzhao/etcd-amd64:3.1.12",

"command": [

"etcd",

"--name",

"etcd-10.39.47.27",

"--data-dir=/var/etcd/data",

"--trusted-ca-file",

"/etc/kubernetes/pki/ca.pem",

"--key-file",

"/etc/kubernetes/pki/apiserver-key.pem",

"--cert-file",

"/etc/kubernetes/pki/apiserver.pem",

"--client-cert-auth",

"--peer-trusted-ca-file",

"/etc/kubernetes/pki/ca.pem",

"--peer-key-file",

"/etc/kubernetes/pki/apiserver-key.pem",

"--peer-cert-file",

"/etc/kubernetes/pki/apiserver.pem",

"--peer-client-cert-auth",

"--initial-advertise-peer-urls=https://10.39.47.27:2380",

"--listen-peer-urls=https://10.39.47.27:2380",

"--listen-client-urls=https://10.39.47.27:2379,http://127.0.0.1:2379",

"--advertise-client-urls=https://10.39.47.27:2379,http://127.0.0.1:2379",

"--initial-cluster-token=etcd-cluster-1",

"--initial-cluster",

"etcd-10.39.47.27=https://10.39.47.27:2380",

"--initial-cluster-state",

"new"

],

"resources": {

"requests": {

"cpu": "200m"

}

},

"volumeMounts": [

{

"name": "certs",

"mountPath": "/etc/ssl/certs"

},

{

"name": "etcd",

"mountPath": "/var/etcd"

},

{

"name": "pki",

"readOnly": true,

"mountPath": "/etc/kubernetes/"

}

],

"livenessProbe": {

"httpGet": {

"path": "/health",

"port": 2379,

"host": "127.0.0.1"

}

},

"securityContext": {

"seLinuxOptions": {

"type": "unconfined_t"

}

}

}

],

"hostNetwork": true

}

}

kube-apiserver.json

[root@master-27 qinzhao]# cat /etc/kubernetes/manifests/kube-apiserver.json

{

"kind": "Pod",

"apiVersion": "v1",

"metadata": {

"name": "kube-apiserver",

"namespace": "kube-system",

"creationTimestamp": null,

"labels": {

"component": "kube-apiserver",

"tier": "control-plane"

}

},

"spec": {

"volumes": [

{

"name": "certs",

"hostPath": {

"path": "/etc/ssl/certs"

}

},

{

"name": "pki",

"hostPath": {

"path": "/etc/kubernetes"

}

}

],

"containers": [

{

"name": "kube-apiserver",

"image": "10.39.47.22/qinzhao/hyperkube-amd64:v1.7.6",

"imagePullPolicy": "Always",

"command": [

"/apiserver",

"--admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,PersistentVolumeLabel,DefaultStorageClass,ResourceQuota",

"--advertise-address=10.39.47.27",

"--allow-privileged=true",

"--apiserver-count=3",

"--audit-log-maxage=30",

"--audit-log-maxbackup=3",

"--audit-log-maxsize=100",

"--audit-log-path=/var/lib/audit.log",

"--bind-address=10.39.47.27",

"--client-ca-file=/etc/kubernetes/ssl/ca.pem",

"--enable-swagger-ui=true",

"--etcd-servers=https://10.39.47.27:2379",

"--etcd-cafile=/etc/kubernetes/pki/ca.pem",

"--etcd-certfile=/etc/kubernetes/pki/apiserver.pem",

"--etcd-keyfile=/etc/kubernetes/pki/apiserver-key.pem",

"--event-ttl=1h",

"--kubelet-https=true",

"--insecure-bind-address=0.0.0.0",

"--service-account-key-file=/etc/kubernetes/ssl/ca.pem",

"--service-cluster-ip-range=10.12.0.0/12",

"--service-node-port-range=30000-32000",

"--tls-cert-file=/etc/kubernetes/ssl/apiserver.pem",

"--tls-private-key-file=/etc/kubernetes/ssl/apiserver-key.pem",

"--experimental-bootstrap-token-auth",

"--token-auth-file=/etc/kubernetes/pki/tokens.csv",

"--v=2",

"--requestheader-username-headers=X-Remote-User --requestheader-group-headers=X-Remote-Group --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-client-ca-file=/var/run/kubernetes/request-header-ca.crt --requestheader-allowed-names=system:auth-proxy --proxy-client-cert-file=/var/run/kubernetes/client-auth-proxy.crt --proxy-client-key-file=/var/run/kubernetes/client-auth-proxy.key",

"--runtime-config=autoscaling/v2alpha1=true,settings.k8s.io/v1alpha1=true,batch/v2alpha1=true",

"--storage-backend=etcd2",

"--storage-media-type=application/json",

"--log-dir=/var/log/kuernetes",

"--logtostderr=false"

],

"resources": {

"requests": {

"cpu": "250m"

}

},

"volumeMounts": [

{

"name": "certs",

"mountPath": "/etc/ssl/certs"

},

{

"name": "pki",

"readOnly": true,

"mountPath": "/etc/kubernetes/"

}

],

"livenessProbe": {

"httpGet": {

"path": "/healthz",

"port": 8080,

"host": "127.0.0.1"

}

}

}

],

"hostNetwork": true

}

}

kube-controller-manager.json

[root@master-27 qinzhao]# cat /etc/kubernetes/manifests/kube-controller-manager.json

{

"kind": "Pod",

"apiVersion": "v1",

"metadata": {

"name": "kube-controller-manager",

"namespace": "kube-system",

"labels": {

"component": "kube-controller-manager",

"tier": "control-plane"

}

},

"spec": {

"volumes": [

{

"name": "certs",

"hostPath": {

"path": "/etc/ssl/certs"

}

},

{

"name": "pki",

"hostPath": {

"path": "/etc/kubernetes"

}

},

{

"name": "plugin",

"hostPath": {

"path": "/usr/libexec/kubernetes/kubelet-plugins"

}

},

{

"name": "qingcloud",

"hostPath": {

"path": "/etc/qingcloud"

}

}

],

"containers": [

{

"name": "kube-controller-manager",

"image": "10.39.47.22/qinzhao/hyperkube-amd64:v1.7.6",

"imagePullPolicy": "Always",

"command": [

"/controller-manager",

"--address=127.0.0.1",

"--master=http://127.0.0.1:8080",

"--allocate-node-cidrs=false",

"--service-cluster-ip-range=10.254.0.0/16",

"--cluster-name=kubernetes",

"--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem",

"--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem",

"--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem",

"--root-ca-file=/etc/kubernetes/ssl/ca.pem",

"--leader-elect=true",

"--horizontal-pod-autoscaler-use-rest-clients",

"--horizontal-pod-autoscaler-sync-period=30s",

"--v=10"

],

"resources": {

"requests": {

"cpu": "200m"

}

},

"volumeMounts": [

{

"name": "certs",

"mountPath": "/etc/ssl/certs"

},

{

"name": "pki",

"readOnly": true,

"mountPath": "/etc/kubernetes/"

},

{

"name": "plugin",

"mountPath": "/usr/libexec/kubernetes/kubelet-plugins"

},

{

"name": "qingcloud",

"readOnly": true,

"mountPath": "/etc/qingcloud"

}

],

"livenessProbe": {

"httpGet": {

"path": "/healthz",

"port": 10252,

"host": "127.0.0.1"

}

}

}

],

"hostNetwork": true

}

}

kube-scheduler.json

[root@master-27 qinzhao]# cat /etc/kubernetes/manifests/kube-scheduler.json

{

"kind": "Pod",

"apiVersion": "v1",

"metadata": {

"name": "kube-scheduler",

"namespace": "kube-system",

"labels": {

"component": "kube-scheduler",

"tier": "control-plane"

}

},

"spec": {

"containers": [

{

"name": "kube-scheduler",

"image": "10.39.47.22/qinzhao/hyperkube-amd64:v1.7.6",

"imagePullPolicy": "Always",

"command": [

"/scheduler",

"--address=127.0.0.1",

"--master=http://127.0.0.1:8080",

"--leader-elect=true",

"--v=2"

],

"resources": {

"requests": {

"cpu": "100m"

}

},

"livenessProbe": {

"httpGet": {

"path": "/healthz",

"port": 10251,

"host": "127.0.0.1"

}

}

}

],

"hostNetwork": true

}

}

Install kubelet

Copy the kubelet and kubectl binaries that were built in part one of this series (Kubernetes hyperkube installation guide: compiling the code) to every node.

[root@kubernetes-build amd64]# scp kube* root@10.39.47.27:/root/qinzhao/bin

The authenticity of host '10.39.47.27 (10.39.47.27)' can't be established.

ECDSA key fingerprint is SHA256:6hz+fc5OlstnoBGjq/LRass6lYCb5WQtpxqhHs5udRI.

ECDSA key fingerprint is MD5:f2:33:a9:eb:18:a7:c1:dd:5b:24:74:8f:46:4c:6f:8f.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.39.47.27' (ECDSA) to the list of known hosts.

root@10.39.47.27's password:

kubeadm 100% 149MB 1.2MB/s 02:09

kube-aggregator 100% 54MB 1.1MB/s 00:48

kube-apiserver 100% 213MB 1.2MB/s 02:58

kube-controller-manager 100% 140MB 1.2MB/s 01:56

kubectl 100% 52MB 1.2MB/s 00:42

kubelet 100% 146MB 1.2MB/s 02:00

kubemark 100% 147MB 1.2MB/s 02:03

kube-proxy 100% 49MB 1.2MB/s 00:41

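kube-scheduler 100% 47MB 1.2MB/s 00:38

Then distribute kubelet to each of the other nodes.

Start the master node, master-27, first.

Create the kubelet working directory; otherwise kubelet will fail to start.

mkdir /var/lib/kubelet

Edit /etc/systemd/system/kubelet.service so that it contains the following: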

[root@master-27 kubernetes]# cat /etc/systemd/system/kubelet.service

[Unit]

Description=kubernetes Kubelet

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/usr/bin/kubelet \

--address=10.39.47.27 \

--hostname-override=master-27 \

--pod-infra-container-image=10.39.47.22/qinzhao/pause-amd64:3.0 \

--kubeconfig=/etc/kubernetes/kubelet.conf \

--bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \

--cert-dir=/etc/kubernetes/pki \

--cluster-dns=10.0.0.10 \

--cluster-domain=cluster.local \

--hairpin-mode promiscuous-bridge \

--allow-privileged=true \

--serialize-image-pulls=false \

--logtostderr=true \

--cgroup-driver=systemd \

--pod-manifest-path=/etc/kubernetes/manifests \

--kube-reserved cpu=500m,memory=512m \

--image-gc-high-threshold=85 --image-gc-low-threshold=70 \

--v=2 \

--enable-controller-attach-detach=true --volume-plugin-dir=/usr/libexec/kubernetes/kubelet-plugins/volume/exec/

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target
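The --cluster-dns address in the unit file has to be an address that kube-apiserver can hand out to a Service, so it should fall inside the --service-cluster-ip-range given in kube-apiserver.json. The sketch below is one way to check that with plain shell arithmetic; the helper names ip_to_int and in_cidr are made up for this illustration, and the two values are copied from the files shown in this guide.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed (exit status 0) when IP $1 lies inside CIDR block $2.
in_cidr() {
  net=${2%/*}        # network part, e.g. 10.12.0.0
  bits=${2#*/}       # prefix length, e.g. 12
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# --cluster-dns from kubelet.service, --service-cluster-ip-range from kube-apiserver.json.
if in_cidr 10.0.0.10 10.12.0.0/12; then
  echo "cluster-dns is inside the service range"
else
  echo "cluster-dns is outside the service range"
fi
```

Only the prefix bits are compared, so a range written as 10.12.0.0/12 is treated as the block that starts at 10.0.0.0.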

systemctl daemon-reload

systemctl enable kubelet

Create the kubelet.conf file (on the master node it has to be created by hand). kube-apiserver is brought up by kubelet, yet kubelet tries to register with kube-apiserver as soon as it starts. Because kube-apiserver is not running yet at that point, kubelet reports errors like these:

Jun 16 19:16:18 master-27 kubelet[6371]: E0616 19:16:18.571653 6371 reflector.go:205] k8s.io/kubernetes/pkg/kubelet/kubelet.go:461: Failed to list *v1.Node: Get https://127.0.0.1:6443/api/v1/nodes?fieldSelector=metadata.name%3Dmaster-27&limit=500&resourceVersion=0: dial tcp 127.0.0.1:6443: getsockopt: connection refused

Jun 16 19:16:18 master-27 kubelet[6371]: E0616 19:16:18.697980 6371 event.go:209] Unable to write event: 'Post https://127.0.0.1:6443/api/v1/namespaces/default/events: dial tcp 127.0.0.1:6443: getsockopt: connection refused' (may retry after sleeping)

Jun 16 19:16:19 master-27 kubelet[6371]: E0616 19:16:19.570461 6371 reflector.go:205] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:47: Failed to list *v1.Pod: Get https://127.0.0.1:6443/api/v1/pods?fieldSelector=spec.nodeName%3Dmaster-27&limit=500&resourceVersion=0: dial tcp 127.0.0.1:6443: getsockopt: connection refused

touch /etc/kubernetes/kubelet.conf

Configure the kubelet kubeconfig file

# Generate a token

[root@master-01 ssl]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

89faf1c2d78a7e923e87bb5787cabd02
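kube-apiserver.json passes --token-auth-file=/etc/kubernetes/pki/tokens.csv, so the bootstrap token also has to be listed in that file before kubelet can authenticate with it. The sketch below writes one entry in the static token file layout (token,user,uid,"group"). The uid 10001 and the group system:kubelet-bootstrap are assumptions rather than values taken from this guide, and the file goes to a scratch directory instead of the real path.

```shell
#!/bin/sh
# Generate a 32-character hex token, the same way as the command above.
TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')

# Write to a scratch directory; on the master the real target would be
# /etc/kubernetes/pki/tokens.csv (the path given to --token-auth-file).
OUT_DIR=$(mktemp -d)
TOKEN_FILE="$OUT_DIR/tokens.csv"

# Layout: token,user,uid,"group". The uid and group are assumed values.
printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$TOKEN" > "$TOKEN_FILE"

cat "$TOKEN_FILE"
```

The user name in the second column is the one that the bootstrap kubeconfig and the cluster role binding refer to, so the three have to agree.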

# Configure the cluster

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://10.39.47.27:6443 \

--kubeconfig=bootstrap.kubeconfig

# Configure client authentication

kubectl config set-credentials kubelet-bootstrap \

--token=89faf1c2d78a7e923e87bb5787cabd02 \

--kubeconfig=bootstrap.kubeconfig

# Configure the context

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

mv bootstrap.kubeconfig /etc/kubernetes/

Start the kubelet service

systemctl start kubelet

systemctl status kubelet

Check that the services have started

[root@master-27 kubernetes]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9d2dd5aeae48 10.39.47.22/qinzhao/hyperkube-amd64@sha256:c022ededf3ee769a907c4010f71b2c2d32a2a84c497e306f36fa5e6b581ff462 "/apiserver --enab..." About an hour ago Up About an hour k8s_kube-apiserver_kube-apiserver-master-27_kube-system_7a8cafad6e479e260008119a48d91f5d_6

6b3a0a8a246e 10.39.47.22/qinzhao/pause-amd64:3.0 "/pause" About an hour ago Up About an hour k8s_POD_kube-apiserver-master-27_kube-system_7a8cafad6e479e260008119a48d91f5d_0

fbd0dd7b50de d9c0194e0cb1 "etcd --name etcd-..." About an hour ago Up About an hour k8s_etcd_etcd-master-27_kube-system_fb3755d7349ae96f320fea3cea8b5f9e_0

ed29623eb9ec 10.39.47.22/qinzhao/pause-amd64:3.0 "/pause" About an hour ago Up About an hour k8s_POD_etcd-master-27_kube-system_fb3755d7349ae96f320fea3cea8b5f9e_0

6813b0915588 10.39.47.22/qinzhao/hyperkube-amd64@sha256:c022ededf3ee769a907c4010f71b2c2d32a2a84c497e306f36fa5e6b581ff462 "/controller-manag..." About an hour ago Up About an hour k8s_kube-controller-manager_kube-controller-manager-master-27_kube-system_3aaa2763216b7b6557b8afbc3bac98d1_0

bd945c7a5f6b 10.39.47.22/qinzhao/hyperkube-amd64@sha256:c022ededf3ee769a907c4010f71b2c2d32a2a84c497e306f36fa5e6b581ff462 "/scheduler --addr..." About an hour ago Up About an hour k8s_kube-scheduler_kube-scheduler-master-27_kube-system_ea5d437ff6ebd29303ac695b7e540a82_0

324cd989b7ac 10.39.47.22/qinzhao/pause-amd64:3.0 "/pause" About an hour ago Up About an hour k8s_POD_kube-controller-manager-master-27_kube-system_3aaa2763216b7b6557b8afbc3bac98d1_0

24bf0f3d9836 10.39.47.22/qinzhao/pause-amd64:3.0 "/pause" About an hour ago Up About an hour k8s_POD_kube-scheduler-master-27_kube-system_ea5d437ff6ebd29303ac695b7e540a82_0

[root@master-27 manifests]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

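etcd-0 Healthy {"health": "true"}

First grant the bootstrap user permission to create certificate signing requests; otherwise kubelet fails with the error shown after the command.

# Create the role binding first

# The user is the one configured in token.csv on the master

# This only needs to be done once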

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

Jun 16 20:36:29 master-27 kubelet[21712]: I0616 20:36:29.539163 21712 bootstrap.go:58] Using bootstrap kubeconfig to generate TLS client cert, key and kubeconfig file

Jun 16 20:36:29 master-27 kubelet[21712]: F0616 20:36:29.564948 21712 server.go:233] failed to run Kubelet: cannot create certificate signing request: certificatesigningrequests.certificates.k8s.io is forbidden: User "kubelet-bootstrap" cannot create certificatesigningrequests.certificates.k8s.io at the cluster scope

Configure TLS authentication

[root@master-27 pki]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-5NhRqipcqz-ZghMznROHjWI5NfckR3tLVX9-kqy4WV0 5m kubelet-bootstrap Pending

[root@master-27 pki]# kubectl certificate approve node-csr-5NhRqipcqz-ZghMznROHjWI5NfckR3tLVX9-kqy4WV0

certificatesigningrequest.certificates.k8s.io "node-csr-5NhRqipcqz-ZghMznROHjWI5NfckR3tLVX9-kqy4WV0" approved
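When several nodes bootstrap at about the same time, copying each request name by hand gets tedious. As a rough sketch, the pending names can be filtered out of the `kubectl get csr` listing with awk; the function name pending_csrs is made up for this example, and the sample text only mirrors the column layout shown in this guide.

```shell
#!/bin/sh
# Print the names of CSRs whose CONDITION column is exactly "Pending".
# Reads `kubectl get csr` output on stdin; the first line is the header.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Sample listing in the same layout as the output shown in this guide.
SAMPLE='NAME AGE REQUESTOR CONDITION
node-csr-5NhRqipcqz-ZghMznROHjWI5NfckR3tLVX9-kqy4WV0 1h kubelet-bootstrap Approved,Issued
node-csr-Zpr-vPcNNjdmsBQCRf1RNfj-QLwaqcGEIIpnOWtVyE0 30s kubelet-bootstrap Pending'

printf '%s\n' "$SAMPLE" | pending_csrs
```

On a live cluster the same filter could sit between the two commands, for example `kubectl get csr | pending_csrs | xargs kubectl certificate approve`, after checking that every pending request really comes from a node you expect.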

[root@master-27 pki]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-5NhRqipcqz-ZghMznROHjWI5NfckR3tLVX9-kqy4WV0 7m kubelet-bootstrap Approved,Issued

View the nodes

[root@master-27 kubernetes]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

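master-27 Ready <none> 45m v1.10.4

The node is healthy. Once kube-apiserver has started successfully, manually delete /etc/kubernetes/kubelet.conf and restart kubelet so that it registers with kube-apiserver. The kubelet certificates and the kubelet.conf file are then generated automatically; the certificates are placed in /etc/kubernetes/pki.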

[root@master-27 ~]# ls -l /etc/kubernetes/pki/kubelet*

-rw-r--r-- 1 root root 1046 Jun 16 20:43 /etc/kubernetes/pki/kubelet-client.crt

-rw------- 1 root root 227 Jun 16 20:36 /etc/kubernetes/pki/kubelet-client.key

-rw-r--r-- 1 root root 2173 Jun 16 17:51 /etc/kubernetes/pki/kubelet.crt

-rw------- 1 root root 1675 Jun 16 17:51 /etc/kubernetes/pki/kubelet.key

Configure the slave-23 node

mkdir /var/lib/kubelet

[root@slave-23 ~]# cat /etc/systemd/system/kubelet.service

[Unit]

Description=kubernetes Kubelet

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/usr/bin/kubelet \

--address=10.39.47.23 \

--hostname-override=slave-23 \

--pod-infra-container-image=10.39.47.22/qinzhao/pause-amd64:3.0 \

--kubeconfig=/etc/kubernetes/kubelet.conf \

--bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \

--cert-dir=/etc/kubernetes/pki \

--cluster-dns=10.0.0.10 \

--cluster-domain=cluster.local \

--hairpin-mode promiscuous-bridge \

--allow-privileged=true \

--serialize-image-pulls=false \

--logtostderr=true \

--cgroup-driver=systemd \

--pod-manifest-path=/etc/kubernetes/manifests \

--kube-reserved cpu=500m,memory=512m \

--image-gc-high-threshold=85 --image-gc-low-threshold=70 \

--v=2 \

--enable-controller-attach-detach=true --volume-plugin-dir=/usr/libexec/kubernetes/kubelet-plugins/volume/exec/

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

swapoff -a

systemctl daemon-reload

systemctl enable kubelet

systemctl start kubelet

systemctl status kubelet

Configure TLS bootstrapping

[root@master-27 manifests]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-5NhRqipcqz-ZghMznROHjWI5NfckR3tLVX9-kqy4WV0 1h kubelet-bootstrap Approved,Issued

node-csr-Zpr-vPcNNjdmsBQCRf1RNfj-QLwaqcGEIIpnOWtVyE0 30s kubelet-bootstrap Pending

[root@master-27 manifests]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-23 Ready <none> 13s v1.7.6

slave-23 Ready <none> 57m v1.7.6

The slave-28 node is set up in exactly the same way, so the steps are not repeated here.

[root@master-27 qinzhao]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-27 Ready <none> 48m v1.7.6

slave-23 Ready <none> 28m v1.7.6

slave-28 Ready <none> 39m v1.7.6

Deploy kube-proxy

Contents of kube-proxy.yaml:

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  labels:
    component: kube-proxy
    k8s-app: kube-proxy
    kubernetes.io/cluster-service: "true"
    name: kube-proxy
    tier: node
  name: kube-proxy
  namespace: kube-system
spec:
  selector:
    matchLabels:
      component: kube-proxy
      k8s-app: kube-proxy
      kubernetes.io/cluster-service: "true"
      name: kube-proxy
      tier: node
  template:
    metadata:
      annotations:
        scheduler.alpha.kubernetes.io/affinity: '{"nodeAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"beta.kubernetes.io/arch","operator":"In","values":["amd64"]}]}]}}}'
        scheduler.alpha.kubernetes.io/tolerations: '[{"key":"dedicated","value":"master","effect":"NoSchedule"}]'
      labels:
        component: kube-proxy
        k8s-app: kube-proxy
        kubernetes.io/cluster-service: "true"
        name: kube-proxy
        tier: node
    spec:
      containers:
      - command:
        - /proxy
        - --cluster-cidr=192.168.0.0/16
        - --kubeconfig=/run/kubeconfig
        - --logtostderr=true
        - --proxy-mode=iptables
        - --v=2
        image: 10.39.47.22/qinzhao/hyperkube-amd64:v1.7.6
        imagePullPolicy: IfNotPresent
        name: kube-proxy
        securityContext:
          privileged: true
        volumeMounts:
        - mountPath: /var/run/dbus
          name: dbus
        - mountPath: /run/kubeconfig
          name: kubeconfig
        - mountPath: /etc/kubernetes/pki
          name: pki
      dnsPolicy: ClusterFirst
      hostNetwork: true
      restartPolicy: Always
      volumes:
      - hostPath:
          path: /etc/kubernetes/kubelet.conf
        name: kubeconfig
      - hostPath:
          path: /var/run/dbus
        name: dbus
      - hostPath:
          path: /etc/kubernetes/pki
        name: pki
  updateStrategy:
    type: OnDelete

Configure kube-proxy

Create the kube-proxy certificate

cd /opt/ssl

vi kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShenZhen",

"L": "ShenZhen",

"O": "k8s",

"OU": "System"

}

]

}

Generate the kube-proxy certificate and private key

/opt/local/cfssl/cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/opt/ssl/config.json \

-profile=kubernetes kube-proxy-csr.json | /opt/local/cfssl/cfssljson -bare kube-proxy

# Check the generated files

ls kube-proxy*

kube-proxy.csr kube-proxy-csr.json kube-proxy-key.pem kube-proxy.pem

# Copy into the certificate directory

cp kube-proxy*.pem /etc/kubernetes/ssl/

Create the kube-proxy kubeconfig file

# Configure the cluster

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://10.39.47.27:6443 \

--kubeconfig=kube-proxy.kubeconfig

# Configure client authentication

kubectl config set-credentials kube-proxy \

--client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \

--client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

# Configure the context

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

# Move into the configuration directory

mv kube-proxy.kubeconfig /etc/kubernetes/

Finally, distribute the file to every node.
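The same copy has to be repeated for each worker, so a small loop can save some typing. This is only a sketch: the distribute function and the DRY_RUN switch are made up for illustration, the node addresses come from the host list at the top of this guide, and by default it just prints the scp commands instead of running them.

```shell
#!/bin/sh
# Worker nodes from the host list at the top of this guide.
NODES="10.39.47.23 10.39.47.28"

# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to copy.
DRY_RUN=${DRY_RUN:-1}

# Copy file $1 into directory $2 on every node in NODES.
distribute() {
  for node in $NODES; do
    if [ "$DRY_RUN" = "1" ]; then
      echo "scp $1 root@$node:$2"
    else
      scp "$1" "root@$node:$2"
    fi
  done
}

distribute /etc/kubernetes/kube-proxy.kubeconfig /etc/kubernetes/
```

The same helper could be pointed at the certificate directory or the kubelet binary, since those are copied to the same two hosts earlier in the guide.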

Create kube-proxy

[root@master-27 qinzhao]# pwd

/root/qinzhao

[root@master-27 qinzhao]# kubectl create -f kube-proxy.yaml

daemonset.extensions "kube-proxy" created

[root@master-27 qinzhao]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

etcd-master-27 1/1 Running 0 13h

kube-apiserver-master-27 1/1 Running 6 13h

kube-controller-manager-master-27 1/1 Running 0 13h

kube-proxy-lwl4p 1/1 Running 0 10s

kube-proxy-tjzgb 1/1 Running 0 10s

kube-proxy-xtjjd 1/1 Running 0 10s

kube-scheduler-master-27 1/1 Running 0 13h

Deploy Calico

# Download the YAML files

wget http://docs.projectcalico.org/v2.6/getting-started/kubernetes/installation/hosted/calico.yaml

wget http://docs.projectcalico.org/v2.6/getting-started/kubernetes/installation/rbac.yaml

# Pull the images

# They are hosted overseas and are blocked by the firewall

quay.io/calico/node:v2.6.0

quay.io/calico/cni:v1.11.0

quay.io/calico/kube-controllers:v1.0.0

Configure Calico

# Be sure to change the following options:

etcd_endpoints: "https://10.39.47.27:2379"

etcd_ca: "/calico-secrets/etcd-ca"

etcd_cert: "/calico-secrets/etcd-cert"

etcd_key: "/calico-secrets/etcd-key"

# The values here must be base64-encoded

data:

etcd-key: (cat /etc/kubernetes/ssl/etcd-key.pem | base64 | tr -d '\n')

etcd-cert: (cat /etc/kubernetes/ssl/etcd.pem | base64 | tr -d '\n')

etcd-ca: (cat /etc/kubernetes/ssl/ca.pem | base64 | tr -d '\n')
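The three data values are the output of the commands in parentheses, pasted in as a single line each. A minimal sketch of producing such a value follows; the helper name b64_oneline is made up, and a scratch file stands in for the real PEM files so the snippet can be run anywhere.

```shell
#!/bin/sh
# Encode a file as single-line base64, the form a Secret's data field expects.
b64_oneline() {
  base64 < "$1" | tr -d '\n'
}

# Demonstrate on a scratch file; on the master the inputs would be
# /etc/kubernetes/ssl/etcd-key.pem, etcd.pem and ca.pem.
SCRATCH=$(mktemp)
printf 'example certificate bytes\n' > "$SCRATCH"

ENCODED=$(b64_oneline "$SCRATCH")
echo "etcd-ca: $ENCODED"
```

Stripping the newlines matters because base64 wraps long output by default, and a wrapped value would break the YAML.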

- name: CALICO_IPV4POOL_CIDR

value: "10.233.0.0/16"

Apply the YAML files

[root@master-27 calico]# kubectl create -f .

configmap "calico-config" created

secret "calico-etcd-secrets" created

daemonset.extensions "calico-node" created

deployment.extensions "calico-kube-controllers" created

deployment.extensions "calico-policy-controller" created

serviceaccount "calico-kube-controllers" created

serviceaccount "calico-node" created

clusterrole.rbac.authorization.k8s.io "calico-kube-controllers" created

clusterrolebinding.rbac.authorization.k8s.io "calico-kube-controllers" created

clusterrole.rbac.authorization.k8s.io "calico-node" created

Check the result

[root@master-27 calico]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6c857c57bd-nwk9n 1/1 Running 0 1m

calico-node-qmqk7 2/2 Running 0 1m

calico-node-qzw9h 2/2 Running 0 1m

calico-node-sxn77 2/2 Running 0 1m

Deploy kube-dns

Download the kube-dns YAML files

[root@master-27 dns]# cat configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kube-dns
  namespace: kube-system

[root@master-27 dns]# cat dns_dep.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "3"
  generation: 3
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
  name: kube-dns
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  strategy:
    rollingUpdate:
      maxSurge: 10%
      maxUnavailable: 0
    type: RollingUpdate
  template:
    metadata:
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ""
      creationTimestamp: null
      labels:
        k8s-app: kube-dns
    spec:
      containers:
      - args:
        - --domain=cluster.local
        - --dns-port=10053
        - --config-dir=/kube-dns-config
        - --v=5
        env:
        - name: PROMETHEUS_PORT
          value: "10055"
        image: 10.39.47.27/qinzhao/k8s-dns-kube-dns-amd64:1.14.4
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /healthcheck/kubedns
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
        name: kubedns
        ports:
        - containerPort: 10053
          name: dns-local
          protocol: UDP
        - containerPort: 10053
          name: dns-tcp-local
          protocol: TCP
        - containerPort: 10055
          name: metrics
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readiness
            port: 8081
            scheme: HTTP
          initialDelaySeconds: 3
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /kube-dns-config
          name: kube-dns-config
      - args:
        - -v=5
        - -logtostderr
        - -configDir=/etc/k8s/dns/dnsmasq-nanny
        - -restartDnsmasq=true
        - --
        - -k
        - -N
        - --cache-size=1000
        - --log-facility=-
        - --server=/cluster.local/127.0.0.1#10053
        - --server=/in-addr.arpa/127.0.0.1#10053
        - --server=/ip6.arpa/127.0.0.1#10053
        image: 10.39.47.27/qinzhao/k8s-dns-dnsmasq-nanny-amd64:1.14.4
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /healthcheck/dnsmasq
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
        name: dnsmasq
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        resources:
          requests:
            cpu: 150m
            memory: 20Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /etc/k8s/dns/dnsmasq-nanny
          name: kube-dns-config
      - args:
        - --v=5
        - --logtostderr
        - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,A
        - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,A
        image: 10.39.47.27/qinzhao/k8s-dns-sidecar-amd64:1.14.4
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /metrics
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
        name: sidecar
        ports:
        - containerPort: 10054
          name: metrics
          protocol: TCP
        resources:
          requests:
            cpu: 10m
            memory: 20Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: Default
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      tolerations:
      - key: CriticalAddonsOnly
        operator: Exists
      volumes:
      - configMap:
          defaultMode: 420
          name: kube-dns
          optional: true
        name: kube-dns-config

[root@master-27 dns]# cat kube-dns_svc.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: KubeDNS
  name: kube-dns
  namespace: kube-system
spec:
  clusterIP: 10.0.0.10
  ports:
  - name: dns
    port: 53
    protocol: UDP
    targetPort: 53
  - name: dns-tcp
    port: 53
    protocol: TCP
    targetPort: 53
  selector:
    k8s-app: kube-dns
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}
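
The Deployment above starts kubedns with --domain=cluster.local, and its sidecar probes kubernetes.default.svc.cluster.local, so Services should resolve under the pattern name.namespace.svc.cluster.local once these manifests are applied. The following is a minimal sketch of that naming rule plus an optional lookup from inside the cluster. The helper names svc_fqdn and check_dns, the pod name dns-test, and the busybox:1.28 image are assumptions made for illustration, not part of the original setup; a cluster that pulls only from a private registry would need a mirrored image.

```shell
#!/bin/sh
# svc_fqdn NAME [NAMESPACE] [DOMAIN]
# Print the DNS name kube-dns is expected to serve for a Service.
# DOMAIN defaults to cluster.local, matching --domain in the Deployment.
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "${2:-default}" "${3:-cluster.local}"
}

# check_dns NAME [NAMESPACE]
# Hypothetical helper: runs nslookup in a throwaway pod. It needs a working
# cluster and a pullable busybox image, so it is only defined here, not called.
check_dns() {
  kubectl run dns-test --rm -i --restart=Never --image=busybox:1.28 -- \
    nslookup "$(svc_fqdn "$1" "$2")"
}

svc_fqdn kubernetes default
```

On a running cluster, `check_dns kubernetes default` would be expected to return the address of the kubernetes Service if kube-dns is healthy and the kubelets point pods at 10.0.0.10.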

Deploy DNS

[root@master-27 dns]# kubectl create -f .

configmap "kube-dns" created

deployment.extensions "kube-dns" created

service "kube-dns" created

DNS deployment result

[root@master-27 dns]# kubectl get pods -n kube-system

NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-3794750602-lm8j7   1/1     Running   0          4m
calico-node-5379p                          2/2     Running   0          4m
calico-node-9mvwg                          2/2     Running   0          4m
calico-node-glsf7                          2/2     Running   0          4m
etcd-master-27                             1/1     Running   0          38m
kube-apiserver-master-27                   1/1     Running   0          38m
kube-controller-manager-master-27          1/1     Running   0          38m
kube-dns-2744242050-wtcwj                  3/3     Running   0          2m
kube-proxy-bbhst                           1/1     Running   0          7m
kube-proxy-czhn1                           1/1     Running   0          7m
kube-proxy-t79rc                           1/1     Running   0          7m
kube-scheduler-master-27                   1/1     Running   0          38m

At this point, the k8s cluster setup is complete.
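
The pod listing above is checked by eye: every pod should show READY as n/n and STATUS as Running. As a rough sketch, the same check can be scripted by filtering the output of kubectl get pods. The function name not_ready_pods is an assumption for illustration, and the filter is deliberately simple: it would also flag pods in states such as Completed.

```shell
#!/bin/sh
# not_ready_pods: read `kubectl get pods` output on stdin and print the names
# of pods whose READY column is not n/n or whose STATUS is not Running.
not_ready_pods() {
  awk 'NR > 1 && NF >= 3 {
    split($2, r, "/")                      # READY column, e.g. 3/3
    if (r[1] != r[2] || $3 != "Running") print $1
  }'
}

# Usage against a live cluster (not executed here):
#   kubectl get pods -n kube-system | not_ready_pods
```

An empty result means every listed pod passed the check.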

Verify the k8s cluster

Deploy the dashboard

Download the YAML files

[root@master-27 dashboard]# cat dashboard-controller.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      containers:
      - name: kubernetes-dashboard
        image: 10.39.47.22/qinzhao/kubernetes-dashboard-amd64:v1.6.1
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 100m
            memory: 100Mi
        ports:
        - containerPort: 9090
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
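
The comment in the manifest refers to the Guaranteed QoS class, which Kubernetes assigns only when every container's CPU and memory requests equal its limits. As listed, the memory request (100Mi) differs from the memory limit (300Mi). The sketch below applies a simplified single-container version of that rule to the values shown. The function name qos_class is an assumption, and quantities are compared as plain strings, so equivalent spellings such as 0.1 and 100m would not match.

```shell
#!/bin/sh
# qos_class CPU_REQ CPU_LIM MEM_REQ MEM_LIM
# Simplified, single-container approximation of the Kubernetes QoS rule.
# Pass an empty string for a value that is not set in the manifest.
qos_class() {
  cpu_req=$1 cpu_lim=$2 mem_req=$3 mem_lim=$4
  # An omitted request defaults to the corresponding limit.
  [ -n "$cpu_req" ] || cpu_req=$cpu_lim
  [ -n "$mem_req" ] || mem_req=$mem_lim
  if [ -z "$cpu_req$cpu_lim$mem_req$mem_lim" ]; then
    echo BestEffort
  elif [ -n "$cpu_lim" ] && [ -n "$mem_lim" ] &&
       [ "$cpu_req" = "$cpu_lim" ] && [ "$mem_req" = "$mem_lim" ]; then
    echo Guaranteed
  else
    echo Burstable
  fi
}

# Values taken from the dashboard manifest above.
qos_class 100m 100m 100Mi 300Mi
```

Under this rule the manifest as written yields Burstable; setting the memory request to 300Mi as well would yield Guaranteed.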

[root@master-27 dashboard]# cat dashboard-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

Deploy the dashboard

[root@master-27 dashboard]# kubectl create -f .

deployment.extensions "kubernetes-dashboard" created

service "kubernetes-dashboard" created

Check the result

[root@master-27 dashboard]# kubectl get pods -n kube-system

NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-3794750602-lm8j7   1/1     Running   0          34m
calico-node-5379p                          2/2     Running   0          34m
calico-node-9mvwg                          2/2     Running   0          34m
calico-node-glsf7                          2/2     Running   0          34m
etcd-master-27                             1/1     Running   0          1h
kube-apiserver-master-27                   1/1     Running   0          1h
kube-controller-manager-master-27          1/1     Running   0          1h
kube-dns-2744242050-wtcwj                  3/3     Running   0          32m
kube-proxy-bbhst                           1/1     Running   0          36m
kube-proxy-czhn1                           1/1     Running   0          36m
kube-proxy-t79rc                           1/1     Running   0          36m
kube-scheduler-master-27                   1/1     Running   0          1h
kubernetes-dashboard-774090368-3bspz       1/1     Running   0          19s

Access the dashboard; see "Accessing Dashboard - 1.7.X and above" for reference.

Edit the dashboard Service and change its type from ClusterIP to NodePort:

kubectl -n kube-system edit service kubernetes-dashboard

[root@master-27 dashboard]# kubectl get svc -n kube-system

NAME                   TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)         AGE
kube-dns               ClusterIP   10.0.0.10     <none>        53/UDP,53/TCP   35m
kubernetes-dashboard   NodePort    10.1.60.214   <none>        80:31315/TCP    3m
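
The interactive edit can also be done non-interactively, which is convenient when the steps are scripted. The sketch below uses kubectl patch to switch the Service type and a small awk filter to read the allocated node port back out of the kubectl get svc listing. The function names set_nodeport and node_port_of are assumptions for illustration; set_nodeport is only defined, not called, because it needs a live cluster.

```shell
#!/bin/sh
# set_nodeport: switch the dashboard Service to NodePort without an editor.
set_nodeport() {
  kubectl -n kube-system patch service kubernetes-dashboard \
    -p '{"spec":{"type":"NodePort"}}'
}

# node_port_of SERVICE: read `kubectl get svc` output on stdin and print the
# node port(s) of SERVICE, taken from PORT(S) entries such as 80:31315/TCP.
node_port_of() {
  awk -v svc="$1" '$1 == svc {
    n = split($5, ports, ",")
    for (i = 1; i <= n; i++) {
      if (split(ports[i], p, ":") == 2) {  # only port:nodePort entries
        sub(/\/.*/, "", p[2])              # strip the /TCP or /UDP suffix
        print p[2]
      }
    }
  }'
}

# Usage against a live cluster (not executed here):
#   set_nodeport
#   kubectl get svc -n kube-system | node_port_of kubernetes-dashboard
```

The port number is assigned by the cluster, so 31315 in the listing above will differ between installations.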

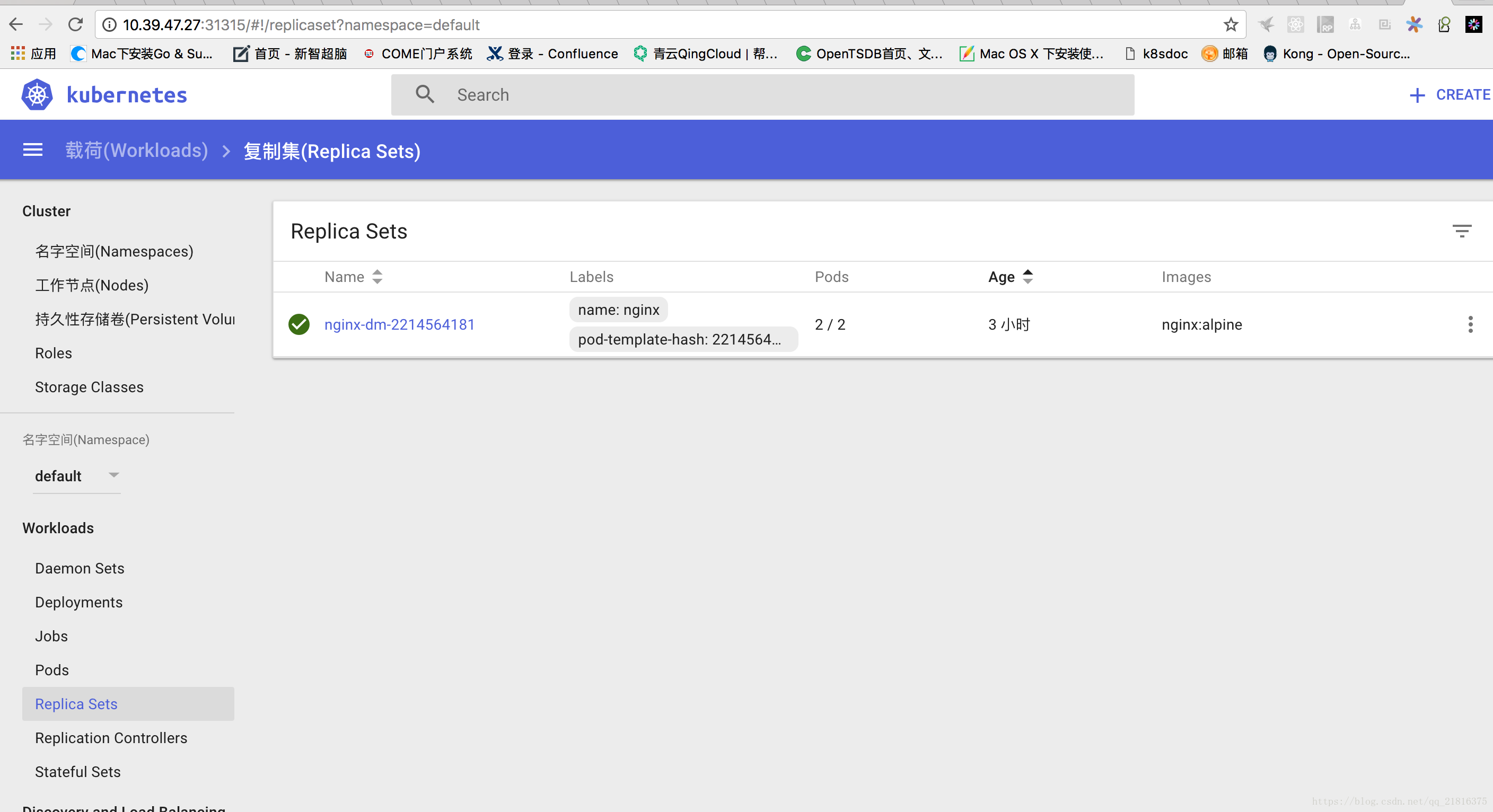

Browse to <master-ip>:31315; in this setup that is http://10.39.47.27:31315/ (the v1.6.1 dashboard serves plain HTTP on container port 9090).

Deploy a service to verify the cluster

[root@master-27 ~]# cat nginx.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-dm
spec:
  replicas: 2
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
  selector:
    name: nginx

[root@master-27 ~]# kubectl get all

NAME                            READY   STATUS    RESTARTS   AGE
pod/nginx-dm-2214564181-grz2k   1/1     Running   0          58m
pod/nginx-dm-2214564181-s3r63   1/1     Running   0          58m

NAME                             DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
deployment.extensions/nginx-dm   2         2         2            2           6h

NAME                                        DESIRED   CURRENT   READY   AGE
replicaset.extensions/nginx-dm-2214564181   2         2         2       6h

NAME                       DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-dm   2         2         2            2           6h

Access the nginx service

[root@master-27 ~]# kubectl get svc

NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP   2d
nginx-svc    ClusterIP   10.6.118.7   <none>        80/TCP    6h

[root@master-27 ~]# curl 10.6.118.7

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Done.
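
The curl check above can also be scripted so that it returns a pass or fail status instead of a page to read. This is a minimal sketch that looks for the default nginx page title in the response. The function names is_nginx_welcome and check_service are assumptions for illustration, and check_service is only defined here because it needs to run on a node that can reach the Service's cluster IP.

```shell
#!/bin/sh
# is_nginx_welcome: succeed if stdin contains the default nginx page title.
is_nginx_welcome() {
  grep -q '<title>Welcome to nginx!</title>'
}

# check_service IP: hypothetical wrapper that fetches http://IP/ with a
# 5-second timeout and tests the body. Not called here.
check_service() {
  curl -s --max-time 5 "http://$1/" | is_nginx_welcome
}

# Usage on a cluster node (not executed here):
#   check_service 10.6.118.7 && echo "nginx-svc is reachable"
```

Matching on the page title is enough for this smoke test; a stricter check could also compare the HTTP status code.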

References:

Installing Kubernetes 1.9.6 from binaries

kube-proxy

kubelet