I. Environment setup

1. On every server (docker77, docker78, docker79), disable firewalld or allow traffic from the internal network ranges

For example:

[root@docker79 ~]# firewall-cmd --perm --zone=public --add-rich-rule='rule family="ipv4" source address="192.168.0.0/16" accept'

success

[root@docker79 ~]# firewall-cmd --perm --zone=public --add-rich-rule='rule family="ipv4" source address="172.16.0.0/12" accept'

success

[root@docker79 ~]# firewall-cmd --reload

success

2. On every server, extract the k8s_images.tar.bz2 archive:

[root@docker79 ~]# ls

anaconda-ks.cfg k8s_images.tar.bz2

[root@docker79 ~]# tar xfvj k8s_images.tar.bz2

k8s_images/

k8s_images/kubernetes-dashboard_v1.8.1.tar

k8s_images/kubernetes-cni-0.6.0-0.x86_64.rpm

k8s_images/libtool-ltdl-2.4.2-22.el7_3.x86_64.rpm

k8s_images/container-selinux-2.33-1.git86f33cd.el7.noarch.rpm

k8s_images/device-mapper-event-1.02.140-8.el7.x86_64.rpm

k8s_images/kubeadm-1.9.0-0.x86_64.rpm

k8s_images/device-mapper-event-libs-1.02.140-8.el7.x86_64.rpm

k8s_images/docker-ce-17.03.2.ce-1.el7.centos.x86_64.rpm

k8s_images/device-mapper-1.02.140-8.el7.x86_64.rpm

k8s_images/device-mapper-libs-1.02.140-8.el7.x86_64.rpm

k8s_images/libxml2-python-2.9.1-6.el7_2.3.x86_64.rpm

k8s_images/kubectl-1.9.0-0.x86_64.rpm

k8s_images/docker-ce-selinux-17.03.2.ce-1.el7.centos.noarch.rpm

k8s_images/kubelet-1.9.9-9.x86_64.rpm

k8s_images/libseccomp-2.3.1-3.el7.x86_64.rpm

k8s_images/device-mapper-persistent-data-0.7.0-0.1.rc6.el7.x86_64.rpm

k8s_images/lvm2-2.02.171-8.el7.x86_64.rpm

k8s_images/docker_images/

k8s_images/docker_images/kube-apiserver-amd64_v1.9.0.tar

k8s_images/docker_images/k8s-dns-dnsmasq-nanny-amd64_v1.14.7.tar

k8s_images/docker_images/kube-proxy-amd64_v1.9.0.tar

k8s_images/docker_images/pause-amd64_3.0.tar

k8s_images/docker_images/etcd-amd64_v3.1.10.tar

k8s_images/docker_images/k8s-dns-sidecar-amd64_1.14.7.tar

k8s_images/docker_images/kube-scheduler-amd64_v1.9.0.tar

k8s_images/docker_images/kube-controller-manager-amd64_v1.9.0.tar

k8s_images/docker_images/k8s-dns-kube-dns-amd64_1.14.7.tar

k8s_images/docker_images/flannel_v0.9.1-amd64.tar

k8s_images/lvm2-libs-2.02.171-8.el7.x86_64.rpm

k8s_images/python-kitchen-1.1.1-5.el7.noarch.rpm

k8s_images/lsof-4.87-4.el7.x86_64.rpm

k8s_images/kube-flannel.yml

k8s_images/kubernetes-dashboard.yaml

k8s_images/socat-1.7.3.2-2.el7.x86_64.rpm

k8s_images/yum-utils-1.1.31-42.el7.noarch.rpm

3. Disable the swap partition on every server:

[root@docker79 ~]# swapoff -a

[root@docker79 ~]# free -m

total used free shared buff/cache available

Mem: 15885 209 14032 8 1643 15364

Swap: 0 0 0

[root@docker79 ~]# vim /etc/fstab

[root@docker79 ~]# tail -1 /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

[root@docker79 ~]#

[root@docker79 ~]# scp k8s_images.tar.bz2 docker78:/root/

k8s_images.tar.bz2 100% 287MB 71.8MB/s 00:04

[root@docker79 ~]# scp k8s_images.tar.bz2 docker77:/root/

k8s_images.tar.bz2 100% 287MB 71.8MB/s 00:04
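`swapoff -a` only turns swap off until the next reboot, which is why the swap line in /etc/fstab is commented out as well. That vim edit can also be done non-interactively. The sketch below assumes GNU sed (as shipped with CentOS 7) and a standard whitespace-separated fstab; `comment_out_swap` is a hypothetical helper name.

```shell
# Comment out every active swap entry in an fstab-style file so that swap
# stays off after a reboot (swapoff -a only affects the running system).
# Assumes GNU sed for the -i and -E options.
comment_out_swap() {
    local fstab="${1:-/etc/fstab}"
    # Match non-comment lines whose third field (filesystem type) is "swap"
    # and prefix them with "#"; lines that are already commented are skipped.
    sed -i -E 's@^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$@#\1@' "$fstab"
}
```

Usage, as root on each server: `swapoff -a && comment_out_swap /etc/fstab`, then confirm with `free -m` that the Swap line shows 0.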

4. Disable SELinux on every server

[root@docker79 ~]# getenforce

Disabled
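On these servers `getenforce` already reports Disabled. On a server where it reports Enforcing, one way to disable SELinux persistently is to edit /etc/selinux/config (the change applies after a reboot), while `setenforce 0` switches the running system to permissive mode right away. A minimal sketch; the helper name is hypothetical:

```shell
# Persistently disable SELinux by rewriting the SELINUX= line of its
# config file. Only the SELINUX= key is changed; SELINUXTYPE= is untouched.
disable_selinux_config() {
    local cfg="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Usage, as root: `setenforce 0 && disable_selinux_config`.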

5. Some users on CentOS 7 have reported traffic being routed incorrectly because iptables was bypassed. Make sure net.bridge.bridge-nf-call-iptables is set to 1 in the sysctl configuration.

[root@docker79 ~]# cat <<EOF >> /etc/sysctl.d/k8s.conf

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> EOF

[root@docker79 ~]# sysctl --system
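Two caveats about this step are worth checking on your own hosts. First, `cat <<EOF >>` appends, so running it twice leaves duplicate lines in k8s.conf. Second, on CentOS 7 the net.bridge.* keys may not exist until the br_netfilter kernel module is loaded. The following is a sketch of an idempotent variant; the function name is hypothetical.

```shell
# Write the bridge-netfilter settings to a dedicated sysctl drop-in.
# Unlike "cat <<EOF >>", this overwrites the file, so running it
# repeatedly never creates duplicate entries.
write_k8s_sysctl() {
    local conf="${1:-/etc/sysctl.d/k8s.conf}"
    cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}
```

Usage, as root: `modprobe br_netfilter && write_k8s_sysctl && sysctl --system`. Afterwards, `sysctl net.bridge.bridge-nf-call-iptables` should report a value of 1.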

[root@docker78 ~]# tar xfj k8s_images.tar.bz2

[root@docker78 ~]# getenforce

Disabled

[root@docker78 ~]# swapoff -a

[root@docker78 ~]# vim /etc/fstab

[root@docker78 ~]# tail -1 /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

[root@docker78 ~]#

[root@docker78 ~]# cat <<EOF >> /etc/sysctl.d/k8s.conf

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> EOF

[root@docker78 ~]# sysctl --system

[root@docker77 ~]# swapoff -a

[root@docker77 ~]# vim /etc/fstab

[root@docker77 ~]# tail -1 /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

[root@docker77 ~]#

[root@docker77 ~]# cat <<EOF >> /etc/sysctl.d/k8s.conf

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> EOF

[root@docker77 ~]# sysctl --system

II. Deploying Kubernetes

1. Install Docker on every server (and verify that Docker's Cgroup Driver is systemd)

[root@docker79 ~]# yum -y install docker

[root@docker79 ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@docker79 ~]# systemctl start docker

[root@docker79 ~]# docker info

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 1.13.1

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Native Overlay Diff: true

Logging Driver: journald

Cgroup Driver: systemd

Plugins:

Volume: local

Network: bridge host macvlan null overlay

Swarm: inactive

Runtimes: docker-runc runc

Default Runtime: docker-runc

Init Binary: /usr/libexec/docker/docker-init-current

containerd version: (expected: aa8187dbd3b7ad67d8e5e3a15115d3eef43a7ed1)

runc version: e9c345b3f906d5dc5e8100b05ce37073a811c74a (expected: 9df8b306d01f59d3a8029be411de015b7304dd8f)

init version: 5b117de7f824f3d3825737cf09581645abbe35d4 (expected: 949e6facb77383876aeff8a6944dde66b3089574)

Security Options:

seccomp

WARNING: You're not using the default seccomp profile

Profile: /etc/docker/seccomp.json

Kernel Version: 3.10.0-862.3.3.el7.x86_64

Operating System: CentOS Linux 7 (Core)

OSType: linux

Architecture: x86_64

Number of Docker Hooks: 3

CPUs: 8

Total Memory: 15.51 GiB

Name: docker79

ID: ECBV:A3XU:V254:PTNM:ZXDJ:4PSM:SXD7:2KBC:GPYJ:WDR4:H5WZ:YJIG

Docker Root Dir: /var/lib/docker

Debug Mode (client): false

Debug Mode (server): false

Registry: https://index.docker.io/v1/

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

Registries: docker.io (secure)
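The line that matters in the output above is `Cgroup Driver: systemd`. To check it on several servers without reading the whole `docker info` output, a small filter like the following sketch can be used. The function name is only an illustration and is not part of Docker.

```shell
# Print the "Cgroup Driver" value from "docker info" output read on stdin.
# The field separator ": *" splits "Cgroup Driver: systemd" into the key
# and the value.
docker_cgroup_driver() {
    awk -F': *' '/^[[:space:]]*Cgroup Driver:/ { print $2; exit }'
}
```

Usage: `docker info 2>/dev/null | docker_cgroup_driver` prints `systemd` on these servers.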

2. Load the required images

[root@docker79 ~]# cd k8s_images/docker_images/

[root@docker79 docker_images]# for image in `ls *` ; do docker load < $image ; done

6a749002dd6a: Loading layer [==================================================>] 1.338 MB/1.338 MB

bbd07ea14872: Loading layer [==================================================>] 159.2 MB/159.2 MB

611a3394df5d: Loading layer [==================================================>] 32.44 MB/32.44 MB

Loaded image: gcr.io/google_containers/etcd-amd64:3.1.10

5bef08742407: Loading layer [==================================================>] 4.221 MB/4.221 MB

f439636ab0f0: Loading layer [==================================================>] 6.797 MB/6.797 MB

91b6f6ead101: Loading layer [==================================================>] 4.414 MB/4.414 MB

fc3c053505e6: Loading layer [==================================================>] 34.49 MB/34.49 MB

032657ac7c4a: Loading layer [==================================================>] 2.225 MB/2.225 MB

fd713c7c81af: Loading layer [==================================================>] 5.12 kB/5.12 kB

Loaded image: quay.io/coreos/flannel:v0.9.1-amd64

b87261cc1ccb: Loading layer [==================================================>] 2.56 kB/2.56 kB

ac66a5c581a8: Loading layer [==================================================>] 362 kB/362 kB

22f71f461ac8: Loading layer [==================================================>] 3.072 kB/3.072 kB

686a085da152: Loading layer [==================================================>] 36.63 MB/36.63 MB

Loaded image: gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.7

cd69fdcd7591: Loading layer [==================================================>] 46.31 MB/46.31 MB

Loaded image: gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.7

bd94706d2c63: Loading layer [==================================================>] 38.07 MB/38.07 MB

Loaded image: gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7

0271b8eebde3: Loading layer [==================================================>] 1.338 MB/1.338 MB

9ccc9fba4253: Loading layer [==================================================>] 209.2 MB/209.2 MB

Loaded image: gcr.io/google_containers/kube-apiserver-amd64:v1.9.0

50a426d115f8: Loading layer [==================================================>] 136.6 MB/136.6 MB

Loaded image: gcr.io/google_containers/kube-controller-manager-amd64:v1.9.0

684c19bf2c27: Loading layer [==================================================>] 44.2 MB/44.2 MB

deb4ca39ea31: Loading layer [==================================================>] 3.358 MB/3.358 MB

9c44b0d51ed1: Loading layer [==================================================>] 63.38 MB/63.38 MB

Loaded image: gcr.io/google_containers/kube-proxy-amd64:v1.9.0

f733b8f8af29: Loading layer [==================================================>] 61.57 MB/61.57 MB

Loaded image: gcr.io/google_containers/kube-scheduler-amd64:v1.9.0

5f70bf18a086: Loading layer [==================================================>] 1.024 kB/1.024 kB

41ff149e94f2: Loading layer [==================================================>] 748.5 kB/748.5 kB

Loaded image: gcr.io/google_containers/pause-amd64:3.0

[root@docker79 docker_images]# cd ..

[root@docker79 k8s_images]# docker load < kubernetes-dashboard_v1.8.1.tar

64c55db70c4a: Loading layer [==================================================>] 121.2 MB/121.2 MB

Loaded image: gcr.io/google_containers/kubernetes-dashboard-amd64:v1.8.1

3. Install the required packages on every server:

[root@docker79 k8s_images]# rpm -ivh socat-1.7.3.2-2.el7.x86_64.rpm kubernetes-cni-0.6.0-0.x86_64.rpm kubelet-1.9.9-9.x86_64.rpm kubectl-1.9.0-0.x86_64.rpm kubeadm-1.9.0-0.x86_64.rpm

warning: kubernetes-cni-0.6.0-0.x86_64.rpm: Header V4 RSA/SHA1 Signature, key ID 3e1ba8d5: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:kubectl-1.9.0-0 ################################# [ 20%]

2:socat-1.7.3.2-2.el7 ################################# [ 40%]

3:kubernetes-cni-0.6.0-0 ################################# [ 60%]

4:kubelet-1.9.0-0 ################################# [ 80%]

5:kubeadm-1.9.0-0 ################################# [100%]

4. Verify that the kubelet cgroup driver matches Docker's Cgroup Driver (the two must be the same):

[root@docker79 k8s_images]# cat /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_SYSTEM_PODS_ARGS=--pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true"

Environment="KUBELET_NETWORK_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

Environment="KUBELET_DNS_ARGS=--cluster-dns=10.96.0.10 --cluster-domain=cluster.local"

Environment="KUBELET_AUTHZ_ARGS=--authorization-mode=Webhook --client-ca-file=/etc/kubernetes/pki/ca.crt"

Environment="KUBELET_CADVISOR_ARGS=--cadvisor-port=0"

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=systemd"

Environment="KUBELET_CERTIFICATE_ARGS=--rotate-certificates=true --cert-dir=/var/lib/kubelet/pki"

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_SYSTEM_PODS_ARGS $KUBELET_NETWORK_ARGS $KUBELET_DNS_ARGS $KUBELET_AUTHZ_ARGS $KUBELET_CADVISOR_ARGS $KUBELET_CGROUP_ARGS $KUBELET_CERTIFICATE_ARGS $KUBELET_EXTRA_ARGS
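Here `KUBELET_CGROUP_ARGS=--cgroup-driver=systemd` matches Docker's `Cgroup Driver: systemd`. The sketch below automates the comparison. It assumes the drop-in path shown above and expects Docker's driver as the second argument. Both function names are hypothetical.

```shell
# Extract the --cgroup-driver value from the kubeadm drop-in for kubelet.
kubelet_cgroup_driver() {
    grep -o -e '--cgroup-driver=[a-z]*' "$1" | head -n 1 | cut -d= -f2
}

# Compare kubelet's driver (from file $1) with Docker's driver ($2);
# print the result and return non-zero on a mismatch.
check_cgroup_drivers() {
    local conf="$1" docker_driver="$2" kubelet_driver
    kubelet_driver="$(kubelet_cgroup_driver "$conf")"
    if [ "$kubelet_driver" = "$docker_driver" ]; then
        echo "OK: both use $docker_driver"
    else
        echo "MISMATCH: kubelet=${kubelet_driver:-unset} docker=$docker_driver" >&2
        return 1
    fi
}
```

Usage: `check_cgroup_drivers /etc/systemd/system/kubelet.service.d/10-kubeadm.conf "$(docker info 2>/dev/null | awk -F': *' '/Cgroup Driver:/ {print $2}')"`. On a mismatch, change `--cgroup-driver` in 10-kubeadm.conf to Docker's value and run `systemctl daemon-reload` before starting kubelet.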

[root@docker79 k8s_images]# systemctl daemon-reload

[root@docker79 k8s_images]# systemctl start kubelet

[root@docker79 k8s_images]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: activating (auto-restart) (Result: exit-code) since Tue 2018-07-03 15:47:53 CST; 8s ago

Docs: http://kubernetes.io/docs/

Process: 2361 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_SYSTEM_PODS_ARGS $KUBELET_NETWORK_ARGS $KUBELET_DNS_ARGS $KUBELET_AUTHZ_ARGS $KUBELET_CADVISOR_ARGS $KUBELET_CGROUP_ARGS $KUBELET_CERTIFICATE_ARGS $KUBELET_EXTRA_ARGS (code=exited, status=1/FAILURE)

Main PID: 2361 (code=exited, status=1/FAILURE)

Jul 03 15:47:53 docker79 systemd[1]: Unit kubelet.service entered failed state.

Jul 03 15:47:53 docker79 systemd[1]: kubelet.service failed.

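The service is not fully up yet. kubelet keeps restarting because several files it needs, such as /etc/kubernetes/kubelet.conf and the certificates under /etc/kubernetes/pki, are only created when Kubernetes is initialized in the next step.

5. Initialize Kubernetes

[root@docker79 k8s_images]# kubeadm init --kubernetes-version=v1.9.0 --pod-network-cidr=172.18.0.0/16   # after about a minute, the output below appears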

[init] Using Kubernetes version: v1.9.0

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

[WARNING FileExisting-crictl]: crictl not found in system path

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [docker79 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.20.79]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 29.002968 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node docker79 as master by adding a label and a taint

[markmaster] Master docker79 tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: 0def86.0385416542e427e6

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: kube-dns

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join --token 0def86.0385416542e427e6 192.168.20.79:6443 --discovery-token-ca-cert-hash sha256:6d879768eb1079942f0e43638cbaea72c83c71105677bc8749cc3e64ff1c105f

Keep the kubeadm join command generated here. Each node must run it later to join the cluster.

6. Environment setup for the root user:

[root@docker79 k8s_images]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@docker79 k8s_images]# source ~/.bash_profile

[root@docker79 k8s_images]# kubectl version

Client Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.0", GitCommit:"925c127ec6b946659ad0fd596fa959be43f0cc05", GitTreeState:"clean", BuildDate:"2017-12-15T21:07:38Z", GoVersion:"go1.9.2", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.0", GitCommit:"925c127ec6b946659ad0fd596fa959be43f0cc05", GitTreeState:"clean", BuildDate:"2017-12-15T20:55:30Z", GoVersion:"go1.9.2", Compiler:"gc", Platform:"linux/amd64"}

For a non-root user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

7. Deploy flannel from the master:

[root@docker79 k8s_images]# pwd

/root/k8s_images

[root@docker79 k8s_images]# vim kube-flannel.yml

The relevant setting is shown below. The Network value must match the --pod-network-cidr passed to kubeadm init:

[root@docker79 k8s_images]# grep 172 kube-flannel.yml

"Network": "172.18.0.0/16",

[root@docker79 k8s_images]# kubectl create -f kube-flannel.yml

clusterrole "flannel" created

clusterrolebinding "flannel" created

serviceaccount "flannel" created

configmap "kube-flannel-cfg" created

daemonset "kube-flannel-ds" created
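The upstream kube-flannel.yml uses `"Network": "10.244.0.0/16"` by default. Here it was changed to 172.18.0.0/16 so that it matches `--pod-network-cidr`. You can script this edit before running `kubectl create` instead of making it in vim. The sketch below assumes GNU sed and a single "Network" key in the file. The function name is hypothetical.

```shell
# Set the "Network" value in kube-flannel.yml's net-conf.json so that it
# matches the --pod-network-cidr given to "kubeadm init".
# "@" is used as the sed delimiter because the CIDR contains "/".
set_flannel_network() {
    local manifest="$1" cidr="$2"
    sed -i -E "s@(\"Network\":[[:space:]]*\")[^\"]*(\")@\1${cidr}\2@" "$manifest"
}
```

Usage: `set_flannel_network kube-flannel.yml 172.18.0.0/16 && grep Network kube-flannel.yml`, then `kubectl create -f kube-flannel.yml`.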

8. Join each node to the cluster:

[root@docker78 ~]# kubeadm join --token 0def86.0385416542e427e6 192.168.20.79:6443 --discovery-token-ca-cert-hash sha256:6d879768eb1079942f0e43638cbaea72c83c71105677bc8749cc3e64ff1c105f

[preflight] Running pre-flight checks.

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[WARNING FileExisting-crictl]: crictl not found in system path

[preflight] Starting the kubelet service

[discovery] Trying to connect to API Server "192.168.20.79:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.20.79:6443"

[discovery] Requesting info from "https://192.168.20.79:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.20.79:6443"

[discovery] Successfully established connection with API Server "192.168.20.79:6443"

This node has joined the cluster:

* Certificate signing request was sent to master and a response

was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

[root@docker78 ~]#

[root@docker77 ~]# kubeadm join --token 0def86.0385416542e427e6 192.168.20.79:6443 --discovery-token-ca-cert-hash sha256:6d879768eb1079942f0e43638cbaea72c83c71105677bc8749cc3e64ff1c105f

[preflight] Running pre-flight checks.

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[WARNING FileExisting-crictl]: crictl not found in system path

[preflight] Starting the kubelet service

[discovery] Trying to connect to API Server "192.168.20.79:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.20.79:6443"

[discovery] Requesting info from "https://192.168.20.79:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.20.79:6443"

[discovery] Successfully established connection with API Server "192.168.20.79:6443"

This node has joined the cluster:

* Certificate signing request was sent to master and a response

was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.
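The kubeadm join command from the init output may get lost, or its token may expire (bootstrap tokens are valid for 24 hours by default). In either case, `kubeadm token create` on the master issues a new token, and the `--discovery-token-ca-cert-hash` value can be recomputed from the cluster CA. The openssl pipeline below follows the one given in the kubeadm documentation; wrapping it in a function (a hypothetical name) is only for convenience.

```shell
# Recompute the --discovery-token-ca-cert-hash value (run on the master):
# the SHA-256 of the CA certificate's DER-encoded public key.
discovery_token_ca_cert_hash() {
    local ca="${1:-/etc/kubernetes/pki/ca.crt}" hex
    # Fail early if the certificate cannot be read; otherwise the pipeline
    # below would silently hash empty input.
    openssl x509 -noout -in "$ca" 2>/dev/null || return 1
    hex="$(openssl x509 -pubkey -noout -in "$ca" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //')"
    echo "sha256:$hex"
}
```

Usage: on the master, run `kubeadm token create` and `discovery_token_ca_cert_hash`. Then, on the node, run `kubeadm join --token <token> 192.168.20.79:6443 --discovery-token-ca-cert-hash <hash>`, where `<token>` and `<hash>` are the two values just printed.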

III. Verification

1. Check the nodes from the master:

[root@docker79 k8s_images]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

docker77 Ready <none> 36s v1.9.0

docker78 Ready <none> 5m v1.9.0

docker79 Ready master 10m v1.9.0

[root@docker79 k8s_images]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Tue 2018-07-03 15:49:26 CST; 11min ago

Docs: http://kubernetes.io/docs/

Main PID: 2531 (kubelet)

CGroup: /system.slice/kubelet.service

└─2531 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernete...

Jul 03 16:00:09 docker79 kubelet[2531]: E0703 16:00:09.454732 2531 summary.go:92] Failed to get system container s...rvice"

Jul 03 16:00:09 docker79 kubelet[2531]: E0703 16:00:09.454780 2531 summary.go:92] Failed to get system container s...rvice"

Jul 03 16:00:19 docker79 kubelet[2531]: E0703 16:00:19.470461 2531 summary.go:92] Failed to get system container s...rvice"

Jul 03 16:00:19 docker79 kubelet[2531]: E0703 16:00:19.470511 2531 summary.go:92] Failed to get system container s...rvice"

Jul 03 16:00:29 docker79 kubelet[2531]: E0703 16:00:29.485472 2531 summary.go:92] Failed to get system container s...rvice"

Jul 03 16:00:29 docker79 kubelet[2531]: E0703 16:00:29.485529 2531 summary.go:92] Failed to get system container s...rvice"

Jul 03 16:00:39 docker79 kubelet[2531]: E0703 16:00:39.500893 2531 summary.go:92] Failed to get system container s...rvice"

Jul 03 16:00:39 docker79 kubelet[2531]: E0703 16:00:39.500953 2531 summary.go:92] Failed to get system container s...rvice"

Jul 03 16:00:49 docker79 kubelet[2531]: E0703 16:00:49.515218 2531 summary.go:92] Failed to get system container s...rvice"

Jul 03 16:00:49 docker79 kubelet[2531]: E0703 16:00:49.515264 2531 summary.go:92] Failed to get system container s...rvice"

Hint: Some lines were ellipsized, use -l to show in full.
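All three nodes report Ready. As more nodes join, scanning the STATUS column by eye gets tedious. The sketch below filters `kubectl get nodes` output and exits non-zero if any node is not Ready. It assumes STATUS is the second column, as in the output above, and the function name is hypothetical.

```shell
# Read "kubectl get nodes" output on stdin, print every node whose STATUS
# is not exactly "Ready", and return non-zero if at least one was found.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}
```

Usage: `kubectl get nodes | not_ready_nodes && echo "all nodes Ready"`.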

2. Kubernetes creates a flannel pod and a kube-proxy pod on every node, as shown below:

[root@docker79 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system etcd-docker79 1/1 Running 0 13m

kube-system kube-apiserver-docker79 1/1 Running 0 13m

kube-system kube-controller-manager-docker79 1/1 Running 0 13m

kube-system kube-dns-6f4fd4bdf-dc7tf 3/3 Running 1 14m

kube-system kube-flannel-ds-gwrjj 1/1 Running 0 9m

kube-system kube-flannel-ds-pq6xb 1/1 Running 0 4m

kube-system kube-flannel-ds-s657n 1/1 Running 0 10m

kube-system kube-proxy-hkqhd 1/1 Running 0 4m

kube-system kube-proxy-rml2b 1/1 Running 0 14m

kube-system kube-proxy-wm5v6 1/1 Running 0 9m

kube-system kube-scheduler-docker79 1/1 Running 0 13m

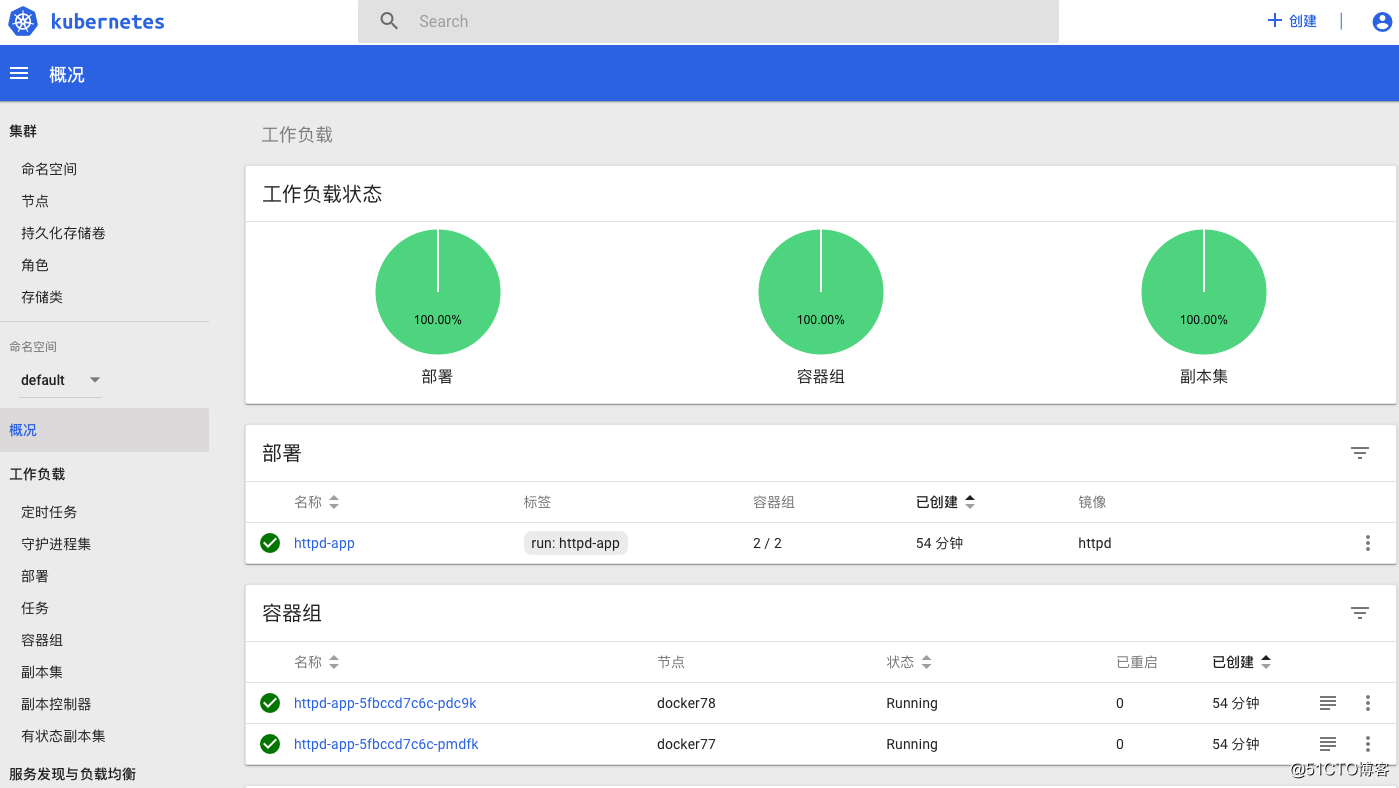
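Every pod above is Running with all of its containers ready. The sketch below performs the same check as a filter over `kubectl get pods --all-namespaces` output. It assumes the column layout shown above (NAMESPACE, NAME, READY, STATUS, ...), and the function name is hypothetical.

```shell
# Read "kubectl get pods --all-namespaces" output on stdin and print
# namespace/name for every pod that is not Running or not fully ready
# (READY column "x/y" with x != y). Returns non-zero if any are found.
unhealthy_pods() {
    awk 'NR > 1 {
             split($3, r, "/")
             if ($4 != "Running" || r[1] != r[2]) { print $1 "/" $2; bad = 1 }
         }
         END { exit bad }'
}
```

Usage: `kubectl get pods --all-namespaces | unhealthy_pods || echo "some pods need attention"`.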

3. Deploy an httpd application to verify that the cluster works:

[root@docker79 ~]# kubectl run httpd-app --image=httpd --replicas=2

deployment "httpd-app" created

[root@docker79 ~]#

[root@docker79 ~]# kubectl get deployment   # creation takes a while

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

httpd-app 2 2 2 2 4m

[root@docker79 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

httpd-app-5fbccd7c6c-pdc9k 1/1 Running 0 4m 172.18.1.2 docker78

httpd-app-5fbccd7c6c-pmdfk 1/1 Running 0 4m 172.18.2.2 docker77

[root@docker79 ~]#

[root@docker78 k8s_images]# curl http://172.18.1.2

<html><body><h1>It works!</h1></body></html>

[root@docker78 k8s_images]#

[root@docker77 k8s_images]# curl 172.18.2.2

<html><body><h1>It works!</h1></body></html>
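The pod IPs tested with curl come from the IP column of `kubectl get pods -o wide`. The sketch below extracts them by locating the IP header rather than relying on a fixed column position. The function name is hypothetical. Pod IPs in 172.18.0.0/16 are routed by flannel, so the loop has to run on a cluster node. Curling a pod from a node other than the one it runs on also exercises the overlay network.

```shell
# Print the IP column of "kubectl get pods -o wide" output read on stdin.
# The column is found by its "IP" header, so extra columns do not matter.
pod_ips() {
    awk 'NR == 1 { for (i = 1; i <= NF; i++) if ($i == "IP") col = i; next }
         col { print $col }'
}
```

Usage, on the master (which is also on the flannel network): `for ip in $(kubectl get pods -o wide | pod_ips); do curl -s "http://$ip/"; done`.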

IV. Deploying the Dashboard

[root@docker79 ~]# cd k8s_images

[root@docker79 k8s_images]# vim kubernetes-dashboard.yaml

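Edit the kubernetes-dashboard Service as shown in the figure. Its type defaults to ClusterIP, which cannot be reached from outside the cluster, so change it to NodePort. The Dashboard is later exposed as 443:32666/TCP.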

[root@docker79 k8s_images]# kubectl create -f kubernetes-dashboard.yaml

secret "kubernetes-dashboard-certs" created

serviceaccount "kubernetes-dashboard" created

role "kubernetes-dashboard-minimal" created

rolebinding "kubernetes-dashboard-minimal" created

deployment "kubernetes-dashboard" created

service "kubernetes-dashboard" created

[root@docker79 k8s_images]#

[root@docker79 k8s_images]# vim /etc/kubernetes/manifests/kube-apiserver.yaml

[root@docker79 k8s_images]# grep -P "service-node|basic_auth" /etc/kubernetes/manifests/kube-apiserver.yaml

- --service-node-port-range=1-65535

- --basic_auth_file=/etc/kubernetes/pki/basic_auth_file

[root@docker79 k8s_images]#

[root@docker79 k8s_images]# cat <<EOF > /etc/kubernetes/pki/basic_auth_file

> admin,admin,2

> EOF
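Each line of the basic-auth file has the form `password,user,uid`, optionally followed by a quoted group list, so `admin,admin,2` means password admin, user admin, uid 2. The file stores passwords in plain text. It is therefore worth restricting its permissions and choosing a stronger password than admin. A sketch; the function name and example password are hypothetical:

```shell
# Write a one-line kube-apiserver basic-auth file: password,user,uid
# The file is created with umask 077 and then chmod'ed to 600 so that
# only root can read it, even if it already existed with looser modes.
write_basic_auth_file() {
    local file="$1" password="$2" user="$3" uid="$4"
    ( umask 077 && printf '%s,%s,%s\n' "$password" "$user" "$uid" > "$file" )
    chmod 600 "$file"
}
```

Usage: `write_basic_auth_file /etc/kubernetes/pki/basic_auth_file 'choose-a-password' admin 2`.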

[root@docker79 k8s_images]# systemctl restart kubelet

[root@docker79 k8s_images]# cd /etc/kubernetes/manifests/

[root@docker79 manifests]# kubectl apply -f kube-apiserver.yaml

pod "kube-apiserver" created

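Note: kube-apiserver runs as a static pod managed directly by the kubelet, which re-creates it automatically whenever /etc/kubernetes/manifests/kube-apiserver.yaml changes. The kubectl apply above is therefore not needed. It creates an additional, ordinary pod named kube-apiserver in kube-system (the namespace set in the manifest), which can be removed with kubectl -n kube-system delete pod kube-apiserver.

View the cluster-admin ClusterRole: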

[root@docker79 manifests]# kubectl get clusterrole/cluster-admin -o yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

creationTimestamp: 2018-07-03T07:49:44Z

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: cluster-admin

resourceVersion: "14"

selfLink: /apis/rbac.authorization.k8s.io/v1/clusterroles/cluster-admin

uid: a65aaf39-7e95-11e8-9bd4-000c295011ce

rules:

- apiGroups:

- '*'

resources:

- '*'

verbs:

- '*'

- nonResourceURLs:

- '*'

verbs:

- '*'

Grant permissions to admin. The built-in cluster-admin ClusterRole has full permissions, so binding the admin user to cluster-admin gives admin the same permissions.

[root@docker79 manifests]# kubectl create clusterrolebinding login-on-dashboard-with-cluster-admin --clusterrole=cluster-admin --user=admin

clusterrolebinding "login-on-dashboard-with-cluster-admin" created

[root@docker79 manifests]#

[root@docker79 manifests]# kubectl get clusterrolebinding/login-on-dashboard-with-cluster-admin -o yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

creationTimestamp: 2018-07-03T08:14:14Z

name: login-on-dashboard-with-cluster-admin

resourceVersion: "2350"

selfLink: /apis/rbac.authorization.k8s.io/v1/clusterrolebindings/login-on-dashboard-with-cluster-admin

uid: 12d75411-7e99-11e8-9ee0-000c295011ce

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: User

name: admin
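The Dashboard is reached through the NodePort of the kubernetes-dashboard Service, which `kubectl get svc --namespace=kube-system` lists as 443:32666/TCP. The sketch below reads that port from `kubectl get svc` output, so the URL can be built without looking it up by hand. The function name is hypothetical.

```shell
# Print the NodePort of service $1 from "kubectl get svc" output on stdin.
# A PORT(S) entry like "443:32666/TCP" yields 32666; services without a
# NodePort (e.g. "53/UDP,53/TCP") print nothing.
node_port() {
    local svc="$1"
    awk -v svc="$svc" '$1 == svc {
        for (i = 2; i <= NF; i++)
            if ($i ~ /^[0-9]+:[0-9]+\//) {
                x = $i
                sub(/^[0-9]+:/, "", x)   # drop "443:"
                sub(/\/.*$/, "", x)      # drop "/TCP" and anything after
                print x
                exit
            }
    }'
}
```

Usage: `echo "https://master_ip:$(kubectl -n kube-system get svc | node_port kubernetes-dashboard)"`.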

Then open https://master_ip:32666 in a browser, as shown in the figure below:

V. Example: connecting a frontend to a backend

[root@docker79 ~]# cat backend.yaml

kind: Service

apiVersion: v1

metadata:

name: apache-service

spec:

selector:

app: apache

tier: backend

ports:

- protocol: TCP

port: 80

targetPort: http

---

apiVersion: apps/v1beta1

kind: Deployment

metadata:

name: apache

spec:

replicas: 2

template:

metadata:

labels:

app: apache

tier: backend

track: stable

spec:

containers:

- name: apache

image: "192.168.20.79:5000/httpd:latest"

ports:

- name: http

containerPort: 80

volumeMounts:

- mountPath: /usr/local/apache2/htdocs

name: apache-volume

volumes:

- name: apache-volume

hostPath:

path: /data/httpd/www

[root@docker79 ~]#

[root@docker79 ~]# cat frontend.yaml

kind: Service

apiVersion: v1

metadata:

name: frontend

spec:

externalIPs:

- 192.168.20.79

selector:

app: nginx

tier: frontend

ports:

- protocol: "TCP"

port: 8080

targetPort: 80

type: LoadBalancer

---

apiVersion: apps/v1beta1

kind: Deployment

metadata:

name: frontend

spec:

replicas: 1

template:

metadata:

labels:

app: nginx

tier: frontend

track: stable

spec:

containers:

- name: nginx

image: "192.168.20.79:5000/nginx:latest"

lifecycle:

preStop:

exec:

command: ["/usr/sbin/nginx","-s","quit"]

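With the backend application in place, a frontend application can be created that connects to it. The frontend reaches the backend's worker Pods through a DNS name: "apache-service", the value of the name field in the backend Service configuration.

The Pods in the frontend Deployment run an nginx image that is already configured to forward requests to the backend: its upstream named hello points at apache-service. The nginx configuration file is as follows: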

[root@docker79 ~]# cat frontend.conf

upstream hello {

server apache-service;

}

server {

listen 80;

location / {

proxy_pass http://hello;

}

}

Use kubectl get endpoints to verify that the DNS endpoints are exposed:

[root@docker79 ~]# kubectl get ep kube-dns --namespace=kube-system

NAME ENDPOINTS AGE

kube-dns 172.18.0.5:53,172.18.0.5:53 2d

Use kubectl get service to verify that the DNS service is up:

[root@docker79 ~]# kubectl get svc --namespace=kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 2d

kubernetes-dashboard NodePort 10.98.176.199 <none> 443:32666/TCP 2d

Check that the DNS pod is running:

[root@docker79 ~]# kubectl get pods --namespace=kube-system -l k8s-app=kube-dns

NAME READY STATUS RESTARTS AGE

kube-dns-6f4fd4bdf-r9sk5 3/3 Running 9 2d

[root@docker79 ~]#