Each prediction is one of the classes 1 through 11.

First, load the data: the training data, training labels, test data, and test labels:

    if __name__ == "__main__":
        importTrainContentdata()
        importTestContentdata()
        importTrainlabeldata()
        importTestlabeldata()

    import xlrd  # reads the .xls label files

    traindata = []
    testdata = []
    trainlabel = []
    testlabel = []

    def importTrainContentdata():
        file = 'F:/goverment/myfinalcode/train_big.csv'
        ls = []
        with open(file) as fo:
            for line in fo:
                # Turn tabs, newlines and quotes into commas before splitting
                line = line.replace("\t", ",")
                line = line.replace("\n", ",")
                line = line.replace("\"", ",")
                ls.append(line.split(","))
        for i in ls:
            li = []
            for j in i:
                if j == '':
                    continue  # skip empty fields left by the replacements
                li.append(float(j))
            traindata.append(li)

    def importTestContentdata():
        file = 'F:/goverment/myfinalcode/test_big.csv'
        ls = []
        with open(file) as fo:
            for line in fo:
                line = line.replace("\t", ",")
                line = line.replace("\n", ",")
                line = line.replace("\"", ",")
                ls.append(line.split(","))
        for i in ls:
            li = []
            for j in i:
                if j == '':
                    continue
                li.append(float(j))
            testdata.append(li)

    # Import the class labels for the training and test data
    def importTrainlabeldata():
        file = 'F:/goverment/myfinalcode/train_big_label.xls'
        wb = xlrd.open_workbook(file)
        ws = wb.sheet_by_name("Sheet1")
        for r in range(ws.nrows):
            col = []
            for c in range(1):  # only the first column holds the label
                col.append(ws.cell(r, c).value)
            trainlabel.append(col)

    def importTestlabeldata():
        file = 'F:/goverment/myfinalcode/test_big_label.xls'
        wb = xlrd.open_workbook(file)
        ws = wb.sheet_by_name("Sheet1")
        for r in range(ws.nrows):
            col = []
            for c in range(1):
                col.append(ws.cell(r, c).value)
            testlabel.append(col)

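The training and test data are CSV files, and every value is read as a str. Each row has to be converted to float and collected into a list, and each of those row lists is then appended to traindata or testdata. Because the fields are split on ",", characters such as "\t" are first replaced with ",", and then ls.append(line.split(",")) stores the fields in ls. At that point they are still str, so I converted them to float in a separate step. I later found that it also works without the conversion, probably because the values get converted automatically later on.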
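As a compact illustration of the parsing step described above, here is a minimal sketch that applies the same replacements and float conversion to an in-memory sample. The function name `parse_rows` and the two sample rows are made up for this example; they are not part of the original script or data.

```python
import io

def parse_rows(lines):
    """Turn delimited text lines into lists of floats, skipping empty fields."""
    rows = []
    for line in lines:
        # Same normalization as the loaders: tabs, newlines and quotes become commas
        for ch in ("\t", "\n", "\""):
            line = line.replace(ch, ",")
        row = [float(field) for field in line.split(",") if field != ""]
        rows.append(row)
    return rows

# Hypothetical two-row sample standing in for the real CSV file
sample = io.StringIO('1,2.5\t3\n"4",5,,6\n')
rows = parse_rows(sample)
print(rows)
```

Passing an open file object instead of the `io.StringIO` sample should work the same way, since both yield one line per iteration.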

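Next I tried several classifiers, tuning their parameters with reference to

http://scikit-learn.org/stable/supervised_learning.html#supervised-learning

and then picked the best-performing one to push the accuracy as high as possible.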

    ''' #19%
    from sklearn import neighbors
    knn = neighbors.KNeighborsClassifier(n_neighbors=75, leaf_size=51, weights='distance', p=2)
    knn.fit(traindata, trainlabel)
    predict = knn.predict(testdata)
    '''
    ''' # this one did not work
    from sklearn.neural_network import MLPClassifier
    import numpy as np
    traindata = np.array(traindata)  # TypeError: cannot perform reduce with flexible type
    traindata = traindata.astype(float)
    trainlabel = np.array(trainlabel)
    trainlabel = trainlabel.astype(float)
    testdata = np.array(testdata)
    testdata = testdata.astype(float)
    model = MLPClassifier(activation='relu', alpha=1e-05, batch_size='auto',
                          beta_1=0.9, beta_2=0.999, early_stopping=False,
                          epsilon=1e-08, hidden_layer_sizes=(5, 2),
                          learning_rate='constant', learning_rate_init=0.001,
                          max_iter=200, momentum=0.9, nesterovs_momentum=True,
                          power_t=0.5, random_state=1, shuffle=True,
                          solver='lbfgs', tol=0.0001, validation_fraction=0.1,
                          verbose=False, warm_start=False)
    model.fit(traindata, trainlabel)
    predict = model.predict(testdata)
    '''
    ''' #19%
    from sklearn.tree import DecisionTreeClassifier
    model = DecisionTreeClassifier(class_weight='balanced', max_features=68, splitter='best', random_state=5)
    model.fit(traindata, trainlabel)
    predict = model.predict(testdata)

    # this one did not work
    from sklearn.naive_bayes import MultinomialNB
    clf = MultinomialNB(alpha=0.052).fit(traindata, trainlabel)
    #clf.fit(traindata, trainlabel)
    predict = clf.predict(testdata)
    '''
    '''17%
    from sklearn.svm import SVC
    clf = SVC(C=150, kernel='rbf', degree=51, gamma='auto', coef0=0.0,
              shrinking=False, probability=False, tol=0.001, cache_size=300,
              class_weight=None, verbose=False, max_iter=-1,
              decision_function_shape=None, random_state=None)
    clf.fit(traindata, trainlabel)
    predict = clf.predict(testdata)
    '''
    '''0.5%
    from sklearn.naive_bayes import GaussianNB
    import numpy as np
    gnb = GaussianNB()
    traindata = np.array(traindata)  # TypeError: cannot perform reduce with flexible type
    traindata = traindata.astype(float)
    trainlabel = np.array(trainlabel)
    trainlabel = trainlabel.astype(float)
    testdata = np.array(testdata)
    testdata = testdata.astype(float)
    predict = gnb.fit(traindata, trainlabel).predict(testdata)
    '''
    '''16%
    from sklearn.naive_bayes import BernoulliNB
    import numpy as np
    gnb = BernoulliNB()
    traindata = np.array(traindata)  # TypeError: cannot perform reduce with flexible type
    traindata = traindata.astype(float)
    trainlabel = np.array(trainlabel)
    trainlabel = trainlabel.astype(float)
    testdata = np.array(testdata)
    testdata = testdata.astype(float)
    predict = gnb.fit(traindata, trainlabel).predict(testdata)
    '''
    from sklearn.ensemble import RandomForestClassifier
    # Build the random forest multi-class classifier
    forest = RandomForestClassifier(n_estimators=500, random_state=5, warm_start=False,
                                    min_impurity_decrease=0.0, min_samples_split=15)
    predict = forest.fit(traindata, trainlabel).predict(testdata)

Then print the accuracy. I also write the predictions to a txt file, which makes them easier to analyze.

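    s = len(predict)
    # Write one prediction per line, followed by a final marker line
    with open('F:/goverment/myfinalcode/predict.txt', 'w') as f:
        for i in range(s):
            f.write(str(predict[i]))
            f.write('\n')
        f.write("done")
    k = 0
    print(s)
    for i in range(s):
        # Each testlabel entry is a one-element list, so compare its only value
        if testlabel[i][0] == predict[i]:
            k = k + 1
    print("Accuracy:", k * 1.0 / s)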

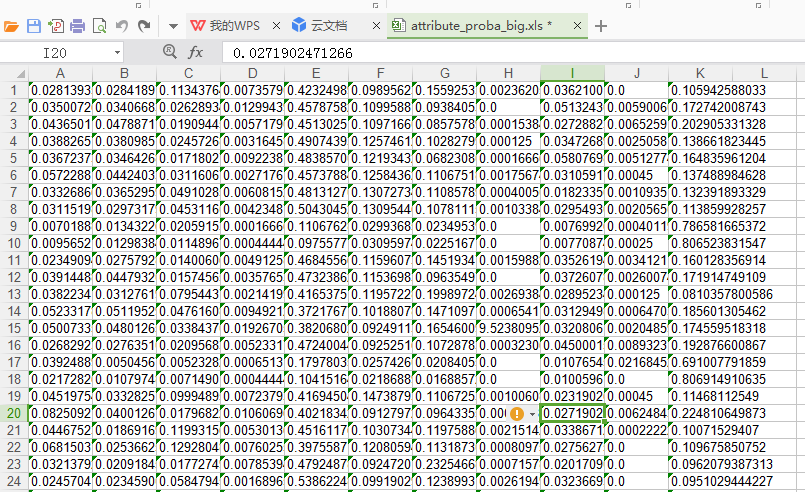
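To make the accuracy calculation easier to check by hand, here is a small sketch that computes both the overall accuracy and a per-class breakdown. The helper names and the sample labels are assumptions for illustration, not results from this post. It expects flat label lists, so the one-element lists in `testlabel` would first need flattening, for example with `[row[0] for row in testlabel]`.

```python
from collections import Counter

def accuracy(true_labels, predicted):
    """Fraction of positions where the prediction equals the true label."""
    if not true_labels or len(true_labels) != len(predicted):
        raise ValueError("label lists must be non-empty and of equal length")
    hits = sum(1 for t, p in zip(true_labels, predicted) if t == p)
    return hits / len(true_labels)

def per_class_accuracy(true_labels, predicted):
    """Accuracy computed separately for each true class."""
    total = Counter(true_labels)
    correct = Counter(t for t, p in zip(true_labels, predicted) if t == p)
    return {label: correct[label] / total[label] for label in total}

# Hypothetical labels, not taken from the real test set
y_true = [1, 1, 2, 3, 3, 3]
y_pred = [1, 2, 2, 3, 3, 1]
print(accuracy(y_true, y_pred))
print(per_class_accuracy(y_true, y_pred))
```

A per-class view like this can show whether a low overall score comes from a few classes that are almost never predicted correctly.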

Next, output the support (predicted probability) of every label:

    print('Starting to output the supports')
    attribute_proba = forest.predict_proba(testdata)
    #print(forest.predict_proba(testdata))  # print the probability of each label
    print(type(attribute_proba))
    import xlwt
    myexcel = xlwt.Workbook()
    sheet = myexcel.add_sheet('sheet')
    # One spreadsheet row per test record, one column per class
    for si, i in enumerate(attribute_proba):
        for sj, j in enumerate(i):
            sheet.write(si, sj, str(j))
    myexcel.save("attribute_proba_small.xls")

The result looks like this:

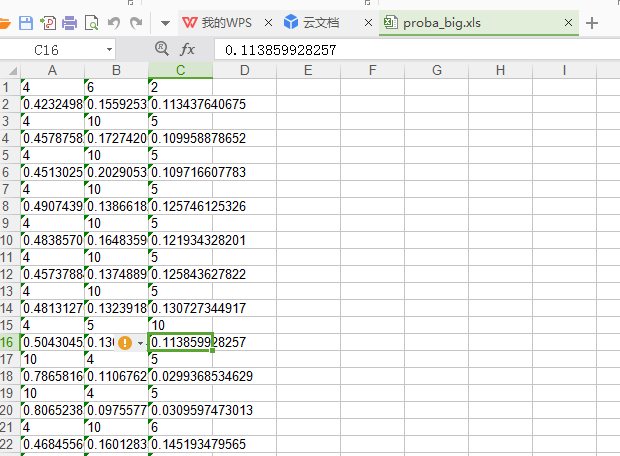
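If xlwt is not available, a probability table like this can also be written as plain CSV with the standard library. This is an alternative sketch and not what the post does. The function `write_matrix` and the sample numbers are hypothetical.

```python
import csv
import io

def write_matrix(rows, header, stream):
    """Write a header row followed by one CSV line per record."""
    writer = csv.writer(stream)
    writer.writerow(header)
    for row in rows:
        writer.writerow(row)

# Hypothetical probabilities for two records over three classes
proba = [[0.2, 0.5, 0.3], [0.7, 0.1, 0.2]]
classes = [1, 2, 3]

buffer = io.StringIO()
write_matrix(proba, classes, buffer)
print(buffer.getvalue())
```

To write to disk instead, the stream can be a file opened with `open(path, "w", newline="")`, which is what the `csv` module documentation recommends.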

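But that is not enough: I also want to output the index and support of the top 3 predictions for each record.

I defined a class attri, where key holds the index and weight holds the support.

Then, for each record, I scan all of its predicted probabilities (supports) 3 times. Each pass finds the largest probability, stores its index and value, and sets that entry to 0 so that the next pass finds the next largest one. After 3 passes, the stored results are written to Excel.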
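The selection step can also be sketched on its own with a sort, which avoids overwriting the probabilities. This is an alternative illustration under my own naming (`top_k` and the sample row are hypothetical), not the code used in this post. The returned indices are 0-based column positions; in scikit-learn, column i of `predict_proba` corresponds to `classes_[i]`, so the actual label has to be looked up there.

```python
def top_k(probabilities, k=3):
    """Return (index, probability) pairs for the k largest entries, largest first."""
    indexed = list(enumerate(probabilities))
    # The sort is stable, so tied probabilities keep their left-to-right order
    indexed.sort(key=lambda pair: pair[1], reverse=True)
    return indexed[:k]

# Hypothetical probabilities for one record over five classes
row = [0.05, 0.40, 0.10, 0.30, 0.15]
print(top_k(row))
```

The post's own code, which zeroes out each selected probability in place, follows.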

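    '''Next, output the indices of the three most probable classes for each record'''
    class attri:
        def __init__(self):
            self.key = 0
            self.weight = 0.0

    label = []
    for i in attribute_proba:
        lis = []
        k = 0
        while k < 3:
            k = k + 1
            p = 1  # stays 1 (with weight 0) if no positive probability is left
            mm = 0
            sj = -1
            for j in i:
                sj = sj + 1
                if j > mm:
                    mm = j
                    p = sj
            # sj counts from 0, so p is already a 0-based index; i[p-1] was wrong.
            # p is a column of predict_proba (see forest.classes_), not the label itself.
            i[p] = 0
            a = attri()
            a.key = p
            a.weight = mm
            lis.append(a)
        label.append(lis)

    print('Writing the selected entries')
    import xlwt
    myexcel = xlwt.Workbook()
    sheet = myexcel.add_sheet('sheet')
    # Two spreadsheet rows per record: indices on the first, supports on the second
    si = -2
    sj = -1
    for i in label:
        si = si + 2
        for j in i:
            sj = sj + 1
            sheet.write(si, sj, str(j.key))
            sheet.write(si + 1, sj, str(j.weight))
        sj = -1
    myexcel.save("proba_big.xls")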

The result looks like this:

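Teaching yourself is hard work. This is everything I have learned so far, and the accuracy can still be improved. If it helped you, please give it a like, hehe.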