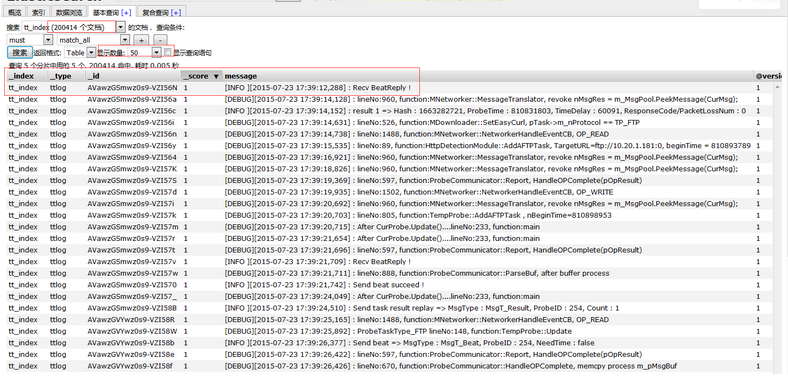

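Once you understand how Elasticsearch works, the next question is how to load the many different kinds of data you have on hand, for example:

1) single documents, such as JSON built by your own program;

2) batch data already in Elasticsearch's bulk-indexing format;

3) log files (`*.log`);

4) structured data stored in relational databases such as MySQL or Oracle;

5) unstructured data stored in MongoDB.

How do you import each of these into Elasticsearch? This article covers them one by one.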

1. Indexing a single document into Elasticsearch

This works much like a MySQL `INSERT` statement: it inserts one document.

```shell
[root@yang json_input]# curl -XPUT 'http://192.168.1.1:9200/blog/article/1' -d '
> {
>   "title":"New version of Elasticsearch released!",
>   "content":"Version 1.0 released today!",
>   "tags":["announce","elasticsearch","release"]
> }'
```

Check the result:

```shell
[root@yang json_input]# curl -XGET 'http://192.168.1.1:9200/blog/article/1?pretty'
{
  "_index" : "blog",
  "_type" : "article",
  "_id" : "1",
  "_version" : 1,
  "found" : true,
  "_source" : {
    "title" : "New version of Elasticsearch released!",
    "content" : "Version 1.0 released today!",
    "tags" : [ "announce", "elasticsearch", "release" ]
  }
}
```

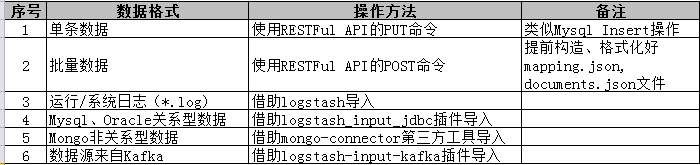

图形化显示如下:
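When a program builds the documents itself (case 1 in the list at the top), the same request can be constructed from code. The sketch below uses only Python's standard library; the helper name `build_index_request` is hypothetical, and it only builds the request so it can be inspected without a running cluster. It also sets a `Content-Type: application/json` header, which Elasticsearch 6.0 and later require (the curl command above, written for an older version, omits it).

```python
import json
import urllib.request


def build_index_request(host, index, doc_type, doc_id, doc):
    """Build (but do not send) a PUT request that indexes a single document.

    Equivalent to: curl -XPUT 'http://<host>/<index>/<doc_type>/<doc_id>' -d '<json>'
    """
    url = f"http://{host}/{index}/{doc_type}/{doc_id}"
    body = json.dumps(doc).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        # Elasticsearch 6.0+ rejects request bodies without a JSON content type;
        # older versions accept this header as well.
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    article = {
        "title": "New version of Elasticsearch released!",
        "content": "Version 1.0 released today!",
        "tags": ["announce", "elasticsearch", "release"],
    }
    req = build_index_request("192.168.1.1:9200", "blog", "article", 1, article)
    print(req.get_method(), req.full_url)
    # Against a live cluster, send it with:
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.read().decode("utf-8"))
```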

2. Bulk indexing into Elasticsearch

(1) Index mapping

Much as a SQL schema describes data, a mapping defines and controls the structure of the documents in an index.

```shell
[root@yang json_input]# cat mapping.json
{
  "book" : {
    "_all": {
      "enabled": false
    },
    "properties" : {
      "author" : {
        "type" : "string"
      },
      "characters" : {
        "type" : "string"
      },
      "copies" : {
        "type" : "long",
        "ignore_malformed" : false
      },
      "otitle" : {
        "type" : "string"
      },
      "tags" : {
        "type" : "string"
      },
      "title" : {
        "type" : "string"
      },
      "year" : {
        "type" : "long",
        "ignore_malformed" : false,
        "index" : "analyzed"
      },
      "available" : {
        "type" : "boolean"
      }
    }
  }
}
[root@yang json_input]# curl -XPUT 'http://110.0.11.120:9200/library/book/_mapping' -d @mapping.json
{"acknowledged":true}
```
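For a type with many fields, a mapping like the one above can also be generated from a simple field-to-type table. The sketch below (hypothetical helper `build_mapping`) produces the same legacy single-type layout used here (Elasticsearch 1.x/2.x style, with `string` fields and a per-type `_all` setting). Unlike `mapping.json`, it does not set `"index": "analyzed"` on `year`. Note that the put-mapping request above assumes the `library` index already exists.

```python
import json

NUMERIC_TYPES = {"long", "integer", "short", "byte", "double", "float"}


def build_mapping(doc_type, field_types, disable_all=True):
    """Build a legacy (single-type) mapping body.

    field_types maps field name -> Elasticsearch type, e.g. {"year": "long"}.
    """
    properties = {}
    for name, es_type in field_types.items():
        spec = {"type": es_type}
        if es_type in NUMERIC_TYPES:
            # false (also the default) makes Elasticsearch reject a document whose
            # value cannot be parsed as a number, instead of skipping the field.
            spec["ignore_malformed"] = False
        properties[name] = spec
    mapping = {"properties": properties}
    if disable_all:
        # Do not build the catch-all _all field, saving index space.
        mapping["_all"] = {"enabled": False}
    return {doc_type: mapping}


if __name__ == "__main__":
    fields = {
        "author": "string", "characters": "string", "copies": "long",
        "otitle": "string", "tags": "string", "title": "string",
        "year": "long", "available": "boolean",
    }
    print(json.dumps(build_mapping("book", fields), indent=2))
```

Redirecting the output to a file (for example `python build_mapping.py > mapping.json`, script name hypothetical) yields a body that can be sent with the same `curl -XPUT ... -d @mapping.json` command.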

(2) Bulk indexing: loading the prepared metadata lines and documents into Elasticsearch

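Elasticsearch can combine many operations into a single batch that is sent as one request. This lets you mix the following operations:

1) index: add a document to the index, or replace an existing document with the same ID;

2) delete: remove a document from the index;

3) create: add a new document, but only if no document with the same ID already exists in the index.

For processing efficiency, the request uses a line-based format: each operation starts with a line holding a JSON object that describes the operation, followed by a line holding the document JSON itself. Think of the first line as the metadata line and the second as the data line. Because the request is split on newlines, each JSON object must fit on a single line. The only exception to the two-line pattern is delete, which has only a metadata line.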

For example:

```shell
[root@yang json_input]# cat documents_03.json
{ "index": {"_index": "library", "_type": "book", "_id": "1"}}
{ "title": "All Quiet on the Western Front","otitle": "Im Westen nichts Neues","author": "Erich Maria Remarque","year": 1929,"characters": ["Paul Bäumer", "Albert Kropp", "Haie Westhus", "Fredrich Müller", "Stanislaus Katczinsky", "Tjaden"],"tags": ["novel"],"copies": 1, "available": true, "section" : 3}
{ "index": {"_index": "library", "_type": "book", "_id": "2"}}
{ "title": "Catch-22","author": "Joseph Heller","year": 1961,"characters": ["John Yossarian", "Captain Aardvark", "Chaplain Tappman", "Colonel Cathcart", "Doctor Daneeka"],"tags": ["novel"],"copies": 6, "available" : false, "section" : 1}
{ "index": {"_index": "library", "_type": "book", "_id": "3"}}
{ "title": "The Complete Sherlock Holmes","author": "Arthur Conan Doyle","year": 1936,"characters": ["Sherlock Holmes","Dr. Watson", "G. Lestrade"],"tags": [],"copies": 0, "available" : false, "section" : 12}
{ "index": {"_index": "library", "_type": "book", "_id": "4"}}
{ "title": "Crime and Punishment","otitle": "Преступлéние и наказáние","author": "Fyodor Dostoevsky","year": 1886,"characters": ["Raskolnikov", "Sofia Semyonovna Marmeladova"],"tags": [],"copies": 0, "available" : true}
```
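When the documents come from your own program, the two-line format is easy to produce from code. A minimal sketch, assuming documents are held as Python dicts; the helper name `build_bulk_body` is hypothetical, and it only handles the three operations listed above.

```python
import json


def build_bulk_body(actions):
    """Serialize bulk actions into the newline-delimited _bulk format.

    actions: iterable of (op, meta, doc) tuples, where op is "index", "create"
    or "delete", meta holds _index/_type/_id, and doc is the document dict
    (ignored for "delete").
    """
    lines = []
    for op, meta, doc in actions:
        if op not in ("index", "create", "delete"):
            raise ValueError(f"unsupported bulk operation: {op}")
        # Metadata line: one compact JSON object on a single line.
        lines.append(json.dumps({op: meta}, ensure_ascii=False))
        if op != "delete":
            # Data line: the document itself, also on a single line.
            lines.append(json.dumps(doc, ensure_ascii=False))
    # The bulk API expects the body to end with a newline.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    body = build_bulk_body([
        # Hypothetical document, for illustration only.
        ("index", {"_index": "library", "_type": "book", "_id": "5"}, {"title": "Example", "year": 2000}),
        ("delete", {"_index": "library", "_type": "book", "_id": "4"}, None),
    ])
    print(body, end="")
```

Write the result to a UTF-8 file and send it with curl's `--data-binary @file`. The `--data-binary` option matters here: `-d @file` strips newline characters from the file, which would break the line-based format.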

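To execute a bulk request, Elasticsearch provides the `_bulk` endpoint, available as `/_bulk`, `/index_name/_bulk`, or even `/index_name/type_name/_bulk`.

Elasticsearch returns detailed information for every operation, so the response to a large bulk request is itself large.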
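The longer endpoint forms let you shorten the metadata lines: actions that omit `_index` or `_type` take them from the endpoint path. The hypothetical helper below just assembles the three URL forms listed above; with `/library/book/_bulk`, a metadata line such as `{"index": {"_id": "1"}}` would be enough.

```python
def bulk_endpoint(host, index=None, doc_type=None):
    """Return one of the three _bulk URL forms.

    Any index/doc_type given here act as defaults for bulk actions whose
    metadata line omits _index/_type.
    """
    if doc_type is not None and index is None:
        # A type-level endpoint always includes its index: /index_name/type_name/_bulk.
        raise ValueError("doc_type requires index")
    segments = [host]
    if index is not None:
        segments.append(index)
    if doc_type is not None:
        segments.append(doc_type)
    segments.append("_bulk")
    return "http://" + "/".join(segments)


if __name__ == "__main__":
    for args in [(), ("library",), ("library", "book")]:
        print(bulk_endpoint("10.0.1.30:9200", *args))
```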

(3) The execution result:

```shell
[root@yang json_input]# curl -s -XPOST '10.0.1.30:9200/_bulk' --data-binary @documents_03.json
{"took":150,"errors":false,"items":[{"index":{"_index":"library","_type":"book","_id":"1","_version":1,"_shards":{"total":2,"successful":1,"failed":0},"status":201}},{"index":{"_index":"library","_type":"book","_id":"2","_version":1,"_shards":{"total":2,"successful":1,"failed":0},"status":201}},{"index":{"_index":"library","_type":"book","_id":"3","_version":1,"_shards":{"total":2,"successful":1,"failed":0},"status":201}},{"index":{"_index":"library","_type":"book","_id":"4","_version":1,"_shards":{"total":2,"successful":1,"failed":0},"status":201}}]}
```

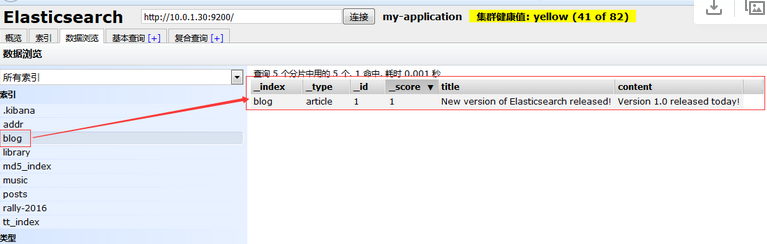

执行结果如下图所示:
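Because every operation gets its own entry in the response, it is easier to let a script pick out only the failures than to read the response by eye. A minimal sketch (the function name `failed_bulk_items` is hypothetical): it first checks the top-level `errors` flag, which is `false` in the response above, and only then scans `items` for entries carrying an `error` field.

```python
import json


def failed_bulk_items(response_text):
    """Return (operation, _id, status, error) for every failed item of a _bulk response."""
    response = json.loads(response_text)
    # "errors" is false when no item failed, so the item scan can be skipped.
    if not response.get("errors"):
        return []
    failures = []
    for item in response.get("items", []):
        # Each item has exactly one key naming its operation (index, create, delete, ...).
        op, result = next(iter(item.items()))
        # Failed items carry an "error" field; successful ones do not.
        if "error" in result:
            failures.append((op, result.get("_id"), result.get("status"), result["error"]))
    return failures


if __name__ == "__main__":
    sample = ('{"took":150,"errors":false,"items":[{"index":{"_index":"library",'
              '"_type":"book","_id":"1","_version":1,"status":201}}]}')
    print(failed_bulk_items(sample))  # prints [] because no item failed
```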

3. Importing log files into Elasticsearch with Logstash

The following example imports MprobeDebug.log, a log file from a real project, into ES.

```shell
[root@yang logstash_conf]# tail -f MrobeDebug.log
[DEBUG][2015-07-23 23:59:58,138] : After CurProbe.Update()....lineNo:233, function:main
[DEBUG][2015-07-23 23:59:58,594] : lineNo:960, function:MNetworker::MessageTranslator, revoke nMsgRes = m_MsgPool.PeekMessage(CurMsg);
[DEBUG][2015-07-23 23:59:58,608] : ProbeTaskType_FTP lineNo:148, function:TempProbe::Update
........
```
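The Logstash configuration in this section does not parse these lines: each line becomes one event whose `message` field holds the whole line. If level and timestamp should be separate, searchable fields, they have to be extracted, which in Logstash is typically done with a `grok` filter. The Python sketch below only illustrates the split such a filter would need for this log format; the field names `level`, `timestamp` and `message` are assumptions.

```python
import re

# Matches lines such as:
# [DEBUG][2015-07-23 23:59:58,138] : After CurProbe.Update()....lineNo:233, function:main
LOG_LINE = re.compile(
    r"^\[(?P<level>[A-Z]+)\]"                                        # log level, e.g. DEBUG
    r"\[(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3})\]"  # time with milliseconds
    r" : (?P<message>.*)$"                                           # free-text remainder
)


def parse_log_line(line):
    """Split one log line into level/timestamp/message, or return None if it does not match."""
    match = LOG_LINE.match(line.rstrip("\n"))
    return match.groupdict() if match else None


if __name__ == "__main__":
    print(parse_log_line("[DEBUG][2015-07-23 23:59:58,608] : ProbeTaskType_FTP lineNo:148, function:TempProbe::Update"))
```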

The core configuration file is as follows:

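```shell
[root@yang logstash_conf]# cat three.conf
input {
  file {
    path => "/opt/logstash/bin/logstash_conf/MrobeDebug.log"
    type => "ttlog"
    # Read the file from the start; the default ("end") only picks up lines
    # appended after Logstash begins watching the file
    start_position => "beginning"
  }
}
output {
  elasticsearch {
    hosts => "110.10.11.120:9200"
    index => "tt_index"
  }
  # Also echo each event to the console, one JSON object per line
  stdout { codec => json_lines }
}
```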

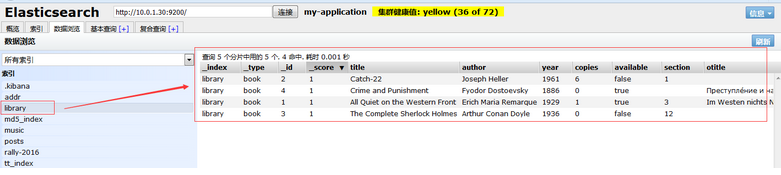

导入结果如下:

合计导入数据:200414条。

4. Importing data from MySQL/Oracle relational databases into Elasticsearch

See:

http://blog.csdn.net/laoyang360/article/details/51747266

http://blog.csdn.net/laoyang360/article/details/51824617

5. Importing data from the MongoDB non-relational database into Elasticsearch

See:

http://blog.csdn.net/laoyang360/article/details/51842822

Tool used: mongo-connector

1) Connect MongoDB to the replica-set members;

2) Initialize the replica-set configuration;

3) Synchronize MongoDB with ES.
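mongo-connector follows changes through MongoDB's oplog, which exists only on replica-set members; that is why the first two steps set up a replica set. As a hedged sketch of step 2, the function below builds the configuration document accepted by `rs.initiate()` in the mongo shell (or the `replSetInitiate` admin command). The set name `rs0` and the host addresses are hypothetical placeholders.

```python
def replica_set_config(set_name, hosts):
    """Build the document passed to rs.initiate() / the replSetInitiate command.

    Member _id values must be unique integers; hosts are "host:port" strings.
    """
    if not hosts:
        raise ValueError("a replica set needs at least one member")
    return {
        "_id": set_name,
        "members": [{"_id": i, "host": host} for i, host in enumerate(hosts)],
    }


if __name__ == "__main__":
    # Hypothetical set name and member addresses.
    print(replica_set_config("rs0", ["127.0.0.1:27017", "127.0.0.1:27018"]))
```

Each `mongod` must be started with the matching `--replSet rs0` option before this configuration is applied; with pymongo, the document could be sent as `client.admin.command("replSetInitiate", config)`.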