Copyright notice: all rights reserved. Please credit the source when reposting. Thank you. https://blog.csdn.net/weixin_35353187/article/details/81913766

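There are two ways to count word frequencies for a file stored in HDFS: a simple single-machine version, and a distributed version built on the MapReduce principle.

Method 1: simple single-machine mode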

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URI;
import java.net.URISyntaxException;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.Map.Entry;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

/**
 * Single-machine word count: reads /wc.txt from HDFS, counts the words
 * in local memory, and writes the result back to HDFS.
 *
 * @author Administrator
 */
public class SingleWC_zhang {

    public static void main(String[] args) throws IOException, InterruptedException, URISyntaxException {
        Map<String, Integer> map = new HashMap<>();
        Configuration conf = new Configuration();
        // Connect to the NameNode at hadoop01:9000 as user "root"
        FileSystem fs = FileSystem.get(new URI("hdfs://hadoop01:9000"), conf, "root");

        // Read the input file line by line and count every word
        FSDataInputStream inputStream = fs.open(new Path("/wc.txt"));
        BufferedReader reader = new BufferedReader(new InputStreamReader(inputStream, StandardCharsets.UTF_8));
        String line;
        while ((line = reader.readLine()) != null) {
            // Split on runs of whitespace so repeated spaces do not produce empty "words"
            for (String word : line.trim().split("\\s+")) {
                if (!word.isEmpty()) {
                    map.put(word, map.getOrDefault(word, 0) + 1);
                }
            }
        }
        reader.close();

        // Write one "word=count" line per word back to HDFS
        FSDataOutputStream create = fs.create(new Path("/part-r-000001"));
        for (Entry<String, Integer> entry : map.entrySet()) {
            create.write((entry.getKey() + "=" + entry.getValue() + "\r\n").getBytes(StandardCharsets.UTF_8));
        }
        create.close();
        fs.close();
        System.out.println("Job finished");
    }
}
```
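A minimal sketch for readers without a cluster at hand: the same counting loop as above, with the HDFS read and write replaced by an in-memory `List<String>` so it runs on its own (Java 9+ for `List.of`). The class `LocalWordCount` and its `count` method are hypothetical names, not part of the original project.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical local stand-in for SingleWC_zhang: same counting loop, no HDFS
public class LocalWordCount {

    // Counts whitespace-separated words across all lines
    public static Map<String, Integer> count(List<String> lines) {
        Map<String, Integer> counts = new HashMap<>();
        for (String line : lines) {
            for (String word : line.trim().split("\\s+")) {
                if (!word.isEmpty()) {
                    // merge() adds 1 to an existing count, or inserts 1 for a new word
                    counts.merge(word, 1, Integer::sum);
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        Map<String, Integer> result = count(List.of("hello world", "hello  hadoop", ""));
        System.out.println(result.get("hello")); // prints 2
    }
}
```

`Map.merge` with `Integer::sum` is an equivalent, slightly shorter form of the `getOrDefault` plus `put` pair used in `SingleWC_zhang`.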

Method 2: using the MapReduce principle

Map

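```java
import java.io.BufferedInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.URI;
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class MapTask_zhang {

    public static void main(String[] args) throws Exception {
        /*
         * taskId:      identifies which machine (map task) is running
         * file:        the file to count
         * startOffSet: byte offset where this task starts reading
         * length:      how many bytes this task is responsible for
         */
        int taskId = Integer.parseInt(args[0]);
        String file = args[1];
        long startOffSet = Long.parseLong(args[2]);
        long length = Long.parseLong(args[3]);

        FileSystem fs = FileSystem.get(new URI("hdfs://hadoop01:9000"), new Configuration(), "root");
        FSDataInputStream inputStream = fs.open(new Path(file));

        // Output files: one intermediate file per reduce task
        FSDataOutputStream out_tmp_1 = fs.create(new Path("/wordcount/tmp/part-m" + taskId + "-1"));
        FSDataOutputStream out_tmp_2 = fs.create(new Path("/wordcount/tmp/part-m" + taskId + "-2"));

        // Position the stream where this task should start reading
        inputStream.seek(startOffSet);
        InputStream in = new BufferedInputStream(inputStream);

        // Bytes consumed since startOffSet, counted on raw bytes so non-ASCII text is measured correctly
        long count = 0;
        byte[] lineBytes;

        // Every task except the one starting at offset 0 skips its first (usually partial) line;
        // the previous task reads one line past its boundary and therefore covers it
        if (startOffSet != 0 && (lineBytes = readLine(in)) != null) {
            count += lineBytes.length + 1;
        }

        // Read every line that starts inside [startOffSet, startOffSet + length]
        while (count <= length && (lineBytes = readLine(in)) != null) {
            count += lineBytes.length + 1;
            String line = new String(lineBytes, StandardCharsets.UTF_8).replace("\r", "");
            for (String word : line.trim().split("\\s+")) {
                if (word.isEmpty()) {
                    continue;
                }
                byte[] record = (word + "\t" + 1 + "\n").getBytes(StandardCharsets.UTF_8);
                // Equal strings have equal hash codes, so every occurrence of a word
                // lands in the same intermediate file and thus at the same reducer
                if ((word.hashCode() & Integer.MAX_VALUE) % 2 == 0) {
                    out_tmp_1.write(record);
                } else {
                    out_tmp_2.write(record);
                }
            }
        }

        in.close();
        out_tmp_1.close();
        out_tmp_2.close();
        fs.close();
    }

    // Reads bytes up to (not including) the next '\n'; returns null at end of stream
    private static byte[] readLine(InputStream in) throws IOException {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        int b;
        while ((b = in.read()) != -1 && b != '\n') {
            buf.write(b);
        }
        return (b == -1 && buf.size() == 0) ? null : buf.toByteArray();
    }
}
```

Reduce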

```java
import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.Map.Entry;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;

public class ReduceTask_zhang {

    public static void main(String[] args) throws Exception {
        // taskId selects which intermediate files (part-m*-<taskId>) this reducer handles
        int taskId = Integer.parseInt(args[0]);
        Map<String, Integer> map = new HashMap<>();
        FileSystem fs = FileSystem.get(new URI("hdfs://hadoop01:9000"), new Configuration(), "root");

        RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/wordcount/tmp/"), true);
        while (listFiles.hasNext()) {
            LocatedFileStatus file = listFiles.next();
            // Only process the files that belong to this reduce task
            if (file.getPath().getName().endsWith("-" + taskId)) {
                FSDataInputStream inputStream = fs.open(file.getPath());
                BufferedReader br = new BufferedReader(new InputStreamReader(inputStream, StandardCharsets.UTF_8));
                String line;
                while ((line = br.readLine()) != null) {
                    // Each record is "word\tcount"
                    String[] split = line.split("\t");
                    if (split.length < 2) {
                        continue; // skip blank or malformed lines
                    }
                    map.put(split[0], map.getOrDefault(split[0], 0) + Integer.parseInt(split[1]));
                }
                br.close(); // also closes inputStream
            }
        }

        // Write this reducer's result to HDFS
        FSDataOutputStream outputStream = fs.create(new Path("/wordcount/ret/part-r-" + taskId));
        for (Entry<String, Integer> entry : map.entrySet()) {
            outputStream.write((entry.getKey() + "=" + entry.getValue() + "\n").getBytes(StandardCharsets.UTF_8));
        }
        outputStream.close();
        fs.close();
    }
}
```
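The Map and Reduce programs only talk to each other through the intermediate files `part-m<taskId>-1` and `part-m<taskId>-2`. As a rough sketch of that shuffle, the code below keeps the records in memory instead: each word is routed to partition 1 or 2 by the parity of its `hashCode`, and each partition is then summed on its own. `ShuffleSketch`, `partition`, `mapLines`, and `reduce` are hypothetical names, and the lists of `word\t1` strings stand in for the HDFS files.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory replay of MapTask_zhang / ReduceTask_zhang (no HDFS)
public class ShuffleSketch {

    // Partition 1 for even hash codes, partition 2 otherwise
    static int partition(String word) {
        return (word.hashCode() & Integer.MAX_VALUE) % 2 == 0 ? 1 : 2;
    }

    // Map side: emits "word\t1" records into one list per partition (index 0 -> partition 1)
    static List<List<String>> mapLines(List<String> lines) {
        List<List<String>> out = new ArrayList<>();
        out.add(new ArrayList<>());
        out.add(new ArrayList<>());
        for (String line : lines) {
            for (String word : line.trim().split("\\s+")) {
                if (!word.isEmpty()) {
                    out.get(partition(word) - 1).add(word + "\t" + 1);
                }
            }
        }
        return out;
    }

    // Reduce side: sums the counts of all records in one partition
    static Map<String, Integer> reduce(List<String> records) {
        Map<String, Integer> counts = new HashMap<>();
        for (String record : records) {
            String[] kv = record.split("\t");
            counts.merge(kv[0], Integer.parseInt(kv[1]), Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Two "map tasks" over different lines, as if they had read different splits
        List<List<String>> m1 = mapLines(List.of("hello world", "hello hadoop"));
        List<List<String>> m2 = mapLines(List.of("hello hdfs"));
        for (int p = 0; p < 2; p++) {
            // Each reducer gathers its partition from every map task, like endsWith("-" + taskId)
            List<String> input = new ArrayList<>(m1.get(p));
            input.addAll(m2.get(p));
            System.out.println("part-r-" + (p + 1) + ": " + reduce(input));
        }
    }
}
```

Because a given word always hashes to the same partition, no word's count is split between the two reducers, so the two reducer outputs together hold the complete result and no word appears in both.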

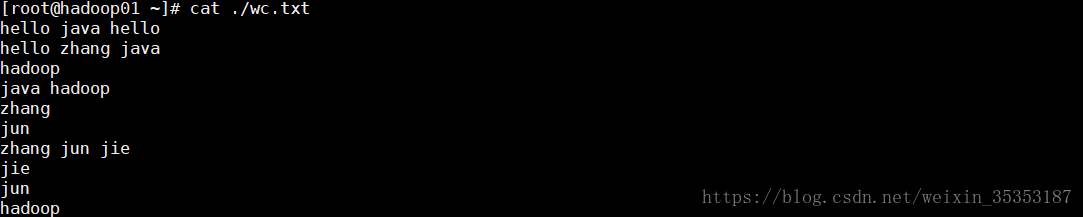

The input text whose word frequencies are analyzed:

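How to run:

1. Run Map first: right-click -> Run As -> Java Application.

2. Right-click -> Run As -> Run Configurations and enter the program arguments (taskId, file, start offset, length):

First run: 1 /wc.txt 0 50

Second run: 2 /wc.txt 50 50

3. Run Reduce: right-click -> Run As -> Java Application.

Right-click -> Run As -> Run Configurations and enter the program argument (taskId):

First run: 1

Second run: 2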
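To see why the arguments "0 50" and "50 50" neither lose nor double-count a line, the sketch below applies the usual line-boundary rule to an in-memory byte array: a split that does not start at offset 0 skips its first line, and every split reads each line that starts at or before `start + length`. `SplitSketch` and `readSplit` are hypothetical names, and the sample text is made up for illustration; it is not the author's `/wc.txt`.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of the split-boundary rule on an in-memory byte array
public class SplitSketch {

    // Returns the lines one map task would process for the split [start, start + length]
    static List<String> readSplit(byte[] data, int start, int length) {
        List<String> lines = new ArrayList<>();
        int pos = start;
        // A split that does not begin at 0 skips its first (possibly partial) line
        if (start != 0) {
            while (pos < data.length && data[pos] != '\n') pos++;
            pos++; // step over the '\n'
        }
        // Read every line whose first byte is at or before start + length
        while (pos <= start + length && pos < data.length) {
            int end = pos;
            while (end < data.length && data[end] != '\n') end++;
            lines.add(new String(data, pos, end - pos, StandardCharsets.UTF_8));
            pos = end + 1;
        }
        return lines;
    }

    public static void main(String[] args) {
        // Made-up sample input, 95 bytes in total
        byte[] data = ("hello world\nhello hadoop\nhello hdfs\nhadoop mapreduce\n"
                + "word count demo\nhello java\nsplit boundary\n").getBytes(StandardCharsets.UTF_8);
        // Same offsets as the two Map runs; task 1 gets the first four lines, task 2 the last three
        System.out.println("task 1: " + readSplit(data, 0, 50));
        System.out.println("task 2: " + readSplit(data, 50, 50));
    }
}
```

Here the line "hadoop mapreduce" starts at byte 36 and crosses offset 50, so task 1 reads it in full and task 2 skips its tail.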

Checking the results on the cluster:

Map output (under /wordcount/tmp/):

Reduce output (under /wordcount/ret/):