Copyright notice: this is the blogger's original article and may not be reproduced without the blogger's permission. https://blog.csdn.net/shursulei/article/details/78166457

Setting up Hadoop HA and a ZooKeeper cluster

I. Setting up the ZooKeeper cluster

| Machine | Node | myid |
|---|---|---|
| ZooKeeper server 1 | node1 | 1 |
| ZooKeeper server 2 | node2 | 2 |
| ZooKeeper server 3 | node3 | 3 |

- Unpack the archive and move it into place

tar -zxvf zookeeper-3.4.6.tar.gz

[root@node1 software]# mv zookeeper-3.4.6 /home/

- Create and configure zoo.cfg

[root@node1 zookeeper-3.4.6]# cd conf/

[root@node1 conf]# ls

configuration.xsl log4j.properties zoo_sample.cfg

[root@node1 conf]# vi zoo.cfg

tickTime=2000

dataDir=/opt/zookeeper

clientPort=2181

initLimit=5

syncLimit=2

server.1=node1:2888:3888

server.2=node2:2888:3888

server.3=node3:2888:3888

- Create the data directory and the myid file on each of the three ZooKeeper nodes

[root@node1 conf]# mkdir /opt/zookeeper

[root@node1 conf]# cd /opt/zookeeper

[root@node1 zookeeper]# vi myid

Write the matching ID into myid on each node (the file contains only the number):

node1: 1

node2: 2

node3: 3

- Copy ZooKeeper to node2 and node3

[root@node1 home]# scp -r zookeeper-3.4.6/ root@node2:/home/

[root@node1 home]# scp -r zookeeper-3.4.6/ root@node3:/home/
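Copying the installation directory does not carry over /opt/zookeeper, so node2 and node3 still need their own data directory and myid file. A minimal sketch of one way to create them, assuming hostnames follow the nodeN pattern used here; myid_for_host and write_myid are hypothetical helper names, not part of ZooKeeper:

```shell
#!/bin/sh
# Derive the ZooKeeper ID from a hostname of the form nodeN (node1 -> 1).
# Assumption: hostnames follow the nodeN pattern used in this article.
myid_for_host() {
    case "$1" in
        node[1-9]*) echo "${1#node}" ;;
        *) echo "unknown host: $1" >&2; return 1 ;;
    esac
}

# Write the myid file for the given host into a data directory.
# The directory defaults to /opt/zookeeper, the dataDir set in zoo.cfg.
write_myid() {
    host=$1
    data_dir=${2:-/opt/zookeeper}
    id=$(myid_for_host "$host") || return 1
    mkdir -p "$data_dir" && echo "$id" > "$data_dir/myid"
}
```

Running `write_myid "$(hostname -s)"` on each node would then create /opt/zookeeper/myid with the ID that matches that node's server.N line in zoo.cfg, provided `hostname -s` returns node1, node2, or node3.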

- Start, part 1 (configure environment variables)

## Configure the environment variables on node1, node2, and node3

vi ~/.bash_profile

export PATH

export JAVA_HOME=/usr/java/jdk1.7.0_79

export PATH=$PATH:$JAVA_HOME/bin

export HADOOP_HOME=/home/hadoop-2.5.1

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:/home/zookeeper-3.4.6/bin

- Start, part 2 (ZooKeeper must be started on node1, node2, and node3)

[root@node1 bin]# zkServer.sh start

JMX enabled by default

Using config: /home/zookeeper-3.4.6/bin/../conf/zoo.cfg

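Starting zookeeper ... STARTED

- Check the log

## The log file zookeeper.out is written to whichever directory you ran the start command from (here, the bin directory above)

[root@node1 bin]# tail -100 zookeeper.out

Once ZooKeeper has started, it serves an in-memory database; use zkCli.sh to connect to it.

Start zkCli.sh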

[root@node1 bin]# zkCli.sh

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] ls / ##list the children of the root znode

[zookeeper]

[zk: localhost:2181(CONNECTED) 1] get /zookeeper ##read a znode

cZxid = 0x0

ctime = Thu Jan 01 08:00:00 CST 1970

mZxid = 0x0

mtime = Thu Jan 01 08:00:00 CST 1970

pZxid = 0x0

cversion = -1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 0

numChildren = 1

[zk: localhost:2181(CONNECTED) 2] quit ##exit

Quitting...

2017-10-07 07:28:01,978 [myid:] - INFO [main:ZooKeeper@684] - Session: 0x15ef3fb2e4b0000 closed

2017-10-07 07:28:01,979 [myid:] - INFO [main-EventThread:ClientCnxn$EventThread@512] - EventThread shut down
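To confirm that the three servers actually formed a quorum, `zkServer.sh status` reports each server's role on a line starting with `Mode:`. The sketch below parses that line. zk_mode and check_quorum are hypothetical helper names, and check_quorum assumes passwordless ssh as root plus a java binary that non-interactive ssh sessions can find:

```shell
#!/bin/sh
# Extract the role from `zkServer.sh status` output read on stdin.
# Prints the text after "Mode:" (for example leader or follower);
# returns non-zero when no Mode line is present (server not running).
zk_mode() {
    mode=$(sed -n 's/^Mode: *//p')
    [ -n "$mode" ] && echo "$mode"
}

# Hypothetical helper: ask each ZooKeeper node for its role over ssh.
# Assumes the install path /home/zookeeper-3.4.6 used in this article.
check_quorum() {
    for host in node1 node2 node3; do
        role=$(ssh "root@$host" /home/zookeeper-3.4.6/bin/zkServer.sh status 2>/dev/null | zk_mode) \
            || role="not running"
        echo "$host: $role"
    done
}
```

With a healthy three-node ensemble, check_quorum should report one leader and two followers.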

II. Setting up the Hadoop HA environment

- Delete the masters file (on all four nodes)

[root@node1 bin]# rm -rf /home/hadoop-2.5.1/etc/hadoop/masters

- Delete Hadoop's temporary files (on all four nodes)

[root@node1 bin]# rm -rf /opt/hadoop-2.5

- Edit hdfs-site.xml

| Host | NameNode | DataNode | JournalNode |
|---|---|---|---|
| node1 | * | | |
| node2 | | * | * |
| node3 | | * | * |
| node4 | * | * | * |

<configuration>

<property>

<name>dfs.nameservices</name>

<value>shursulei</value>##a name of your own choosing

</property>

<property>

<name>dfs.ha.namenodes.shursulei</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.shursulei.nn1</name>

<value>node1:8020</value>##RPC address

</property>

<property>

<name>dfs.namenode.rpc-address.shursulei.nn2</name>

<value>node4:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.shursulei.nn1</name>

<value>node1:50070</value>##HTTP address

</property>

<property>

<name>dfs.namenode.http-address.shursulei.nn2</name>

<value>node4:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://node2:8485;node3:8485;node4:8485/abc</value>

</property>##shared edits directory; any three nodes will do here, though in production these would be three separate machines

<property>

<name>dfs.client.failover.proxy.provider.shursulei</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_dsa</value>##change this to the path of your own private key

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/opt/journalnode</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>##enable automatic failover

</property>

</configuration>

## (Do not paste any of the text after ##; it is only there as explanation.)

- Configure core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://shursulei</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/hadoop-2.5</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>node1:2181,node2:2181,node3:2181</value>

</property>

</configuration>

- Configure the slaves file

node2

node3

node4

- Copy all configuration files in this directory to the other nodes

[root@node1 hadoop]# scp ./* root@node2:/home/hadoop-2.5.1/etc/hadoop/

[root@node1 hadoop]# scp ./* root@node3:/home/hadoop-2.5.1/etc/hadoop/

[root@node1 hadoop]# scp ./* root@node4:/home/hadoop-2.5.1/etc/hadoop/
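Once the files are copied, a quick sanity check on any node is to ask Hadoop which values it actually resolves for the HA keys, using `hdfs getconf -confKey`. A minimal sketch follows; expect_conf and check_ha_conf are hypothetical helper names, and the expected values are simply the ones from the configuration files above:

```shell
#!/bin/sh
# Compare one configuration key against the value expected from this article.
expect_conf() {
    key=$1
    want=$2
    got=$(hdfs getconf -confKey "$key" 2>/dev/null)
    if [ "$got" = "$want" ]; then
        echo "ok   $key=$got"
    else
        echo "FAIL $key: expected '$want', got '$got'"
        return 1
    fi
}

# Check the keys that tie the nameservice, the NameNode IDs, and failover together.
# Returns non-zero if any key differs from the expected value.
check_ha_conf() {
    rc=0
    expect_conf fs.defaultFS hdfs://shursulei || rc=1
    expect_conf dfs.nameservices shursulei || rc=1
    expect_conf dfs.ha.namenodes.shursulei nn1,nn2 || rc=1
    expect_conf dfs.ha.automatic-failover.enabled true || rc=1
    return $rc
}
```

Running check_ha_conf on each of the four nodes would show whether any of them is still reading an older copy of the configuration.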

- Start the JournalNodes (configured on node2, node3, and node4)

## Start the daemon on each node individually; do not start the whole cluster yet

[root@node2 hadoop]# hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/hadoop-2.5.1/logs/hadoop-root-journalnode-node2.out

[root@node3 ~]# hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/hadoop-2.5.1/logs/hadoop-root-journalnode-node3.out

[root@node4 ~]# hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/hadoop-2.5.1/logs/hadoop-root-journalnode-node4.out

## Check the logs

[root@node2 logs]# tail -200 hadoop-root-journalnode-node2.log

[root@node3 logs]# tail -200 hadoop-root-journalnode-node3.log

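[root@node4 logs]# tail -200 hadoop-root-journalnode-node4.log

- Initialize and synchronize the two NameNodes (node1 and node4)

Format one of the NameNodes. The JournalNodes must already be running (the step above) before you format.

[root@node4 logs]# hdfs namenode -format ##format node4, then check the log to confirm it succeeded

Copy the files produced by formatting node4 over to node1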

[root@node4 opt]# cd hadoop-2.5/

[root@node4 hadoop-2.5]# pwd

/opt/hadoop-2.5

[root@node1 ~]# scp -r root@node4:/opt/hadoop-2.5 /opt/

fsimage_0000000000000000000.md5 100% 62 0.1KB/s 00:00

seen_txid 100% 2 0.0KB/s 00:00

fsimage_0000000000000000000 100% 351 0.3KB/s 00:00

VERSION

## The sync completed normally

- Initialize the HA state in ZooKeeper. Run this on either one of the NameNodes; here I chose node1

[root@node1 ~]# hdfs zkfc -formatZK

----------------------------

17/10/07 18:55:04 FATAL ha.ZKFailoverController: Unable to start failover controller. Unable to connect to ZooKeeper quorum at node1:2181,node2:2181,node3:2181. Please check the configured value for ha.zookeeper.quorum and ensure that ZooKeeper is running.

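This step fails here because ZooKeeper was not running. Start ZooKeeper on node1, node2, and node3, then run hdfs zkfc -formatZK again.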

[root@node1 logs]# zkServer.sh start

[root@node2 logs]# zkServer.sh start

[root@node3 logs]# zkServer.sh start

- Start the NameNodes

[root@node1 ~]# start-dfs.sh ##run this on node1, because it relies on node1's passwordless ssh to the other nodes

Starting namenodes on [node1 node4]

node4: starting namenode, logging to /home/hadoop-2.5.1/logs/hadoop-root-namenode-node4.out

node1: starting namenode, logging to /home/hadoop-2.5.1/logs/hadoop-root-namenode-node1.out

node3: starting datanode, logging to /home/hadoop-2.5.1/logs/hadoop-root-datanode-node3.out

node2: starting datanode, logging to /home/hadoop-2.5.1/logs/hadoop-root-datanode-node2.out

node4: starting datanode, logging to /home/hadoop-2.5.1/logs/hadoop-root-datanode-node4.out

Starting journal nodes [node2 node3 node4]

node3: journalnode running as process 1307. Stop it first.

node2: journalnode running as process 1359. Stop it first.

node4: journalnode running as process 1368. Stop it first.

Starting ZK Failover Controllers on NN hosts [node1 node4]

node1: starting zkfc, logging to /home/hadoop-2.5.1/logs/hadoop-root-zkfc-node1.out

node4: starting zkfc, logging to /home/hadoop-2.5.1/logs/hadoop-root-zkfc-node4.out

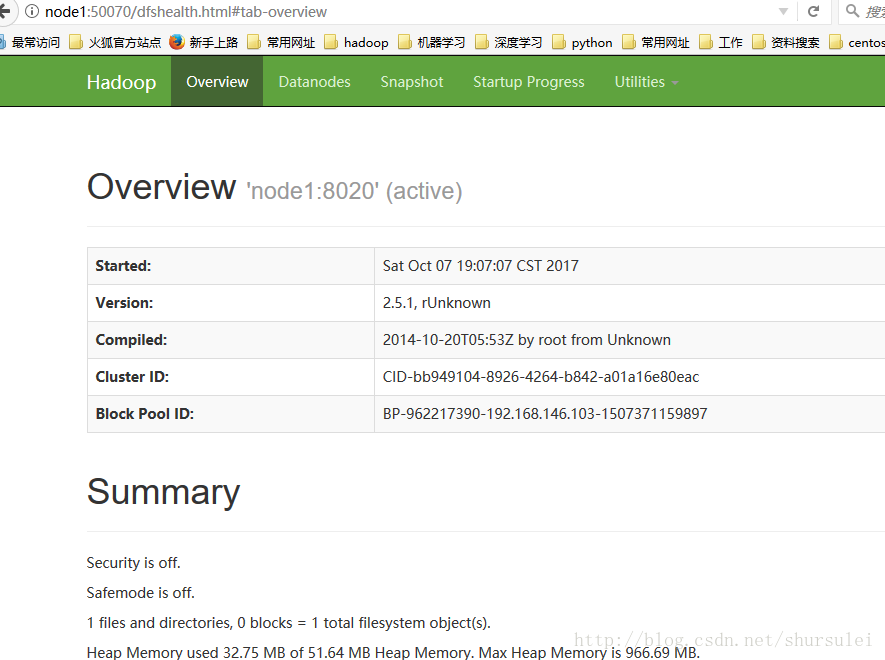

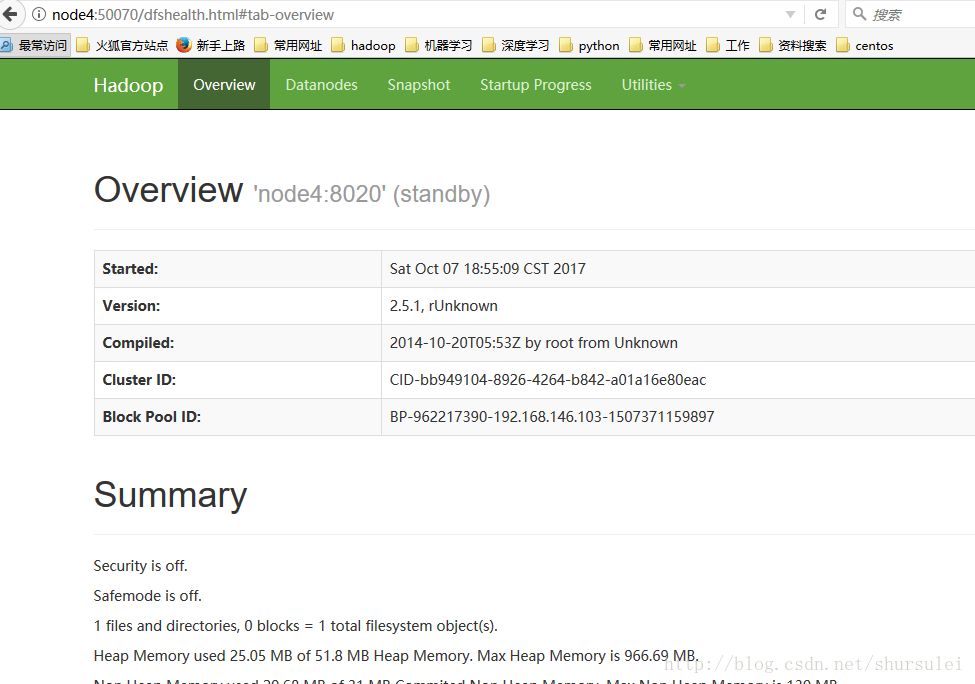

## Processes may report errors along the way; use kill to stop the failing process, then check the web UI

Kill one of the NameNodes (node1 here) and check whether node4 automatically switches to the active state (this still needs testing; check the zkfc log).

On node1: run stop-dfs.sh to stop everything, then confirm with jps.

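Configure passwordless ssh login for node2, node3, and node4

Generate an ssh key on node4 and copy the public key to node1 (sshfence needs node4 to be able to log in to node1 without a password)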

[root@node4 .ssh]# ls

authorized_keys id_dsa id_dsa.pub known_hosts

[root@node4 .ssh]# scp id_dsa.pub root@node1:/opt/

The authenticity of host 'node1 (192.168.146.100)' can't be established.

RSA key fingerprint is 94:04:c9:47:67:9e:8a:28:ce:05:3b:dd:92:5d:a8:b6.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node1,192.168.146.100' (RSA) to the list of known hosts.

root@node1's password:

id_dsa.pub

On node1, append the key to authorized_keys

[root@node1 ~]# cat /opt/id_dsa.pub >> ~/.ssh/authorized_keys

Verify that the NameNode fails over automatically:

[root@node1 ~]# jps

2697 DFSZKFailoverController

2432 NameNode

1468 QuorumPeerMain

2757 Jps

[root@node1 ~]# kill -9 2432
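After the kill, one way to see from the command line which NameNode is now active is `hdfs haadmin -getServiceState`, which prints active or standby for a given NameNode ID. A minimal sketch, where active_namenode is a hypothetical helper name and nn1, nn2 are the IDs defined in hdfs-site.xml above:

```shell
#!/bin/sh
# Print the ID of the first NameNode that reports "active".
# nn1 and nn2 come from dfs.ha.namenodes.shursulei in hdfs-site.xml.
# A NameNode that is down makes the query fail, which leaves state empty.
active_namenode() {
    for nn in nn1 nn2; do
        state=$(hdfs haadmin -getServiceState "$nn" 2>/dev/null)
        if [ "$state" = "active" ]; then
            echo "$nn"
            return 0
        fi
    done
    echo "no active NameNode found" >&2
    return 1
}
```

If the failover worked, active_namenode run on node4 should print nn2, the ID mapped to node4.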

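Refresh node4's web page to see whether it has taken over.

Addendum: steps for starting the cluster each time

1. Start ZooKeeper (zkServer.sh start)

2. Start the JournalNodes: ./hadoop-daemon.sh start journalnode

3. [root@node1 ~]# start-dfs.sh

4. To shut down: [root@node1 ~]# stop-dfs.sh

Next: Hadoop enterprise environment setup (part 3)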
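The startup steps in the addendum could be collected into one script run from node1. This is only a sketch under a few assumptions: passwordless ssh from node1 to every node, the install paths used in this article, and a java binary that non-interactive ssh sessions can find. The run and start_cluster helpers and the DRY_RUN variable are hypothetical names; by default the script only prints the commands it would execute.

```shell
#!/bin/sh
# Print the command when DRY_RUN is 1 (the default); execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Start the services in the order given in the addendum.
start_cluster() {
    # 1. ZooKeeper on node1, node2, and node3
    for host in node1 node2 node3; do
        run ssh "root@$host" /home/zookeeper-3.4.6/bin/zkServer.sh start
    done
    # 2. JournalNodes on node2, node3, and node4
    for host in node2 node3 node4; do
        run ssh "root@$host" /home/hadoop-2.5.1/sbin/hadoop-daemon.sh start journalnode
    done
    # 3. NameNodes, DataNodes, and ZKFCs, started from node1
    run /home/hadoop-2.5.1/sbin/start-dfs.sh
}
```

Calling start_cluster prints the seven commands for review; setting DRY_RUN=0 first would execute them instead.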