Copyright notice: This is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/daerzei/article/details/81119025

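This morning I opened Cloudera Manager and found that the HBase and Hive services were both reporting errors. Well, when there's a problem, you fix it.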

Let's start with the HBase error log.

HBase error:

```
Session 0x0 for server null, unexpected error, closing socket connection and attempting reconnect
java.net.NoRouteToHostException: No route to host
	at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
	at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:739)
	at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:350)
	at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1081)
Failed deleting my ephemeral node
org.apache.zookeeper.KeeperException$ConnectionLossException: KeeperErrorCode = ConnectionLoss for /hbase/rs/cm02.spark.com,60020,1530822114541
	at org.apache.zookeeper.KeeperException.create(KeeperException.java:99)
	at org.apache.zookeeper.KeeperException.create(KeeperException.java:51)
	at org.apache.zookeeper.ZooKeeper.delete(ZooKeeper.java:873)
	at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.delete(RecoverableZooKeeper.java:178)
	at org.apache.hadoop.hbase.zookeeper.ZKUtil.deleteNode(ZKUtil.java:1236)
	at org.apache.hadoop.hbase.zookeeper.ZKUtil.deleteNode(ZKUtil.java:1225)
	at org.apache.hadoop.hbase.regionserver.HRegionServer.deleteMyEphemeralNode(HRegionServer.java:1435)
	at org.apache.hadoop.hbase.regionserver.HRegionServer.run(HRegionServer.java:1098)
	at java.lang.Thread.run(Thread.java:745)
Region server exiting
java.lang.RuntimeException: HRegionServer Aborted
	at org.apache.hadoop.hbase.regionserver.HRegionServerCommandLine.start(HRegionServerCommandLine.java:68)
	at org.apache.hadoop.hbase.regionserver.HRegionServerCommandLine.run(HRegionServerCommandLine.java:87)
	at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)
	at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:127)
	at org.apache.hadoop.hbase.regionserver.HRegionServer.main(HRegionServer.java:2696)
```

Next, the Hive error log.

HiveServer2 error:

```
[main]: Error starting HiveServer2
java.lang.Error: Max start attempts 30 exhausted
	at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:550)
	at org.apache.hive.service.server.HiveServer2.access$700(HiveServer2.java:83)
	at org.apache.hive.service.server.HiveServer2$StartOptionExecutor.execute(HiveServer2.java:764)
	at org.apache.hive.service.server.HiveServer2.main(HiveServer2.java:637)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:606)
	at org.apache.hadoop.util.RunJar.run(RunJar.java:221)
	at org.apache.hadoop.util.RunJar.main(RunJar.java:136)
Caused by: java.lang.RuntimeException: java.net.NoRouteToHostException: No Route to Host from cm02.spark.com/192.168.60.55 to cm01.spark.com:8020 failed on socket timeout exception: java.net.NoRouteToHostException: No route to host; For more details see: http://wiki.apache.org/hadoop/NoRouteToHost
	at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:566)
	at org.apache.hive.service.cli.CLIService.applyAuthorizationConfigPolicy(CLIService.java:124)
	at org.apache.hive.service.cli.CLIService.init(CLIService.java:111)
	at org.apache.hive.service.CompositeService.init(CompositeService.java:59)
	at org.apache.hive.service.server.HiveServer2.init(HiveServer2.java:119)
	at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:513)
Caused by: java.net.NoRouteToHostException: No route to host
	at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
	at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:739)
	at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)
	at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530)
	at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:494)
	at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:648)
	at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:744)
	at org.apache.hadoop.ipc.Client$Connection.access$3000(Client.java:396)
	at org.apache.hadoop.ipc.Client.getConnection(Client.java:1555)
	at org.apache.hadoop.ipc.Client.call(Client.java:1478)
```

Both the HBase and the Hive logs contain "No route to host". The name points at the network path between machines, and for Hadoop services that almost always means node-to-node communication: the HBase RegionServer on cm02.spark.com lost its ZooKeeper connection, and HiveServer2 on cm02.spark.com (192.168.60.55) could not reach the HDFS NameNode at cm01.spark.com:8020. Despite the name, this error does not require an actual routing problem; a firewall that rejects the connection can produce it too. A quick search online pointed to exactly that, a firewall that was never turned off, so let's check the firewall status:

```bash
service iptables status
```
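The status output lists the active rules. Assuming a CentOS/RHEL 6-style system (which the `service` and `chkconfig` commands suggest), the stock ruleset ends with `-A INPUT -j REJECT --reject-with icmp-host-prohibited`. A Linux client whose connection is rejected that way gets `EHOSTUNREACH`, which Java reports as `NoRouteToHostException`, so the firewall alone explains the errors above. The bash sketch below picks those rules out of `iptables -S` output; the function name `find_host_prohibited_rejects` is hypothetical and not part of any tool.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of iptables): print every rule that rejects
# packets with "icmp-host-prohibited". Clients rejected this way see
# "No route to host" (Java: java.net.NoRouteToHostException).
#
# Input: `iptables -S` or `iptables-save` output on stdin.
# Exit status: 0 if at least one such rule was found, 1 otherwise.
find_host_prohibited_rejects() {
  local line found=1
  # The "|| [[ -n $line ]]" part also handles a last line with no newline.
  while IFS= read -r line || [[ -n "$line" ]]; do
    case "$line" in
      *"-j REJECT"*"--reject-with icmp-host-prohibited"*)
        printf '%s\n' "$line"
        found=0
        ;;
    esac
  done
  return "$found"
}
```

For example, `sudo iptables -S | find_host_prohibited_rejects` prints the offending rules on a node that still has the default firewall active, and prints nothing (exit status 1) once the firewall is stopped and the rules are flushed.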

Sure enough, it was still running. How did that happen? I was sure I had turned it off. Never mind, let's just shut it down:

# 关闭防火墙

service iptables stop

# 永久关闭防火墙

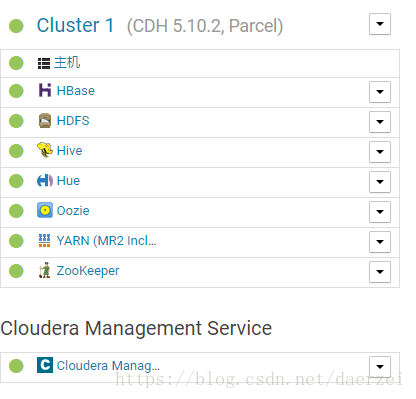

chkconfig iptables off然后重启一下HBase和Hive, 看着满屏的绿色,整个世界都清静了
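After the restart it can be worth confirming that both halves of the fix took effect: `chkconfig` no longer enables iptables in any runlevel, and the connection that failed in the Hive log (cm02.spark.com to cm01.spark.com:8020) now opens. Below is a minimal bash sketch. The function names are hypothetical, it assumes bash was built with `/dev/tcp` support and that coreutils `timeout` is installed, and it forces `LC_ALL=C` so the error messages it matches are in English even on a localized system.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `chkconfig --list iptables` output on stdin, e.g.
#   iptables  0:off 1:off 2:off 3:off 4:off 5:off 6:off
# Exit status 0 only if there is output and no runlevel is marked "on".
iptables_disabled_at_boot() {
  local out
  out=$(cat)
  [[ -n "$out" ]] || return 1
  ! grep -q '[0-6]:on' <<< "$out"
}

# Hypothetical helper: probe_tcp HOST PORT [TIMEOUT_SECONDS]
# Tries to open a TCP connection via bash's /dev/tcp redirection and prints
# one of: open, refused, no-route, timeout, error.
probe_tcp() {
  local host=$1 port=$2 secs=${3:-3} err rc
  # Inside `bash -c`, $0 and $1 are the host and port passed after the script.
  err=$(LC_ALL=C timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>&1)
  rc=$?
  if (( rc == 0 )); then
    echo open
  elif (( rc == 124 )); then       # coreutils timeout's exit code on expiry
    echo timeout
  elif [[ $err == *"Connection refused"* ]]; then
    echo refused                   # host reachable, nothing listening
  elif [[ $err == *"No route to host"* ]]; then
    echo no-route                  # e.g. rejected with icmp-host-prohibited
  else
    echo error                     # DNS failure, missing /dev/tcp, etc.
  fi
}
```

On cm02.spark.com, `chkconfig --list iptables | iptables_disabled_at_boot` should succeed once the firewall is disabled at boot, and `probe_tcp cm01.spark.com 8020` should print `open` if the NameNode is reachable; `no-route` would mean something is still rejecting the connection.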