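After setting up a Hadoop HA (high availability) cluster, I hit an error on startup and worked around it by formatting the NameNode. On the next start, no DataNode came up. Three solutions follow.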

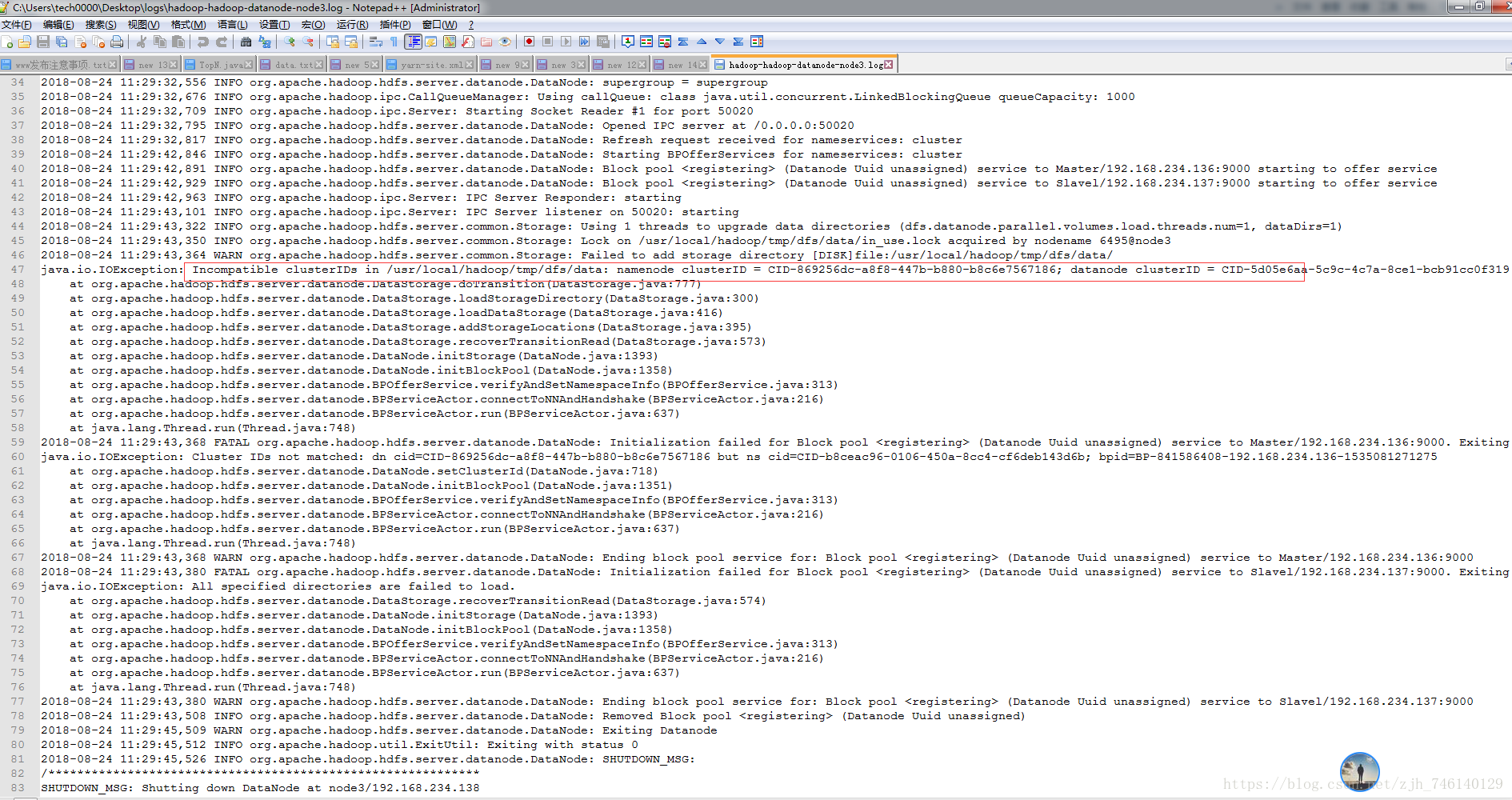

Error message:

2018-08-24 11:29:43,322 INFO org.apache.hadoop.hdfs.server.common.Storage: Using 1 threads to upgrade data directories (dfs.datanode.parallel.volumes.load.threads.num=1, dataDirs=1)

2018-08-24 11:29:43,350 INFO org.apache.hadoop.hdfs.server.common.Storage: Lock on /usr/local/hadoop/tmp/dfs/data/in_use.lock acquired by nodename 6495@node3

2018-08-24 11:29:43,364 WARN org.apache.hadoop.hdfs.server.common.Storage: Failed to add storage directory [DISK]file:/usr/local/hadoop/tmp/dfs/data/

java.io.IOException: Incompatible clusterIDs in /usr/local/hadoop/tmp/dfs/data: namenode clusterID = CID-869256dc-a8f8-447b-b880-b8c6e7567186; datanode clusterID = CID-5d05e6aa-5c9c-4c7a-8ce1-bcb91cc0f319

at org.apache.hadoop.hdfs.server.datanode.DataStorage.doTransition(DataStorage.java:777)

at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadStorageDirectory(DataStorage.java:300)

at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadDataStorage(DataStorage.java:416)

at org.apache.hadoop.hdfs.server.datanode.DataStorage.addStorageLocations(DataStorage.java:395)

at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:573)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1393)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1358)

at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:313)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:216)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:637)

at java.lang.Thread.run(Thread.java:748)

2018-08-24 11:29:43,368 FATAL org.apache.hadoop.hdfs.server.datanode.DataNode: Initialization failed for Block pool <registering> (Datanode Uuid unassigned) service to Master/192.168.234.136:9000. Exiting.

java.io.IOException: Cluster IDs not matched: dn cid=CID-869256dc-a8f8-447b-b880-b8c6e7567186 but ns cid=CID-b8ceac96-0106-450a-8cc4-cf6deb143d6b; bpid=BP-841586408-192.168.234.136-1535081271275

at org.apache.hadoop.hdfs.server.datanode.DataNode.setClusterId(DataNode.java:718)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1351)

at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:313)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:216)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:637)

at java.lang.Thread.run(Thread.java:748)

2018-08-24 11:29:43,368 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Ending block pool service for: Block pool <registering> (Datanode Uuid unassigned) service to Master/192.168.234.136:9000

2018-08-24 11:29:43,380 FATAL org.apache.hadoop.hdfs.server.datanode.DataNode: Initialization failed for Block pool <registering> (Datanode Uuid unassigned) service to Slavel/192.168.234.137:9000. Exiting.

java.io.IOException: All specified directories are failed to load.

at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:574)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1393)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1358)

at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:313)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:216)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:637)

at java.lang.Thread.run(Thread.java:748)

2018-08-24 11:29:43,380 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Ending block pool service for: Block pool <registering> (Datanode Uuid unassigned) service to Slavel/192.168.234.137:9000

2018-08-24 11:29:43,508 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Removed Block pool <registering> (Datanode Uuid unassigned)

2018-08-24 11:29:45,509 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Exiting Datanode

2018-08-24 11:29:45,512 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 0

2018-08-24 11:29:45,526 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down DataNode at node3/192.168.234.138

************************************************************/

Error screenshots:

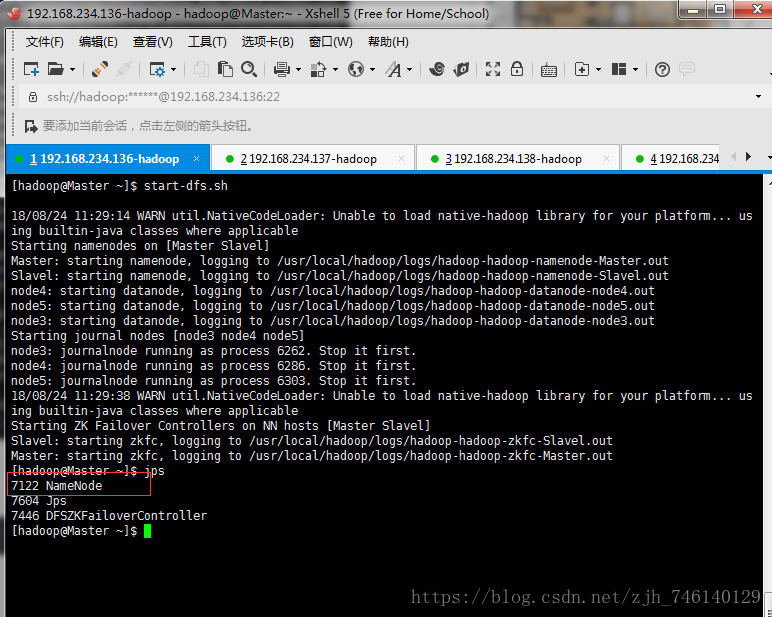

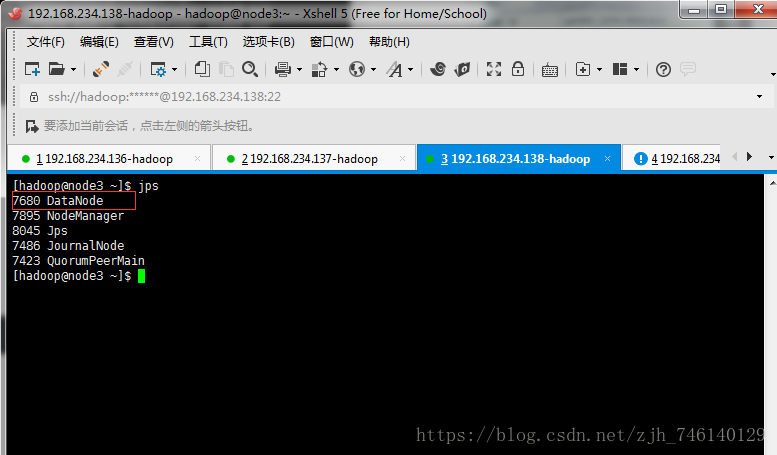

Checking the processes: only NameNode is running, and there is no DataNode.
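The `Incompatible clusterIDs` error in the log means the DataNode's storage directory still records the clusterID from before the format, while formatting the NameNode generated a new one. Both values are stored as a `clusterID=` line in the `current/VERSION` file of each storage directory. The following is a minimal sketch for comparing them; the function names are hypothetical, and the paths in the usage comment assume the default layout under the `/usr/local/hadoop/tmp` directory seen in the log, which may differ on your cluster.

```shell
#!/usr/bin/env bash
# Sketch: compare the clusterID recorded by a NameNode and a DataNode.
# cluster_id and compare_cluster_ids are hypothetical helper names.

# Print the value of the clusterID= line from an HDFS VERSION file.
cluster_id() {
  sed -n 's/^clusterID=//p' "$1"
}

# Compare two VERSION files: the first from the NameNode, the second from a DataNode.
# Returns 0 when the IDs match and 1 otherwise.
compare_cluster_ids() {
  local nn_id dn_id
  nn_id=$(cluster_id "$1")
  dn_id=$(cluster_id "$2")
  if [ -n "$nn_id" ] && [ "$nn_id" = "$dn_id" ]; then
    echo "clusterIDs match: $nn_id"
  else
    echo "clusterID mismatch: namenode=$nn_id datanode=$dn_id"
    return 1
  fi
}

# Usage (paths are assumptions; the two files normally live on different nodes,
# so copy one of them over first):
#   compare_cluster_ids /usr/local/hadoop/tmp/dfs/name/current/VERSION \
#                       /usr/local/hadoop/tmp/dfs/data/current/VERSION
```

A mismatch here confirms that the DataNode is refusing to register because of stale storage metadata, not because of a network or configuration problem.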

Solutions:

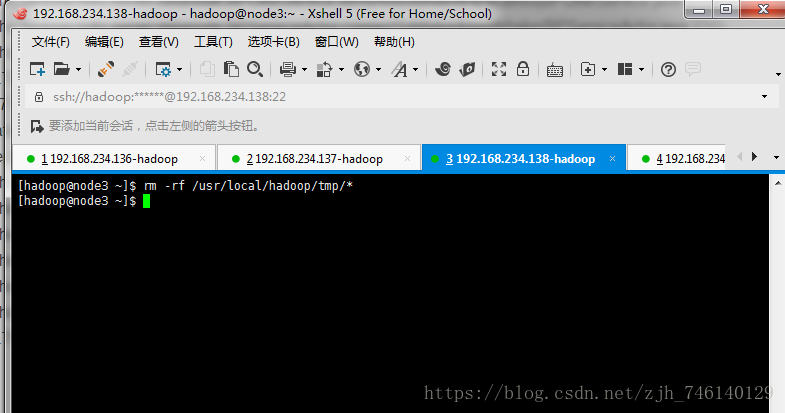

1. Clear the files under tmp in the Hadoop root directory and format the NameNode

① Clear the tmp files

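rm -rf /usr/java/hadoop/tmp/* (run this on every DataNode node; use the tmp path your cluster is actually configured with, which in the log above is /usr/local/hadoop/tmp)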

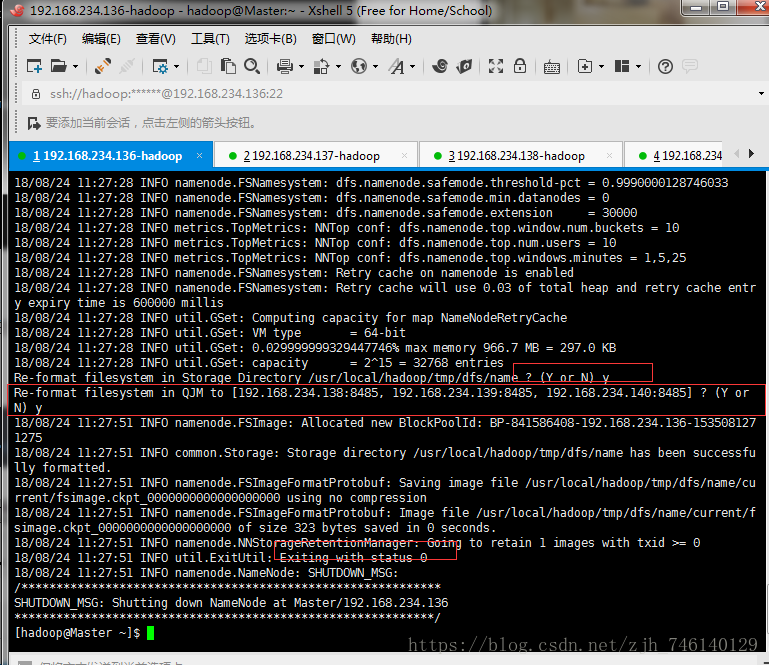

② Format the NameNode

hdfs namenode -format (the older spelling, hadoop namenode -format, is deprecated but equivalent)

scp -r /usr/local/hadoop/tmp hadoop@Slavel:/usr/local/hadoop/ (copies the freshly formatted metadata to the other NameNode, Slavel)

③ Start the cluster again
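The three steps of solution 1 could be tied together roughly as follows. This is a dry-run sketch, not a tested recovery procedure: it only prints each command unless `RUN=1` is set. The DataNode list, user name, and tmp path are assumptions taken from the log and commands above, and `run` and `reset_hdfs` are hypothetical helper names. Note that clearing tmp and reformatting destroys all data in HDFS, and that an HA setup using JournalNodes may need them running before the format succeeds.

```shell
#!/usr/bin/env bash
# Dry-run sketch of solution 1. Nothing is executed unless RUN=1.
TMP_DIR=${TMP_DIR:-/usr/local/hadoop/tmp}   # assumed hadoop.tmp.dir, as seen in the log
DATANODES=${DATANODES:-node3}               # space-separated DataNode hosts (assumed list)
STANDBY_NN=${STANDBY_NN:-Slavel}            # second NameNode, as in the scp command
SSH_USER=${SSH_USER:-hadoop}                # user from the scp command

# Print a command, and execute it only when RUN=1.
run() {
  echo "+ $*"
  if [ "${RUN:-0}" = "1" ]; then "$@"; fi
}

reset_hdfs() {
  local host
  run stop-dfs.sh
  # Step 1: clear the tmp directory on every DataNode.
  for host in $DATANODES; do
    # ${TMP_DIR:?} aborts if TMP_DIR is empty, so the path cannot collapse to "/*".
    run ssh "$SSH_USER@$host" "rm -rf ${TMP_DIR:?}/*"
  done
  # Step 2: format the NameNode, then copy its metadata to the other NameNode.
  run hdfs namenode -format
  run scp -r "$TMP_DIR" "$SSH_USER@$STANDBY_NN:$(dirname "$TMP_DIR")/"
  # Step 3: start HDFS again.
  run start-dfs.sh
}

# Usage: call reset_hdfs to preview the commands; set RUN=1 first to execute them.
```

Printing the commands first makes it easy to check the hosts and paths before anything destructive runs.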