
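If you want to use OpenCV's Java API directly in a project while also writing your own C++ code, you need to combine the Java API with JNI. This post walks through one way to do that, using face detection as the example.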

I. Create the project

Create a project named FaceDetection.

II. Add the OpenCV Java API

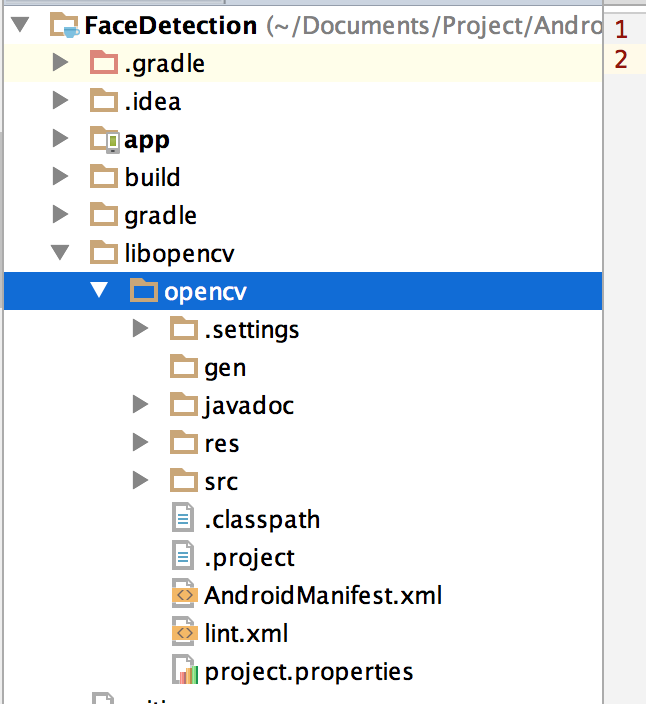

1. In the project, create a folder named libopencv to hold the OpenCV library module.

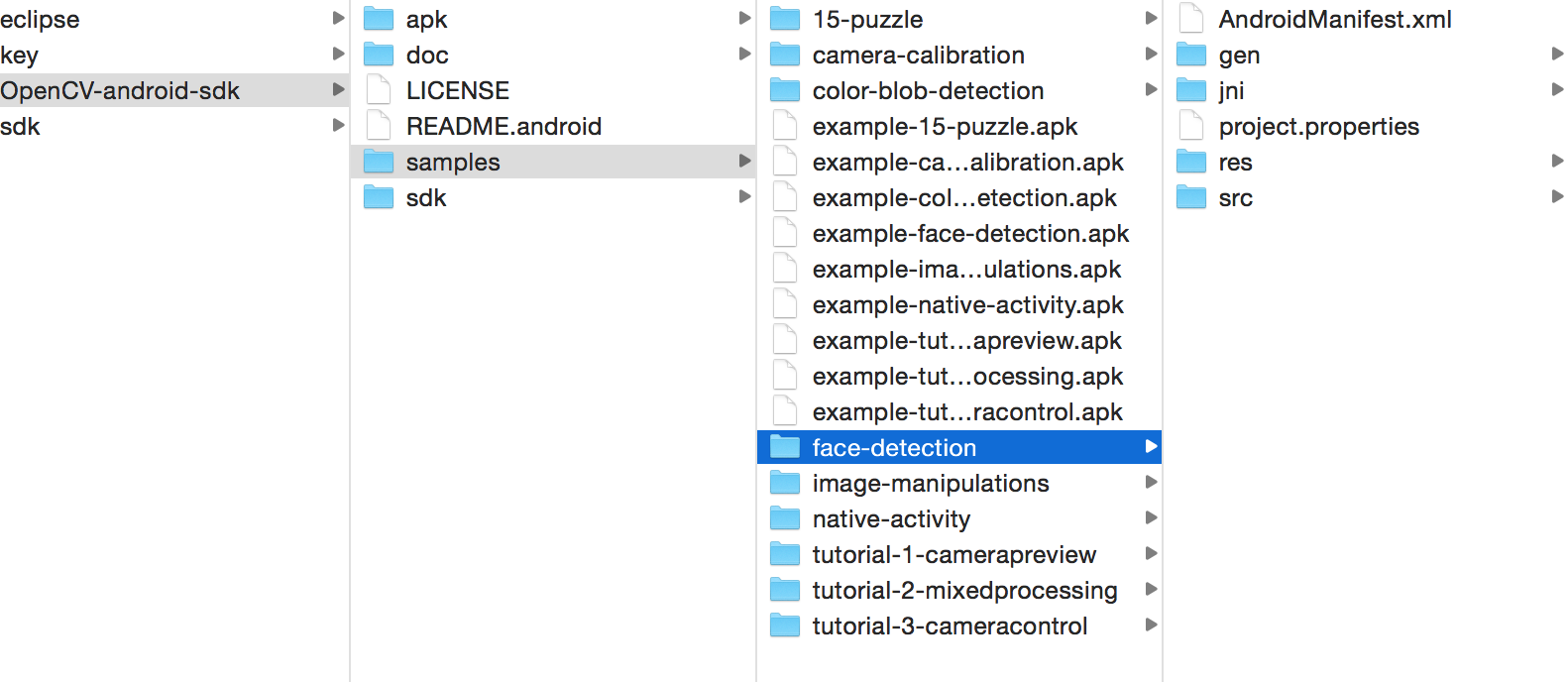

2. Copy Android/OpenCV-android-sdk/sdk/java into the libopencv directory and rename the copy to opencv.

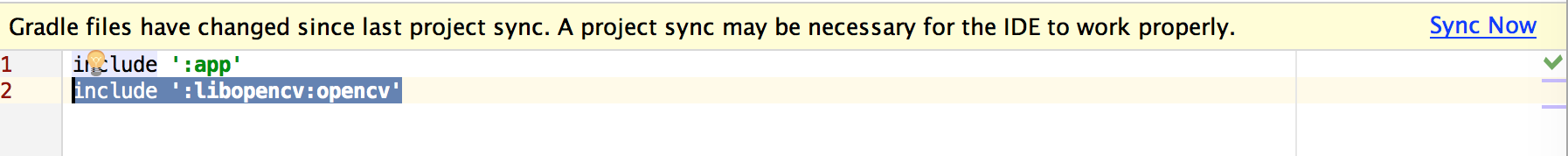

3. Open settings.gradle, add `include ':libopencv:opencv'`, and click Sync Now.
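By default, Gradle resolves a project path such as `:libopencv:opencv` to a directory by turning each `:`-separated segment into a folder under the root project, so this `include` only works if the SDK's java folder ended up at `libopencv/opencv`. Any `project(...)` dependency on the module has to use the same path. The minimal Java sketch below illustrates that default mapping; `GradlePaths` is a hypothetical name, and the sketch assumes settings.gradle does not override `projectDir`.

```java
import java.util.Arrays;
import java.util.stream.Collectors;

/** Hypothetical helper: mimics Gradle's default project-path-to-directory mapping. */
public class GradlePaths {

    /** ":libopencv:opencv" -> "libopencv/opencv", relative to the root project directory. */
    public static String toRelativeDir(String projectPath) {
        if (!projectPath.startsWith(":") || projectPath.length() < 2) {
            throw new IllegalArgumentException("Expected a path like ':a:b', got " + projectPath);
        }
        // Drop the leading ':' and join the remaining segments with '/'.
        return Arrays.stream(projectPath.substring(1).split(":"))
                .collect(Collectors.joining("/"));
    }

    public static void main(String[] args) {
        System.out.println(toRelativeDir(":libopencv:opencv")); // libopencv/opencv
    }
}
```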

4. In the opencv folder, create a build.gradle file and paste in the following content, replacing the values as the comments indicate. Then click Sync Now.

```groovy
apply plugin: 'com.android.library'

buildscript {
    repositories {
        mavenCentral()
    }
    dependencies {
        classpath 'com.android.tools.build:gradle:1.3.0' // same as in the project-level build.gradle
    }
}

android {
    compileSdkVersion 22        // same as in app/build.gradle
    buildToolsVersion "22.0.1"  // same as in app/build.gradle

    defaultConfig {
        minSdkVersion 15        // same as in app/build.gradle
        targetSdkVersion 22     // same as in app/build.gradle
        versionCode 2411        // change to the OpenCV version you downloaded
        versionName "2.4.11"    // change to the OpenCV version you downloaded
    }

    sourceSets {
        main {
            manifest.srcFile 'AndroidManifest.xml'
            java.srcDirs = ['src']
            resources.srcDirs = ['src']
            res.srcDirs = ['res']
            aidl.srcDirs = ['src']
        }
    }
}
```
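The `versionCode`/`versionName` pair above (2411 for 2.4.11) reads like the three version components written side by side. The small Java sketch below derives the code from the name under that assumption; the concatenation rule is inferred from this single example, not taken from OpenCV documentation, and `OpenCvVersionCode` is a hypothetical name.

```java
/** Hypothetical helper: derives a versionCode from an OpenCV versionName. */
public class OpenCvVersionCode {

    /** "2.4.11" -> 2411, ASSUMING major, minor and patch are simply concatenated. */
    public static int fromVersionName(String versionName) {
        String[] parts = versionName.split("\\.");
        if (parts.length != 3) {
            throw new IllegalArgumentException("Expected major.minor.patch, got " + versionName);
        }
        StringBuilder digits = new StringBuilder();
        for (String part : parts) {
            Integer.parseInt(part); // validate: throws NumberFormatException if not numeric
            digits.append(part);
        }
        return Integer.parseInt(digits.toString());
    }

    public static void main(String[] args) {
        System.out.println(fromVersionName("2.4.11")); // 2411
    }
}
```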

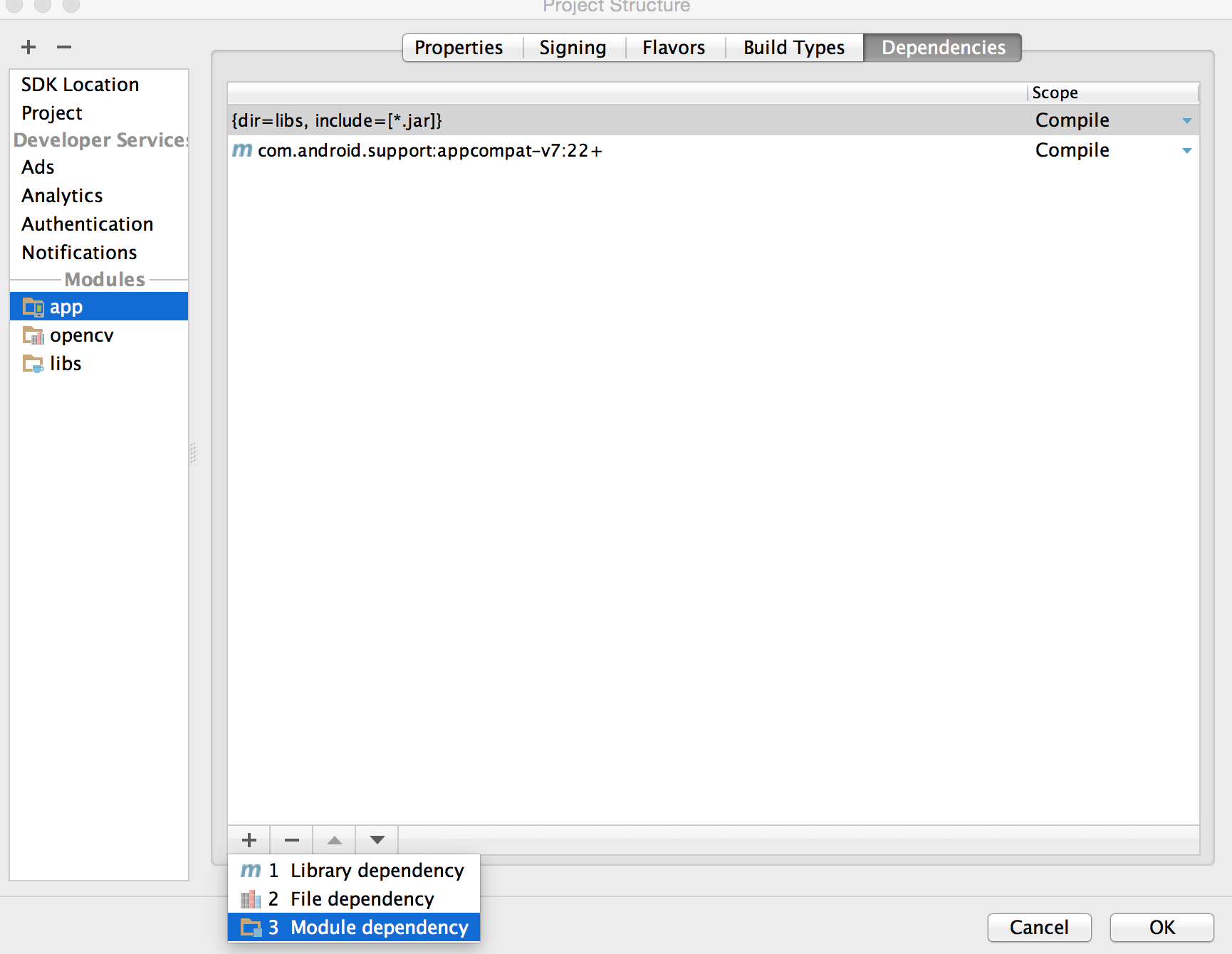

5. Add the opencv dependency to app: right-click app, choose Open Module Settings, and add opencv as a module dependency.

III. Add the OpenCV face detection JNI code

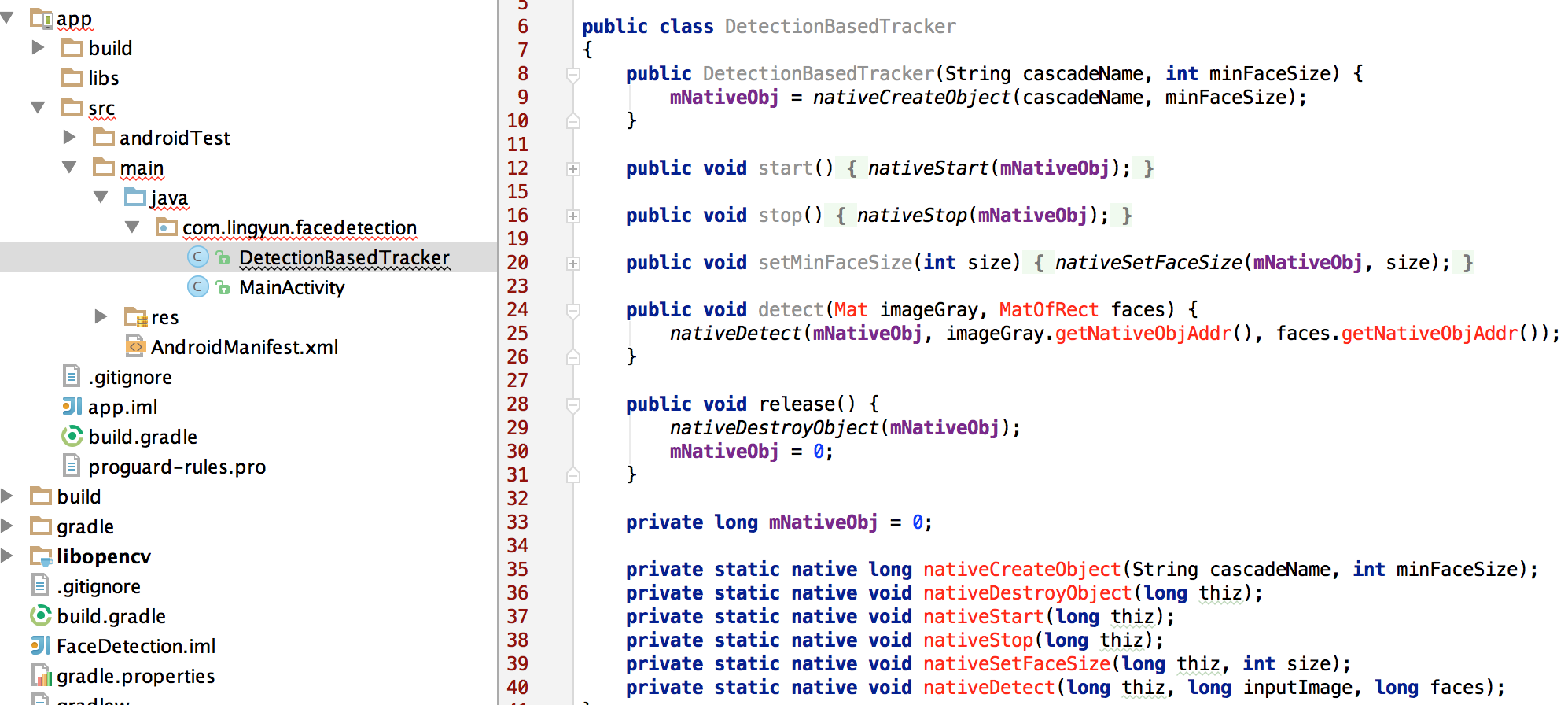

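1. Open the face detection sample that ships with OpenCV and copy samples/face-detection/src/org/opencv/samples/facedetect/DetectionBasedTracker.java into the app's package. Remember to change the package declaration in the Java file to your current package.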

The errors appear because the class's native methods are not yet backed by any native code.
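What this means at runtime: a `native` method is only a declaration, and the VM looks up its C/C++ implementation when the method is first called. If no loaded library provides it, the call fails with `UnsatisfiedLinkError`. The plain-Java sketch below demonstrates this on a desktop JVM; the class name is hypothetical, and the method signature is only modeled on the sample's `nativeCreateObject`.

```java
/** Hypothetical demo: calling a native method that no loaded library implements. */
public class MissingNativeDemo {

    // Declared but never implemented: no System.loadLibrary(...) call provides it.
    private static native long nativeCreateObject(String cascadeName, int minFaceSize);

    /** Returns true if the call fails with UnsatisfiedLinkError, as expected. */
    public static boolean callFailsWithoutLibrary() {
        try {
            nativeCreateObject("lbpcascade_frontalface.xml", 0);
            return false;
        } catch (UnsatisfiedLinkError e) {
            System.out.println("As expected: " + e.getMessage());
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(callFailsWithoutLibrary());
    }
}
```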

2. In app, create a file named autojavah.sh that generates the jni folder and the .h header file. Its contents are:

```sh
#!/bin/sh

export ProjectPath=$(cd "../$(dirname "$1")"; pwd)
export TargetClassName="com.lingyun.facedetection.DetectionBasedTracker" # replace with your package name + the class that declares the native methods
export SourceFile="${ProjectPath}/app/src/main/java" # Java source directory
export TargetPath="${ProjectPath}/app/src/main/jni"  # output jni directory

cd "${SourceFile}"
# Print the command, then run it
echo javah -d "${TargetPath}" -classpath "${SourceFile}" "${TargetClassName}"
# Note: javah was removed in JDK 10; newer JDKs generate headers with `javac -h` instead
javah -d "${TargetPath}" -classpath "${SourceFile}" "${TargetClassName}"
```

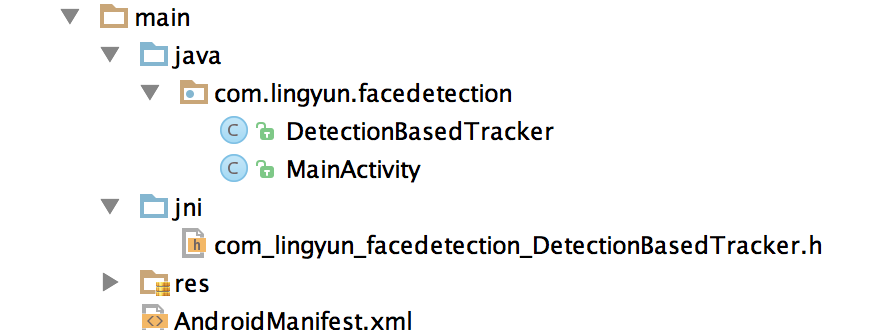

3. Right-click autojavah.sh and run it. If the required plugin is not installed, Android Studio will ask whether to download and install it.

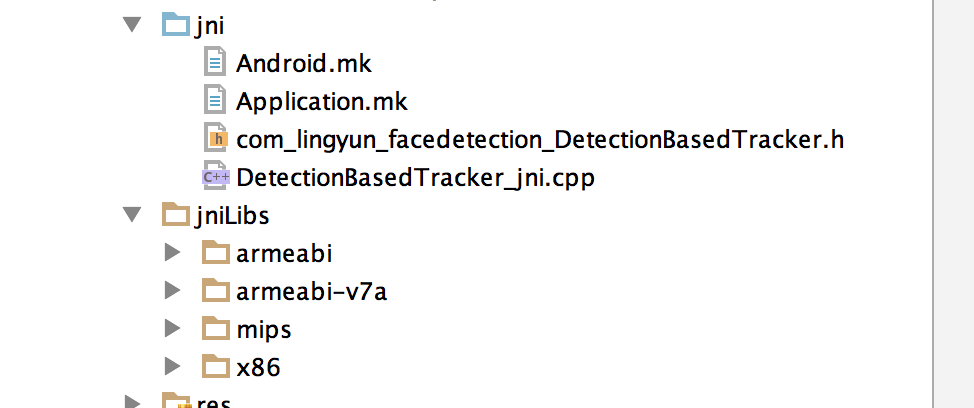

A jni directory containing a .h file now appears.

4. Copy the .cpp and .mk files from OpenCV-android-sdk/samples/face-detection/jni into the jni directory.

Change the #include in the .cpp file to:

```cpp
#include <com_lingyun_facedetection_DetectionBasedTracker.h>
```

Rename the functions to match the names declared in the .h file; there are six of them.
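The renaming follows the JNI naming convention: each exported function is named `Java_`, then the fully qualified class name with `.` replaced by `_`, then `_` and the method name, with special characters escaped (`_` becomes `_1`, for instance). The sample's functions are named after `org.opencv.samples.facedetect.DetectionBasedTracker`, which is why they must change once the class moves to your package. The Java sketch below computes these names so the renamed functions can be checked against the header. It covers only the short form for non-overloaded methods (overloads additionally append `__` plus the mangled argument signature); `JniNames` is a hypothetical helper, and `nativeDetect` is assumed to be one of the sample's method names.

```java
/** Hypothetical helper: computes JNI names for a class and its native methods. */
public class JniNames {

    /** Escapes a name following the JNI mangling rules. */
    static String mangle(String s) {
        StringBuilder out = new StringBuilder();
        for (char c : s.toCharArray()) {
            if (c == '.' || c == '/') {
                out.append('_');                       // package separator
            } else if (c == '_') {
                out.append("_1");
            } else if (c == ';') {
                out.append("_2");
            } else if (c == '[') {
                out.append("_3");
            } else if ((c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') || (c >= '0' && c <= '9')) {
                out.append(c);
            } else {
                out.append(String.format("_0%04x", (int) c)); // any other char, e.g. '$' -> _00024
            }
        }
        return out.toString();
    }

    /** Short JNI function name for a non-overloaded native method. */
    public static String functionName(String className, String methodName) {
        return "Java_" + mangle(className) + "_" + mangle(methodName);
    }

    /** Header file name for a class like this one (qualified name, '.' -> '_'). */
    public static String headerName(String className) {
        return mangle(className) + ".h";
    }

    public static void main(String[] args) {
        String cls = "com.lingyun.facedetection.DetectionBasedTracker";
        System.out.println(headerName(cls));
        System.out.println(functionName(cls, "nativeDetect"));
    }
}
```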

Modify Android.mk:

```makefile
LOCAL_PATH := $(call my-dir)

include $(CLEAR_VARS)

OPENCV_CAMERA_MODULES:=on
OPENCV_INSTALL_MODULES:=off
OPENCV_LIB_TYPE:=STATIC

# Replace the next line with the path to your own OpenCV SDK
include /Users/lichuanpeng/Documents/Program_File/Android/OpenCV-android-sdk/sdk/native/jni/OpenCV.mk

LOCAL_SRC_FILES := DetectionBasedTracker_jni.cpp
LOCAL_C_INCLUDES += $(LOCAL_PATH)
LOCAL_LDLIBS += -lm -llog

LOCAL_MODULE := detection_based_tracker

include $(BUILD_SHARED_LIBRARY)
```
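`LOCAL_MODULE := detection_based_tracker` determines the library file name (`libdetection_based_tracker.so`), while `System.loadLibrary` takes the bare name without the `lib` prefix and `.so` suffix; `System.mapLibraryName` shows the platform's mapping. The sketch below also loads libraries in a fixed order and reports the first one that fails, mirroring the sample's rule that the tracker library be loaded only after OpenCV is initialized. `NativeLibs` and `loadInOrder` are hypothetical names; on a desktop JVM the Android libraries will normally not be found.

```java
/** Hypothetical helper around System.loadLibrary. */
public class NativeLibs {

    /** Platform file name, e.g. "libdetection_based_tracker.so" on Linux and Android. */
    public static String fileNameFor(String libName) {
        return System.mapLibraryName(libName);
    }

    /**
     * Loads the libraries in the given order (dependencies first) and returns the name of
     * the first one that could not be loaded, or null if all of them loaded.
     */
    public static String loadInOrder(String... libNames) {
        for (String name : libNames) {
            try {
                System.loadLibrary(name);
            } catch (UnsatisfiedLinkError e) {
                return name;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        System.out.println(fileNameFor("detection_based_tracker"));
        System.out.println(loadInOrder("opencv_java", "detection_based_tracker"));
    }
}
```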

Modify Application.mk:

```makefile
APP_STL:=gnustl_static
APP_CPPFLAGS:=-frtti -fexceptions
APP_ABI := armeabi armeabi-v7a x86 mips
APP_PLATFORM := android-8
```

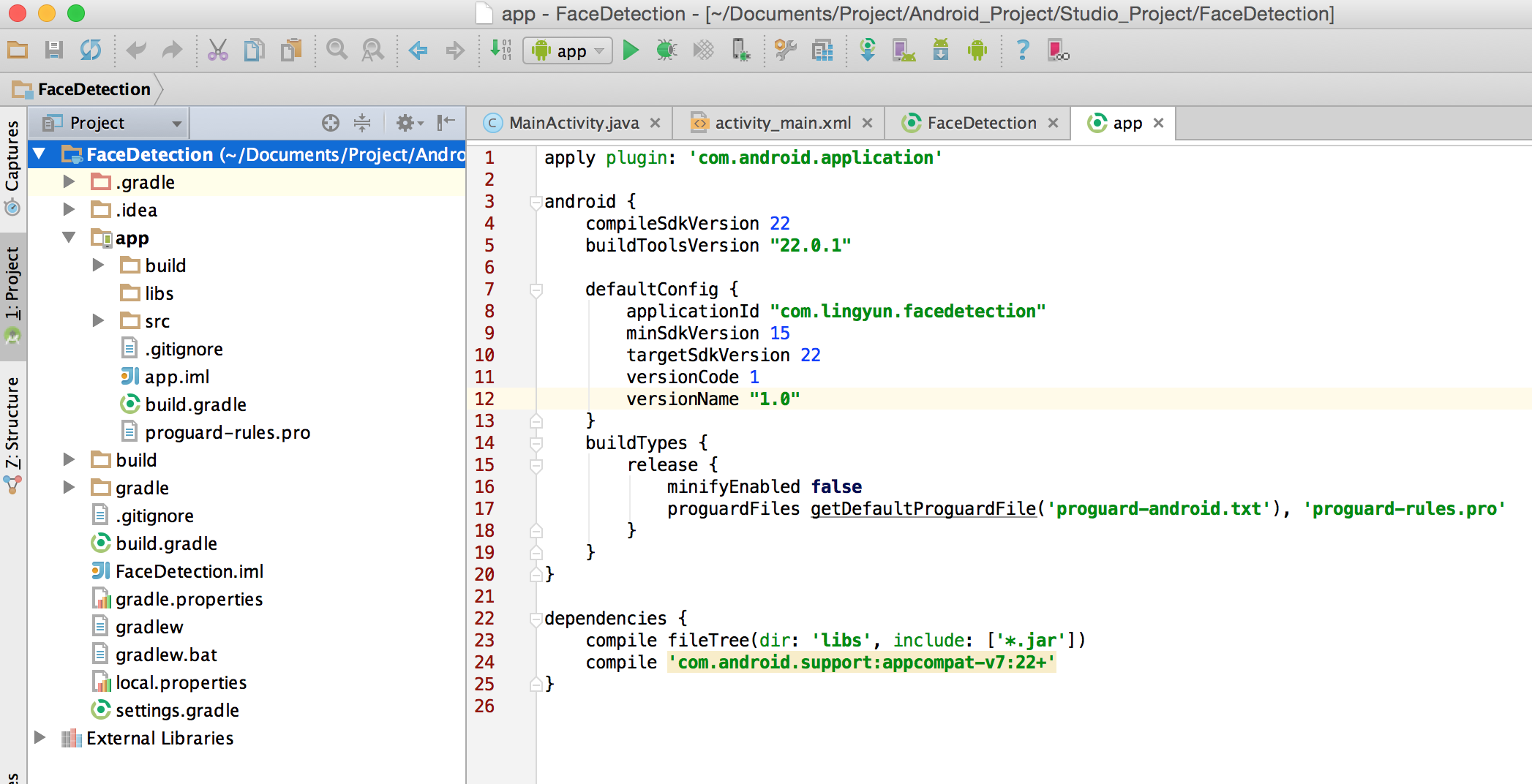

5. Configure app's build.gradle. My configuration is:

```groovy
apply plugin: 'com.android.application'

android {
    compileSdkVersion 22
    buildToolsVersion "22.0.1"

    defaultConfig {
        applicationId "com.lingyun.facedetecttest"
        minSdkVersion 15
        targetSdkVersion 22
        versionCode 1
        versionName "1.0"

        // added
        ndk {
            moduleName "app"
        }
    }

    // added
    sourceSets.main {
        jniLibs.srcDir 'src/main/jniLibs' // must match NDK_LIBS_OUT of the NDK Build tool (case-sensitive)
        jni.srcDirs = [] // disable the automatic ndk-build call
    }

    buildTypes {
        release {
            minifyEnabled false
            proguardFiles getDefaultProguardFile('proguard-android.txt'), 'proguard-rules.pro'
        }
    }
}

dependencies {
    compile fileTree(dir: 'libs', include: ['*.jar'])
    compile 'com.android.support:appcompat-v7:22+'
    compile project(':libopencv:opencv') // same path as the include in settings.gradle
}
```

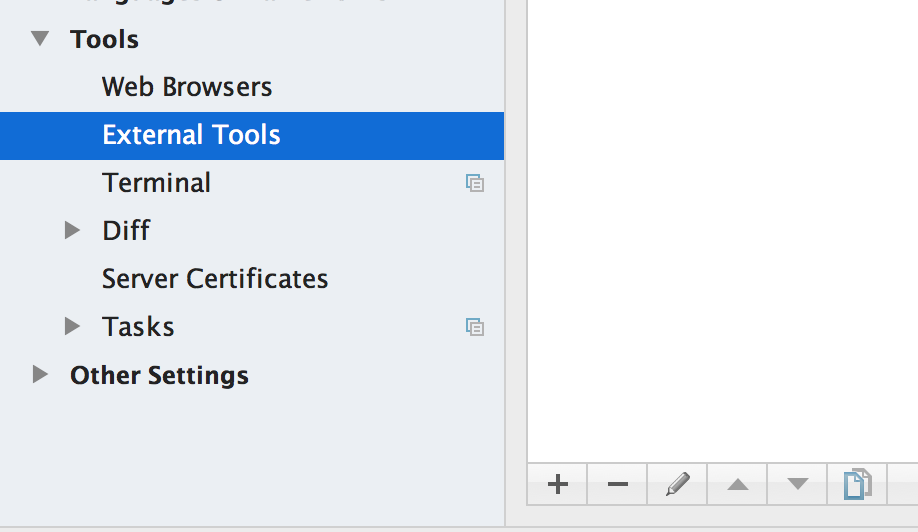

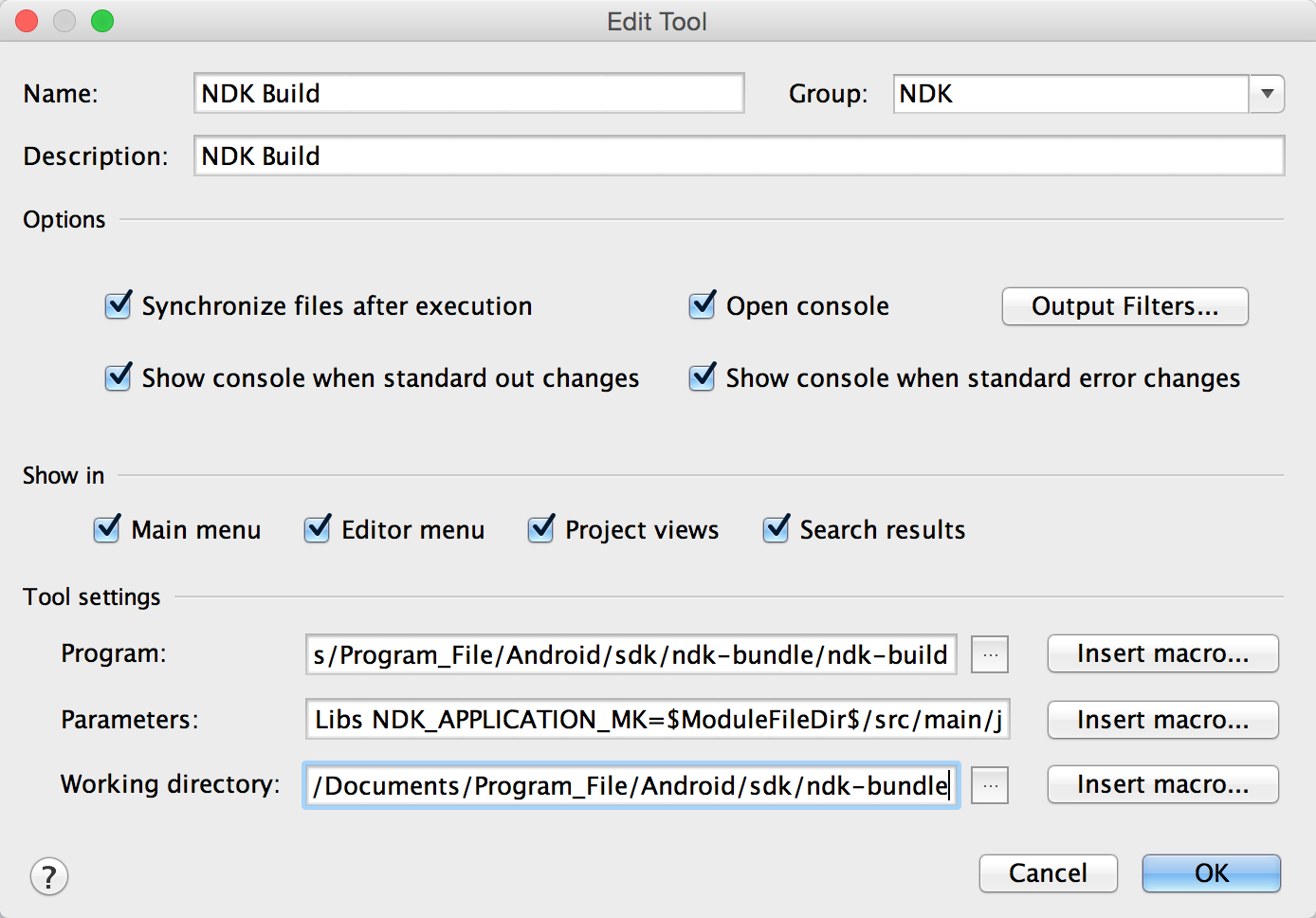

6. Add an NDK Build external tool

Open Android Studio > Preferences > External Tools and click + to add a new tool named NDK Build:

Name: NDK Build

Group: NDK

Description: NDK Build

Options: check all boxes

Show in: check all boxes

Tool Settings:

Program: `<NDK directory>/ndk-build`

Parameters: `NDK_PROJECT_PATH=$ModuleFileDir$/build/intermediates/ndk NDK_LIBS_OUT=$ModuleFileDir$/src/main/jniLibs NDK_APPLICATION_MK=$ModuleFileDir$/src/main/jni/Application.mk APP_BUILD_SCRIPT=$ModuleFileDir$/src/main/jni/Android.mk V=1`

Working directory: `$SourcepathEntry$`
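Android Studio expands `$ModuleFileDir$` to the module's directory before running the tool, so for the app module the entry amounts to calling `ndk-build` with the arguments built below. This Java sketch assembles that command line for a given NDK directory and module directory; `NdkBuildCommand` is a hypothetical name, the paths in `main` are placeholders, and the `$SourcepathEntry$` working directory is not modeled.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: the ndk-build invocation described by the External Tool entry. */
public class NdkBuildCommand {

    public static List<String> build(String ndkDir, String moduleDir) {
        String jniDir = moduleDir + "/src/main/jni";
        List<String> cmd = new ArrayList<>();
        cmd.add(ndkDir + "/ndk-build");
        cmd.add("NDK_PROJECT_PATH=" + moduleDir + "/build/intermediates/ndk");
        cmd.add("NDK_LIBS_OUT=" + moduleDir + "/src/main/jniLibs"); // where the .so files land
        cmd.add("NDK_APPLICATION_MK=" + jniDir + "/Application.mk");
        cmd.add("APP_BUILD_SCRIPT=" + jniDir + "/Android.mk");
        cmd.add("V=1");                                            // verbose build output
        return cmd;
    }

    public static void main(String[] args) {
        // Placeholder paths: substitute your own NDK and module directories.
        List<String> cmd = build("/path/to/ndk", "/path/to/FaceDetection/app");
        System.out.println(String.join(" ", cmd));
        // To actually run it: new ProcessBuilder(cmd).inheritIO().start().waitFor();
    }
}
```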

7. Right-click app and choose NDK > NDK Build.

A jniLibs directory now appears.

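8. Copy libopencv_java.so from each of the four folders under OpenCV-android-sdk/sdk/native/libs into the matching folder of the newly generated jniLibs directory, because the Java API needs these libraries.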
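Step 8 can be scripted. The Java sketch below walks the ABI folders of the SDK's `native/libs` directory and copies `libopencv_java.so` into the same-named folder under jniLibs, skipping ABIs that ndk-build did not generate. `CopyOpenCvLibs` is a hypothetical name, and the sketch assumes the SDK layout described in this step.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;

/** Hypothetical helper: copies libopencv_java.so into the ABI folders ndk-build created. */
public class CopyOpenCvLibs {

    static final String LIB = "libopencv_java.so";

    /** Returns the number of files copied from sdkLibsDir/<abi>/ to jniLibsDir/<abi>/. */
    public static int copyAll(Path sdkLibsDir, Path jniLibsDir) throws IOException {
        int copied = 0;
        try (DirectoryStream<Path> abiDirs = Files.newDirectoryStream(sdkLibsDir, Files::isDirectory)) {
            for (Path abiDir : abiDirs) {
                Path src = abiDir.resolve(LIB);
                Path destDir = jniLibsDir.resolve(abiDir.getFileName().toString());
                // Skip ABIs without the library, or ones ndk-build did not generate
                if (!Files.isRegularFile(src) || !Files.isDirectory(destDir)) {
                    continue;
                }
                Files.copy(src, destDir.resolve(LIB), StandardCopyOption.REPLACE_EXISTING);
                copied++;
            }
        }
        return copied;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java CopyOpenCvLibs <OpenCV-android-sdk>/sdk/native/libs <app>/src/main/jniLibs
        int n = copyAll(Paths.get(args[0]), Paths.get(args[1]));
        System.out.println(n + " file(s) copied");
    }
}
```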

IV. Add the layout file, the activity, and permissions

1. Copy OpenCV-android-sdk/samples/face-detection/res/layout/face_detect_surface_view.xml into app's layout directory.

2. Create a raw directory under res and copy OpenCV-android-sdk/samples/face-detection/res/raw/lbpcascade_frontalface.xml into it.
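`CascadeClassifier` is constructed from a file name, but a file in `res/raw` is packed inside the APK rather than stored as a regular file, which is why the activity copies `lbpcascade_frontalface.xml` into app-private storage before loading it. The copy loop can use try-with-resources so both streams are closed even when an exception is thrown. A plain-Java (Java 7+) sketch of such a helper follows; `StreamCopy` is a hypothetical name, and on Android the input would be `getResources().openRawResource(R.raw.lbpcascade_frontalface)`.

```java
import java.io.ByteArrayInputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: copies a stream into a file so it can be opened by path. */
public class StreamCopy {

    /** Copies all of in into target, closing both streams; returns the bytes written. */
    public static long copyToFile(InputStream in, File target) throws IOException {
        long total = 0;
        try (InputStream is = in; OutputStream os = new FileOutputStream(target)) {
            byte[] buffer = new byte[4096];
            int bytesRead;
            while ((bytesRead = is.read(buffer)) != -1) {
                os.write(buffer, 0, bytesRead);
                total += bytesRead;
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        File target = File.createTempFile("cascade", ".xml");
        target.deleteOnExit();
        byte[] data = "<opencv_storage/>".getBytes(StandardCharsets.UTF_8);
        System.out.println(copyToFile(new ByteArrayInputStream(data), target)); // 17
    }
}
```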

3. Modify MainActivity:

```java
package com.lingyun.facedetection;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;

import org.opencv.android.CameraBridgeViewBase;
import org.opencv.android.CameraBridgeViewBase.CvCameraViewFrame;
import org.opencv.android.CameraBridgeViewBase.CvCameraViewListener2;
import org.opencv.android.OpenCVLoader;
import org.opencv.core.Core;
import org.opencv.core.Mat;
import org.opencv.core.MatOfRect;
import org.opencv.core.Rect;
import org.opencv.core.Scalar;
import org.opencv.core.Size;
import org.opencv.objdetect.CascadeClassifier;

import android.content.Context;
import android.os.Bundle;
import android.support.v7.app.AppCompatActivity;
import android.util.Log;
import android.view.Menu;
import android.view.MenuItem;
import android.view.WindowManager;

public class MainActivity extends AppCompatActivity implements CvCameraViewListener2 {

    private static final String TAG = "OCVSample::Activity";
    private static final Scalar FACE_RECT_COLOR = new Scalar(0, 255, 0, 255);
    public static final int JAVA_DETECTOR = 0;
    public static final int NATIVE_DETECTOR = 1;

    private MenuItem mItemFace50;
    private MenuItem mItemFace40;
    private MenuItem mItemFace30;
    private MenuItem mItemFace20;
    private MenuItem mItemType;

    private Mat mRgba;
    private Mat mGray;
    private File mCascadeFile;
    private CascadeClassifier mJavaDetector;
    private DetectionBasedTracker mNativeDetector;

    private int mDetectorType = JAVA_DETECTOR;
    private String[] mDetectorName;

    private float mRelativeFaceSize = 0.2f;
    private int mAbsoluteFaceSize = 0;

    private CameraBridgeViewBase mOpenCvCameraView;

    static {
        // Initialize OpenCV from the libopencv_java.so packaged in jniLibs
        if (!OpenCVLoader.initDebug()) {
            Log.d("MyDebug", "Failed");
        } else {
            Log.d("MyDebug", "success");
            System.loadLibrary("opencv_java");
        }
    }

    public void doDetect() {
        // Load native library after(!) OpenCV initialization
        System.loadLibrary("detection_based_tracker");

        try {
            // Load cascade file from application resources
            InputStream is = getResources().openRawResource(R.raw.lbpcascade_frontalface);
            File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
            mCascadeFile = new File(cascadeDir, "lbpcascade_frontalface.xml");
            FileOutputStream os = new FileOutputStream(mCascadeFile);

            byte[] buffer = new byte[4096];
            int bytesRead;
            while ((bytesRead = is.read(buffer)) != -1) {
                os.write(buffer, 0, bytesRead);
            }
            is.close();
            os.close();

            mJavaDetector = new CascadeClassifier(mCascadeFile.getAbsolutePath());
            if (mJavaDetector.empty()) {
                Log.e(TAG, "Failed to load cascade classifier");
                mJavaDetector = null;
            } else {
                Log.i(TAG, "Loaded cascade classifier from " + mCascadeFile.getAbsolutePath());
            }

            mNativeDetector = new DetectionBasedTracker(mCascadeFile.getAbsolutePath(), 0);

            cascadeDir.delete();
        } catch (IOException e) {
            e.printStackTrace();
            Log.e(TAG, "Failed to load cascade. Exception thrown: " + e);
        }
        // The camera view is enabled in onResume(), which also runs right after onCreate()
    }

    public MainActivity() {
        mDetectorName = new String[2];
        mDetectorName[JAVA_DETECTOR] = "Java";
        mDetectorName[NATIVE_DETECTOR] = "Native (tracking)";
        Log.i(TAG, "Instantiated new " + this.getClass());
    }

    /** Called when the activity is first created. */
    @Override
    public void onCreate(Bundle savedInstanceState) {
        Log.i(TAG, "called onCreate");
        super.onCreate(savedInstanceState);
        getWindow().addFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);
        setContentView(R.layout.face_detect_surface_view);

        mOpenCvCameraView = (CameraBridgeViewBase) findViewById(R.id.fd_activity_surface_view);
        mOpenCvCameraView.setCvCameraViewListener(this);

        doDetect();
    }

    @Override
    public void onPause() {
        super.onPause();
        if (mOpenCvCameraView != null)
            mOpenCvCameraView.disableView();
    }

    @Override
    public void onResume() {
        super.onResume();
        // OpenCV is initialized statically above, so the OpenCV Manager route is not used:
        // OpenCVLoader.initAsync(OpenCVLoader.OPENCV_VERSION_2_4_3, this, mLoaderCallback);
        if (mOpenCvCameraView != null)
            mOpenCvCameraView.enableView(); // (re-)enable the camera, including after onPause()
    }

    @Override
    public void onDestroy() {
        super.onDestroy();
        if (mOpenCvCameraView != null)
            mOpenCvCameraView.disableView();
    }

    public void onCameraViewStarted(int width, int height) {
        mGray = new Mat();
        mRgba = new Mat();
    }

    public void onCameraViewStopped() {
        mGray.release();
        mRgba.release();
    }

    public Mat onCameraFrame(CvCameraViewFrame inputFrame) {
        mRgba = inputFrame.rgba();
        mGray = inputFrame.gray();

        // Convert the relative face size to pixels once per setting change
        if (mAbsoluteFaceSize == 0) {
            int height = mGray.rows();
            if (Math.round(height * mRelativeFaceSize) > 0) {
                mAbsoluteFaceSize = Math.round(height * mRelativeFaceSize);
            }
            if (mNativeDetector != null)
                mNativeDetector.setMinFaceSize(mAbsoluteFaceSize);
        }

        MatOfRect faces = new MatOfRect();

        if (mDetectorType == JAVA_DETECTOR) {
            if (mJavaDetector != null)
                mJavaDetector.detectMultiScale(mGray, faces, 1.1, 2, 2, // TODO: objdetect.CV_HAAR_SCALE_IMAGE
                        new Size(mAbsoluteFaceSize, mAbsoluteFaceSize), new Size());
        } else if (mDetectorType == NATIVE_DETECTOR) {
            if (mNativeDetector != null)
                mNativeDetector.detect(mGray, faces);
        } else {
            Log.e(TAG, "Detection method is not selected!");
        }

        // Draw a rectangle around every detected face
        Rect[] facesArray = faces.toArray();
        for (int i = 0; i < facesArray.length; i++)
            Core.rectangle(mRgba, facesArray[i].tl(), facesArray[i].br(), FACE_RECT_COLOR, 3);

        return mRgba;
    }

    @Override
    public boolean onCreateOptionsMenu(Menu menu) {
        Log.i(TAG, "called onCreateOptionsMenu");
        mItemFace50 = menu.add("Face size 50%");
        mItemFace40 = menu.add("Face size 40%");
        mItemFace30 = menu.add("Face size 30%");
        mItemFace20 = menu.add("Face size 20%");
        mItemType = menu.add(mDetectorName[mDetectorType]);
        return true;
    }

    @Override
    public boolean onOptionsItemSelected(MenuItem item) {
        Log.i(TAG, "called onOptionsItemSelected; selected item: " + item);
        if (item == mItemFace50)
            setMinFaceSize(0.5f);
        else if (item == mItemFace40)
            setMinFaceSize(0.4f);
        else if (item == mItemFace30)
            setMinFaceSize(0.3f);
        else if (item == mItemFace20)
            setMinFaceSize(0.2f);
        else if (item == mItemType) {
            int tmpDetectorType = (mDetectorType + 1) % mDetectorName.length;
            item.setTitle(mDetectorName[tmpDetectorType]);
            setDetectorType(tmpDetectorType);
        }
        return true;
    }

    private void setMinFaceSize(float faceSize) {
        mRelativeFaceSize = faceSize;
        mAbsoluteFaceSize = 0; // recomputed on the next camera frame
    }

    private void setDetectorType(int type) {
        if (mDetectorType != type) {
            mDetectorType = type;

            if (type == NATIVE_DETECTOR) {
                Log.i(TAG, "Detection Based Tracker enabled");
                if (mNativeDetector != null)
                    mNativeDetector.start();
            } else {
                Log.i(TAG, "Cascade detector enabled");
                if (mNativeDetector != null)
                    mNativeDetector.stop();
            }
        }
    }
}
```
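The minimum face size in `MainActivity` is stored as a fraction of the frame height and converted to pixels lazily: the menu handler stores the fraction and resets the pixel value to 0, and the next camera frame recomputes it from the gray image's row count. The same logic, extracted into a hypothetical plain-Java class (`FaceSizeSetting`) so it runs without a camera; passing the result to the native detector is not modeled.

```java
/** Hypothetical extraction of MainActivity's face-size logic. */
public class FaceSizeSetting {

    private float relativeFaceSize = 0.2f; // fraction of the frame height
    private int absoluteFaceSize = 0;      // 0 means "not computed yet"

    /** Menu handler equivalent: store the fraction and force a recompute. */
    public void setMinFaceSize(float faceSize) {
        relativeFaceSize = faceSize;
        absoluteFaceSize = 0;
    }

    /** Per-frame equivalent: compute the pixel size once, then reuse it until reset. */
    public int absoluteFor(int frameHeight) {
        if (absoluteFaceSize == 0) {
            int size = Math.round(frameHeight * relativeFaceSize);
            if (size > 0) {
                absoluteFaceSize = size;
            }
        }
        return absoluteFaceSize;
    }

    public static void main(String[] args) {
        FaceSizeSetting s = new FaceSizeSetting();
        System.out.println(s.absoluteFor(480)); // 96 (20% of 480 rows)
        s.setMinFaceSize(0.5f);
        System.out.println(s.absoluteFor(480)); // 240
        System.out.println(s.absoluteFor(720)); // 240: cached until the next reset
    }
}
```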

4. Add the camera permission in AndroidManifest.xml:

```xml
<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.lingyun.facedetection" >

    <application
        android:allowBackup="true"
        android:icon="@mipmap/ic_launcher"
        android:label="@string/app_name"
        android:theme="@style/AppTheme" >
        <activity
            android:name=".MainActivity"
            android:label="@string/app_name" >
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
        </activity>
    </application>

    <supports-screens android:resizeable="true"
        android:smallScreens="true"
        android:normalScreens="true"
        android:largeScreens="true"
        android:anyDensity="true" />

    <uses-sdk android:minSdkVersion="8" />

    <uses-permission android:name="android.permission.CAMERA"/>

    <uses-feature android:name="android.hardware.camera" android:required="false"/>
    <uses-feature android:name="android.hardware.camera.autofocus" android:required="false"/>
    <uses-feature android:name="android.hardware.camera.front" android:required="false"/>
    <uses-feature android:name="android.hardware.camera.front.autofocus" android:required="false"/>

</manifest>
```

V. Debugging

Run the project.