1. Create a new project with the following command:

scrapy startproject tencentcrawl

2. Change into tencentcrawl\spiders and generate the spider:

scrapy genspider -t crawl tencent hr.tencent.com

-t selects the spider template; here the crawl template generates a CrawlSpider skeleton.

3. Write the items.py file

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class TencentItem(scrapy.Item):
    # define the fields for your item here like:
    # Position name
    position_name = scrapy.Field()
    # Link to the detail page
    position_link = scrapy.Field()
    # Position category
    position_type = scrapy.Field()
    # Number of openings
    people_num = scrapy.Field()
    # Work location
    work_location = scrapy.Field()
    # Publication date
    publish_time = scrapy.Field()

4. Write the spider in /spiders/tencent.py

# -*- coding: utf-8 -*-

import scrapy
# LinkExtractor collects the links on each page that match a given pattern
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule

from tencentcrawl.items import TencentItem


class TencentSpider(CrawlSpider):
    name = "tencent"
    # The attribute must be spelled allowed_domains for Scrapy to apply it
    allowed_domains = ["hr.tencent.com"]
    start_urls = ["http://hr.tencent.com/position.php?&start=0#a"]

    # Raw string so that \d reaches the regex engine unchanged
    page_link = LinkExtractor(allow=r"start=\d+")

    # Crawling rules
    rules = (
        # allow (set on the LinkExtractor) is the regular expression a link must match;
        # callback is the name of the callback method, given as a string
        Rule(page_link, callback='parse_item', follow=True),
    )

    def parse_item(self, response):
        item_list = response.xpath("//tr[@class='even'] | //tr[@class='odd']")
        for each in item_list:
            item = TencentItem()
            # extract_first() returns None instead of raising IndexError
            # when a cell has no text
            # Position name
            item['position_name'] = each.xpath("./td[1]/a/text()").extract_first()
            # Link to the detail page
            item['position_link'] = each.xpath("./td[1]/a/@href").extract_first()
            # Position category
            item['position_type'] = each.xpath("./td[2]/text()").extract_first()
            # Number of openings
            item['people_num'] = each.xpath("./td[3]/text()").extract_first()
            # Work location
            item['work_location'] = each.xpath("./td[4]/text()").extract_first()
            # Publication date
            item['publish_time'] = each.xpath("./td[5]/text()").extract_first()
            yield item
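
The allow pattern passed to LinkExtractor is an ordinary regular expression that is searched for inside each absolute URL found on a page. As a rough illustration using only the standard re module (not Scrapy itself), the sketch below shows which links the pattern start=\d+ would select. The candidate URLs other than the start page are made up for the example.

```python
import re

# Same pattern as the LinkExtractor's allow argument in the spider.
PAGE_PATTERN = re.compile(r"start=\d+")


def is_page_link(url):
    """Return True if the URL contains a start=<digits> pagination parameter."""
    return PAGE_PATTERN.search(url) is not None


# Hypothetical URLs, for illustration only.
candidates = [
    "http://hr.tencent.com/position.php?&start=10#a",
    "http://hr.tencent.com/position.php?&start=20#a",
    "http://hr.tencent.com/position_detail.php?id=1",
    "http://hr.tencent.com/about.php",
]

# Only the pagination links survive the filter.
followed = [url for url in candidates if is_page_link(url)]
for url in followed:
    print(url)
```

Because the rule sets follow=True, links matching the pattern on every fetched page are queued in turn, which is how the spider walks through the whole listing from a single start URL.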

5. Write the pipeline file pipelines.py:

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

import json


class TencentcrawlPipeline(object):
    def __init__(self):
        # Opened in binary mode, so each line is encoded before writing
        self.filename = open("position.json", "wb")

    def process_item(self, item, spider):
        # Serialize the item to a JSON string and write it to the file
        text = json.dumps(dict(item), ensure_ascii=False) + ",\n"
        self.filename.write(text.encode("utf_8"))
        return item

    def close_spider(self, spider):
        # Scrapy passes the spider as an argument when the crawl ends
        self.filename.close()

6. Configure logging and enable the pipeline in settings.py

# -*- coding: utf-8 -*-

# Scrapy settings for tencentcrawl project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://doc.scrapy.org/en/latest/topics/settings.html

# https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

# https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'tencentcrawl'

SPIDER_MODULES = ['tencentcrawl.spiders']

NEWSPIDER_MODULE = 'tencentcrawl.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'tencentcrawl (+http://www.yourdomain.com)'

# Obey robots.txt rules

ROBOTSTXT_OBEY = True

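# LOG_ENABLED default: True, enables logging

# LOG_ENCODING default: 'utf-8', the encoding used for logging

# LOG_FILE default: None, name of the log output file, created in the current directory

# LOG_LEVEL default: 'DEBUG', the minimum level to log

# LOG_STDOUT default: False. If True, all standard output (and errors) of the process is redirected to the log. For example, the output of print("hello") will appear in the Scrapy log.

LOG_FILE="position.log"

# Minimum level of messages saved to the log

LOG_LEVEL="INFO"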

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'tencentcrawl.middlewares.TencentcrawlSpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'tencentcrawl.middlewares.TencentcrawlDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See https://doc.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {
    'tencentcrawl.pipelines.TencentcrawlPipeline': 300,
}

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
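
Once a pipeline is listed in ITEM_PIPELINES, Scrapy calls its hooks during the crawl: open_spider(spider) at the start, process_item(item, spider) for every item, and close_spider(spider) at the end. The following stand-alone sketch imitates that call order without Scrapy so the flow can be tried in isolation. The class name JsonLinesPipeline, the run_fake_crawl driver, and the sample items are all hypothetical, and the sketch writes to an in-memory stream rather than position.json.

```python
import io
import json


class JsonLinesPipeline:
    """Stand-alone imitation of an item pipeline; writes one JSON object per line."""

    def __init__(self, stream):
        self.stream = stream
        self.count = 0

    def open_spider(self, spider):
        # Called once when the crawl starts.
        self.count = 0

    def process_item(self, item, spider):
        # Called for every item; must return the item for later pipelines.
        self.stream.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        self.count += 1
        return item

    def close_spider(self, spider):
        # Called once when the crawl ends; note the spider argument.
        self.stream.flush()


def run_fake_crawl(pipeline, items, spider="tencent"):
    """Hypothetical driver that mimics the order of calls made during a crawl."""
    pipeline.open_spider(spider)
    for item in items:
        pipeline.process_item(item, spider)
    pipeline.close_spider(spider)


# Made-up items, for illustration only.
sample_items = [
    {"position_name": "Engineer A", "people_num": "2"},
    {"position_name": "Engineer B", "people_num": "1"},
]

buffer = io.StringIO()
pipeline = JsonLinesPipeline(buffer)
run_fake_crawl(pipeline, sample_items)
lines = buffer.getvalue().splitlines()
print(lines)
```

Writing one object per line without a trailing comma is a design choice of this sketch: each line can then be parsed on its own with json.loads.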

7. Run the following command in the console:

scrapy crawl tencent

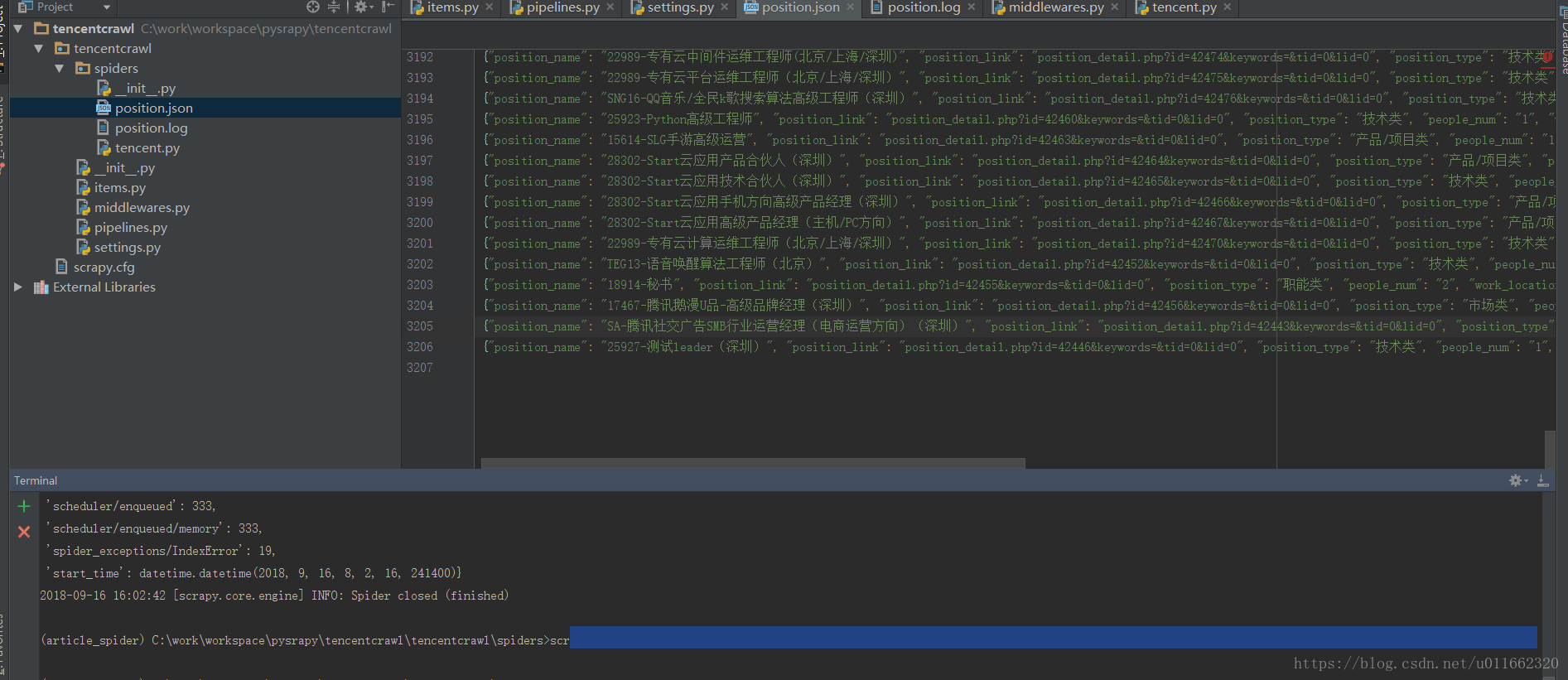
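
After the crawl, position.json holds one JSON object per line, each followed by a comma, so the file as a whole is not a single valid JSON document. A small sketch for loading it back, assuming that line format, could look like this. The helper name parse_position_lines and the sample text are made up for illustration.

```python
import json


def parse_position_lines(text):
    """Parse lines of the form '{...},' into a list of dicts."""
    records = []
    for line in text.splitlines():
        # Drop surrounding whitespace, then the trailing comma the pipeline adds.
        line = line.strip().rstrip(",")
        if line:
            records.append(json.loads(line))
    return records


# Made-up sample in the same shape the pipeline writes.
sample = (
    '{"position_name": "Engineer A", "people_num": "2"},\n'
    '{"position_name": "Engineer B", "people_num": "1"},\n'
)

records = parse_position_lines(sample)
print(len(records), records[0]["position_name"])

# To read the real output instead (file must exist):
# with open("position.json", encoding="utf-8") as f:
#     records = parse_position_lines(f.read())
```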

8. Project file structure and results: