答案出处:https://stats.stackexchange.com/questions/321460/dice-coefficient-loss-function-vs-cross-entropy

cross entropy 是普遍使用的loss function,但是做分割的时候很多使用Dice, 二者的区别如下;

One compelling reason for using cross-entropy over dice-coefficient or the similar IoU metric is that the gradients are nicer.

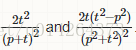

The gradients of cross-entropy wrt the logits is something like p−t, where p is the softmax outputs and t is the target. Meanwhile, if we try to write the dice coefficient in a differentiable form: or

. then the resulting gradients wrt p are much uglier:

The main reason that people try to use dice coefficient or IoU directly is that the actual goal is maximization of those metrics, and cross-entropy is just a proxy which is easier to maximize using backpropagation. In addition, Dice coefficient performs better at class imbalanced problems by design:

However, class imbalance is typically taken care of simply by assigning loss multipliers to each class, such that the network is highly disincentivized to simply ignore a class which appears infrequently, so it's unclear that Dice coefficient is really necessary in these cases.

I would start with cross-entropy loss, which seems to be the standard loss for training segmentation networks, unless there was a really compelling reason to use Dice coefficient.

简单来说,就是DICE并没有真正使结果更优秀,而是结果让人看起来更优秀。