Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/yitian881112/article/details/84586452

1. Detailed error description

2018-11-27 23:45:34,356 WARN org.apache.hadoop.hdfs.server.common.Storage: Failed to add storage directory [DISK]file:/usr/local/temp/hadoop/datanode/
java.io.IOException: Incompatible clusterIDs in /usr/local/temp/hadoop/datanode: namenode clusterID = CID-f52422a5-0ee9-46a2-bb8b-e7cc19771af5; datanode clusterID = CID-52f393e6-2bd3-4b93-9f42-da52c1581305
	at org.apache.hadoop.hdfs.server.datanode.DataStorage.doTransition(DataStorage.java:760)
	at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadStorageDirectory(DataStorage.java:293)
	at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadDataStorage(DataStorage.java:409)
	at org.apache.hadoop.hdfs.server.datanode.DataStorage.addStorageLocations(DataStorage.java:388)
	at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:556)
	at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1649)
	at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1610)
	at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:374)
	at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:280)
	at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:816)
	at java.lang.Thread.run(Thread.java:745)
2018-11-27 23:45:34,360 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: Initialization failed for Block pool <registering> (Datanode Uuid 559dc6f6-6c85-41b9-afc0-07c5672a64da) service to localhost/10.203.128.10:9000. Exiting.
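If you have several datanode logs to check for this error, a small script can pull the storage directory and both clusterIDs out of the exception message. This is a minimal Python sketch that assumes the message keeps exactly the wording shown in the log above; `parse_incompatible_cluster_ids` is a hypothetical helper, not part of Hadoop.

```python
import re

# Assumes the exact IOException wording from the datanode log above.
CLUSTER_ID_RE = re.compile(
    r"Incompatible clusterIDs in (?P<dir>.+?): "
    r"namenode clusterID = (?P<nn>CID-[0-9a-f-]+); "
    r"datanode clusterID = (?P<dn>CID-[0-9a-f-]+)"
)


def parse_incompatible_cluster_ids(log_text):
    """Return a list of (storage_dir, namenode_id, datanode_id) tuples, one per match."""
    return [
        (m.group("dir"), m.group("nn"), m.group("dn"))
        for m in CLUSTER_ID_RE.finditer(log_text)
    ]


if __name__ == "__main__":
    import sys

    # Usage: python parse_log.py < hadoop-datanode.log
    for storage_dir, nn_id, dn_id in parse_incompatible_cluster_ids(sys.stdin.read()):
        print(f"{storage_dir}: namenode={nn_id} datanode={dn_id}")
```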

Searching online, I learned that when Hadoop starts the HDFS service it starts two daemons, the namenode and the datanode, and the storage directory of each one is configured in hdfs-site.xml. My paths are:

<configuration>
  <property>
    <name>dfs.name.dir</name>
    <value>/usr/local/temp/hadoop/namenode</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>/usr/local/temp/hadoop/datanode</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
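To confirm which directories to inspect, the two paths can also be read straight from hdfs-site.xml. This is a minimal Python sketch using only the standard library's `xml.etree.ElementTree`. It checks both the keys used above (`dfs.name.dir`, `dfs.data.dir`) and their newer names (`dfs.namenode.name.dir`, `dfs.datanode.data.dir`), and it assumes each value is a single local path rather than a comma-separated list or a `file://` URI. The helper names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Newer key first, then the legacy key used in this post.
NAME_DIR_KEYS = ("dfs.namenode.name.dir", "dfs.name.dir")
DATA_DIR_KEYS = ("dfs.datanode.data.dir", "dfs.data.dir")


def read_properties(xml_text):
    """Map each <property><name> to its <value> in a Hadoop *-site.xml string."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None and value is not None:
            props[name.strip()] = value.strip()
    return props


def storage_dirs(xml_text):
    """Return (namenode_dir, datanode_dir); None for a key that is not set."""
    props = read_properties(xml_text)

    def first(keys):
        for key in keys:
            if key in props:
                return props[key]
        return None

    return first(NAME_DIR_KEYS), first(DATA_DIR_KEYS)
```

For example, `storage_dirs(open("hdfs-site.xml").read())` on the configuration above should give the namenode and datanode paths listed there.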

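Each of these directories contains a current/VERSION file that records, among other things, the clusterID of the cluster the storage belongs to. The clusterID is generated when the namenode is formatted (hdfs namenode -format), so a common cause of this error is reformatting the namenode: it gets a new clusterID while the datanode directory still holds the old one. So I went into both directories and compared the files.

Below is the datanode's VERSION: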

root@ubuntu:/usr/local/temp/hadoop/datanode/current# cat VERSION
#Wed Nov 28 00:13:54 PST 2018
storageID=DS-7c51b817-fffc-4cdf-8baa-ac119fec47af
clusterID=CID-f52422a5-0ee9-46a2-bb8b-e7cc19771af5
cTime=0
datanodeUuid=559dc6f6-6c85-41b9-afc0-07c5672a64da
storageType=DATA_NODE
layoutVersion=-57
root@ubuntu:/usr/local/temp/hadoop/datanode/current#

And the namenode's VERSION:

root@ubuntu:/usr/local/temp/hadoop/namenode/current# cat VERSION
#Tue Nov 27 23:06:20 PST 2018
namespaceID=203824828
clusterID=CID-f52422a5-0ee9-46a2-bb8b-e7cc19771af4
cTime=1543388780695
storageType=NAME_NODE
blockpoolID=BP-1502030832-127.0.0.1-1543388780695
layoutVersion=-63
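Comparing the clusterID lines by eye is error-prone; in the two files above they differ only in the last character (...af4 vs ...af5). Below is a minimal Python sketch that parses the key=value format shown above and compares the clusterIDs. It assumes the values contain no Java properties escape sequences (true for the files shown), and the function names are hypothetical.

```python
from pathlib import Path


def parse_version(text):
    """Parse a Hadoop current/VERSION file: key=value lines, '#' comment lines."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def load_version(storage_dir):
    """Read <storage_dir>/current/VERSION, the layout shown above."""
    return parse_version((Path(storage_dir) / "current" / "VERSION").read_text())


def compare_cluster_ids(namenode_fields, datanode_fields):
    """Return (match, namenode_cluster_id, datanode_cluster_id)."""
    nn = namenode_fields.get("clusterID")
    dn = datanode_fields.get("clusterID")
    return (nn is not None and nn == dn), nn, dn
```

For example, `compare_cluster_ids(load_version("/usr/local/temp/hadoop/namenode"), load_version("/usr/local/temp/hadoop/datanode"))` returns `False` as its first element for the two files above.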

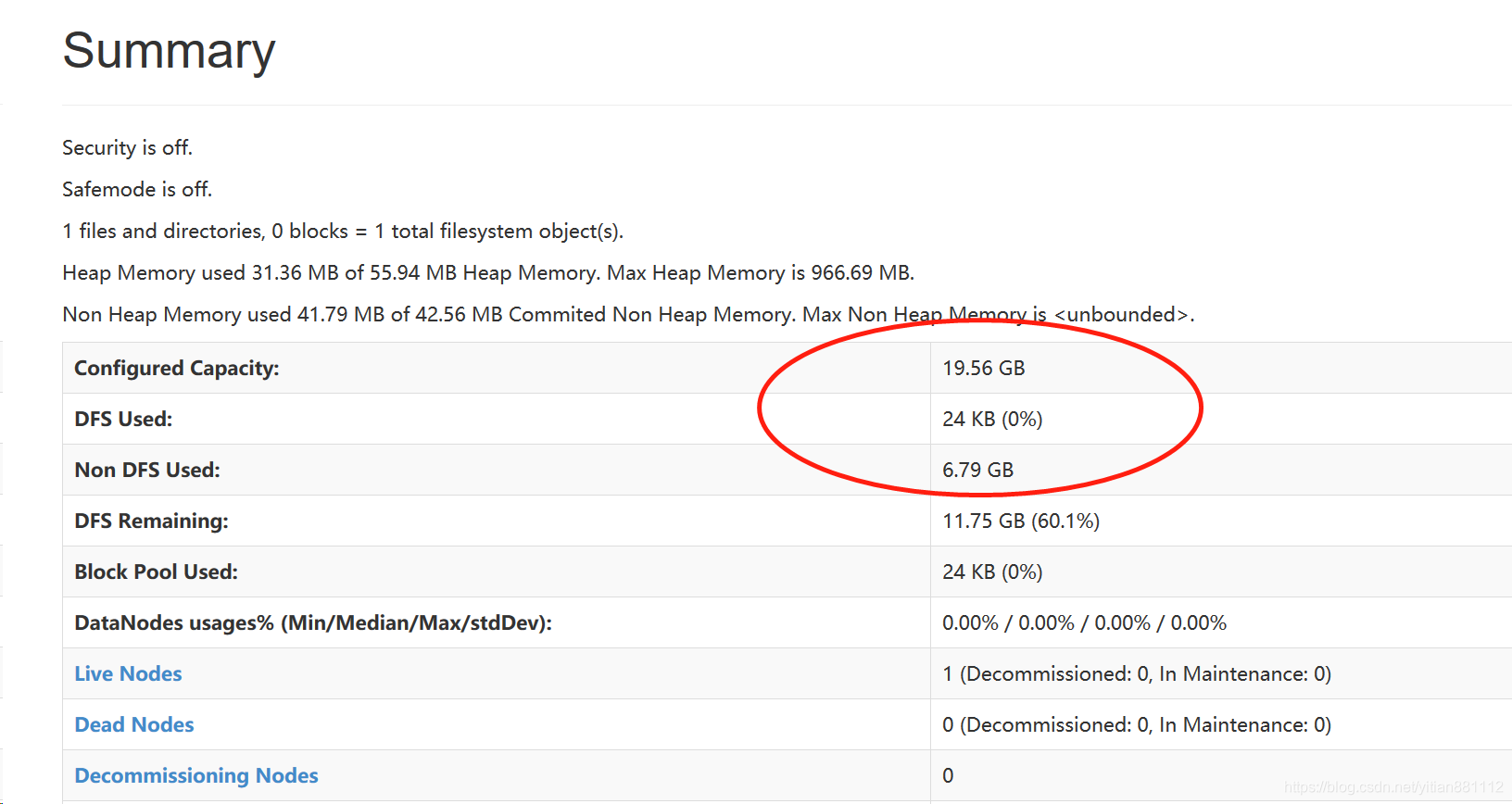

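Then edit the VERSION files so that the two clusterIDs are identical (typically by copying the namenode's clusterID into the datanode's VERSION), restart HDFS, and it works.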
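The same fix can be scripted. This is a minimal Python sketch, assuming both HDFS daemons are stopped first (for example with stop-dfs.sh) and that the namenode's clusterID is the one to keep. It backs up the datanode's VERSION to VERSION.bak before rewriting its clusterID line. `sync_cluster_id` is a hypothetical helper, not a Hadoop tool.

```python
import shutil
from pathlib import Path


def sync_cluster_id(namenode_dir, datanode_dir):
    """Copy the namenode's clusterID into the datanode's current/VERSION.

    Assumes both daemons are stopped. Keeps a VERSION.bak backup of the
    datanode file and returns the clusterID that was written.
    """
    nn_version = Path(namenode_dir) / "current" / "VERSION"
    dn_version = Path(datanode_dir) / "current" / "VERSION"

    # Read the authoritative clusterID from the namenode's VERSION.
    nn_id = None
    for line in nn_version.read_text().splitlines():
        if line.startswith("clusterID="):
            nn_id = line.split("=", 1)[1].strip()
    if nn_id is None:
        raise ValueError(f"no clusterID in {nn_version}")

    # Back up the datanode's VERSION, then replace only its clusterID line.
    shutil.copy2(dn_version, dn_version.with_name("VERSION.bak"))
    lines = dn_version.read_text().splitlines()
    new_lines = [
        f"clusterID={nn_id}" if line.startswith("clusterID=") else line
        for line in lines
    ]
    dn_version.write_text("\n".join(new_lines) + "\n")
    return nn_id
```

With the paths from this post that would be `sync_cluster_id("/usr/local/temp/hadoop/namenode", "/usr/local/temp/hadoop/datanode")`, followed by starting HDFS again.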