Distributed crawling with Scrapy-Redis, plus a cookie pool

Reposted from: 静觅 » Scrapy for Beginners, Part 3 (Distributed crawling with Scrapy-Redis, plus a cookie pool)

===========================================================================================

================================================

Some scrapy-redis settings. PS: these settings go in the Scrapy project's settings.py!

    # Enable the Redis-backed scheduler, which stores the request queue in Redis
    SCHEDULER = "scrapy_redis.scheduler.Scheduler"

    # Make sure all spiders deduplicate requests through Redis
    DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

    # Requests are serialized with pickle by default, but this can be changed to a similar module.
    # PS: this works on 2.x but not on 3.x
    #SCHEDULER_SERIALIZER = "scrapy_redis.picklecompat"

    # Don't clear the Redis queues, so a crawl can be paused and resumed
    #SCHEDULER_PERSIST = True

    # Schedule requests with a priority queue (the default)
    #SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.PriorityQueue'
    # Other queues that can be used instead
    #SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.FifoQueue'
    #SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.LifoQueue'

    # Maximum idle time, to keep distributed spiders from closing while they wait.
    # This only takes effect when the queue class above is SpiderQueue or SpiderStack,
    # and it may also block for the same time when the spider first starts (because the queue is empty).
    #SCHEDULER_IDLE_BEFORE_CLOSE = 10

    # Store scraped items in Redis for later processing
    ITEM_PIPELINES = {
        'scrapy_redis.pipelines.RedisPipeline': 300
    }

    # The Redis key under which the item pipeline stores serialized items
    #REDIS_ITEMS_KEY = '%(spider)s:items'

    # Items are serialized with ScrapyJSONEncoder by default.
    # You can use any importable path to a callable object.
    #REDIS_ITEMS_SERIALIZER = 'json.dumps'

    # Host and port to use when connecting to Redis (optional)
    #REDIS_HOST = 'localhost'
    #REDIS_PORT = 6379

    # URL to use when connecting to Redis (optional).
    # If set, it takes precedence over REDIS_HOST and REDIS_PORT.
    #REDIS_URL = 'redis://user:pass@hostname:9001'

    # Custom Redis client parameters (connection timeout and so on)
    #REDIS_PARAMS = {}

    # Custom Redis client class
    #REDIS_PARAMS['redis_cls'] = 'myproject.RedisClient'

    # If True, use Redis's 'spop' operation.
    # Useful when the start URL list must not contain duplicates.
    # With this option on, URLs must be added with sadd, otherwise a type error occurs.
    #REDIS_START_URLS_AS_SET = False

    # Default start_urls key for RedisSpider and RedisCrawlSpider
    #REDIS_START_URLS_KEY = '%(name)s:start_urls'

    # Use an encoding other than utf-8 for Redis
    #REDIS_ENCODING = 'latin1'
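The two key settings, REDIS_ITEMS_KEY and REDIS_START_URLS_KEY, are ordinary Python %-style templates. As far as I can tell, scrapy-redis fills them in with the spider's name. Here is a small sketch of that expansion; the helper `expand_key` and the spider name `haoduofuli` are made up for illustration and are not part of scrapy-redis.

```python
# Key templates copied from the settings above.
REDIS_ITEMS_KEY = '%(spider)s:items'
REDIS_START_URLS_KEY = '%(name)s:start_urls'


def expand_key(template, **fields):
    """Fill a %-style key template with the given named fields (hypothetical helper)."""
    return template % fields


# "haoduofuli" is a hypothetical spider name used only for this example.
items_key = expand_key(REDIS_ITEMS_KEY, spider="haoduofuli")
start_urls_key = expand_key(REDIS_START_URLS_KEY, name="haoduofuli")

print(items_key)
print(start_urls_key)
```

So each spider gets its own item list and its own start URL list in Redis, and several spiders can share one Redis database without stepping on each other.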

If you set up your Redis database as described in the previous post, you need at least these three settings:

    SCHEDULER = "scrapy_redis.scheduler.Scheduler"
    DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"
    REDIS_URL = 'redis://root:<password>@<host IP>:<port>'
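If you are not sure which part of REDIS_URL is which, the standard library can take it apart for you. This is only a sketch for checking your own URL: the user, password, host, and port below are invented values, and the code never connects to Redis.

```python
from urllib.parse import urlsplit

# Hypothetical URL: every component here is made up for the example.
REDIS_URL = 'redis://root:secret@192.0.2.10:6379'

parts = urlsplit(REDIS_URL)

# Print each component so a typo in the URL is easy to spot.
print("scheme:  ", parts.scheme)
print("user:    ", parts.username)
print("password:", parts.password)
print("host:    ", parts.hostname)
print("port:    ", parts.port)
```

One thing to watch for: a password containing characters such as `@` or `/` has to be percent-encoded, otherwise the URL is split in the wrong place.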

1. How the crawl queue is implemented
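As I understand it, the default `scrapy_redis.queue.PriorityQueue` keeps serialized requests in a Redis sorted set and uses the negated request priority as the score, so the highest priority is popped first. The sketch below only imitates that ordering in memory with `heapq`; the class `InMemoryPriorityQueue` is a stand-in I made up, not the library's code, and its insertion-order tie-break is a simplification.

```python
import heapq
import itertools


class InMemoryPriorityQueue:
    """In-memory stand-in for a Redis sorted set: the lowest score pops first."""

    def __init__(self):
        self._heap = []
        # Tie-breaker so equal scores pop in insertion order (a simplification).
        self._counter = itertools.count()

    def push(self, url, priority=0):
        # Negate the priority so that a higher priority becomes a lower score.
        heapq.heappush(self._heap, (-priority, next(self._counter), url))

    def pop(self):
        # Return None when empty, so a worker can simply try again later.
        if not self._heap:
            return None
        _score, _order, url = heapq.heappop(self._heap)
        return url

    def __len__(self):
        return len(self._heap)


queue = InMemoryPriorityQueue()
queue.push("http://example.com/list", priority=0)
queue.push("http://example.com/detail", priority=10)
queue.push("http://example.com/page2", priority=5)

order = [queue.pop() for _ in range(3)]
print(order)
```

In the real setup the queue lives in Redis rather than in one process, which is what lets several crawler processes pull from the same queue.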

2. How deduplication is implemented
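Roughly speaking, the Redis dupefilter computes a fingerprint for every request and adds it to a Redis set; if the fingerprint was already there, the request is dropped. Below is a simplified in-memory sketch of that idea. The `fingerprint` function is my own cut-down version (Scrapy's real one also canonicalizes the URL), and a Python `set` stands in for the shared Redis set.

```python
import hashlib


def fingerprint(method, url, body=b""):
    """Simplified request fingerprint: SHA-1 over method, URL and body."""
    h = hashlib.sha1()
    h.update(method.upper().encode("utf-8"))
    h.update(url.encode("utf-8"))
    h.update(body)
    return h.hexdigest()


class InMemoryDupeFilter:
    """Stand-in for the Redis set that all crawler processes would share."""

    def __init__(self):
        self._seen = set()

    def request_seen(self, method, url, body=b""):
        fp = fingerprint(method, url, body)
        if fp in self._seen:
            return True   # already crawled or queued: skip it
        self._seen.add(fp)
        return False      # first time we see this request


dupefilter = InMemoryDupeFilter()
first = dupefilter.request_seen("GET", "http://example.com/a")
second = dupefilter.request_seen("get", "http://example.com/a")
other = dupefilter.request_seen("GET", "http://example.com/b")
print(first, second, other)
```

Because the set lives in Redis in the real thing, a URL seen by one machine is also skipped by every other machine.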

3. How resuming after an interruption is implemented
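Resuming works because the queue and the fingerprint set live in Redis, outside the crawler process. With SCHEDULER_PERSIST = True they are left in place when the spider closes; otherwise, as far as I know, they are cleared. The toy class below imitates that behaviour with a plain dict standing in for the Redis server. `SketchScheduler` and its method names are invented for this example.

```python
class SketchScheduler:
    """Toy scheduler; `store` is a dict standing in for the Redis server."""

    def __init__(self, store, persist=False):
        self.store = store
        self.persist = persist
        self.store.setdefault("queue", [])
        self.store.setdefault("seen", set())

    def enqueue(self, url):
        # Skip anything already seen, even if it was seen in an earlier run.
        if url in self.store["seen"]:
            return False
        self.store["seen"].add(url)
        self.store["queue"].append(url)
        return True

    def next_request(self):
        queue = self.store["queue"]
        return queue.pop(0) if queue else None

    def close(self):
        # Without persistence, wipe the state so the next run starts fresh.
        if not self.persist:
            self.store["queue"].clear()
            self.store["seen"].clear()


store = {}

# First run: queue three pages, crawl one, then get interrupted.
first_run = SketchScheduler(store, persist=True)
for url in ("http://example.com/1", "http://example.com/2", "http://example.com/3"):
    first_run.enqueue(url)
done = first_run.next_request()
first_run.close()

# Second run: the remaining pages are still there, and page 1 is not re-added.
second_run = SketchScheduler(store, persist=True)
duplicate_added = second_run.enqueue("http://example.com/1")
remaining = [second_run.next_request(), second_run.next_request()]
print(done, duplicate_added, remaining)
```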

================================

A pile of User-Agent strings, for disguising the request headers:

    agents = [
        "Mozilla/5.0 (Linux; U; Android 2.3.6; en-us; Nexus S Build/GRK39F) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
        "Avant Browser/1.2.789rel1 (http://www.avantbrowser.com)",
        "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/532.5 (KHTML, like Gecko) Chrome/4.0.249.0 Safari/532.5",
        "Mozilla/5.0 (Windows; U; Windows NT 5.2; en-US) AppleWebKit/532.9 (KHTML, like Gecko) Chrome/5.0.310.0 Safari/532.9",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US) AppleWebKit/534.7 (KHTML, like Gecko) Chrome/7.0.514.0 Safari/534.7",
        "Mozilla/5.0 (Windows; U; Windows NT 6.0; en-US) AppleWebKit/534.14 (KHTML, like Gecko) Chrome/9.0.601.0 Safari/534.14",
        "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.14 (KHTML, like Gecko) Chrome/10.0.601.0 Safari/534.14",
        "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.20 (KHTML, like Gecko) Chrome/11.0.672.2 Safari/534.20",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.27 (KHTML, like Gecko) Chrome/12.0.712.0 Safari/534.27",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/13.0.782.24 Safari/535.1",
        "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/535.2 (KHTML, like Gecko) Chrome/15.0.874.120 Safari/535.2",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.7 (KHTML, like Gecko) Chrome/16.0.912.36 Safari/535.7",
        "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.9.0.10) Gecko/2009042316 Firefox/3.0.10",
        "Mozilla/5.0 (Windows; U; Windows NT 6.0; en-GB; rv:1.9.0.11) Gecko/2009060215 Firefox/3.0.11 (.NET CLR 3.5.30729)",
        "Mozilla/5.0 (Windows; U; Windows NT 6.0; en-US; rv:1.9.1.6) Gecko/20091201 Firefox/3.5.6 GTB5",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; tr; rv:1.9.2.8) Gecko/20100722 Firefox/3.6.8 ( .NET CLR 3.5.30729; .NET4.0E)",
        "Mozilla/5.0 (Windows NT 6.1; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
        "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
        "Mozilla/5.0 (Windows NT 5.1; rv:5.0) Gecko/20100101 Firefox/5.0",
        "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0a2) Gecko/20110622 Firefox/6.0a2",
        "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:7.0.1) Gecko/20100101 Firefox/7.0.1",
        "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:2.0b4pre) Gecko/20100815 Minefield/4.0b4pre",
        "Mozilla/4.0 (compatible; MSIE 5.5; Windows NT 5.0 )",
        "Mozilla/4.0 (compatible; MSIE 5.5; Windows 98; Win 9x 4.90)",
        "Mozilla/5.0 (Windows; U; Windows XP) Gecko MultiZilla/1.6.1.0a",
        "Mozilla/2.02E (Win95; U)",
        "Mozilla/3.01Gold (Win95; I)",
        "Mozilla/4.8 [en] (Windows NT 5.1; U)",
        "Mozilla/5.0 (Windows; U; Win98; en-US; rv:1.4) Gecko Netscape/7.1 (ax)",
        "HTC_Dream Mozilla/5.0 (Linux; U; Android 1.5; en-ca; Build/CUPCAKE) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
        "Mozilla/5.0 (hp-tablet; Linux; hpwOS/3.0.2; U; de-DE) AppleWebKit/534.6 (KHTML, like Gecko) wOSBrowser/234.40.1 Safari/534.6 TouchPad/1.0",
        "Mozilla/5.0 (Linux; U; Android 1.5; en-us; sdk Build/CUPCAKE) AppleWebkit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
        "Mozilla/5.0 (Linux; U; Android 2.1; en-us; Nexus One Build/ERD62) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
        "Mozilla/5.0 (Linux; U; Android 2.2; en-us; Nexus One Build/FRF91) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
        "Mozilla/5.0 (Linux; U; Android 1.5; en-us; htc_bahamas Build/CRB17) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
        "Mozilla/5.0 (Linux; U; Android 2.1-update1; de-de; HTC Desire 1.19.161.5 Build/ERE27) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
        "Mozilla/5.0 (Linux; U; Android 2.2; en-us; Sprint APA9292KT Build/FRF91) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
        "Mozilla/5.0 (Linux; U; Android 1.5; de-ch; HTC Hero Build/CUPCAKE) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
        "Mozilla/5.0 (Linux; U; Android 2.2; en-us; ADR6300 Build/FRF91) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
        "Mozilla/5.0 (Linux; U; Android 2.1; en-us; HTC Legend Build/cupcake) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
        "Mozilla/5.0 (Linux; U; Android 1.5; de-de; HTC Magic Build/PLAT-RC33) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1 FirePHP/0.3",
        "Mozilla/5.0 (Linux; U; Android 1.6; en-us; HTC_TATTOO_A3288 Build/DRC79) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
        "Mozilla/5.0 (Linux; U; Android 1.0; en-us; dream) AppleWebKit/525.10 (KHTML, like Gecko) Version/3.0.4 Mobile Safari/523.12.2",
        "Mozilla/5.0 (Linux; U; Android 1.5; en-us; T-Mobile G1 Build/CRB43) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari 525.20.1",
        "Mozilla/5.0 (Linux; U; Android 1.5; en-gb; T-Mobile_G2_Touch Build/CUPCAKE) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
        "Mozilla/5.0 (Linux; U; Android 2.0; en-us; Droid Build/ESD20) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
        "Mozilla/5.0 (Linux; U; Android 2.2; en-us; Droid Build/FRG22D) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
        "Mozilla/5.0 (Linux; U; Android 2.0; en-us; Milestone Build/ SHOLS_U2_01.03.1) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
        "Mozilla/5.0 (Linux; U; Android 2.0.1; de-de; Milestone Build/SHOLS_U2_01.14.0) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
        "Mozilla/5.0 (Linux; U; Android 3.0; en-us; Xoom Build/HRI39) AppleWebKit/525.10 (KHTML, like Gecko) Version/3.0.4 Mobile Safari/523.12.2",
        "Mozilla/5.0 (Linux; U; Android 0.5; en-us) AppleWebKit/522 (KHTML, like Gecko) Safari/419.3",
        "Mozilla/5.0 (Linux; U; Android 1.1; en-gb; dream) AppleWebKit/525.10 (KHTML, like Gecko) Version/3.0.4 Mobile Safari/523.12.2",
        "Mozilla/5.0 (Linux; U; Android 2.0; en-us; Droid Build/ESD20) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
        "Mozilla/5.0 (Linux; U; Android 2.1; en-us; Nexus One Build/ERD62) AppleWebKit/530.17 (KHTML, like Gecko) Version/4.0 Mobile Safari/530.17",
        "Mozilla/5.0 (Linux; U; Android 2.2; en-us; Sprint APA9292KT Build/FRF91) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
        "Mozilla/5.0 (Linux; U; Android 2.2; en-us; ADR6300 Build/FRF91) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
        "Mozilla/5.0 (Linux; U; Android 2.2; en-ca; GT-P1000M Build/FROYO) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
        "Mozilla/5.0 (Linux; U; Android 3.0.1; fr-fr; A500 Build/HRI66) AppleWebKit/534.13 (KHTML, like Gecko) Version/4.0 Safari/534.13",
        "Mozilla/5.0 (Linux; U; Android 3.0; en-us; Xoom Build/HRI39) AppleWebKit/525.10 (KHTML, like Gecko) Version/3.0.4 Mobile Safari/523.12.2",
        "Mozilla/5.0 (Linux; U; Android 1.6; es-es; SonyEricssonX10i Build/R1FA016) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
        "Mozilla/5.0 (Linux; U; Android 1.6; en-us; SonyEricssonX10i Build/R1AA056) AppleWebKit/528.5 (KHTML, like Gecko) Version/3.1.2 Mobile Safari/525.20.1",
    ]

==============================

Getting the cookie:

    import requests
    import json
    import redis
    import logging
    from .settings import REDIS_URL

    logger = logging.getLogger(__name__)
    # Connect to Redis using REDIS_URL. decode_responses=True is a must:
    # without it the data comes back as bytes and is completely unusable here.
    reds = redis.Redis.from_url(REDIS_URL, db=2, decode_responses=True)
    login_url = 'http://haoduofuli.pw/wp-login.php'


    # Get the cookie
    def get_cookie(account, password):
        s = requests.Session()
        payload = {
            'log': account,
            'pwd': password,
            'rememberme': "forever",
            'wp-submit': "登录",  # the login form's submit value ("Log in"), sent as-is
            'redirect_to': "http://www.haoduofuli.pw/wp-admin/",
            'testcookie': "1"
        }
        response = s.post(login_url, data=payload)
        cookies = response.cookies.get_dict()
        logger.warning("Got the cookie! (account: %s)" % account)
        # Return the cookies as a JSON string so they can be stored in Redis
        return json.dumps(cookies)
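Why `json.dumps` at the end? Redis stores strings, not Python dicts, so the cookie dict is turned into a JSON string before it is saved and turned back into a dict with `json.loads` when it is read. A quick round trip shows the idea; the cookie names and values below are invented, a real login returns whatever the site sets.

```python
import json

# Hypothetical cookie dict, standing in for response.cookies.get_dict().
cookies = {
    "wordpress_logged_in": "alice|1700000000|token",
    "wordpress_test_cookie": "WP Cookie check",
}

stored = json.dumps(cookies)   # this string is what would be written to Redis
restored = json.loads(stored)  # this dict is what a spider would get back

print(type(stored).__name__, restored == cookies)
```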

===========================================================

Writing the cookie to Redis (this is distributed crawling, after all, so the other spiders have to be able to use the cookie too):

    def init_cookie(red, spidername):
        # reds (db=2) maps each account name to its password
        redkeys = reds.keys()
        for user in redkeys:
            password = reds.get(user)
            key = "%s:Cookies:%s--%s" % (spidername, user, password)
            # Only log in for accounts that have no cookie stored yet
            if red.get(key) is None:
                cookie = get_cookie(user, password)
                red.set(key, cookie)
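The key format matters because the middleware later cuts the account back out of the key with `split("Cookies:")[-1]`. Here is a small sketch of building the key and taking it apart again. The helper names are made up for illustration, and `split_account` assumes the account name itself never contains `--`.

```python
def cookie_key(spidername, user, password):
    """Build the Redis key the same way init_cookie does."""
    return "%s:Cookies:%s--%s" % (spidername, user, password)


def account_text(key):
    """Recover the 'user--password' part, as the middleware does."""
    return key.split("Cookies:")[-1]


def split_account(text):
    """Split 'user--password' at the first '--' (hypothetical helper)."""
    user, _, password = text.partition("--")
    return user, password


# Spider name, account and password are made-up example values.
key = cookie_key("haoduofuli", "alice", "s3cret")
text = account_text(key)
user, password = split_account(text)

print(key)
print(text)
print(user, password)
```

Note that this scheme puts the password in the key name in plain text, so anyone who can list keys in that Redis database can read it.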

===============================================================

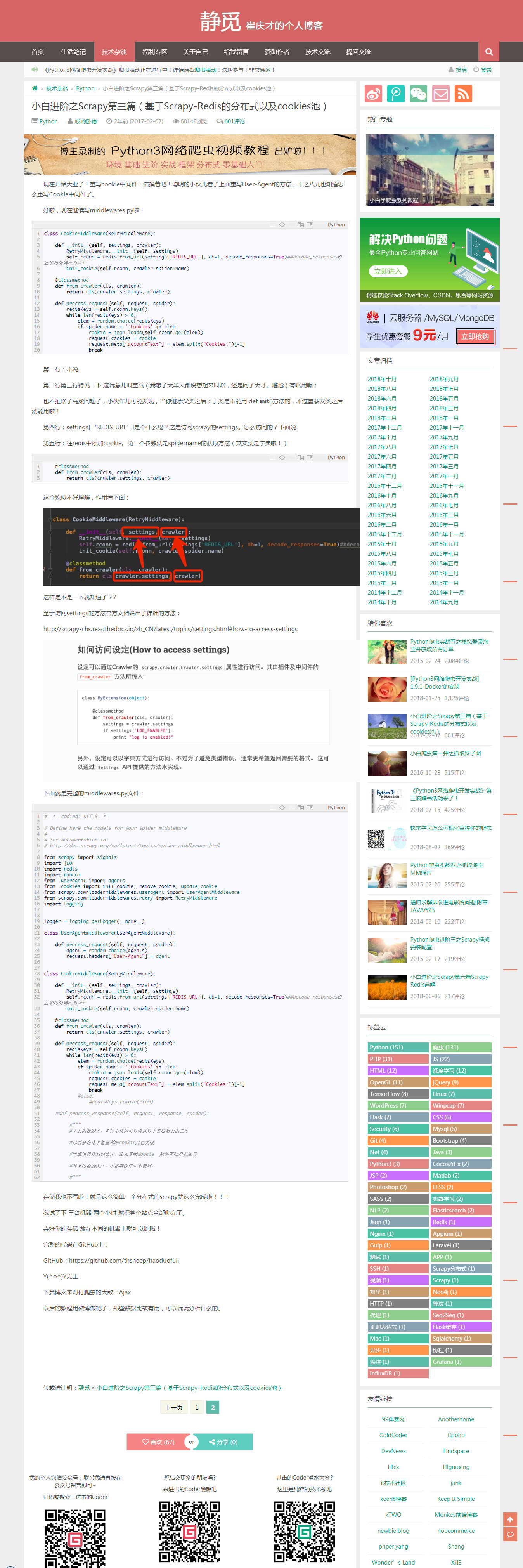

The complete middlewares.py file:

    # -*- coding: utf-8 -*-

    # Define here the models for your spider middleware
    #
    # See documentation in:
    # http://doc.scrapy.org/en/latest/topics/spider-middleware.html

    from scrapy import signals
    import json
    import redis
    import random
    from .useragent import agents
    # remove_cookie and update_cookie are not shown in this post;
    # they must exist in cookies.py for this import to work
    from .cookies import init_cookie, remove_cookie, update_cookie
    from scrapy.downloadermiddlewares.useragent import UserAgentMiddleware
    from scrapy.downloadermiddlewares.retry import RetryMiddleware
    import logging


    logger = logging.getLogger(__name__)


    class UserAgentmiddleware(UserAgentMiddleware):

        def process_request(self, request, spider):
            # Pick a random User-Agent for every request
            agent = random.choice(agents)
            request.headers["User-Agent"] = agent


    class CookieMiddleware(RetryMiddleware):

        def __init__(self, settings, crawler):
            RetryMiddleware.__init__(self, settings)
            # decode_responses=True makes the values come back as str
            self.rconn = redis.from_url(settings['REDIS_URL'], db=1, decode_responses=True)
            init_cookie(self.rconn, crawler.spider.name)

        @classmethod
        def from_crawler(cls, crawler):
            return cls(crawler.settings, crawler)

        def process_request(self, request, spider):
            redisKeys = self.rconn.keys()
            while len(redisKeys) > 0:
                elem = random.choice(redisKeys)
                if spider.name + ':Cookies' in elem:
                    cookie = json.loads(self.rconn.get(elem))
                    request.cookies = cookie
                    request.meta["accountText"] = elem.split("Cookies:")[-1]
                    break
                else:
                    # Drop keys that belong to something else; without this the
                    # loop never ends when no cookie key for this spider exists
                    redisKeys.remove(elem)

        #def process_response(self, request, response, spider):

            #"""
            # I deleted what comes next; you can try finishing the rest yourselves.

            # This is the place to check whether the cookie has expired

            # and then act on it, e.g. update the cookie or delete accounts that no longer work.

            # If you can't write it, no worries; the program still runs fine without it.

            #"""
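The post leaves `process_response` as an exercise. One possible starting point is a small helper that guesses whether a response means the cookie has expired. Everything in this sketch is an assumption on my part, not the author's method: the function name `cookie_looks_expired`, the idea that an expired session shows up as a redirect to the login page, and the choice of status codes. The login path is taken from `login_url` in cookies.py.

```python
from urllib.parse import urlsplit

# Path of the login page, taken from login_url in cookies.py.
LOGIN_PATH = "/wp-login.php"


def cookie_looks_expired(status, location=None):
    """Guess whether a response means the session cookie is no longer valid.

    Assumption (not from the original post): an expired session shows up
    either as a 401/403 status or as a redirect to the login page.
    """
    if status in (401, 403):
        return True
    if status in (301, 302, 303, 307) and location:
        return urlsplit(location).path == LOGIN_PATH
    return False


# A normal page, a redirect to the login page, and a redirect elsewhere.
ok_page = cookie_looks_expired(200)
to_login = cookie_looks_expired(302, "http://haoduofuli.pw/wp-login.php?redirect_to=%2F")
elsewhere = cookie_looks_expired(302, "http://haoduofuli.pw/some-post/")
print(ok_page, to_login, elsewhere)
```

Inside `process_response` you could call something like this with `response.status` and the `Location` header, then refresh or delete the cookie for the account stored in `request.meta["accountText"]` and retry the request. Check what your target site actually does when a session expires before relying on these rules.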