It is time to write down how to use the GStreamer library to mux an H.264 stream into video files such as AVI, MP4, and FLV.

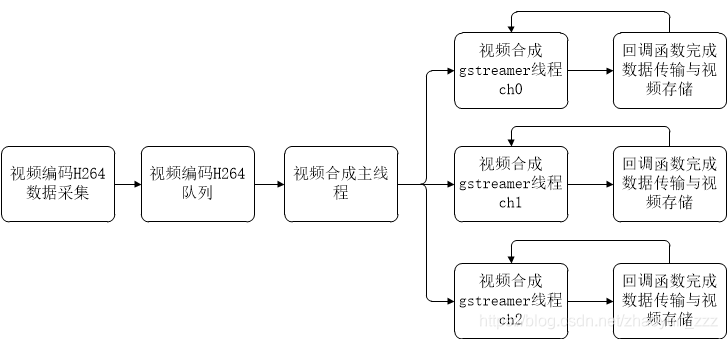

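The figure shows the video muxing flow used in this article's example. The code for H.264 capture and for the queue implementation is not included.

The overall idea: the "video muxing main thread" creates one GStreamer muxing pipeline thread per video data channel. All remaining muxing and file-storage work is then done in the callbacks of the pipeline's appsrc and appsink.

Synchronization between the video muxing main thread and the GStreamer pipeline threads is handled by the following flag and semaphores: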

guint record_flag;

sem_t frame_put;

sem_t frame_get;

sem_t record_on;
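To make the handshake concrete, here is a minimal sketch of how these three semaphores and the flag cooperate, with the GStreamer calls replaced by a counter. The names `DemoChannel`, `demo_consumer`, and `demo_run_handshake` are hypothetical and exist only for this illustration. The ordering of `sem_post` and `sem_wait` mirrors the full listing further down.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>
#include <string.h>

/* Hypothetical, stripped-down stand-in for the per-channel state. */
typedef struct {
    unsigned record_flag;     /* 1: keep recording, 0: finish the current file */
    sem_t frame_put;          /* main thread -> pipeline: a frame or a stop request is ready */
    sem_t frame_get;          /* pipeline -> main thread: the frame has been taken */
    sem_t record_on;          /* pipeline -> main thread: the pipeline is running */
    unsigned frames_consumed; /* stands in for "push-buffer" calls */
} DemoChannel;

/* Plays the role of the pipeline thread and its need-data callback. */
static void *demo_consumer(void *arg)
{
    DemoChannel *ch = arg;

    ch->record_flag = 1;
    sem_post(&ch->record_on);           /* tell the main thread we are ready */

    for (;;) {
        sem_wait(&ch->frame_put);       /* wait for a frame or a stop request */
        if (ch->record_flag == 0) {     /* stop: the real code emits end-of-stream here */
            sem_post(&ch->frame_get);
            break;
        }
        ch->frames_consumed++;          /* the real code pushes the buffer into appsrc */
        sem_post(&ch->frame_get);       /* the main thread may now reuse the frame */
    }
    return NULL;
}

/* Plays the role of the main thread: feeds n_frames, then requests a stop.
 * Returns how many frames the consumer saw. */
static unsigned demo_run_handshake(unsigned n_frames)
{
    DemoChannel ch;
    pthread_t tid;

    memset(&ch, 0, sizeof(ch));
    sem_init(&ch.frame_put, 0, 0);
    sem_init(&ch.frame_get, 0, 0);
    sem_init(&ch.record_on, 0, 0);

    int rc = pthread_create(&tid, NULL, demo_consumer, &ch);
    assert(rc == 0);
    (void)rc;

    sem_wait(&ch.record_on);            /* do not feed before the pipeline is up */
    for (unsigned i = 0; i < n_frames; i++) {
        sem_post(&ch.frame_put);
        sem_wait(&ch.frame_get);
    }

    ch.record_flag = 0;                 /* ask the consumer to close the file */
    sem_post(&ch.frame_put);
    sem_wait(&ch.frame_get);

    pthread_join(tid, NULL);
    sem_destroy(&ch.frame_put);
    sem_destroy(&ch.frame_get);
    sem_destroy(&ch.record_on);
    return ch.frames_consumed;
}
```

Because every `sem_post(&frame_put)` is paired with a `sem_wait(&frame_get)`, the main thread never overwrites a frame that the pipeline side is still reading.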

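Notes on using some elements of the gstreamer-1.0 library:

- appsrc: every H.264 frame has a different length, so the data length must be specified again each time data is pushed into it.

- When a video file is finished, always send the end-of-stream signal through appsrc. Otherwise the file ends abnormally, its file information is incomplete, and it cannot be played.

- When using the splitmuxsink element, its muxer property cannot be set to flvmux. splitmuxsink only supports the video_%u form when it requests the muxer's video pad dynamically, while flvmux names its pad video, so the combination is not supported.

- The mux elements accept different H.264 input formats: some take byte-stream, others take avc. The difference is that in avc format each 00 00 00 01 delimiter is replaced by the length of the data that follows it. The length is big-endian and occupies bytes 1, 2, and 3 (counting from 0), with the most significant byte leftmost, starting at byte 1. This matters most for video "I" frames, which contain the SPS, the PPS, and the I-frame itself: every delimiter must be replaced by a length, and the length does not include the delimiter itself.

- The h264parse element has a problem: for video "I" frames around 50K in length, data is lost when converting from byte-stream to avc format.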
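Since the notes above advise doing the byte-stream to avc conversion by hand, here is a minimal sketch of that step. It assumes every NAL unit in the frame is preceded by the 4-byte delimiter 00 00 00 01 (3-byte delimiters are not handled) and rewrites the buffer in place. The function names are hypothetical and not part of GStreamer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Returns the offset of the next 00 00 00 01 at or after `from`,
 * or `len` if there is none. */
static size_t find_start_code(const uint8_t *buf, size_t len, size_t from)
{
    for (size_t i = from; i + 4 <= len; i++) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 0 && buf[i + 3] == 1)
            return i;
    }
    return len;
}

/* Replaces each 4-byte delimiter with the big-endian length of the NAL unit
 * that follows it. The length excludes the 4 delimiter bytes.
 * Returns the number of NAL units converted, or -1 if the buffer does not
 * begin with a delimiter. */
static int annexb_to_avc_inplace(uint8_t *buf, size_t len)
{
    if (len < 4 || find_start_code(buf, len, 0) != 0)
        return -1;

    int count = 0;
    size_t pos = 0;                      /* always points at a delimiter */
    while (pos + 4 <= len) {
        size_t next = find_start_code(buf, len, pos + 4);
        size_t nal_len = next - (pos + 4);

        buf[pos]     = (uint8_t)(nal_len >> 24);  /* zero for NAL units under 16 MiB */
        buf[pos + 1] = (uint8_t)(nal_len >> 16);
        buf[pos + 2] = (uint8_t)(nal_len >> 8);
        buf[pos + 3] = (uint8_t)(nal_len);

        count++;
        pos = next;
    }
    return count;
}
```

For an "I" frame made of SPS, PPS, and the IDR slice, the function rewrites three delimiters in one pass. The caps on appsrc would then also have to declare the avc stream format instead of byte-stream, which this sketch does not cover.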

#define _GNU_SOURCE /* public feature-test macro; __USE_GNU is glibc-internal */

#include <sched.h>

#include <gst/gst.h>

#include <gst/app/gstappsrc.h>

#include <gst/app/gstappsink.h>

#include <stdio.h>

#include <string.h>

#include <unistd.h>

#include <stdlib.h>

#include <assert.h>

#include <sys/types.h>

#include <sys/un.h>

#include <fcntl.h>

#include <sys/time.h>

#include <sys/mman.h>

#include <sys/ioctl.h>

#include <linux/fb.h>

#include <signal.h>

#include <pthread.h>

#include <semaphore.h>

#include <errno.h>

#include "media.h"

#include "queue.h"

typedef struct _GstDataStruct{

GstElement *pipeline;

GstElement *appsrc;

GstElement *appsink;

//GstElement *h264parse;

//GstElement *muxfile;

GstElement *avimux;

guint sourceid;

guint appsrc_index;

guint appsink_index;

guint bus_watch_id;

GstBus *bus;

GMainLoop *loop; // GLib's Main Loop

REC_MSG *rec_msg;

guint record_flag;

guint ch;

sem_t frame_put;

sem_t frame_get;

sem_t record_on;

unsigned int width;

unsigned int height;

unsigned int fps;

char* filename;

FILE *vfile;

} MuxGstDataStruct;

#define RECORD_DIR "/mnt/httpsrv/av_record/"

#define RECORD_TIME_SEC (3 * 60)

extern unsigned long q_record;

extern int start_wait_iframe0;

extern int start_wait_iframe1;

extern int start_wait_iframe2;

extern int start_wait_iframe3;

extern int had_camera_3;

extern char startup_ID[64];

extern unsigned int bytes_per_frame;

extern int video_base_ts_uninit;

REC_MSG video_rec_msg;

MuxGstDataStruct ch0_AviMuxGst;

MuxGstDataStruct ch1_AviMuxGst;

MuxGstDataStruct ch2_AviMuxGst;

MuxGstDataStruct ch3_AviMuxGst;

unsigned int ch0_online;

unsigned int ch0_width;

unsigned int ch0_height;

unsigned int ch0_fps;

unsigned int ch1_online;

unsigned int ch1_width;

unsigned int ch1_height;

unsigned int ch1_fps;

unsigned int ch2_online;

unsigned int ch2_width;

unsigned int ch2_height;

unsigned int ch2_fps;

unsigned int ch3_online;

unsigned int ch3_width;

unsigned int ch3_height;

unsigned int ch3_fps;

#define MAX_FILE_NAME (96)

char filename0[MAX_FILE_NAME] = {0};

char filename1[MAX_FILE_NAME] = {0};

char filename2[MAX_FILE_NAME] = {0};

char filename3[MAX_FILE_NAME] = {0}; // add by luke zhao 2018.6.14, used for 360 video

static gboolean avi_mux_bus_msg_call(GstBus *bus, GstMessage *msg, MuxGstDataStruct *pAviMuxGst)

{

gchar *debug;

GError *error;

GMainLoop *loop = pAviMuxGst->loop;

GST_DEBUG ("ch:%d, got message %s", pAviMuxGst->ch, gst_message_type_get_name (GST_MESSAGE_TYPE (msg)));

switch (GST_MESSAGE_TYPE(msg))

{

case GST_MESSAGE_EOS:

printf("ch:%d, End of stream\n", pAviMuxGst->ch);

fflush(pAviMuxGst->vfile);

fclose(pAviMuxGst->vfile);

g_main_loop_quit(loop);

break;

case GST_MESSAGE_ERROR:

gst_message_parse_error(msg, &error, &debug);

g_free(debug);

g_printerr("ch:%d, Error: %s\n", pAviMuxGst->ch, error->message);

g_error_free(error);

g_main_loop_quit(loop);

break;

default:

break;

}

return TRUE;

}

static void start_feed(GstElement * pipeline, guint size, MuxGstDataStruct *pAviMuxGst)

{

GstFlowReturn ret;

GstBuffer *buffer;

GstMemory *memory;

gpointer data;

gsize len;

sem_wait(&pAviMuxGst->frame_put);

if(pAviMuxGst->record_flag == 0)

{

printf("ch:%d, end of stream change to new file!\n", pAviMuxGst->ch);

g_signal_emit_by_name (pAviMuxGst->appsrc, "end-of-stream", &ret);

}

else

{

data = (gpointer)video_rec_msg.frame;

len = (gsize)video_rec_msg.used_size;

/* "blocksize" is an unsigned integer property, so pass the length as a number, not as a string */

g_object_set(G_OBJECT(pAviMuxGst->appsrc), "blocksize", (guint)len, NULL);

gst_app_src_set_size (GST_APP_SRC(pAviMuxGst->appsrc), (gint64)len);

//printf("ch:%d, get frame:%p, len:%d, szTemp:%s!!!!\n", pAviMuxGst->ch, data, len, szTemp);

pAviMuxGst->appsrc_index++;

buffer = gst_buffer_new();

memory = gst_memory_new_wrapped(GST_MEMORY_FLAG_READONLY, data, len, 0, len, NULL, NULL);

gst_buffer_append_memory (buffer, memory);

g_signal_emit_by_name (pAviMuxGst->appsrc, "push-buffer", buffer, &ret);

gst_buffer_unref(buffer);

}

sem_post(&pAviMuxGst->frame_get);

}

static void stop_feed(GstElement * pipeline, MuxGstDataStruct *pAviMuxGst)

{

g_print("ch:%d, stop feed ...................\n", pAviMuxGst->ch);

// if (pMuxGstData->sourceid != 0)

// {

// //GST_DEBUG ("ch:%d, stop feeding...\n", pAviMuxGst->ch);

// g_source_remove (pAviMuxGst->sourceid);

// pAviMuxGst->sourceid = 0;

// }

}

static void new_sample_on_appsink (GstElement *sink, MuxGstDataStruct *pAviMuxGst)

{

int ret = 0;

GstSample *sample = NULL;

struct timeval tvl;

gettimeofday(&tvl, NULL);

g_signal_emit_by_name (sink, "pull-sample", &sample);

if(sample)

{

pAviMuxGst->appsink_index++;

GstBuffer *buffer = gst_sample_get_buffer(sample);

GstMapInfo info;

if(gst_buffer_map((buffer), &info, GST_MAP_READ))

{

//printf("ch:%d, mux appsink rcv data len:%d time: %d, index:%d!\n", pAviMuxGst->ch,

// (unsigned int)info.size, (unsigned int)tvl.tv_sec, pAviMuxGst->appsink_index);

fwrite(info.data, info.size, 1, pAviMuxGst->vfile);

fflush(pAviMuxGst->vfile);

gst_buffer_unmap(buffer, &info);

}

gst_sample_unref(sample);

}

}

static int thr_avi_mux_gst_pipeline(void* args)

{

MuxGstDataStruct *pAviMuxGst;

//char elementname[32] = {0};

cpu_set_t mask;

__CPU_ZERO_S (sizeof (cpu_set_t), &mask);

__CPU_SET_S (0, sizeof (cpu_set_t), &mask);

pthread_setaffinity_np(pthread_self(), sizeof(mask), &mask);

re_start:

pAviMuxGst = (MuxGstDataStruct*)args;

printf("============= ch:%d, mux gst init start ============\n", pAviMuxGst->ch);

gst_init (NULL, NULL);

printf("============ ch:%d, create mux pipeline ============\n", pAviMuxGst->ch);

printf("===== ch:%d, width:%d, height:%d, framerate:%d =====\n",

pAviMuxGst->ch, pAviMuxGst->width, pAviMuxGst->height, pAviMuxGst->fps);

pAviMuxGst->pipeline = gst_pipeline_new ("avimux pipeline");

pAviMuxGst->appsrc = gst_element_factory_make ("appsrc", "appsrc");

pAviMuxGst->appsink = gst_element_factory_make ("appsink", "appsink");

//pAviMuxGst->h264parse = gst_element_factory_make ("h264parse", "h264parse");

pAviMuxGst->avimux = gst_element_factory_make ("avimux", "avimux");

//pAviMuxGst->muxfile = gst_element_factory_make ("filesink", "filesink");

if (!pAviMuxGst->appsrc || !pAviMuxGst->avimux || !pAviMuxGst->appsink)

{

g_printerr ("ch:%d:not all element could be created... Exit\n", pAviMuxGst->ch);

printf("ch:%d, appsrc:%p, mux:%p, appsink:%p !!\n", pAviMuxGst->ch,

pAviMuxGst->appsrc, pAviMuxGst->avimux, pAviMuxGst->appsink);

return -1;

}

printf("============= ch:%d, link mux pipeline =============\n", pAviMuxGst->ch);

g_object_set(G_OBJECT(pAviMuxGst->appsrc), "stream-type", 0, "format", GST_FORMAT_TIME, NULL);

g_object_set(G_OBJECT(pAviMuxGst->appsrc), "min-percent", 0, NULL);

GstCaps *caps_h264_byte;

caps_h264_byte = gst_caps_new_simple("video/x-h264", "stream-format", G_TYPE_STRING,"byte-stream",

"alignment", G_TYPE_STRING, "au",

"width", G_TYPE_INT, pAviMuxGst->width,

"height", G_TYPE_INT, pAviMuxGst->height,

"framerate",GST_TYPE_FRACTION, pAviMuxGst->fps, 1, NULL);

g_object_set(G_OBJECT(pAviMuxGst->appsrc), "caps", caps_h264_byte, NULL);

g_signal_connect(pAviMuxGst->appsrc, "need-data", G_CALLBACK(start_feed), (gpointer)pAviMuxGst);

g_signal_connect(pAviMuxGst->appsrc, "enough-data", G_CALLBACK(stop_feed), (gpointer)pAviMuxGst);

// g_object_set(G_OBJECT(pAviMuxGst->muxfile), "location", pAviMuxGst->filename,

// "sync", FALSE, "buffer-mode", 2, NULL);

g_object_set(G_OBJECT(pAviMuxGst->appsink), "emit-signals", TRUE, "sync", FALSE, "async", FALSE, NULL);

g_signal_connect(pAviMuxGst->appsink, "new-sample", G_CALLBACK(new_sample_on_appsink), pAviMuxGst);

gst_bin_add_many(GST_BIN(pAviMuxGst->pipeline),pAviMuxGst->appsrc, pAviMuxGst->avimux, pAviMuxGst->appsink, NULL);

if(gst_element_link_filtered(pAviMuxGst->appsrc, pAviMuxGst->avimux, caps_h264_byte) != TRUE)

{

g_printerr ("ch:%d, pAviMuxGst->appsrc could not link pAviMuxGst->avimux\n", pAviMuxGst->ch);

gst_object_unref (pAviMuxGst->pipeline);

return -1;

}

if(gst_element_link(pAviMuxGst->avimux, pAviMuxGst->appsink) != TRUE)

{

g_printerr ("ch:%d, pAviMuxGst->avimux could not link pAviMuxGst->appsink\n", pAviMuxGst->ch);

gst_object_unref (pAviMuxGst->pipeline);

return -1;

}

gst_caps_unref (caps_h264_byte);

pAviMuxGst->bus = gst_pipeline_get_bus(GST_PIPELINE(pAviMuxGst->pipeline));

pAviMuxGst->bus_watch_id = gst_bus_add_watch(pAviMuxGst->bus, (GstBusFunc)avi_mux_bus_msg_call, (gpointer)pAviMuxGst);

gst_object_unref(pAviMuxGst->bus);

printf("=========== ch:%d, link mux pipeline ok ! ============\n", pAviMuxGst->ch);

printf("======== ch:%d, mux pipeline start to playing! =======\n", pAviMuxGst->ch);

pAviMuxGst->record_flag = 1;

sem_post(&pAviMuxGst->record_on);

gst_element_set_state (pAviMuxGst->pipeline, GST_STATE_PLAYING);

pAviMuxGst->loop = g_main_loop_new(NULL, FALSE); // Create gstreamer loop

g_main_loop_run(pAviMuxGst->loop); // Loop will run until receiving EOS (end-of-stream), will block here

printf("== ch:%d, g_main_loop_run returned, stopping record ==\n", pAviMuxGst->ch);

gst_element_set_state (pAviMuxGst->pipeline, GST_STATE_NULL); // Stop pipeline to be released

printf("============ ch:%d, deleting mux pipeline ============\n", pAviMuxGst->ch);

gst_object_unref (pAviMuxGst->pipeline); // This will also delete all pipeline elements

g_source_remove(pAviMuxGst->bus_watch_id);

g_main_loop_unref(pAviMuxGst->loop);

if(pAviMuxGst->record_flag == 0)

{

printf("======= ch:%d, pipeline going to restart! =======\n", pAviMuxGst->ch);

goto re_start;

}

return 0;

}

void *thr_record(void *arg)

{

int ret;

unsigned int frame_size;

FILE *afp0 = NULL;

FILE *afp1 = NULL;

unsigned int count0 = 0;

unsigned int count1 = 0;

unsigned int count2 = 0;

unsigned int count3 = 0; // add by luke zhao 2018.6.14, used for 360 video

int file_count0 = 0;

int file_count1 = 0;

int file_count2 = 0;

int file_count3 = 0; // add by luke zhao 2018.6.14, used for 360 video

int record_wait_iframe0 = 0;

int record_wait_iframe1 = 0;

int record_wait_iframe2 = 0;

int record_wait_iframe3 = 0; // add by luke zhao 2018.6.14, used for 360 video

char audiofilename0[MAX_FILE_NAME] = {0};

char audiofilename1[MAX_FILE_NAME] = {0};

char filename0_index[128] = {0};

char filename1_index[128] = {0};

char filename2_index[128] = {0};

char filename3_index[128] = {0}; // add by luke zhao 2018.6.14, used for 360 video

struct media_header head = {0};

struct timeval tvl;

struct timeval ch0_video_base_time;

struct timeval ch1_video_base_time;

struct timeval ch2_video_base_time;

struct timeval ch3_video_base_time;

int audio0_fsize = 0;

int audio1_fsize = 0;

int audio0_stop = 0;

int audio1_stop = 0;

pthread_t tid;

cpu_set_t mask;

__CPU_ZERO_S (sizeof (cpu_set_t), &mask);

__CPU_SET_S (0, sizeof (cpu_set_t), &mask);

pthread_setaffinity_np(pthread_self(), sizeof(mask), &mask);

memset((unsigned char*)&ch0_AviMuxGst, 0, sizeof(MuxGstDataStruct));

memset((unsigned char*)&ch1_AviMuxGst, 0, sizeof(MuxGstDataStruct));

memset((unsigned char*)&ch2_AviMuxGst, 0, sizeof(MuxGstDataStruct));

memset((unsigned char*)&ch3_AviMuxGst, 0, sizeof(MuxGstDataStruct));

sem_init(&ch0_AviMuxGst.frame_put, 0, 0);

sem_init(&ch0_AviMuxGst.frame_get, 0, 0);

sem_init(&ch0_AviMuxGst.record_on, 0, 0);

sem_init(&ch1_AviMuxGst.frame_put, 0, 0);

sem_init(&ch1_AviMuxGst.frame_get, 0, 0);

sem_init(&ch1_AviMuxGst.record_on, 0, 0);

sem_init(&ch2_AviMuxGst.frame_put, 0, 0);

sem_init(&ch2_AviMuxGst.frame_get, 0, 0);

sem_init(&ch2_AviMuxGst.record_on, 0, 0);

sem_init(&ch3_AviMuxGst.frame_put, 0, 0);

sem_init(&ch3_AviMuxGst.frame_get, 0, 0);

sem_init(&ch3_AviMuxGst.record_on, 0, 0);

printf("thr_record start!!!!!+++++++++++++++++++++\n");

while(1)

{

REC_MSG* rec_msg;

char *pbuf;

ret = q_rcv(q_record, (void *)&video_rec_msg, sizeof(REC_MSG));

if (unlikely(ret < 0)) printf("q_rcv at q_record fault: ret = %d\n", ret);

rec_msg = &video_rec_msg;

pbuf = (void *)rec_msg->frame;

if (unlikely(video_base_ts_uninit))

{

if(rec_msg->msg_type != MEDIA_VIDEO) continue;

if((rec_msg->channel != 0) && (rec_msg->channel != 1) && (rec_msg->channel != 2)) continue;

video_base_ts_uninit = 0;

}

if(rec_msg->msg_type == MEDIA_VIDEO)

{

if(start_wait_iframe0 && (rec_msg->channel == 0))

{

if(!is_iframe(pbuf[4])) continue;

start_wait_iframe0 = 0;

gettimeofday(&tvl, NULL);

localtime_r(&tvl.tv_sec, &head.tm);

sprintf(filename0_index, "%d-%02d-%02d_%02d_%02d_%02d_ch%d_%s_%d",

1900 + head.tm.tm_year,1 + head.tm.tm_mon, head.tm.tm_mday,

head.tm.tm_hour, head.tm.tm_min, head.tm.tm_sec, 0, startup_ID, 0);

snprintf(filename0, sizeof(filename0), "%s%s.avi", RECORD_DIR, filename0_index);

printf("video filename0 %s\n", filename0);

ch0_video_base_time.tv_sec = rec_msg->ts_sec;

add_file(filename0);

ch0_AviMuxGst.vfile = fopen(filename0,"ab");

ch0_AviMuxGst.filename = filename0;

ch0_AviMuxGst.ch = 0;

ch0_AviMuxGst.width = ch0_width;

ch0_AviMuxGst.height = ch0_height;

ch0_AviMuxGst.fps = ch0_fps;

if(ch0_online)

{

ret = pthread_create(&tid, NULL, thr_avi_mux_gst_pipeline, (void*)&ch0_AviMuxGst);

printf("ch0 create thr_avi_mux_gst_pipeline thread, ret = %d \n", ret);

}

sem_wait(&ch0_AviMuxGst.record_on);

}

if(start_wait_iframe1 && (rec_msg->channel == 1))

{

if(!is_iframe(pbuf[4])) continue;

start_wait_iframe1 = 0;

gettimeofday(&tvl, NULL);

localtime_r(&tvl.tv_sec, &head.tm);

sprintf(filename1_index, "%d-%02d-%02d_%02d_%02d_%02d_ch%d_%s_%d",

1900 + head.tm.tm_year,1 + head.tm.tm_mon, head.tm.tm_mday,

head.tm.tm_hour, head.tm.tm_min, head.tm.tm_sec, 1, startup_ID, 0);

snprintf(filename1, sizeof(filename1), "%s%s.avi", RECORD_DIR, filename1_index);

printf("video filename1 %s\n", filename1);

ch1_video_base_time.tv_sec = rec_msg->ts_sec;

add_file(filename1);

ch1_AviMuxGst.vfile = fopen(filename1,"ab");

ch1_AviMuxGst.filename = filename1;

ch1_AviMuxGst.ch = 1;

ch1_AviMuxGst.width = ch1_width;

ch1_AviMuxGst.height = ch1_height;

ch1_AviMuxGst.fps = ch1_fps;

if(ch1_online)

{

ret = pthread_create(&tid, NULL, thr_avi_mux_gst_pipeline, (void*)&ch1_AviMuxGst);

printf("ch1 create thr_avi_mux_gst_pipeline thread, ret = %d \n", ret);

}

sem_wait(&ch1_AviMuxGst.record_on);

}

if(start_wait_iframe3 && (rec_msg->channel == 3))

{

if(!is_iframe(pbuf[4])) continue;

start_wait_iframe3 = 0;

gettimeofday(&tvl, NULL);

localtime_r(&tvl.tv_sec, &head.tm);

sprintf(filename3_index, "%d-%02d-%02d_%02d_%02d_%02d_ch%d_%s_%d",

1900 + head.tm.tm_year,1 + head.tm.tm_mon, head.tm.tm_mday,

head.tm.tm_hour, head.tm.tm_min, head.tm.tm_sec, 3, startup_ID,0);

snprintf(filename3, sizeof(filename3), "%s%s.avi", RECORD_DIR, filename3_index);

printf("video filename3 %s\n", filename3);

ch3_video_base_time.tv_sec = rec_msg->ts_sec;

add_file(filename3);

ch3_AviMuxGst.vfile = fopen(filename3,"ab");

ch3_AviMuxGst.filename = filename3;

ch3_AviMuxGst.ch = 3;

ch3_AviMuxGst.width = ch3_width;

ch3_AviMuxGst.height = ch3_height;

ch3_AviMuxGst.fps = ch3_fps;

if(ch3_online)

{

ret = pthread_create(&tid, NULL, thr_avi_mux_gst_pipeline, (void*)&ch3_AviMuxGst);

printf("ch3 create thr_avi_mux_gst_pipeline thread, ret = %d \n", ret);

}

sem_wait(&ch3_AviMuxGst.record_on);

}

if(had_camera_3 && start_wait_iframe2 && (rec_msg->channel == 2))

{

if(!is_iframe(pbuf[4])) continue;

start_wait_iframe2 = 0;

gettimeofday(&tvl, NULL);

localtime_r(&tvl.tv_sec, &head.tm);

sprintf(filename2_index, "%d-%02d-%02d_%02d_%02d_%02d_ch%d_%s_%d",

1900 + head.tm.tm_year,1 + head.tm.tm_mon, head.tm.tm_mday,

head.tm.tm_hour, head.tm.tm_min, head.tm.tm_sec, 2, startup_ID, 0);

snprintf(filename2, sizeof(filename2), "%s%s.avi", RECORD_DIR, filename2_index);

printf("video filename2 %s\n", filename2);

ch2_video_base_time.tv_sec = rec_msg->ts_sec;

add_file(filename2);

ch2_AviMuxGst.vfile = fopen(filename2,"ab");

ch2_AviMuxGst.filename = filename2;

ch2_AviMuxGst.ch = 2;

ch2_AviMuxGst.width = ch2_width;

ch2_AviMuxGst.height = ch2_height;

ch2_AviMuxGst.fps = ch2_fps;

if(ch2_online)

{

ret = pthread_create(&tid, NULL, thr_avi_mux_gst_pipeline, (void*)&ch2_AviMuxGst);

printf("ch2 create thr_avi_mux_gst_pipeline thread, ret = %d \n", ret);

}

sem_wait(&ch2_AviMuxGst.record_on);

}

if(rec_msg->channel == 0)

{

count0 = rec_msg->ts_sec - ch0_video_base_time.tv_sec;

if(count0 == RECORD_TIME_SEC || record_wait_iframe0 == 1)

{

if(is_iframe(pbuf[4]))

{

printf("ch0 video use time count0: %d msg sec %d, base:%ld \n",

count0, rec_msg->ts_sec, ch0_video_base_time.tv_sec);

memset(&head.tm, 0, sizeof(struct tm));

gettimeofday(&tvl, NULL);

localtime_r(&tvl.tv_sec, &head.tm);

ch0_video_base_time.tv_sec = rec_msg->ts_sec;

memset(filename0, 0, sizeof(filename0));

memset(filename0_index, 0, sizeof(filename0_index));

sprintf(filename0_index, "%d-%02d-%02d_%02d_%02d_%02d_ch%d_%s_%d",

1900 + head.tm.tm_year, 1 + head.tm.tm_mon, head.tm.tm_mday,

head.tm.tm_hour, head.tm.tm_min, head.tm.tm_sec, 0, startup_ID, ++file_count0);

snprintf(filename0, sizeof(filename0), "%s%s.avi", RECORD_DIR, filename0_index);

add_file(filename0);

record_wait_iframe0 = 0;

audio0_stop = 1;

//g_signal_emit_by_name (ch0_AviMuxGst.appsrc, "end-of-stream", &ret);

//gst_element_send_event(ch0_AviMuxGst.pipeline, gst_event_new_eos());

printf("ch0 video change to new file cur time:%ld, base time:%ld\n",

tvl.tv_sec, ch0_video_base_time.tv_sec);

ch0_AviMuxGst.record_flag = 0;

sem_post(&ch0_AviMuxGst.frame_put);

sem_wait(&ch0_AviMuxGst.frame_get);

sem_wait(&ch0_AviMuxGst.record_on);

ch0_AviMuxGst.vfile = fopen(filename0,"ab");

}

else

{

record_wait_iframe0 = 1;

}

}

sem_post(&ch0_AviMuxGst.frame_put);

sem_wait(&ch0_AviMuxGst.frame_get);

}

else if(rec_msg->channel == 1)

{

count1 = rec_msg->ts_sec - ch1_video_base_time.tv_sec;

if(count1 == RECORD_TIME_SEC || record_wait_iframe1 == 1)

{

if(is_iframe(pbuf[4]))

{

printf("ch1 video use time count1: %d msg sec %d, base:%ld \n",

count1, rec_msg->ts_sec, ch1_video_base_time.tv_sec);

memset(&head.tm, 0, sizeof(struct tm));

gettimeofday(&tvl, NULL);

localtime_r(&tvl.tv_sec, &head.tm);

ch1_video_base_time.tv_sec = rec_msg->ts_sec;

memset(filename1, 0, sizeof(filename1));

memset(filename1_index, 0, sizeof(filename1_index));

sprintf(filename1_index, "%d-%02d-%02d_%02d_%02d_%02d_ch%d_%s_%d",

1900 + head.tm.tm_year, 1 + head.tm.tm_mon, head.tm.tm_mday,

head.tm.tm_hour, head.tm.tm_min, head.tm.tm_sec, 1, startup_ID, ++file_count1);

snprintf(filename1, sizeof(filename1), "%s%s.avi", RECORD_DIR, filename1_index);

add_file(filename1);

record_wait_iframe1 = 0;

audio1_stop = 1;

//g_signal_emit_by_name(ch1_AviMuxGst.appsrc, "end-of-stream", &ret);

//gst_element_send_event(ch1_AviMuxGst.pipeline, gst_event_new_eos());

ch1_AviMuxGst.record_flag = 0;

printf("ch1 video change to new file cur time:%ld, base time:%ld\n",

tvl.tv_sec, ch1_video_base_time.tv_sec);

sem_post(&ch1_AviMuxGst.frame_put);

sem_wait(&ch1_AviMuxGst.frame_get);

sem_wait(&ch1_AviMuxGst.record_on);

ch1_AviMuxGst.vfile = fopen(filename1,"ab");

}

else

{

record_wait_iframe1 = 1;

}

}

sem_post(&ch1_AviMuxGst.frame_put);

sem_wait(&ch1_AviMuxGst.frame_get);

}

else if(rec_msg->channel == 2)

{

count2 = rec_msg->ts_sec - ch2_video_base_time.tv_sec;

if(count2 == RECORD_TIME_SEC || record_wait_iframe2 == 1)

{

if(is_iframe(pbuf[4]))

{

printf("ch2 video use time count2: %d msg sec %d, base:%ld \n",

count2, rec_msg->ts_sec, ch2_video_base_time.tv_sec);

memset(&head.tm, 0, sizeof(struct tm));

gettimeofday(&tvl, NULL);

localtime_r(&tvl.tv_sec, &head.tm);

ch2_video_base_time.tv_sec = rec_msg->ts_sec;

memset(filename2, 0, sizeof(filename2));

memset(filename2_index, 0, sizeof(filename2_index));

sprintf(filename2_index, "%d-%02d-%02d_%02d_%02d_%02d_ch%d_%s_%d",

1900 + head.tm.tm_year, 1 + head.tm.tm_mon, head.tm.tm_mday,

head.tm.tm_hour, head.tm.tm_min, head.tm.tm_sec, 2, startup_ID, ++file_count2);

snprintf(filename2, sizeof(filename2), "%s%s.avi", RECORD_DIR, filename2_index);

add_file(filename2);

record_wait_iframe2 = 0;

//g_signal_emit_by_name (ch2_AviMuxGst.appsrc, "end-of-stream", &ret);

//gst_element_send_event(ch2_AviMuxGst.pipeline, gst_event_new_eos());

printf("ch2 video change to new file cur time:%ld, base time:%ld\n",

tvl.tv_sec, ch2_video_base_time.tv_sec);

ch2_AviMuxGst.record_flag = 0;

sem_post(&ch2_AviMuxGst.frame_put);

sem_wait(&ch2_AviMuxGst.frame_get);

sem_wait(&ch2_AviMuxGst.record_on);

ch2_AviMuxGst.vfile = fopen(filename2,"ab");

}

else

{

record_wait_iframe2 = 1;

}

}

sem_post(&ch2_AviMuxGst.frame_put);

sem_wait(&ch2_AviMuxGst.frame_get);

}

else //if(channel == MAX_CHANNEL-1)

{

count3 = rec_msg->ts_sec - ch3_video_base_time.tv_sec;

if(count3 == RECORD_TIME_SEC || record_wait_iframe3 == 1)

{

if(is_iframe(pbuf[4]))

{

printf("ch3 video use time count3: %d msg sec %d, base:%ld \n",

count3, rec_msg->ts_sec, ch3_video_base_time.tv_sec);

memset(&head.tm, 0, sizeof(struct tm));

gettimeofday(&tvl, NULL);

localtime_r(&tvl.tv_sec, &head.tm);

ch3_video_base_time.tv_sec = rec_msg->ts_sec;

memset(filename3, 0, sizeof(filename3));

memset(filename3_index, 0, sizeof(filename3_index));

sprintf(filename3_index, "%d-%02d-%02d_%02d_%02d_%02d_ch%d_%s_%d",

1900 + head.tm.tm_year, 1 + head.tm.tm_mon, head.tm.tm_mday,

head.tm.tm_hour, head.tm.tm_min, head.tm.tm_sec, 3, startup_ID, ++file_count3);

snprintf(filename3, sizeof(filename3), "%s%s.avi", RECORD_DIR, filename3_index);

add_file(filename3);

record_wait_iframe3 = 0;

//g_signal_emit_by_name (ch3_AviMuxGst.appsrc, "end-of-stream", &ret);

//gst_element_send_event(ch3_AviMuxGst.pipeline, gst_event_new_eos());

printf("ch3 video change to new file cur time:%ld, base time:%ld\n",

tvl.tv_sec, ch3_video_base_time.tv_sec);

ch3_AviMuxGst.record_flag = 0;

sem_post(&ch3_AviMuxGst.frame_put);

sem_wait(&ch3_AviMuxGst.frame_get);

sem_wait(&ch3_AviMuxGst.record_on);

ch3_AviMuxGst.vfile = fopen(filename3,"ab");

}

else

{

record_wait_iframe3 = 1;

}

}

sem_post(&ch3_AviMuxGst.frame_put);

sem_wait(&ch3_AviMuxGst.frame_get);

}

}

}

return 0;

}
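The listing calls `is_iframe(pbuf[4])` without showing the helper. The sketch below is one plausible shape for such a check, not the author's implementation. It assumes each frame starts with a 4-byte delimiter, so that byte 4 is the first NAL header, and that a key frame begins with either an SPS or an IDR slice. The name `nal_starts_keyframe` is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* H.264 NAL unit types, taken from the low 5 bits of the NAL header byte. */
enum {
    NAL_TYPE_IDR_SLICE = 5,  /* coded slice of an IDR picture */
    NAL_TYPE_SPS       = 7   /* sequence parameter set */
};

/* Returns 1 if the NAL header byte suggests the frame starts a key frame.
 * Assumption: the encoder emits SPS + PPS + IDR together, so seeing an SPS
 * first is treated the same as seeing the IDR slice itself. */
static int nal_starts_keyframe(uint8_t nal_header)
{
    uint8_t nal_type = nal_header & 0x1F;
    assert(nal_type < 32);   /* always true after the mask; documents the range */
    return nal_type == NAL_TYPE_IDR_SLICE || nal_type == NAL_TYPE_SPS;
}
```

With a byte-stream frame in `pbuf`, the call would be `nal_starts_keyframe((uint8_t)pbuf[4])`. If the encoder sends the SPS and PPS separately from the IDR slice, the SPS case would need to be dropped.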