## ELK 6.4: Installing an Elasticsearch Cluster, Displaying Logs in Kibana, and Collecting Them with Logstash

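Scenario 1:

Business: Feature ** is down, please have a developer look into it.

Developer A (to ops): Hey, could you check the logs for me?

At this point the ops engineer deftly reaches for grep, awk, and similar commands to pull out what the developer wants. Multi-dimensional or complex queries are still laborious, plain-text search is slow, and developers who are not fluent with Linux commands are left in an awkward spot, unable to pinpoint the problem quickly.

Scenario 2:

Developer A: Hey, pull the logs for me, I'm troubleshooting an issue.

Ops: Sure.

Developer B: Could you also pull the logs under /home/bea/*/logs/*?

Ops: I just pulled logs for Developer A; you can ask him for a copy.

Developer B: The logs he got from you aren't from that directory. Please pull them again, thanks!

Ops: All right.

Developer C: Could you pull the logs of a different application on a different machine for me?

Ops: Got it.

Developer C: Thanks, man.

Ops can pull the logs for us, but what happens to them afterwards is our own business. When a log is small this is easy: open it in UE or another editor, search for keywords, and read the results.

A large log, say over 50 MB, is hard to open in an editor at all. Even when it does open it is slow, and on a sluggish machine inspecting it becomes practically impossible.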

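Current problems with logs

- You need to be very familiar with Linux commands

- Text search is too slow

- No real-time monitoring

- Querying logs across distributed hosts is even less efficient, and so on

The ELK solution

ELK is an acronym for three open-source projects: Elasticsearch, Logstash, and Kibana.

- Elasticsearch (ES) is an open-source distributed search engine that provides three functions: collecting, analyzing, and storing data. Its features include distributed operation, zero configuration, automatic discovery, automatic index sharding, index replication, a RESTful interface, multiple data sources, and automatic search load balancing.

- Logstash is a tool for collecting, parsing, and filtering logs, and it supports a large number of input methods. It typically runs in a client/server arrangement: the client is installed on each host whose logs are to be collected, and the server filters and modifies the logs received from each node before forwarding them to Elasticsearch.

- Kibana is also a free, open-source tool. It provides a friendly web interface for analyzing the logs supplied by Logstash and Elasticsearch, and helps you aggregate, analyze, and search important log data.

For the underlying principles, see the reference links at the end of this post and the official ELK site:

https://www.elastic.co/cn/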

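Installing the Elasticsearch cluster

Prerequisites:

OS: Linux; this walkthrough uses CentOS 7

JDK: JDK 1.8; here the OpenJDK that ships with the system is used, installed in its default path

Machines: two hosts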

192.168.220.71

192.168.220.72

Check the environment

[root@localhost ~]# java -version

openjdk version "1.8.0_65"

OpenJDK Runtime Environment (build 1.8.0_65-b17)

OpenJDK 64-Bit Server VM (build 25.65-b01, mixed mode)

[root@localhost ~]# which java

/usr/bin/java

[root@localhost ~]# ls -lrt /usr/bin/java

lrwxrwxrwx. 1 root root 22 Jan 17 2018 /usr/bin/java -> /etc/alternatives/java

[root@localhost ~]# ls -lrt /etc/alternatives/java

lrwxrwxrwx. 1 root root 70 Jan 17 2018 /etc/alternatives/java -> /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.65-3.b17.el7.x86_64/jre/bin/java

[root@localhost ~]# cd /usr/lib/jvm

[root@localhost jvm]# ls

java-1.7.0-openjdk-1.7.0.91-2.6.2.3.el7.x86_64 jre-1.7.0 jre-1.8.0 jre-openjdk

java-1.8.0-openjdk-1.8.0.65-3.b17.el7.x86_64 jre-1.7.0-openjdk jre-1.8.0-openjdk

jre jre-1.7.0-openjdk-1.7.0.91-2.6.2.3.el7.x86_64 jre-1.8.0-openjdk-1.8.0.65-3.b17.el7.x86_64

[root@localhost jvm]#

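Role assignment

Master node: 192.168.220.71

Slave node: 192.168.220.72

Elasticsearch: install on both the master and the slave node

Kibana: install on the master node

Logstash: install on every machine whose logs are collected, master and slave alike

ELK version information: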

elasticsearch-6.4.0

kibana-6.4.0

logstash-6.4.0

filebeat-6.4.0

Edit the hosts file on each server

On the 71 machine, run

[root@localhost ~]# vim /etc/hosts

Add 192.168.220.71 master-node

On the 72 machine, run

[root@localhost ~]# vim /etc/hosts

Add 192.168.220.72 data-node1
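The two nodes usually need to resolve each other's names, so one common approach is to put both entries into /etc/hosts on each machine. The sketch below assumes that; `add_host_entry` is a hypothetical helper name, and it writes to whichever file you pass it so you can try it on a scratch copy before touching the real file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # -F fixed string, -w whole word, -q quiet; a missing file counts as "not mapped"
    if ! grep -qwF -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Try it on a scratch copy rather than the real /etc/hosts.
hosts_copy="$(mktemp)"
add_host_entry "$hosts_copy" 192.168.220.71 master-node
add_host_entry "$hosts_copy" 192.168.220.72 data-node1
add_host_entry "$hosts_copy" 192.168.220.71 master-node   # repeated call is a no-op
cat "$hosts_copy"
```

Once the result looks right, the same calls can be pointed at /etc/hosts as root on both machines.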

CentOS 7 firewall: either disable it or open the ports you need

Stop the firewall

[root@localhost ~]# systemctl stop firewalld.service

Disable the firewall at boot

[root@localhost ~]# systemctl disable firewalld.service

Reboot the machine

[root@localhost ~]# reboot
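If you would rather keep firewalld running, the alternative is to open only the ports the stack needs. The sketch below is a dry run: it only prints `firewall-cmd` commands so they can be reviewed first. The port list is an assumption drawn from this walkthrough (9200 for ES HTTP, 9300 for ES node-to-node transport, 5601 for Kibana, 10514 for the Logstash syslog input), and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Dry run: print (do not execute) the firewalld commands that would open the
# given TCP ports. The port list passed below is an assumption; adjust it.
print_firewall_cmds() {
    local port
    for port in "$@"; do
        printf 'firewall-cmd --permanent --add-port=%s/tcp\n' "$port"
    done
    # Rules added with --permanent only take effect after a reload.
    printf 'firewall-cmd --reload\n'
}

print_firewall_cmds 9200 9300 5601 10514
```

Each host only needs the ports of the services it actually runs. The syslog input also listens on UDP, so a `/udp` rule for 10514 may be needed if senders use UDP.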

Hostnames after the change

Master node

[root@master-node ~]# hostname

master-node

Slave node

[root@data-node1 ~]# hostname

data-node1

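Install ES

If the download fails from the server, download the package yourself and upload it to /root/product.

I downloaded the packages manually and placed them in that directory.

Elasticsearch download URL: https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.4.0.rpm

Kibana download URL: https://artifacts.elastic.co/downloads/kibana/kibana-6.4.0-x86_64.rpm

Logstash download URL: https://artifacts.elastic.co/downloads/logstash/logstash-6.4.0.rpm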

[root@master-node product]# mkdir -p /root/product

[root@master-node product]# cd /root/product

[root@master-node product]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.4.0.rpm

[root@master-node product]# rpm -ivh elasticsearch-6.4.0.rpm

warning: elasticsearch-6.4.0.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Creating elasticsearch group... OK

Creating elasticsearch user... OK

Updating / installing...

1:elasticsearch-0:6.4.0-1 ################################# [100%]

### NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using systemd

sudo systemctl daemon-reload

sudo systemctl enable elasticsearch.service

### You can start elasticsearch service by executing

sudo systemctl start elasticsearch.service

Created elasticsearch keystore in /etc/elasticsearch

[root@master-node product]#
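When a package is downloaded by hand and copied over, it can be worth checking its integrity before installing. Elastic publishes a `.sha512` file next to each artifact; the sketch below compares a file against an expected digest. The function name `verify_sha512` is hypothetical, and the demo runs on a scratch file because the real digest is not reproduced here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if FILE's SHA-512 digest equals EXPECTED.
verify_sha512() {
    local file="$1" expected="$2" actual
    # sha512sum prints "<digest>  <filename>"; keep only the digest.
    actual="$(sha512sum "$file" | awk '{print $1}')"
    [ "$actual" = "$expected" ]
}

# Demo on a scratch file (a stand-in for elasticsearch-6.4.0.rpm).
pkg="$(mktemp)"
printf 'demo package contents\n' > "$pkg"
good="$(sha512sum "$pkg" | awk '{print $1}')"

if verify_sha512 "$pkg" "$good"; then
    echo "checksum OK"
else
    echo "checksum MISMATCH" >&2
fi
```

The NOKEY warning in the rpm output is a separate matter: it means Elastic's signing key has not been imported on this host. The official install guide imports it with `rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch`.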

[root@master-node product]# ll /etc/elasticsearch

total 28

-rw-rw----. 1 root elasticsearch 207 Sep 12 19:43 elasticsearch.keystore

-rw-rw----. 1 root elasticsearch 2869 Aug 18 07:23 elasticsearch.yml

-rw-rw----. 1 root elasticsearch 3009 Aug 18 07:23 jvm.options

-rw-rw----. 1 root elasticsearch 6380 Aug 18 07:23 log4j2.properties

-rw-rw----. 1 root elasticsearch 473 Aug 18 07:23 role_mapping.yml

-rw-rw----. 1 root elasticsearch 197 Aug 18 07:23 roles.yml

-rw-rw----. 1 root elasticsearch 0 Aug 18 07:23 users

-rw-rw----. 1 root elasticsearch 0 Aug 18 07:23 users_roles

[root@master-node product]#

jvm.options holds the JVM parameters, for example:

-Xms1g

-Xmx1g

These two lines set the initial and maximum heap size that ES runs with.
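Elastic's general guidance is to set `-Xms` and `-Xmx` to the same value and to give the heap no more than about half of the machine's RAM. The sketch below turns that rule of thumb into numbers. The function names and the 31 GB ceiling (a conservative cap meant to stay below the compressed-pointer threshold) are assumptions to adjust for your hardware.

```shell
#!/usr/bin/env bash
# Hypothetical helper: suggest a heap size in MB for a machine with TOTAL_MB of RAM.
# Rule of thumb assumed here: half of RAM, capped at 31 GB.
suggest_heap_mb() {
    local total_mb="$1"
    local half=$(( total_mb / 2 ))
    local cap=$(( 31 * 1024 ))
    if [ "$half" -gt "$cap" ]; then
        half="$cap"
    fi
    echo "$half"
}

# Print the two jvm.options lines for a given amount of RAM (in MB).
heap_options() {
    local heap
    heap="$(suggest_heap_mb "$1")"
    printf -- '-Xms%sm\n-Xmx%sm\n' "$heap" "$heap"
}

heap_options 2048   # a machine with 2 GB of RAM
```

On a live host the total could be read with something like `free -m | awk '/^Mem:/ {print $2}'` and passed to `heap_options`.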

For installation and configuration you can also refer to the official documentation

https://www.elastic.co/guide/en/elasticsearch/reference/6.0/rpm.html

Configure ES

[root@master-node elasticsearch]# more /etc/elasticsearch/elasticsearch.yml |grep -v "^#"

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

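[root@master-node elasticsearch]# vim /etc/elasticsearch/elasticsearch.yml

vim tip: press uppercase G (Shift+g) to jump to the last line

Add

cluster.name: master-node # cluster name; must be identical on every node in the cluster

node.name: master # name of this node

node.master: true # this node is eligible to act as the master

node.data: true # this node also stores data

network.host: 0.0.0.0 # listen on all IPs; in a real environment, bind to a specific, trusted IP

http.port: 9200 # port of the ES HTTP service

discovery.zen.ping.unicast.hosts: ["192.168.220.71", "192.168.220.72"] # hosts to contact for node discovery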

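On the slave node, add

cluster.name: master-node # cluster name; must be identical on every node in the cluster

node.name: data-node1 # name of this node; must differ from the master's

node.master: false # this node is not master-eligible

node.data: true # this node stores data

network.host: 0.0.0.0 # listen on all IPs; in a real environment, bind to a specific, trusted IP

http.port: 9200 # port of the ES HTTP service

discovery.zen.ping.unicast.hosts: ["192.168.220.71", "192.168.220.72"] # hosts to contact for node discovery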
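Because `cluster.name` has to match on every node while `node.name` has to differ, a quick comparison of the two files can catch copy-and-paste slips. The sketch below only understands simple top-level `key: value` lines; it is not a YAML parser, and `yaml_value` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of a top-level "key: value" line,
# dropping any trailing "# comment". Not a general YAML parser.
yaml_value() {
    local file="$1" key="$2"
    sed -n "s/^${key}:[[:space:]]*//p" "$file" \
        | sed 's/[[:space:]]*#.*$//' \
        | head -n 1
}

# Scratch files standing in for each node's /etc/elasticsearch/elasticsearch.yml.
master_yml="$(mktemp)"
node_yml="$(mktemp)"
printf 'cluster.name: master-node # shared\nnode.name: master\n' > "$master_yml"
printf 'cluster.name: master-node\nnode.name: data-node1\n' > "$node_yml"

if [ "$(yaml_value "$master_yml" cluster.name)" = "$(yaml_value "$node_yml" cluster.name)" ] \
   && [ "$(yaml_value "$master_yml" node.name)" != "$(yaml_value "$node_yml" node.name)" ]; then
    echo "cluster.name matches and node.name differs"
else
    echo "check cluster.name / node.name" >&2
fi
```

In practice you would copy the slave's file to the master (or the other way round) and point the two variables at the real files.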

Start the service: bring up the master node first, then the slave node

systemctl start elasticsearch.service

View the logs

[root@master-node ~]# ls /var/log/elasticsearch/

[root@master-node ~]# tail -50f /var/log/messages

Check that the node started

[root@master-node elasticsearch]# curl '192.168.220.71:9200/_cluster/health?pretty'

{

"cluster_name" : "master-node",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 1,

"number_of_data_nodes" : 1,

"active_primary_shards" : 0,

"active_shards" : 0,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

[root@master-node elasticsearch]#
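For scripting, the `status` field is usually the part of the health response you care about. The sketch below pulls it out of the JSON with `sed`, which is good enough for this fixed format; a real JSON tool would be more robust. The function name is hypothetical and the sample is trimmed from the response above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a _cluster/health response on stdin and print its status.
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Sample trimmed from the response shown above.
sample='{
  "cluster_name" : "master-node",
  "status" : "green",
  "number_of_nodes" : 1
}'

status="$(printf '%s\n' "$sample" | health_status)"
case "$status" in
    green)  echo "cluster healthy" ;;
    yellow) echo "cluster up, but some replica shards are unassigned" ;;
    red)    echo "some primary shards are unassigned" ;;
    *)      echo "could not read status" ;;
esac
```

Against the live cluster the same function can be fed from `curl -s '192.168.220.71:9200/_cluster/health?pretty' | health_status`.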

Check the cluster state

[root@master-node elasticsearch]# curl '192.168.220.71:9200/_cluster/state?pretty'

{

"cluster_name" : "master-node",

"compressed_size_in_bytes" : 9574,

"cluster_uuid" : "OYnLCw6DSdeWet020B-zzA",

"version" : 16,

"state_uuid" : "1GtRg_ZhT2qOPPJeyzPY_w",

"master_node" : "45ktex-MTPKmE9Jpcd2HBQ",

"blocks" : { },

"nodes" : {

"45ktex-MTPKmE9Jpcd2HBQ" : {

"name" : "master",

"ephemeral_id" : "bHU_jIfUQ1KQvomp2Pyx_g",

"transport_address" : "192.168.220.71:9300",

"attributes" : {

"ml.machine_memory" : "1888342016",

"xpack.installed" : "true",

"ml.max_open_jobs" : "20",

"ml.enabled" : "true"

}

},

"624Y_ao2Svq0wfbdmaqHUg" : {

"name" : "data-node1",

"ephemeral_id" : "Do0nAllcSQmmtpNeocV3wA",

"transport_address" : "192.168.220.72:9300",

"attributes" : {

"ml.machine_memory" : "1913507840",

"ml.max_open_jobs" : "20",

"xpack.installed" : "true",

"ml.enabled" : "true"

}

}

},

......

"snapshot_deletions" : {

"snapshot_deletions" : [ ]

}

}

Seeing both nodes listed like this means the ES cluster has been set up successfully.

Install Kibana

Install Kibana on the ES master node

[root@master-node ~]# cd /root/product

[root@master-node product]# wget https://artifacts.elastic.co/downloads/kibana/kibana-6.4.0-x86_64.rpm

[root@master-node product]# rpm -ivh kibana-6.4.0-x86_64.rpm

warning: kibana-6.4.0-x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:kibana-6.4.0-1 ################################# [100%]

[root@master-node product]#

[root@master-node elasticsearch]#

Edit the Kibana configuration

[root@master-node product]# more /etc/kibana/kibana.yml |grep -v "^#"

[root@master-node product]# vim /etc/kibana/kibana.yml

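Add the following settings

server.port: 5601 # port Kibana listens on

server.host: 192.168.220.71 # IP Kibana listens on

elasticsearch.url: "http://192.168.220.71:9200" # address of the ES server; for a cluster, use the master node's IP

logging.dest: /var/log/kibana.log # path of Kibana's log file; otherwise its logs end up in messages by default

Create the log file and set its permissions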

[root@master-node product]# touch /var/log/kibana.log

[root@master-node log]# chmod 777 /var/log/kibana.log

Start Kibana and check the process

[root@master-node log]# systemctl start kibana

[root@master-node log]# ps aux |grep kibana

kibana 5307 37.8 9.1 1122624 168436 ? Rsl 21:23 0:11 /usr/share/kibana/bin/../node/bin/node --no-warnings /usr/share/kibana/bin/../src/cli -c /etc/kibana/kibana.yml

root 5362 0.0 0.0 112644 948 pts/0 R+ 21:24 0:00 grep --color=auto kibana

[root@master-node log]#

Check the listening port

[root@master-node log]# netstat -lntp |grep 5601

tcp 0 0 192.168.220.71:5601 0.0.0.0:* LISTEN 5307/node

[root@master-node log]#

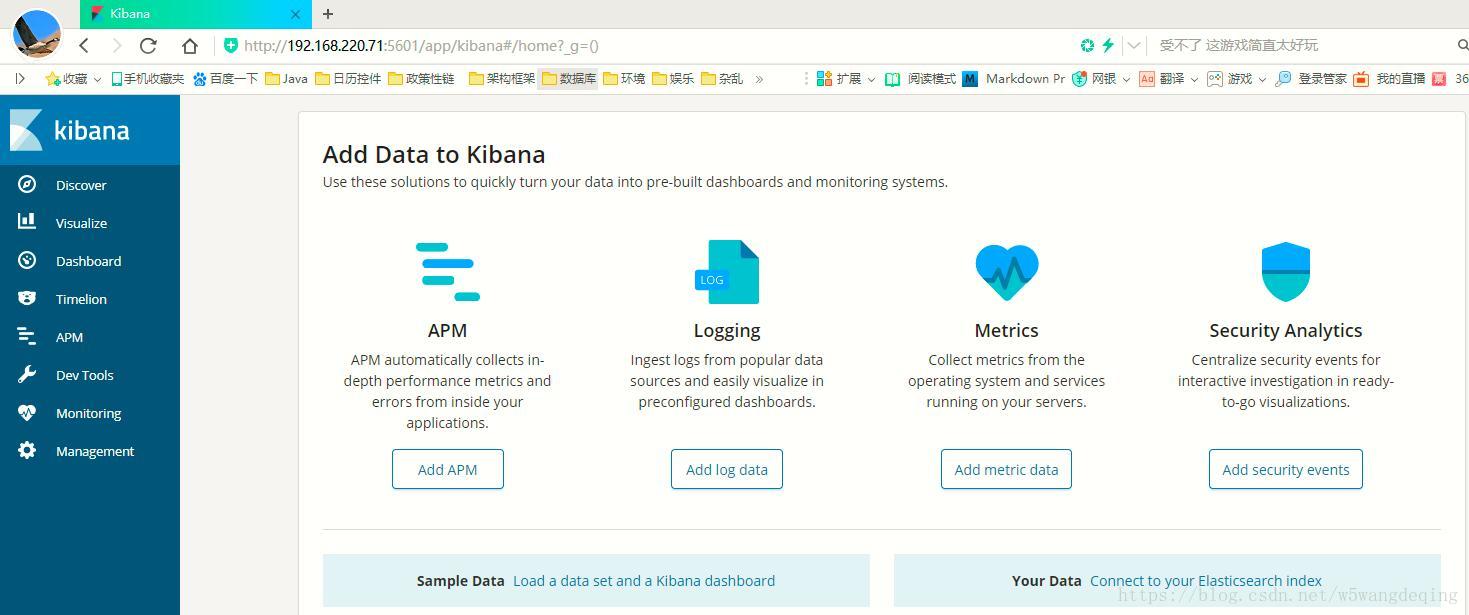

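Open http://192.168.220.71:5601 in a browser

Install Logstash

Logstash is the log collection tool; it is installed on the machines whose logs are to be collected.

Here Logstash is installed on 192.168.220.72. Note that Logstash does not currently support JDK 9.

For the other installation methods, see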

https://www.elastic.co/guide/en/logstash/current/installing-logstash.html

[root@data-node1 ~]# cd /root/product

[root@data-node1 product]# wget https://artifacts.elastic.co/downloads/logstash/logstash-6.4.0.rpm

[root@data-node1 product]# rpm -ivh logstash-6.4.0.rpm

warning: logstash-6.4.0.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:logstash-1:6.4.0-1 ################################# [100%]

Using provided startup.options file: /etc/logstash/startup.options

Successfully created system startup script for Logstash

[root@data-node1 product]#

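After installation, first configure Logstash to collect syslog messages:

[root@data-node1 ~]# vim /etc/logstash/conf.d/syslog.conf

input { # define the log source

syslog {

type => "system-syslog" # define the event type

port => 10514 # define the listening port

}

}

output { # define the log output

stdout {

codec => rubydebug # print events to the current terminal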

}

}

"/etc/logstash/conf.d/syslog.conf" [New] 12L, 248C written

[root@data-node1 ~]#

Check the configuration file for errors:

[root@data-node1 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-09-13T10:14:12,020][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.queue", :path=>"/var/lib/logstash/queue"}

[2018-09-13T10:14:12,081][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.dead_letter_queue", :path=>"/var/lib/logstash/dead_letter_queue"}

[2018-09-13T10:14:13,808][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK

[2018-09-13T10:14:21,559][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

[root@data-node1 bin]#

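Seeing "Configuration OK" means the configuration is valid.

--path.settings specifies the directory that holds Logstash's settings files

-f specifies the path of the configuration file to check

--config.test_and_exit exits once the check is done; without it, Logstash would go on to start

Point rsyslog at the host where Logstash is listening (192.168.220.72 in this walkthrough) and at the port configured above. The @@ prefix forwards over TCP; a single @ would use UDP:

[root@data-node1 bin]# vim /etc/rsyslog.conf

*.* @@192.168.220.72:10514

Restart rsyslog so the change takes effect: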

[root@data-node1 bin]# systemctl restart rsyslog

[root@data-node1 bin]#

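Start Logstash with the configuration file from above:

Events will be printed to this terminal

To generate some test traffic, open in a browser

http://192.168.220.72:10514/

or open a new terminal and run

curl http://192.168.220.72:10514/

If events are printed on the screen, collection is working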

[root@data-node1 ~]# cd /usr/share/logstash/bin

[root@data-node1 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-09-13T11:01:58,406][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2018-09-13T11:02:00,454][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.4.0"}

[2018-09-13T11:02:08,785][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2018-09-13T11:02:09,979][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0x1d9e66b1 run>"}

[2018-09-13T11:02:10,085][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2018-09-13T11:02:10,131][INFO ][logstash.inputs.syslog ] Starting syslog udp listener {:address=>"0.0.0.0:10514"}

[2018-09-13T11:02:10,180][INFO ][logstash.inputs.syslog ] Starting syslog tcp listener {:address=>"0.0.0.0:10514"}

[2018-09-13T11:02:11,596][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

[2018-09-13T11:03:00,340][INFO ][logstash.inputs.syslog ] new connection {:client=>"192.168.220.72:34664"}

{

"type" => "system-syslog",

"facility_label" => "kernel",

"facility" => 0,

"message" => "GET / HTTP/1.1\r\n",

"severity_label" => "Emergency",

"@version" => "1",

"host" => "192.168.220.72",

"severity" => 0,

"tags" => [

[0] "_grokparsefailure_sysloginput"

],

"@timestamp" => 2018-09-13T03:03:00.405Z,

"priority" => 0

}

{

"type" => "system-syslog",

"facility_label" => "kernel",

"facility" => 0,

"message" => "User-Agent: curl/7.29.0\r\n",

"severity_label" => "Emergency",

"@version" => "1",

"host" => "192.168.220.72",

"severity" => 0,

"tags" => [

[0] "_grokparsefailure_sysloginput"

],

"@timestamp" => 2018-09-13T03:03:00.568Z,

"priority" => 0

}
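The `priority`, `facility`, and `severity` fields are related: by the syslog convention (RFC 3164), priority = facility × 8 + severity. The events above came from `curl`, which sends HTTP request lines rather than syslog messages, so they carry the `_grokparsefailure_sysloginput` tag and their priority fields are all 0. The sketch below decodes a priority value; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: split a syslog priority (PRI) into facility and severity.
# RFC 3164: PRI = facility * 8 + severity, with severity in 0..7.
decode_pri() {
    local pri="$1"
    echo "facility=$(( pri / 8 )) severity=$(( pri % 8 ))"
}

decode_pri 0    # kernel / Emergency, as in the events above
decode_pri 13   # user-level / Notice
decode_pri 86   # authpriv / Informational
```

A real syslog message forwarded by rsyslog would normally arrive without the failure tag and with a meaningful priority.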

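Configure Logstash to send events to Elasticsearch

[root@data-node1 ~]# vim /etc/logstash/conf.d/syslog.conf

input { # define the log source

syslog {

type => "system-syslog" # define the event type

port => 10514 # define the listening port

}

}

output { # define the log output

elasticsearch {

hosts => ["192.168.220.71:9200"] # address of the ES server

index => "system-syslog-%{+YYYY.MM}" # name of the target index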

}

}

~

"/etc/logstash/conf.d/syslog.conf" 13L, 305C written

[root@data-node1 ~]#
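The `%{+YYYY.MM}` part of the index name is filled in from each event's `@timestamp` (which is in UTC), so this configuration produces one index per month. The sketch below mimics that naming with GNU `date` so you can predict which index an event lands in; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: the index name Logstash would use for an event at
# TIMESTAMP, given the pattern system-syslog-%{+YYYY.MM}. Requires GNU date (-d).
syslog_index_for() {
    local timestamp="$1"
    date -u -d "$timestamp" '+system-syslog-%Y.%m'
}

syslog_index_for '2018-09-13 03:03:00 UTC'
```

Switching the pattern to `%{+YYYY.MM.dd}` would give one index per day instead, at the cost of many more indices.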

Configure the listening IP

[root@data-node1 ~]# vim /etc/logstash/logstash.yml

http.host: "192.168.220.72"

Check the configuration file for errors

[root@data-node1 ~]# cd /usr/share/logstash/bin

[root@data-node1 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-09-13T11:11:11,314][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK

[2018-09-13T11:11:22,311][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

[root@data-node1 bin]#

Give the logstash user ownership of the log file

[root@data-node1 ~]# chown logstash /var/log/logstash/logstash-plain.log

[root@data-node1 ~]# ll !$

ll /var/log/logstash/logstash-plain.log

-rw-r--r--. 1 logstash root 4688 Sep 13 11:11 /var/log/logstash/logstash-plain.log

Give the logstash user ownership of the data directory

[root@data-node1 ~]# ll /var/lib/logstash/

total 4

drwxr-xr-x. 2 root root 6 Sep 13 10:14 dead_letter_queue

drwxr-xr-x. 2 root root 6 Sep 13 10:14 queue

-rw-r--r--. 1 root root 36 Sep 13 10:25 uuid

[root@data-node1 ~]# chown -R logstash /var/lib/logstash/

[root@data-node1 ~]# ll /var/lib/logstash/

total 4

drwxr-xr-x. 2 logstash root 6 Sep 13 10:14 dead_letter_queue

drwxr-xr-x. 2 logstash root 6 Sep 13 10:14 queue

-rw-r--r--. 1 logstash root 36 Sep 13 10:25 uuid

Restart Logstash

[root@data-node1 ~]# systemctl restart logstash

[root@data-node1 ~]#

Check the listening ports

[root@data-node1 ~]# netstat -lntp |grep 10514

tcp6 0 0 :::10514 :::* LISTEN 10922/java

[root@data-node1 ~]# netstat -lntp |grep 9600

tcp6 0 0 192.168.220.72:9600 :::* LISTEN 10922/java

[root@data-node1 ~]#
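A plain `grep 9600` matches the number anywhere on the line, including inside a PID. A slightly stricter variant is to look only at the local-address column. The sketch below does that for `netstat -lntp` style output read from stdin; the function name is hypothetical and the sample lines are copied from the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if stdin (netstat -lnt[p] output) contains a
# LISTEN socket whose local address (4th column) ends in :PORT.
is_listening() {
    local port="$1"
    awk -v port="$port" '
        $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit (found ? 0 : 1) }
    '
}

sample='tcp6 0 0 :::10514 :::* LISTEN 10922/java
tcp6 0 0 192.168.220.72:9600 :::* LISTEN 10922/java'

for p in 10514 9600 9200; do
    if printf '%s\n' "$sample" | is_listening "$p"; then
        echo "port $p: listening"
    else
        echo "port $p: not listening"
    fi
done
```

On the host itself, pipe the real output in: `netstat -lntp | is_listening 10514`.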

Generate some test traffic again by opening this address in a browser

http://192.168.220.72:10514/

or by running curl http://192.168.220.72:10514/

List the ES indices

[root@data-node1 ~]# curl '192.168.220.71:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open system-syslog-2018.09 K1M6FtzXS7CLjfmJ4rfeog 5 1 5 0 59kb 33.4kb

green open .kibana k94rlEYtQi-AGx42BoTFiQ 1 1 1 0 8kb 4kb

[root@data-node1 ~]#

[root@data-node1 ~]# curl -XGET '192.168.220.71:9200/system-syslog-2018.09?pretty'

{

"system-syslog-2018.09" : {

"aliases" : { },

"mappings" : {

"doc" : {

"properties" : {

"@timestamp" : {

"type" : "date"

},

"@version" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

……

"settings" : {

"index" : {

"creation_date" : "1536809299144",

"number_of_shards" : "5",

"number_of_replicas" : "1",

"uuid" : "K1M6FtzXS7CLjfmJ4rfeog",

"version" : {

"created" : "6040099"

},

"provided_name" : "system-syslog-2018.09"

}

}

}

}

[root@data-node1 ~]#

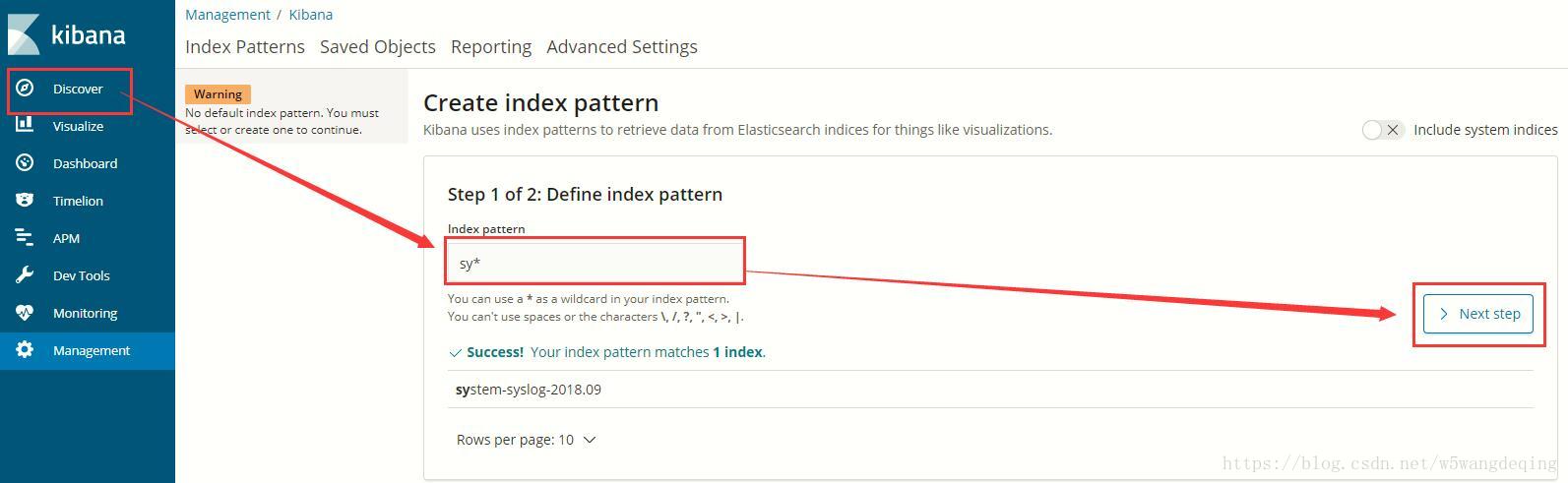

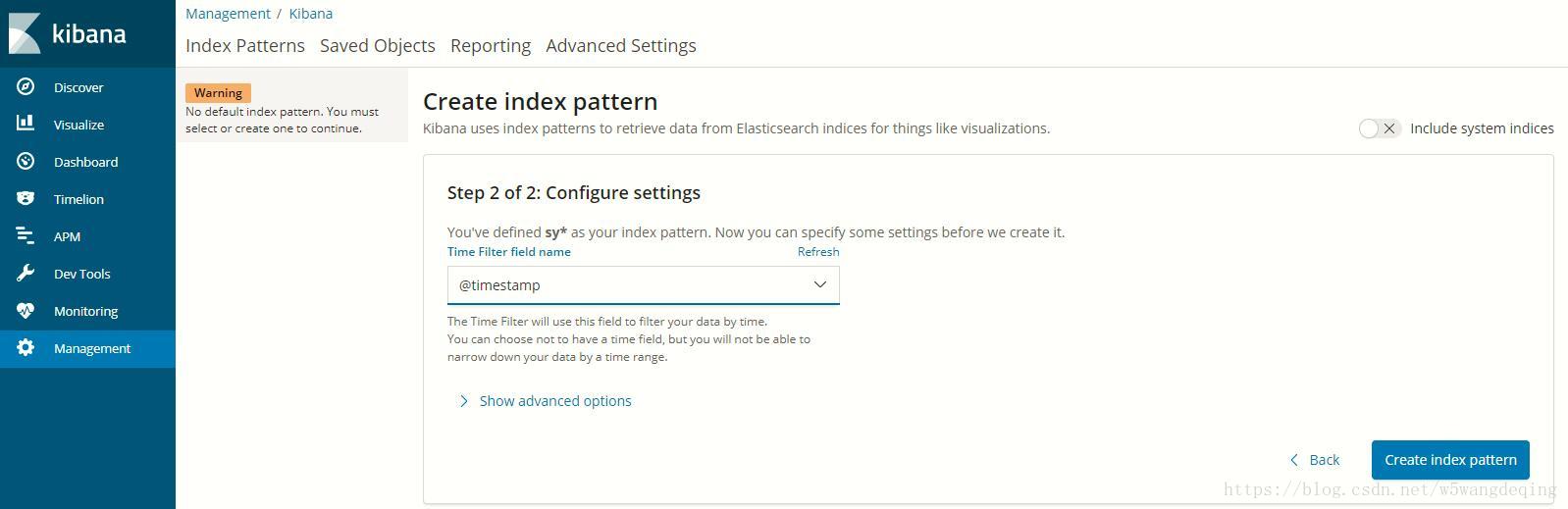

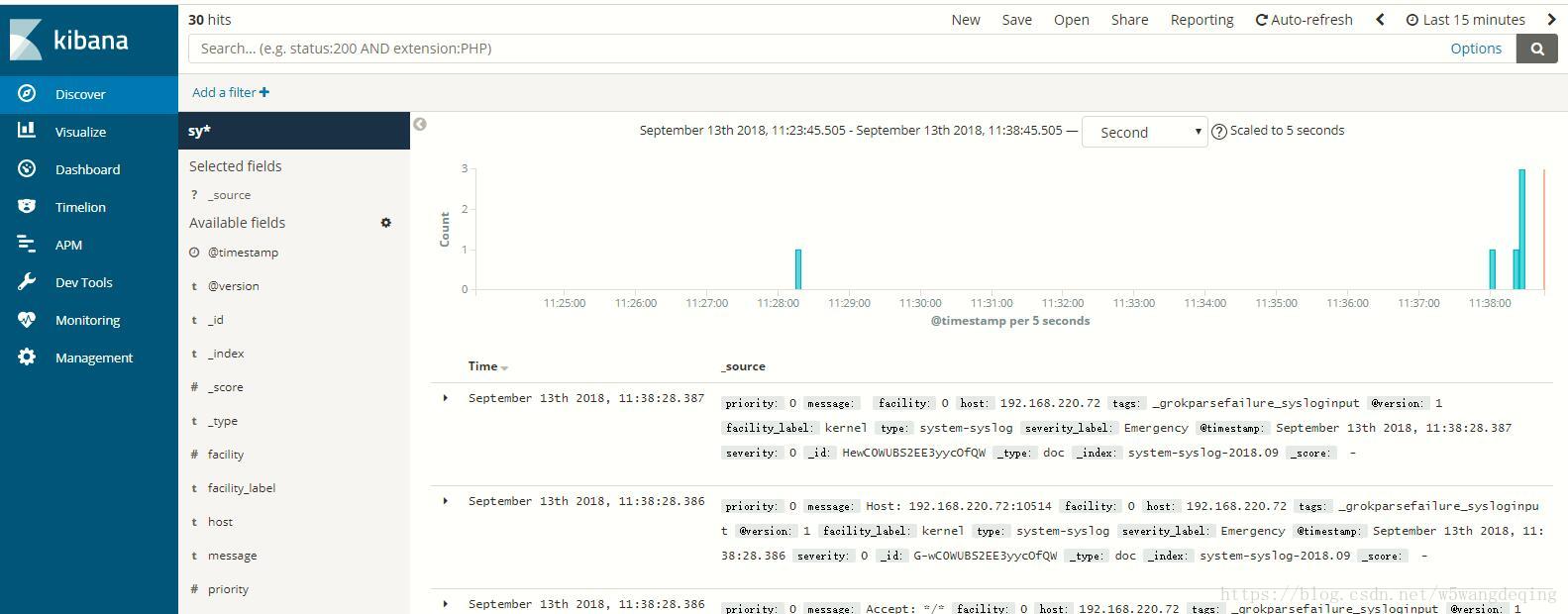

Configure the index pattern in Kibana

Install Filebeat

[root@data-node1 ~]# cd /root/product/

[root@data-node1 product]# rpm -ivh filebeat-6.4.0-x86_64.rpm

warning: filebeat-6.4.0-x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:filebeat-6.4.0-1 ################################# [100%]

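Edit the configuration

[root@data-node1 product]# vim /etc/filebeat/filebeat.yml

filebeat.prospectors: # named filebeat.inputs since 6.3; the older key still works in 6.x but is deprecated

- type: log

#enabled: false # comment this line out (or set it to true)

paths:

- /var/log/messages # path of the log file to collect

#output.elasticsearch: # comment these lines out for now

# Array of hosts to connect to.

#hosts: ["localhost:9200"]

output.console: # print log events to the terminal

enabled: true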

"/etc/filebeat/filebeat.yml" 204L, 7576C written

[root@data-node1 product]#

Start it temporarily in the foreground

[root@data-node1 product]# /usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml

Turn off console output and send the logs to ES instead

[root@data-node1 ~]# vim /etc/filebeat/filebeat.yml

#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:

# Array of hosts to connect to.

hosts: ["192.168.220.71:9200"]

# Optional protocol and basic auth credentials.

#protocol: "https"

#username: "elastic"

#password: "changeme"

#output.console: # print log events to the terminal

#enabled: true

Start the service

[root@data-node1 ~]# systemctl start filebeat

Check the process

[root@data-node1 ~]# ps axu |grep filebeat

root 5421 0.0 0.8 376148 15548 ? Ssl 15:13 0:04 /usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat

root 8168 0.0 0.0 112644 952 pts/0 R+ 17:07 0:00 grep --color=auto filebeat

Check whether an index whose name starts with filebeat exists

[root@data-node1 ~]# curl '192.168.220.71:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open filebeat-6.4.0-2018.09.19 kwrZC65IToG9Q2_y0Evlvg 3 1 3349 0 1.3mb 643.9kb
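To check for an index by prefix in a script, you can filter the `_cat/indices?v` output on its third column, which holds the index name. The sketch below reads that output from stdin; the function name is hypothetical and the sample is assembled from the listings shown in this post.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print index names from `_cat/indices?v` output (stdin)
# that start with PREFIX. The index name is the third column; row 1 is the header.
indices_with_prefix() {
    local prefix="$1"
    awk -v prefix="$prefix" 'NR > 1 && index($3, prefix) == 1 { print $3 }'
}

sample='health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
green open filebeat-6.4.0-2018.09.19 kwrZC65IToG9Q2_y0Evlvg 3 1 3349 0 1.3mb 643.9kb
green open system-syslog-2018.09 K1M6FtzXS7CLjfmJ4rfeog 5 1 5 0 59kb 33.4kb'

printf '%s\n' "$sample" | indices_with_prefix filebeat-
```

Against the live cluster: `curl -s '192.168.220.71:9200/_cat/indices?v' | indices_with_prefix filebeat-`. An empty result means Filebeat has not created its index yet.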

References

http://www.cnblogs.com/aresxin/p/8035137.html

http://blog.51cto.com/zero01/2079879

http://blog.51cto.com/zero01/2082794