Introduction to HBase

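HBase (Hadoop Database) is a highly reliable, high-performance, column-oriented, scalable distributed storage system. With HBase, a large-scale structured storage cluster can be built on inexpensive PC servers.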

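HBase is an open-source implementation of Google Bigtable. Just as Bigtable uses GFS as its file storage system, HBase uses Hadoop HDFS. Just as Google runs MapReduce to process the massive data sets in Bigtable, HBase uses Hadoop MapReduce to process the massive data sets in HBase.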

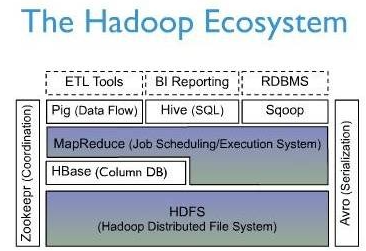

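The figure above shows the layers of the Hadoop ecosystem. HBase sits in the structured storage layer: Hadoop HDFS gives HBase highly reliable underlying storage, Hadoop MapReduce gives it high-performance computation, and ZooKeeper gives it a stable service and a failover mechanism.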

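In addition, Pig and Hive provide high-level language support for HBase, which makes statistical processing of HBase data very simple. Sqoop provides convenient RDBMS data import, which makes it easy to migrate data from a traditional database into HBase.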

Upload the downloaded package to /home/hadoop/hbase and extract it. The directory then looks like this:

[hadoop@master hbase]$ ll

total 788

drwxr-xr-x. 4 hadoop hadoop 4096 Dec 5 10:53 bin

-rw-r--r--. 1 hadoop hadoop 228302 Dec 5 10:58 CHANGES.txt

drwxr-xr-x. 2 hadoop hadoop 4096 Dec 5 10:54 conf

drwxr-xr-x. 12 hadoop hadoop 4096 Dec 5 11:53 docs

drwxr-xr-x. 7 hadoop hadoop 4096 Dec 5 11:43 hbase-webapps

-rw-r--r--. 1 hadoop hadoop 261 Dec 5 11:56 LEGAL

drwxrwxr-x. 3 hadoop hadoop 4096 Jan 8 11:10 lib

-rw-r--r--. 1 hadoop hadoop 143082 Dec 5 11:56 LICENSE.txt

-rw-r--r--. 1 hadoop hadoop 404470 Dec 5 11:56 NOTICE.txt

-rw-r--r--. 1 hadoop hadoop 1477 Dec 5 10:53 README.txt
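The extraction command itself is not shown above. One way it might look is sketched here, assuming the download is a gzip-compressed tarball with a single top-level directory inside. The helper name `extract_hbase` and the tarball file name in the usage comment are assumptions, not something taken from the original setup.

```shell
# Hypothetical helper: extract an HBase tarball into a target directory,
# dropping the single top-level directory stored inside the archive.
extract_hbase() {
  local tarball="$1" target="$2"
  mkdir -p "$target" || return 1
  tar -xzf "$tarball" -C "$target" --strip-components=1
}

# Usage (the tarball name is an assumption):
# extract_hbase hbase-1.4.9-bin.tar.gz /home/hadoop/hbase
```

With `--strip-components=1` the `bin`, `conf`, and `lib` directories land directly under /home/hadoop/hbase, which matches the listing above.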

Set the environment variables

[root@master master]# vi /etc/profile

export HBASE_HOME=/home/hadoop/hbase

export PATH=$PATH:$HBASE_HOME/bin

[root@master master]# source /etc/profile
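Editing /etc/profile by hand works fine. If the same change has to be repeated on several nodes, it could also be scripted. The sketch below appends the two export lines only when they are not already present; the function name `add_hbase_env` is hypothetical, and writing to /etc/profile requires root.

```shell
# Hypothetical helper: append the HBase variables to a profile file,
# but only if HBASE_HOME is not exported there yet (safe to run twice).
add_hbase_env() {
  local profile="$1" home="$2"
  grep -q '^export HBASE_HOME=' "$profile" 2>/dev/null && return 0
  {
    echo "export HBASE_HOME=$home"
    # Single quotes keep $PATH and $HBASE_HOME unexpanded in the file
    echo 'export PATH=$PATH:$HBASE_HOME/bin'
  } >> "$profile"
}

# Usage (as root):
# add_hbase_env /etc/profile /home/hadoop/hbase
```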

[hadoop@master ~]$ hbase version

HBase 1.4.9

Source code repository git://apurtell-ltm4.internal.salesforce.com/Users/apurtell/src/hbase revision=d625b212e46d01cb17db9ac2e9e927fdb201afa1

Compiled by apurtell on Wed Dec 5 11:54:10 PST 2018

From source with checksum a7716fc1849b07ea6dd830a08291e754

Edit hbase-env.sh

export JAVA_HOME=/usr/local/jdk1.8 # Java environment

export HBASE_CLASSPATH=/home/hadoop/hbase/conf # locate the Hadoop cluster through the Hadoop configuration files

export HBASE_MANAGES_ZK=true # let the ZooKeeper bundled with HBase manage the cluster

Edit hbase-site.xml

<configuration>

<property>

<name>hbase.rootdir</name> <!-- directory where HBase stores its data -->

<value>hdfs://192.168.1.30:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name> <!-- enable distributed mode -->

<value>true</value>

</property>

<property>

<name>hbase.master</name> <!-- master node of the HBase cluster -->

<value>192.168.1.30:60000</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master,saver1,saver2</value> <!-- host names of the ZooKeeper ensemble; ZooKeeper decides by majority vote, so use an odd number of nodes -->

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name> <!-- data directory of the ZooKeeper ensemble -->

<value>/home/hadoop/hbase/temp/zookeeper</value>

</property>

</configuration>
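A typo in hbase-site.xml is easy to miss, so it can help to read a value back before starting the cluster. The sketch below is a rough check, not a real XML parser: it assumes each `<name>` and `<value>` sits on its own line, as in the file above. The helper name `hbase_prop` is hypothetical.

```shell
# Hypothetical helper: print the <value> that follows a given <name>.
# Plain line matching; it relies on <name> and <value> being on separate lines.
hbase_prop() {
  local file="$1" name="$2"
  awk -v n="$name" '
    index($0, "<name>" n "</name>") { found = 1; next }
    found && /<value>/ {
      sub(/.*<value>/, "")      # drop everything up to the opening tag
      sub(/<\/value>.*/, "")    # drop the closing tag and anything after it
      print
      exit
    }
  ' "$file"
}

# Usage:
# hbase_prop "$HBASE_HOME/conf/hbase-site.xml" hbase.zookeeper.quorum
```

The quorum printed this way should list the same host names that resolve on every node.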

Edit regionservers

[hadoop@master conf]$ vi regionservers

saver1

saver2

Copy to the other nodes:

scp -r hbase [email protected]:/home/hadoop

scp -r hbase [email protected]:/home/hadoop
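With more than a couple of region servers, the copy step could be driven by the regionservers file instead of typing each command. The sketch below only prints the scp commands (a dry run). It assumes the host names in regionservers resolve from the master and that the `hadoop` user exists on every node; the function name `print_copy_commands` is hypothetical.

```shell
# Hypothetical helper: print one scp command per host in a regionservers file.
# Nothing is copied here; pipe the output to sh to actually run the commands.
print_copy_commands() {
  local hosts_file="$1" src="$2" user="$3" dest="$4"
  while IFS= read -r host || [ -n "$host" ]; do
    # Skip blank lines
    [ -z "$host" ] && continue
    echo "scp -r $src $user@$host:$dest"
  done < "$hosts_file"
}

# Usage, from /home/hadoop on the master:
# print_copy_commands hbase/conf/regionservers hbase hadoop /home/hadoop
# print_copy_commands hbase/conf/regionservers hbase hadoop /home/hadoop | sh
```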

Start HBase:

start-hbase.sh

[hadoop@master conf]$ start-hbase.sh

master: running zookeeper, logging to /home/hadoop/hbase/bin/../logs/hbase-hadoop-zookeeper-master.out

saver1: running zookeeper, logging to /home/hadoop/hbase/bin/../logs/hbase-hadoop-zookeeper-saver1.out

saver2: running zookeeper, logging to /home/hadoop/hbase/bin/../logs/hbase-hadoop-zookeeper-saver2.out

running master, logging to /home/hadoop/hbase/logs/hbase-hadoop-master-master.out

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

saver1: running regionserver, logging to /home/hadoop/hbase/bin/../logs/hbase-hadoop-regionserver-saver1.out

saver2: running regionserver, logging to /home/hadoop/hbase/bin/../logs/hbase-hadoop-regionserver-saver2.out

saver1: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

saver1: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

saver2: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

saver2: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0
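The startup log only says that the daemons were launched. To see whether they are still alive, the JDK's `jps` tool can be checked on each node. The sketch below assumes `jps` is on the PATH; the helper name `has_java_process` is hypothetical. `HQuorumPeer` is the ZooKeeper process that HBase starts itself when `HBASE_MANAGES_ZK=true`.

```shell
# Hypothetical helper: succeed if a JVM whose main class matches the given
# name appears in `jps` output on the current node.
has_java_process() {
  jps | awk -v p="$1" '$2 == p { ok = 1 } END { exit !ok }'
}

# On master:
# has_java_process HMaster && has_java_process HQuorumPeer && echo "master OK"
# On saver1 and saver2:
# has_java_process HRegionServer && echo "regionserver OK"
```

If a process is missing, the matching .out and .log files under /home/hadoop/hbase/logs are the first place to look.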

Enter the HBase shell

[hadoop@master conf]$ hbase shell

2019-01-08 12:12:30,900 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hbase/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

Version 1.4.9, rd625b212e46d01cb17db9ac2e9e927fdb201afa1, Wed Dec 5 11:54:10 PST 2018

hbase(main):001:0>
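Once the prompt appears, a quick smoke test can confirm that the master and region servers actually accept reads and writes. The sketch below feeds a few standard HBase shell commands through stdin instead of typing them at the prompt. The table name `smoke_test`, the column family `cf`, and the function name `hbase_smoke_test` are made up for this example.

```shell
# Hypothetical smoke test: create a throwaway table, write one cell,
# read it back, then remove the table again.
hbase_smoke_test() {
  hbase shell <<'EOF'
status
create 'smoke_test', 'cf'
put 'smoke_test', 'row1', 'cf:greeting', 'hello'
scan 'smoke_test'
disable 'smoke_test'
drop 'smoke_test'
EOF
}

# Usage:
# hbase_smoke_test
```

The same six commands can be typed one by one at the `hbase(main)` prompt. A table has to be disabled before it can be dropped, which is why `disable` comes first.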

To be continued...