Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/lanxueCC/article/details/53284398

This post walks through src/caffe/layers/relu_layer.cpp in the Caffe framework, the file that implements the ReLU activation function.

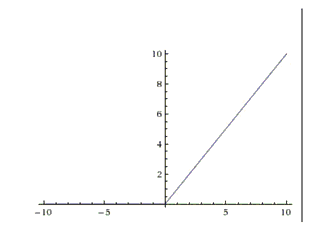

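ReLU has become a very popular activation function in recent years and has certain advantages over sigmoid and tanh (a comparison of the three is available at https://zhuanlan.zhihu.com/p/21462488?refer=intelligentunit). Its formula is f(x) = max(0, x). In other words, the activation is simply a threshold at 0, as plotted in the figure above.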
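To make the formula concrete, here is a minimal standalone C++ sketch of the scalar function, including the Leaky ReLU variant that Caffe's layer exposes through its negative_slope parameter. The helper names relu and leaky_relu are hypothetical and are not part of Caffe's API.

```cpp
#include <algorithm>

// Plain ReLU: f(x) = max(0, x), i.e. a threshold at zero.
double relu(double x) {
  return std::max(x, 0.0);
}

// Leaky ReLU in the form used by Caffe's ReLU layer:
// f(x) = max(x, 0) + negative_slope * min(x, 0).
// With negative_slope == 0 this reduces to plain ReLU.
double leaky_relu(double x, double negative_slope) {
  return std::max(x, 0.0) + negative_slope * std::min(x, 0.0);
}
```

For example, relu(-2.0) is 0 and relu(3.0) is 3, while leaky_relu(-2.0, 0.5) is -1: negative inputs are scaled down rather than zeroed.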

Below are my annotations from reading the ReLU layer code:

relu_layer.hpp:

```cpp
#ifndef CAFFE_RELU_LAYER_HPP_
#define CAFFE_RELU_LAYER_HPP_

#include <vector>

#include "caffe/blob.hpp"
#include "caffe/layer.hpp"
#include "caffe/proto/caffe.pb.h"

#include "caffe/layers/neuron_layer.hpp"

namespace caffe {

/**
 * @brief Rectified Linear Unit non-linearity @f$ y = \max(0, x) @f$.
 *        The simple max is fast to compute, and the function does not saturate.
 */
// The ReLU layer class, derived from NeuronLayer.
template <typename Dtype>
class ReLULayer : public NeuronLayer<Dtype> {
 public:
  /**
   * @param param provides ReLUParameter relu_param,
   *     with ReLULayer options:
   *   - negative_slope (\b optional, default 0).
   *     the value @f$ \nu @f$ by which negative values are multiplied.
   */
  // Constructor: the LayerParameter (the parameters stored in the protobuf
  // definition) is passed straight through to the NeuronLayer base class.
  explicit ReLULayer(const LayerParameter& param)
      : NeuronLayer<Dtype>(param) {}

  // Inline function that returns this layer's type name.
  virtual inline const char* type() const { return "ReLU"; }

 protected:
  /**
   * @param bottom input Blob vector (length 1)
   *   -# @f$ (N \times C \times H \times W) @f$
   *      the inputs @f$ x @f$
   * @param top output Blob vector (length 1)
   *   -# @f$ (N \times C \times H \times W) @f$
   *      the computed outputs @f$
   *        y = \max(0, x)
   *      @f$ by default.  If a non-zero negative_slope @f$ \nu @f$ is provided,
   *      the computed outputs are @f$ y = \max(0, x) + \nu \min(0, x) @f$.
   */
  // Forward pass, CPU implementation.
  virtual void Forward_cpu(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);
  // Forward pass, GPU implementation.
  virtual void Forward_gpu(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);
  // Note: in the forward pass, bottom is the input and top is the output.

  /**
   * @brief Computes the error gradient w.r.t. the ReLU inputs.
   *
   * @param top output Blob vector (length 1), providing the error gradient with
   *      respect to the outputs
   *   -# @f$ (N \times C \times H \times W) @f$
   *      containing error gradients @f$ \frac{\partial E}{\partial y} @f$
   *      with respect to computed outputs @f$ y @f$
   * @param propagate_down see Layer::Backward.
   * @param bottom input Blob vector (length 1)
   *   -# @f$ (N \times C \times H \times W) @f$
   *      the inputs @f$ x @f$; Backward fills their diff with
   *      gradients @f$
   *        \frac{\partial E}{\partial x} = \left\{
   *        \begin{array}{lr}
   *            0 & \mathrm{if} \; x \le 0 \\
   *            \frac{\partial E}{\partial y} & \mathrm{if} \; x > 0
   *        \end{array} \right.
   *      @f$ if propagate_down[0], by default.
   *      If a non-zero negative_slope @f$ \nu @f$ is provided,
   *      the computed gradients are @f$
   *        \frac{\partial E}{\partial x} = \left\{
   *        \begin{array}{lr}
   *            \nu \frac{\partial E}{\partial y} & \mathrm{if} \; x \le 0 \\
   *            \frac{\partial E}{\partial y} & \mathrm{if} \; x > 0
   *        \end{array} \right.
   *      @f$.
   */
  // Backward pass, CPU implementation.
  virtual void Backward_cpu(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom);
  // Backward pass, GPU implementation.
  virtual void Backward_gpu(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom);
  // Note: in the backward pass, top supplies the incoming gradient (the input)
  // and bottom receives the computed gradient (the output). propagate_down
  // holds one bool per bottom blob, saying whether a gradient should be
  // propagated to that blob.
};

}  // namespace caffe

#endif  // CAFFE_RELU_LAYER_HPP_
```

relu_layer.cpp:

```cpp
#include <algorithm>
#include <vector>

#include "caffe/layers/relu_layer.hpp"

namespace caffe {

// Forward pass of the ReLU layer.
template <typename Dtype>
void ReLULayer<Dtype>::Forward_cpu(const vector<Blob<Dtype>*>& bottom,
    const vector<Blob<Dtype>*>& top) {
  const Dtype* bottom_data = bottom[0]->cpu_data();  // read-only pointer to the input data
  Dtype* top_data = top[0]->mutable_cpu_data();      // writable pointer to the output data
  // Total number of elements in the input blob (N * C * H * W).
  const int count = bottom[0]->count();
  // negative_slope is the Leaky ReLU parameter. It defaults to 0, which gives
  // the ordinary ReLU.
  // Leaky ReLU is an attempt to fix the "dying ReLU" problem: where ordinary
  // ReLU outputs 0 for x < 0, Leaky ReLU keeps a small slope there, e.g. 0.01.
  // Leaky ReLU: f(x) = max(x, 0) + alpha * min(x, 0), where alpha is the
  // negative_slope parameter read below.
  Dtype negative_slope = this->layer_param_.relu_param().negative_slope();
  for (int i = 0; i < count; ++i) {
    top_data[i] = std::max(bottom_data[i], Dtype(0))
        + negative_slope * std::min(bottom_data[i], Dtype(0));
  }
}

// Backward pass of the ReLU layer.
template <typename Dtype>
void ReLULayer<Dtype>::Backward_cpu(const vector<Blob<Dtype>*>& top,
    const vector<bool>& propagate_down,
    const vector<Blob<Dtype>*>& bottom) {
  // propagate_down[0] says whether a gradient must be computed for bottom[0];
  // it is central to how Caffe implements backpropagation.
  if (propagate_down[0]) {
    // Input data saved from the forward pass (this layer's bottom).
    const Dtype* bottom_data = bottom[0]->cpu_data();
    // Gradient w.r.t. this layer's output, handed down from the next layer
    // (the input of the backward computation).
    const Dtype* top_diff = top[0]->cpu_diff();
    // Gradient w.r.t. this layer's input, passed on to the previous layer
    // (the output of the backward computation).
    Dtype* bottom_diff = bottom[0]->mutable_cpu_diff();
    // Total number of elements involved in the computation.
    const int count = bottom[0]->count();
    // See the explanation of this parameter in Forward_cpu above.
    Dtype negative_slope = this->layer_param_.relu_param().negative_slope();
    // (bottom_data[i] > 0) is the ReLU derivative: the comparison evaluates
    // to 1 when bottom_data[i] > 0 and to 0 otherwise.
    // ((bottom_data[i] > 0) + negative_slope * (bottom_data[i] <= 0)) is the
    // Leaky ReLU derivative: 1 for x > 0 and negative_slope for x <= 0.
    // By the chain rule, the gradient w.r.t. the input equals the gradient
    // w.r.t. the output multiplied by the local derivative of the activation.
    for (int i = 0; i < count; ++i) {
      bottom_diff[i] = top_diff[i] * ((bottom_data[i] > 0)
          + negative_slope * (bottom_data[i] <= 0));
    }
  }
}

#ifdef CPU_ONLY
STUB_GPU(ReLULayer);
#endif

INSTANTIATE_CLASS(ReLULayer);

}  // namespace caffe
```
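To check the two loops above without building Caffe, the following sketch reproduces Forward_cpu and Backward_cpu on a plain std::vector<double>. The functions relu_forward and relu_backward are hypothetical stand-ins; there are no Blob objects, and the input is assumed to be already flattened, which mirrors how the Caffe loops treat the blob as count contiguous elements.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Same element-wise rule as ReLULayer::Forward_cpu:
// top[i] = max(bottom[i], 0) + negative_slope * min(bottom[i], 0).
std::vector<double> relu_forward(const std::vector<double>& bottom,
                                 double negative_slope) {
  std::vector<double> top(bottom.size());
  for (std::size_t i = 0; i < bottom.size(); ++i) {
    top[i] = std::max(bottom[i], 0.0) + negative_slope * std::min(bottom[i], 0.0);
  }
  return top;
}

// Same rule as ReLULayer::Backward_cpu: the local derivative is 1 for x > 0
// and negative_slope for x <= 0; the chain rule multiplies it by top_diff.
std::vector<double> relu_backward(const std::vector<double>& bottom,
                                  const std::vector<double>& top_diff,
                                  double negative_slope) {
  std::vector<double> bottom_diff(bottom.size());
  for (std::size_t i = 0; i < bottom.size(); ++i) {
    bottom_diff[i] = top_diff[i] *
        ((bottom[i] > 0) + negative_slope * (bottom[i] <= 0));
  }
  return bottom_diff;
}
```

A central finite difference, (f(x + h) - f(x - h)) / (2h) computed with relu_forward, should agree with relu_backward for any x away from 0. At exactly x = 0 the function is not differentiable, and the `<=` comparison means this code (like Caffe's) picks negative_slope as the derivative there.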