Today we'll use Scrapy to crawl product information from https://www.tous.com/us-en/jewelry.

First, create the project: `scrapy startproject tous`

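Then generate the spider. The spider below subclasses `CrawlSpider`, so the `crawl` template gives a matching skeleton: `scrapy genspider -t crawl tou tous.com`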

Step 1: define the item in items.py.

```python
import scrapy


class TousItem(scrapy.Item):
    # Input URLs and download results for FilesPipeline
    file_urls = scrapy.Field()
    files = scrapy.Field()
    # Input URLs and download results for ImagesPipeline
    image_urls = scrapy.Field()
    images = scrapy.Field()

    current_time = scrapy.Field()   # crawl timestamp
    product_url = scrapy.Field()
    name = scrapy.Field()
    marketprice = scrapy.Field()
    price = scrapy.Field()
    sku = scrapy.Field()
    des = scrapy.Field()            # product description
    image = scrapy.Field()          # image URLs saved to MongoDB
    # Breadcrumb levels 1 to 7
    v_categories_name_1 = scrapy.Field()
    v_categories_name_2 = scrapy.Field()
    v_categories_name_3 = scrapy.Field()
    v_categories_name_4 = scrapy.Field()
    v_categories_name_5 = scrapy.Field()
    v_categories_name_6 = scrapy.Field()
    v_categories_name_7 = scrapy.Field()
    shop = scrapy.Field()           # source shop URL
```
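The seven `v_categories_name_N` fields hold breadcrumb levels 1 to 7 of a product page. A small stdlib sketch of that mapping, assuming the crumbs arrive as a list of strings in page order; `breadcrumb_fields` is a hypothetical helper, and skipping whitespace-only crumbs is an assumption about what `::text` may return:

```python
from typing import Dict, List

MAX_LEVELS = 7  # matches v_categories_name_1 .. v_categories_name_7 in TousItem


def breadcrumb_fields(crumbs: List[str], max_levels: int = MAX_LEVELS) -> Dict[str, str]:
    """Map breadcrumb texts to v_categories_name_N keys; missing levels become ""."""
    # Assumption: whitespace-only text nodes are noise, so they are dropped
    cleaned = [c.strip() for c in crumbs if c.strip()]
    return {
        f"v_categories_name_{i}": cleaned[i - 1] if i <= len(cleaned) else ""
        for i in range(1, max_levels + 1)
    }
```

In `parse_item` this could replace the seven separate assignments with `item.update(breadcrumb_fields(crumbs))`, since a Scrapy item behaves like a dict for declared fields.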

Step 2: using the item class, write tou.py to extract the data we need from each page and hand it to the pipelines.

```python
import time

from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule

from tous.items import TousItem


class TouSpider(CrawlSpider):
    name = 'tou'
    allowed_domains = ['tous.com']
    start_urls = ['https://www.tous.com/us-en/jewelry']

    # LinkExtractor applies `allow` with re.search (unanchored) and CrawlSpider hands each
    # link to the first rule that extracts it, so this single rule already covers deeper
    # category URLs and ?p=N pagination pages; narrower follow-only rules placed after it
    # would never receive a link.
    rules = (
        Rule(LinkExtractor(allow=r'https://www\.tous\.com/us-en/[a-zA-Z0-9-]+/'),
             callback='parse_item', follow=True),
    )

    def parse_item(self, response):
        # Every followed page reaches this callback; skip pages without a product name/SKU
        name = response.css("h1#products-name::text").get()
        sku = response.css("span#product-sku::text").get()
        if not name or not sku:
            self.logger.debug("Not a product page: %s", response.url)
            return

        item = TousItem()
        item["name"] = name.strip()
        item["sku"] = "N" + sku.strip()
        # Canonical URL from <meta property="og:url">, falling back to the fetched URL
        item["product_url"] = response.css('meta[property="og:url"]::attr(content)').get(response.url)
        # Both price fields read the same regular-price element
        price = response.css(
            "div.product-shop-inner div.price-box span.regular-price span.price::text").get("").strip()
        item["price"] = price
        item["marketprice"] = price
        item["des"] = response.css("div.easytabs-content ul li::text").get("").strip()

        # Thumbnail URLs contain /140x/; swapping in /1000x/ requests the large version
        all_img = [response.urljoin(src.replace("/140x/", "/1000x/"))
                   for src in response.css("a.thumbnail img::attr(src)").getall()]
        if not all_img:
            # No thumbnails: fall back to the Open Graph image
            og_image = response.css('meta[property="og:image"]::attr(content)').get()
            all_img = [og_image] if og_image else []
        item["image"] = all_img

        item["current_time"] = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())

        # Breadcrumb level i goes to v_categories_name_i; missing levels become ""
        crumbs = response.css("div.breadcrumbs ul li a::text").getall()
        for i in range(1, 8):
            item[f"v_categories_name_{i}"] = crumbs[i - 1].strip() if len(crumbs) >= i else ""

        item["shop"] = "https://www.tous.com/us-en/jewelry"
        item["file_urls"] = [item["product_url"]]  # FilesPipeline saves the product page HTML
        item["image_urls"] = all_img               # ImagesPipeline downloads the pictures
        self.logger.debug("Product %s: %d images", item["sku"], len(all_img))
        yield item
```
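Why can one rule with the pattern `https://www.tous.com/us-en/[a-zA-Z0-9-]+/` stand in for separate category and `?p=N` pagination rules? Scrapy's LinkExtractor tests `allow` patterns with `re.search`, so a match anywhere in the URL is enough. A quick stdlib check; the sample URLs are hypothetical shapes, not paths confirmed to exist on the site:

```python
import re

# The first rule's pattern (dots escaped, character class tidied)
FIRST_RULE = re.compile(r"https://www\.tous\.com/us-en/[a-zA-Z0-9-]+/")

# Hypothetical URLs shaped like category and pagination pages
SAMPLE_URLS = [
    "https://www.tous.com/us-en/rings/",
    "https://www.tous.com/us-en/jewelry/rings/",
    "https://www.tous.com/us-en/jewelry/rings/?p=2",
    "https://www.tous.com/us-en/rings/?p=3",
]


def matches_like_link_extractor(url: str) -> bool:
    """search(): the pattern may match any part of the URL, as LinkExtractor's allow does."""
    return FIRST_RULE.search(url) is not None


def matches_anchored(url: str) -> bool:
    """fullmatch(): what the rule would accept if the whole URL had to match."""
    return FIRST_RULE.fullmatch(url) is not None


if __name__ == "__main__":
    for url in SAMPLE_URLS:
        print(f"{url}: search={matches_like_link_extractor(url)} fullmatch={matches_anchored(url)}")
```

Every sample passes the `search` check, while only the first one would pass an anchored match. To restrict a rule to one URL shape, anchor the pattern explicitly (for example with `$`).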

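Step 3: write the pipelines (pipelines.py) that process what the spider returns. They do two things here: 1) save the data into MongoDB; 2) store the downloaded files and images under custom names.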

```python
import pymongo


class MongoDBPipeline:
    def __init__(self, server, port, db_name, collection_name):
        self.client = pymongo.MongoClient(server, port)
        self.product_col = self.client[db_name][collection_name]

    @classmethod
    def from_crawler(cls, crawler):
        # Connection details come from the MONGODB_* entries in settings.py
        s = crawler.settings
        return cls(s.get('MONGODB_SERVER'), s.getint('MONGODB_PORT'),
                   s.get('MONGODB_DB'), s.get('MONGODB_COLLECTION'))

    def close_spider(self, spider):
        self.client.close()

    def process_item(self, item, spider):
        # Same SKU from the same shop already stored: don't insert it again
        result = self.product_col.find_one({"sku": item["sku"], "product_shop": item["shop"]})
        if result is None:
            self.product_col.insert_one({
                "sku": item["sku"], "img": item["image"], "name": item["name"],
                "price": item["price"], "marketprice": item["marketprice"], "des": item["des"],
                "addtime": item["current_time"], "product_url": item["product_url"],
                "v_specials_last_modified": "", "v_products_weight": "0", "v_last_modified": "",
                "v_date_added": "", "v_products_quantity": "1000", "v_manufacturers_name": "1000",
                "v_categories_name_1": item["v_categories_name_1"],
                "v_categories_name_2": item["v_categories_name_2"],
                "v_categories_name_3": item["v_categories_name_3"],
                "v_categories_name_4": item["v_categories_name_4"],
                "v_categories_name_5": item["v_categories_name_5"],
                "v_categories_name_6": item["v_categories_name_6"],
                "v_categories_name_7": item["v_categories_name_7"],
                "v_tax_class_title": "Taxable Goods", "v_status": "1",
                "v_metatags_products_name_status": "0", "v_metatags_title_status": "0",
                "v_metatags_model_status": "0", "v_metatags_price_status": "0",
                "v_metatags_title_tagline_status": "0", "v_metatags_title_1": "",
                "v_metatags_keywords_1": "", "v_metatags_description_1": "",
                "product_shop": item["shop"],
            })
            spider.logger.info("Inserted %s into MongoDB", item["sku"])
        else:
            spider.logger.info("%s is already in MongoDB", item["sku"])
        # Return the item either way so the file and image pipelines still run
        return item
```
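The literal passed to `insert_one` mixes values copied from the item with fixed default columns. One way to keep that readable is to build the document in a separate function. This is a sketch with the same 32 keys and values as that literal; `STATIC_FIELDS`, `RENAMED`, `COPIED` and `build_product_doc` are hypothetical names:

```python
from typing import Any, Dict, Mapping

# Constant columns, values unchanged from the insert_one literal
STATIC_FIELDS: Dict[str, str] = {
    "v_specials_last_modified": "", "v_products_weight": "0", "v_last_modified": "",
    "v_date_added": "", "v_products_quantity": "1000", "v_manufacturers_name": "1000",
    "v_tax_class_title": "Taxable Goods", "v_status": "1",
    "v_metatags_products_name_status": "0", "v_metatags_title_status": "0",
    "v_metatags_model_status": "0", "v_metatags_price_status": "0",
    "v_metatags_title_tagline_status": "0", "v_metatags_title_1": "",
    "v_metatags_keywords_1": "", "v_metatags_description_1": "",
}

# MongoDB key -> item field, for fields stored under a different name
RENAMED: Dict[str, str] = {"img": "image", "addtime": "current_time", "product_shop": "shop"}

# Item fields stored under their own name
COPIED = ["sku", "name", "price", "marketprice", "des", "product_url"] + [
    f"v_categories_name_{i}" for i in range(1, 8)
]


def build_product_doc(item: Mapping[str, Any]) -> Dict[str, Any]:
    """Return a fresh MongoDB document for one scraped product."""
    doc: Dict[str, Any] = dict(STATIC_FIELDS)
    doc.update({key: item[key] for key in COPIED})
    doc.update({mongo_key: item[item_key] for mongo_key, item_key in RENAMED.items()})
    return doc
```

The pipeline would then call `self.product_col.insert_one(build_product_doc(item))`. Building a new dict on every call also matters because pymongo's `insert_one` adds an `_id` key to the dict it receives.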

```python
from scrapy import Request
from scrapy.pipelines.images import ImagesPipeline


class DownloadImagePipeline(ImagesPipeline):
    def get_media_requests(self, item, info):
        # One request per image; meta carries the item and the image's position
        for index, image_url in enumerate(item['image_urls']):
            yield Request(image_url, meta={'item': item, 'index': index})

    def file_path(self, request, response=None, info=None, *, item=None):
        # Scrapy >= 2.4 passes the item as a keyword argument; older versions only have meta
        item = item if item is not None else request.meta['item']
        index = request.meta['index']
        # Stored as full/<sku>/<sku>_<index>.jpg under IMAGES_STORE.
        # No time.sleep() here: file_path runs inside Scrapy's event loop, so sleeping
        # would stall the whole crawl.
        image_name = "{0}_{1}.jpg".format(item["sku"], index)
        return 'full/{0}/{1}'.format(item["sku"], image_name)
```
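`file_path` only needs the SKU and the image's position to build `full/<sku>/<sku>_<n>.jpg`, a path relative to `IMAGES_STORE`. A stdlib sketch of that naming scheme; `planned_image_paths` is a hypothetical helper, and the order-preserving de-duplication is an added assumption (in case a page lists the same image URL twice), not something the post does:

```python
from typing import Dict, List


def planned_image_paths(sku: str, image_urls: List[str]) -> Dict[str, str]:
    """Map each distinct image URL to full/<sku>/<sku>_<n>.jpg, numbered in first-seen order."""
    unique_urls = list(dict.fromkeys(image_urls))  # drops repeats, keeps original order
    return {
        url: "full/{0}/{0}_{1}.jpg".format(sku, index)
        for index, url in enumerate(unique_urls)
    }
```

With de-duplication the `_n` suffixes stay contiguous. In `get_media_requests` the same idea would be `enumerate(dict.fromkeys(item['image_urls']))`.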

```python
from scrapy import Request
from scrapy.pipelines.files import FilesPipeline


class DownloadFilePipeline(FilesPipeline):
    def get_media_requests(self, item, info):
        # meta carries the item so file_path can name the file after its SKU
        for file_url in item['file_urls']:
            yield Request(file_url, meta={'item': item})

    def file_path(self, request, response=None, info=None, *, item=None):
        # Scrapy >= 2.4 passes the item as a keyword argument; older versions only have meta
        item = item if item is not None else request.meta['item']
        # Stored as full/<sku>/<sku>.html under FILES_STORE (no blocking sleep here either)
        return 'full/{0}/{0}.html'.format(item["sku"])
```

Step 4: if we keep hitting the site from our own IP address, we may eventually be blocked. The fix is to go through proxies, so we write a class in middlewares.py:

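```python
import datetime
import random


class TouspiderProxyIPLoadMiddleware:
    """Route requests through a random proxy from proxy.txt, switching every minute."""

    def __init__(self, proxy_file="proxy.txt"):
        # proxy.txt holds one host:port per line; blank lines (e.g. a trailing newline) are skipped
        with open(proxy_file) as f:
            self.proxies = [line.strip() for line in f if line.strip()]
        self.proxy = ""
        # Start already expired so the first request picks a proxy
        self.expire_datetime = datetime.datetime.now() - datetime.timedelta(minutes=1)

    def _get_proxyip(self):
        self.proxy = random.choice(self.proxies)
        self.expire_datetime = datetime.datetime.now() + datetime.timedelta(minutes=1)

    def _check_expire(self, spider):
        if datetime.datetime.now() >= self.expire_datetime:
            self._get_proxyip()
            spider.logger.debug("Switched proxy to %s", self.proxy)

    # Scrapy calls process_request(request, spider): the request comes first
    def process_request(self, request, spider):
        self._check_expire(spider)
        request.meta['proxy'] = "http://" + self.proxy
```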
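The rotation logic (pick a random proxy, keep it until it expires, then pick again) is easier to test when it doesn't depend on the real clock. A sketch of the same idea as a standalone class; `RotatingProxy`, its `now` and `rng` parameters, and the one-minute default are assumptions for illustration, not part of Scrapy:

```python
import datetime
import random
from typing import Callable, Iterable, Optional


class RotatingProxy:
    """Hypothetical helper: keep one randomly chosen proxy until `ttl` expires, then pick again."""

    def __init__(
        self,
        proxies: Iterable[str],
        ttl: datetime.timedelta = datetime.timedelta(minutes=1),
        now: Callable[[], datetime.datetime] = datetime.datetime.now,
        rng: Optional[random.Random] = None,
    ) -> None:
        # Ignore blank lines such as the one a trailing newline in proxy.txt produces
        self.proxies = [p.strip() for p in proxies if p.strip()]
        if not self.proxies:
            raise ValueError("no proxies available")
        self.ttl = ttl
        self.now = now  # injectable clock, so tests can control time
        self.rng = rng if rng is not None else random.Random()
        self.current = ""
        self.expires_at = datetime.datetime.min  # forces a pick on first use

    def get(self) -> str:
        """Return the proxy URL for the next request, rotating if the current one expired."""
        current_time = self.now()
        if current_time >= self.expires_at:
            self.current = self.rng.choice(self.proxies)
            self.expires_at = current_time + self.ttl
        return "http://" + self.current
```

Inside the middleware, `__init__` could build it with `with open("proxy.txt") as f: self.rotator = RotatingProxy(f)`, and `process_request` would set `request.meta['proxy'] = self.rotator.get()`.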

The last step, of course, is registering everything in settings.py: the proxy middleware, MongoDB, and the custom file and image pipelines:

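```python
DOWNLOADER_MIDDLEWARES = {
    # 'tous.middlewares.TousDownloaderMiddleware': 543,
    # 543 runs before Scrapy's built-in HttpProxyMiddleware (750), which applies meta['proxy']
    'tous.middlewares.TouspiderProxyIPLoadMiddleware': 543,
}

ITEM_PIPELINES = {
    # 'tous.pipelines.TousPipeline': 300,
    'tous.pipelines.MongoDBPipeline': 1,
    'tous.pipelines.DownloadFilePipeline': 2,
    'tous.pipelines.DownloadImagePipeline': 3,  # ImagesPipeline requires Pillow
}

# Root folders for downloaded files and images
FILES_STORE = 'files'
IMAGES_STORE = 'images'

MONGODB_SERVER = 'your-mongodb-host'  # database address
MONGODB_PORT = 27017
# Database name
MONGODB_DB = 'product'
# Collection that stores the products
MONGODB_COLLECTION = 'product'
```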

Finally, change two more things: the default request headers, and set ROBOTSTXT_OBEY to False:

```python
ROBOTSTXT_OBEY = False

DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36',
    # The HTTP header name is spelled "Referer"
    'Referer': 'https://www.tous.com/us-en/jewelry',
}
```

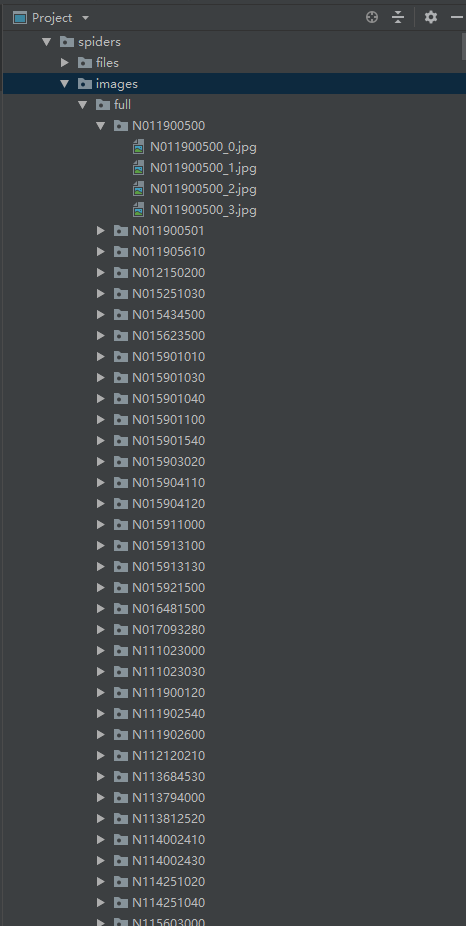

Then run `scrapy crawl tou` to start crawling. This is how the downloaded images and files are stored:

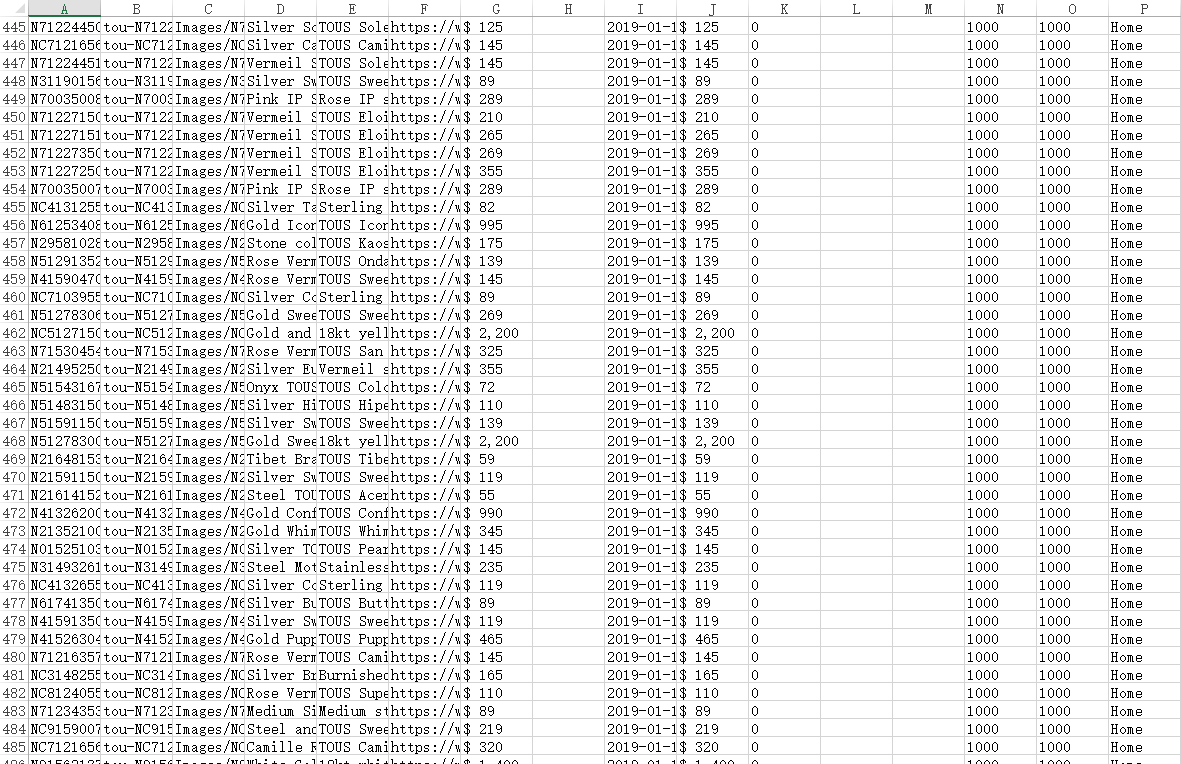

Once the run finishes, all the images and files have been downloaded. Finally, we export the products from MongoDB to Excel, which the openpyxl library makes easy:

```python
from openpyxl import Workbook
```
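The export itself isn't shown in the post, so here is a minimal sketch, assuming the documents carry the field names written by MongoDBPipeline. `COLUMNS`, `docs_to_rows` and `export_to_xlsx` are hypothetical names and the column selection is only an illustrative choice. openpyxl is imported inside `export_to_xlsx` so the row-building part works even without it installed:

```python
from typing import Any, Iterable, List, Mapping, Sequence

# Illustrative selection of fields stored by MongoDBPipeline
COLUMNS = ["sku", "name", "price", "marketprice", "product_url", "img",
           "v_categories_name_1", "v_categories_name_2", "v_categories_name_3", "addtime"]


def docs_to_rows(docs: Iterable[Mapping[str, Any]],
                 columns: Sequence[str] = COLUMNS) -> List[List[str]]:
    """Header row plus one row per document; list values (e.g. img) are comma-joined."""
    rows: List[List[str]] = [list(columns)]
    for doc in docs:
        row = []
        for col in columns:
            value = doc.get(col, "")
            row.append(",".join(map(str, value)) if isinstance(value, list) else str(value))
        rows.append(row)
    return rows


def export_to_xlsx(docs: Iterable[Mapping[str, Any]], path: str,
                   columns: Sequence[str] = COLUMNS) -> None:
    """Write the rows from docs_to_rows into the first sheet of a new workbook."""
    from openpyxl import Workbook  # imported lazily; only this function needs openpyxl

    wb = Workbook()
    ws = wb.active
    for row in docs_to_rows(docs, columns):
        ws.append(row)
    wb.save(path)
```

With pymongo, `collection.find()` yields plain dicts, so a call like `export_to_xlsx(client["product"]["product"].find(), "products.xlsx")` should fit the settings used above.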

I won't go into the details here since this part is fairly simple. Below is a partial screenshot of the resulting Excel file.

And that's the complete process of building a crawler!