A perspective transformation requires a 3x3 transformation matrix. Straight lines remain straight after the transformation. To find the matrix, you need 4 points on the input image and the corresponding 4 points on the output image. No 3 of these 4 points may be collinear.
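As a rough illustration of how four point pairs determine the matrix, here is a minimal sketch that fixes M33 = 1 and solves the resulting 8x8 linear system by Gauss-Jordan elimination. The names `Pt` and `solveHomography` are made up for this example. This is not the code behind `fbc::getPerspectiveTransform` or OpenCV, just one straightforward way to do it.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

struct Pt { double x, y; };  // hypothetical point type, for this sketch only

// Solves for the row-major 3x3 matrix H (with H[8] fixed to 1) that maps
// src[i] to dst[i]. Returns false if the linear system is numerically
// singular, which happens for degenerate point configurations.
bool solveHomography(const Pt src[4], const Pt dst[4], std::array<double, 9>& H)
{
    double A[8][9] = {};
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        // u = (a*x + b*y + c) / (g*x + h*y + 1), and likewise for v,
        // rearranged so that the unknowns a..h appear linearly.
        const double r0[9] = { x, y, 1, 0, 0, 0, -x * u, -y * u, u };
        const double r1[9] = { 0, 0, 0, x, y, 1, -x * v, -y * v, v };
        for (int j = 0; j < 9; ++j) {
            A[2 * i][j] = r0[j];
            A[2 * i + 1][j] = r1[j];
        }
    }
    // Gauss-Jordan elimination with partial pivoting on the augmented matrix.
    for (int col = 0; col < 8; ++col) {
        int piv = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(A[r][col]) > std::fabs(A[piv][col])) piv = r;
        if (std::fabs(A[piv][col]) < 1e-12) return false;
        for (int j = 0; j < 9; ++j) std::swap(A[col][j], A[piv][j]);
        for (int r = 0; r < 8; ++r) {
            if (r == col) continue;
            const double f = A[r][col] / A[col][col];
            for (int j = col; j < 9; ++j) A[r][j] -= f * A[col][j];
        }
    }
    for (int i = 0; i < 8; ++i) H[i] = A[i][8] / A[i][i];
    H[8] = 1.0;
    return true;
}
```

Fixing M33 = 1 is a common normalization, but it assumes the true M33 is non-zero, so treat this as a sketch rather than a robust solver.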

A perspective transformation projects an image onto a new view plane; it is also called a projective mapping.

Formula: dst(x, y) = src((M11*x + M12*y + M13) / (M31*x + M32*y + M33), (M21*x + M22*y + M23) / (M31*x + M32*y + M33))
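To make the formula concrete, the sketch below evaluates it for a single destination pixel: it computes the homogeneous coordinates (X, Y, W) and divides by W to get the source position to sample. `Pt2d` and `projectPoint` are hypothetical names used only here. Note that the matrix in this formula maps destination coordinates to source coordinates, which is why the implementation further down inverts the matrix unless `WARP_INVERSE_MAP` is set.

```cpp
#include <cassert>
#include <cmath>

struct Pt2d { double x, y; };  // hypothetical point type, for this sketch only

// M is a row-major 3x3 matrix. Writes the source position for destination
// pixel (x, y) into out. Returns false when W is zero, i.e. the point maps
// to infinity. (The implementation below uses coordinate 0 in that case
// instead of reporting failure.)
bool projectPoint(const double M[9], double x, double y, Pt2d& out)
{
    const double X = M[0] * x + M[1] * y + M[2];
    const double Y = M[3] * x + M[4] * y + M[5];
    const double W = M[6] * x + M[7] * y + M[8];
    if (W == 0.0) return false;
    out.x = X / W;
    out.y = Y / W;
    return true;
}
```

With a last row of (0, 0.5, 1), for example, the pixel (2, 2) has W = 2 and is sampled from (1, 1), while pixels on the row y = 0 are sampled from their own position.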

Differences between affine and perspective transformations:

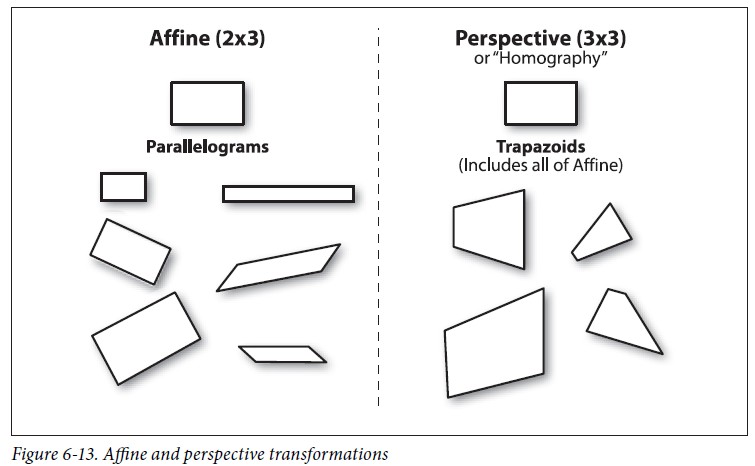

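(1) An affine transformation can turn a rectangle into a parallelogram: it can shear the rectangle as long as opposite sides stay parallel, and it can rotate or scale it. A perspective transformation can do everything an affine transformation can, and can additionally turn a rectangle into a trapezoid. In other words, the result of an affine transformation is still a parallelogram, while the result of a perspective transformation is a general quadrilateral. Affine transformations are therefore a subset of perspective transformations.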

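(2) In OpenCV, a transformation based on a 2x3 matrix is an affine transformation of the image; a transformation based on a 3x3 matrix is a perspective transformation, also called a homography. Perspective transformation is mostly used for image rectification.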
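The subset relationship can be shown in a few lines: appending the row (0, 0, 1) to a 2x3 affine matrix gives a 3x3 matrix whose denominator W is always 1, so the perspective formula reduces to the affine one. This is a small sketch with hypothetical helper names (`affineToPerspective`, `isAffine`), not part of fbc_cv or OpenCV.

```cpp
#include <array>
#include <cassert>

// Promotes a row-major 2x3 affine matrix to a row-major 3x3 matrix by
// appending the row (0, 0, 1).
std::array<double, 9> affineToPerspective(const std::array<double, 6>& A)
{
    std::array<double, 9> M = {{ A[0], A[1], A[2],
                                 A[3], A[4], A[5],
                                 0.0,  0.0,  1.0 }};
    return M;
}

// A 3x3 matrix describes an affine map when its last row is (0, 0, w)
// with w != 0: the denominator is then the same constant for every pixel.
bool isAffine(const std::array<double, 9>& M)
{
    return M[6] == 0.0 && M[7] == 0.0 && M[8] != 0.0;
}
```

A matrix with a non-zero M31 or M32 fails the `isAffine` check; those two entries are what let a rectangle become a trapezoid.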

The figure below is an illustration taken from the book Learning OpenCV:

Currently the uchar and float types are supported. In testing, the results are identical to those of OpenCV 3.1.

Implementation, warpPerspective.hpp:

// fbc_cv is free software and uses the same licence as OpenCV

#ifndef FBC_CV_WARP_PERSPECTIVE_HPP_

#define FBC_CV_WARP_PERSPECTIVE_HPP_

/* reference: include/opencv2/imgproc.hpp

modules/imgproc/src/imgwarp.cpp

*/

#include <algorithm> // std::min, std::max

#include <climits> // INT_MIN, INT_MAX

#include <typeinfo>

#include "core/mat.hpp"

#include "core/invert.hpp"

#include "imgproc.hpp"

#include "remap.hpp"

namespace fbc {

// Calculates a perspective transform from four pairs of the corresponding points

FBC_EXPORTS int getPerspectiveTransform(const Point2f src1[], const Point2f src2[], Mat_<double, 1>& dst);

// Applies a perspective transformation to an image

// The function cannot operate in-place

// support type: uchar/float

/*

\f[\texttt{ dst } (x, y) = \texttt{ src } \left(\frac{ M_{ 11 } x + M_{ 12 } y + M_{ 13 } }{M_{ 31 } x + M_{ 32 } y + M_{ 33 }},

\frac{ M_{ 21 } x + M_{ 22 } y + M_{ 23 } }{M_{ 31 } x + M_{ 32 } y + M_{ 33 }} \right)\f]

*/

template<typename _Tp1, typename _Tp2, int chs1, int chs2>

int warpPerspective(const Mat_<_Tp1, chs1>& src, Mat_<_Tp1, chs1>& dst, const Mat_<_Tp2, chs2>& M_,

int flags = INTER_LINEAR, int borderMode = BORDER_CONSTANT, const Scalar& borderValue = Scalar())

{

FBC_Assert(src.data != NULL && dst.data != NULL && M_.data != NULL);

FBC_Assert(src.cols > 0 && src.rows > 0 && dst.cols > 0 && dst.rows > 0);

FBC_Assert(src.data != dst.data);

FBC_Assert(typeid(double).name() == typeid(_Tp2).name() && M_.rows == 3 && M_.cols == 3);

FBC_Assert((typeid(uchar).name() == typeid(_Tp1).name()) || (typeid(float).name() == typeid(_Tp1).name())); // uchar/float

double M[9];

Mat_<double, 1> matM(3, 3, M);

int interpolation = flags & INTER_MAX;

if (interpolation == INTER_AREA)

interpolation = INTER_LINEAR;

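if (!(flags & WARP_INVERSE_MAP)) {

invert(M_, matM);

} else {

// M_ already maps dst to src: copy it unchanged so M is initialized (assumes M_ is stored contiguously)

const double* m = (const double*)M_.data;

for (int i = 0; i < 9; i++) M[i] = m[i];

}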

Range range(0, dst.rows);

const int BLOCK_SZ = 32;

short XY[BLOCK_SZ*BLOCK_SZ * 2], A[BLOCK_SZ*BLOCK_SZ];

int x, y, x1, y1, width = dst.cols, height = dst.rows;

int bh0 = std::min(BLOCK_SZ / 2, height);

int bw0 = std::min(BLOCK_SZ*BLOCK_SZ / bh0, width);

bh0 = std::min(BLOCK_SZ*BLOCK_SZ / bw0, height);

for (y = range.start; y < range.end; y += bh0) {

for (x = 0; x < width; x += bw0) {

int bw = std::min(bw0, width - x);

int bh = std::min(bh0, range.end - y); // height

Mat_<short, 2> _XY(bh, bw, XY), matA;

Mat_<_Tp1, chs1> dpart;

dst.getROI(dpart, Rect(x, y, bw, bh));

for (y1 = 0; y1 < bh; y1++) {

short* xy = XY + y1*bw * 2;

double X0 = M[0] * x + M[1] * (y + y1) + M[2];

double Y0 = M[3] * x + M[4] * (y + y1) + M[5];

double W0 = M[6] * x + M[7] * (y + y1) + M[8];

if (interpolation == INTER_NEAREST) {

x1 = 0;

for (; x1 < bw; x1++) {

double W = W0 + M[6] * x1;

W = W ? 1. / W : 0;

double fX = std::max((double)INT_MIN, std::min((double)INT_MAX, (X0 + M[0] * x1)*W));

double fY = std::max((double)INT_MIN, std::min((double)INT_MAX, (Y0 + M[3] * x1)*W));

int X = saturate_cast<int>(fX);

int Y = saturate_cast<int>(fY);

xy[x1 * 2] = saturate_cast<short>(X);

xy[x1 * 2 + 1] = saturate_cast<short>(Y);

}

} else {

short* alpha = A + y1*bw;

x1 = 0;

for (; x1 < bw; x1++) {

double W = W0 + M[6] * x1;

W = W ? INTER_TAB_SIZE / W : 0;

double fX = std::max((double)INT_MIN, std::min((double)INT_MAX, (X0 + M[0] * x1)*W));

double fY = std::max((double)INT_MIN, std::min((double)INT_MAX, (Y0 + M[3] * x1)*W));

int X = saturate_cast<int>(fX);

int Y = saturate_cast<int>(fY);

xy[x1 * 2] = saturate_cast<short>(X >> INTER_BITS);

xy[x1 * 2 + 1] = saturate_cast<short>(Y >> INTER_BITS);

alpha[x1] = (short)((Y & (INTER_TAB_SIZE - 1))*INTER_TAB_SIZE + (X & (INTER_TAB_SIZE - 1)));

}

}

}

if (interpolation == INTER_NEAREST) {

remap(src, dpart, _XY, Mat_<float, 1>(), interpolation, borderMode, borderValue);

} else {

Mat_<ushort, 1> _matA(bh, bw, A);

remap(src, dpart, _XY, _matA, interpolation, borderMode, borderValue);

}

}

}

return 0;

}

} // namespace fbc

#endif // FBC_CV_WARP_PERSPECTIVE_HPP_
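The interpolating branch of the inner loop above stores each source coordinate in fixed point: the coordinate is scaled by INTER_TAB_SIZE, the high bits become the integer pixel position in `XY`, and the low INTER_BITS bits of x and y are packed into one index in `A` that selects the interpolation weights. The sketch below reproduces that packing for a single coordinate pair. It assumes INTER_BITS = 5 and INTER_TAB_SIZE = 32, as in OpenCV, uses the hypothetical names `FixedCoord` and `encodeCoord`, rounds with `std::lround` rather than `saturate_cast`, and skips the saturation to short.

```cpp
#include <cassert>
#include <cmath>

const int kInterBits = 5;                  // assumed to match INTER_BITS
const int kInterTabSize = 1 << kInterBits; // assumed to match INTER_TAB_SIZE

struct FixedCoord {
    short xi, yi;  // integer part of the source position
    short alpha;   // packed fractional parts: fy * kInterTabSize + fx
};

// Encodes a source position (sx, sy) the way the non-nearest branch does.
// Intended for non-negative coordinates that fit in a short.
FixedCoord encodeCoord(double sx, double sy)
{
    const int X = static_cast<int>(std::lround(sx * kInterTabSize));
    const int Y = static_cast<int>(std::lround(sy * kInterTabSize));
    FixedCoord c;
    c.xi = static_cast<short>(X >> kInterBits);
    c.yi = static_cast<short>(Y >> kInterBits);
    c.alpha = static_cast<short>((Y & (kInterTabSize - 1)) * kInterTabSize
                                 + (X & (kInterTabSize - 1)));
    return c;
}
```

For the source position (10.25, 3.5) this gives the integer pixel (10, 3) and the fractional steps 8/32 and 16/32, packed as 16 * 32 + 8 = 520.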

#include "test_warpPerspective.hpp"

#include <assert.h>

#include <iostream> // std::cout

#include <opencv2/opencv.hpp>

#include <warpPerspective.hpp>

int test_getPerspectiveTransform()

{

cv::Mat matSrc = cv::imread("E:/GitCode/OpenCV_Test/test_images/lena.png", 1);

if (!matSrc.data) {

std::cout << "read image fail" << std::endl;

return -1;

}

fbc::Point2f src_vertices[4], dst_vertices[4];

src_vertices[0] = fbc::Point2f(0, 0);

src_vertices[1] = fbc::Point2f(matSrc.cols - 5, 0);

src_vertices[2] = fbc::Point2f(matSrc.cols - 10, matSrc.rows - 1);

src_vertices[3] = fbc::Point2f(8, matSrc.rows - 13);

dst_vertices[0] = fbc::Point2f(17, 21);

dst_vertices[1] = fbc::Point2f(matSrc.cols - 23, 19);

dst_vertices[2] = fbc::Point2f(matSrc.cols / 2 + 5, matSrc.rows / 3 + 7);

dst_vertices[3] = fbc::Point2f(55, matSrc.rows / 5 + 33);

fbc::Mat_<double, 1> warpMatrix(3, 3);

fbc::getPerspectiveTransform(src_vertices, dst_vertices, warpMatrix);

cv::Point2f src_vertices_[4], dst_vertices_[4];

src_vertices_[0] = cv::Point2f(0, 0);

src_vertices_[1] = cv::Point2f(matSrc.cols - 5, 0);

src_vertices_[2] = cv::Point2f(matSrc.cols - 10, matSrc.rows - 1);

src_vertices_[3] = cv::Point2f(8, matSrc.rows - 13);

dst_vertices_[0] = cv::Point2f(17, 21);

dst_vertices_[1] = cv::Point2f(matSrc.cols - 23, 19);

dst_vertices_[2] = cv::Point2f(matSrc.cols / 2 + 5, matSrc.rows / 3 + 7);

dst_vertices_[3] = cv::Point2f(55, matSrc.rows / 5 + 33);

cv::Mat warpMatrix_ = cv::getPerspectiveTransform(src_vertices_, dst_vertices_);

assert(warpMatrix.cols == warpMatrix_.cols && warpMatrix.rows == warpMatrix_.rows);

assert(warpMatrix.step == warpMatrix_.step);

for (int y = 0; y < warpMatrix.rows; y++) {

const fbc::uchar* p = warpMatrix.ptr(y);

const uchar* p_ = warpMatrix_.ptr(y);

for (int x = 0; x < warpMatrix.step; x++) {

assert(p[x] == p_[x]);

}

}

return 0;

}

int test_warpPerspective_uchar()

{

cv::Mat matSrc = cv::imread("E:/GitCode/OpenCV_Test/test_images/lena.png", 1);

if (!matSrc.data) {

std::cout << "read image fail" << std::endl;

return -1;

}

for (int interpolation = 0; interpolation < 5; interpolation++) {

fbc::Point2f src_vertices[4], dst_vertices[4];

src_vertices[0] = fbc::Point2f(0, 0);

src_vertices[1] = fbc::Point2f(matSrc.cols - 5, 0);

src_vertices[2] = fbc::Point2f(matSrc.cols - 10, matSrc.rows - 1);

src_vertices[3] = fbc::Point2f(8, matSrc.rows - 13);

dst_vertices[0] = fbc::Point2f(17, 21);

dst_vertices[1] = fbc::Point2f(matSrc.cols - 23, 19);

dst_vertices[2] = fbc::Point2f(matSrc.cols / 2 + 5, matSrc.rows / 3 + 7);

dst_vertices[3] = fbc::Point2f(55, matSrc.rows / 5 + 33);

fbc::Mat_<double, 1> warpMatrix(3, 3);

fbc::getPerspectiveTransform(src_vertices, dst_vertices, warpMatrix);

fbc::Mat_<uchar, 3> mat(matSrc.rows, matSrc.cols, matSrc.data);

fbc::Mat_<uchar, 3> warp_dst;

warp_dst.zeros(mat.rows, mat.cols);

fbc::warpPerspective(mat, warp_dst, warpMatrix, interpolation);

cv::Point2f src_vertices_[4], dst_vertices_[4];

src_vertices_[0] = cv::Point2f(0, 0);

src_vertices_[1] = cv::Point2f(matSrc.cols - 5, 0);

src_vertices_[2] = cv::Point2f(matSrc.cols - 10, matSrc.rows - 1);

src_vertices_[3] = cv::Point2f(8, matSrc.rows - 13);

dst_vertices_[0] = cv::Point2f(17, 21);

dst_vertices_[1] = cv::Point2f(matSrc.cols - 23, 19);

dst_vertices_[2] = cv::Point2f(matSrc.cols / 2 + 5, matSrc.rows / 3 + 7);

dst_vertices_[3] = cv::Point2f(55, matSrc.rows / 5 + 33);

// Get the Perspective Transform

cv::Mat warpMatrix_ = cv::getPerspectiveTransform(src_vertices_, dst_vertices_);

// Set the dst image the same type and size as src

cv::Mat warp_dst_ = cv::Mat::zeros(matSrc.rows, matSrc.cols, matSrc.type());

cv::Mat mat_;

matSrc.copyTo(mat_);

// Apply the perspective transform just found to the src image

cv::warpPerspective(mat_, warp_dst_, warpMatrix_, warp_dst_.size(), interpolation);

assert(warp_dst.cols == warp_dst_.cols && warp_dst.rows == warp_dst_.rows);

assert(warp_dst.step == warp_dst_.step);

for (int y = 0; y < warp_dst.rows; y++) {

const fbc::uchar* p = warp_dst.ptr(y);

const uchar* p_ = warp_dst_.ptr(y);

for (int x = 0; x < warp_dst.step; x++) {

assert(p[x] == p_[x]);

}

}

}

return 0;

}

int test_warpPerspective_float()

{

cv::Mat matSrc = cv::imread("E:/GitCode/OpenCV_Test/test_images/lena.png", 1);

if (!matSrc.data) {

std::cout << "read image fail" << std::endl;

return -1;

}

cv::cvtColor(matSrc, matSrc, CV_BGR2GRAY);

matSrc.convertTo(matSrc, CV_32FC1);

for (int interpolation = 0; interpolation < 5; interpolation++) {

fbc::Point2f src_vertices[4], dst_vertices[4];

src_vertices[0] = fbc::Point2f(0, 0);

src_vertices[1] = fbc::Point2f(matSrc.cols - 5, 0);

src_vertices[2] = fbc::Point2f(matSrc.cols - 10, matSrc.rows - 1);

src_vertices[3] = fbc::Point2f(8, matSrc.rows - 13);

dst_vertices[0] = fbc::Point2f(17, 21);

dst_vertices[1] = fbc::Point2f(matSrc.cols - 23, 19);

dst_vertices[2] = fbc::Point2f(matSrc.cols / 2 + 5, matSrc.rows / 3 + 7);

dst_vertices[3] = fbc::Point2f(55, matSrc.rows / 5 + 33);

fbc::Mat_<double, 1> warpMatrix(3, 3);

fbc::getPerspectiveTransform(src_vertices, dst_vertices, warpMatrix);

fbc::Mat_<float, 1> mat(matSrc.rows, matSrc.cols, matSrc.data);

fbc::Mat_<float, 1> warp_dst;

warp_dst.zeros(mat.rows, mat.cols);

fbc::warpPerspective(mat, warp_dst, warpMatrix, interpolation);

cv::Point2f src_vertices_[4], dst_vertices_[4];

src_vertices_[0] = cv::Point2f(0, 0);

src_vertices_[1] = cv::Point2f(matSrc.cols - 5, 0);

src_vertices_[2] = cv::Point2f(matSrc.cols - 10, matSrc.rows - 1);

src_vertices_[3] = cv::Point2f(8, matSrc.rows - 13);

dst_vertices_[0] = cv::Point2f(17, 21);

dst_vertices_[1] = cv::Point2f(matSrc.cols - 23, 19);

dst_vertices_[2] = cv::Point2f(matSrc.cols / 2 + 5, matSrc.rows / 3 + 7);

dst_vertices_[3] = cv::Point2f(55, matSrc.rows / 5 + 33);

// Get the Perspective Transform

cv::Mat warpMatrix_ = cv::getPerspectiveTransform(src_vertices_, dst_vertices_);

// Set the dst image the same type and size as src

cv::Mat warp_dst_ = cv::Mat::zeros(matSrc.rows, matSrc.cols, matSrc.type());

cv::Mat mat_;

matSrc.copyTo(mat_);

// Apply the perspective transform just found to the src image

cv::warpPerspective(mat_, warp_dst_, warpMatrix_, warp_dst_.size(), interpolation);

assert(warp_dst.cols == warp_dst_.cols && warp_dst.rows == warp_dst_.rows);

assert(warp_dst.step == warp_dst_.step);

for (int y = 0; y < warp_dst.rows; y++) {

const fbc::uchar* p = warp_dst.ptr(y);

const uchar* p_ = warp_dst_.ptr(y);

for (int x = 0; x < warp_dst.step; x++) {

assert(p[x] == p_[x]);

}

}

}

return 0;

}

GitHub: https://github.com/fengbingchun/OpenCV_Test
