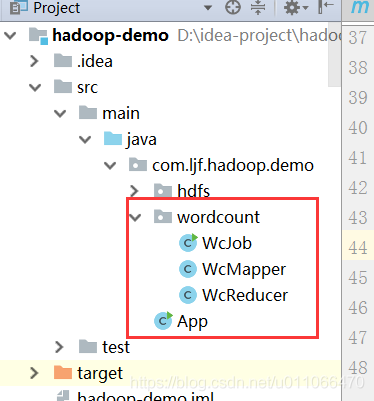

Project structure:

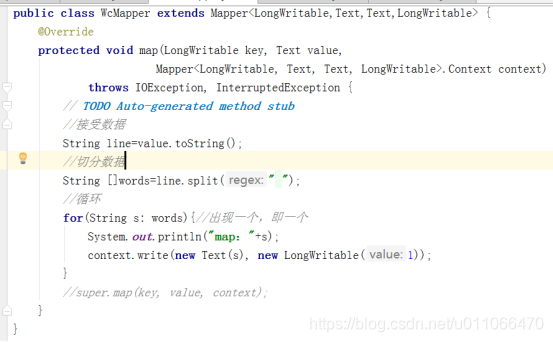

1. Mapper code: `WcMapper`

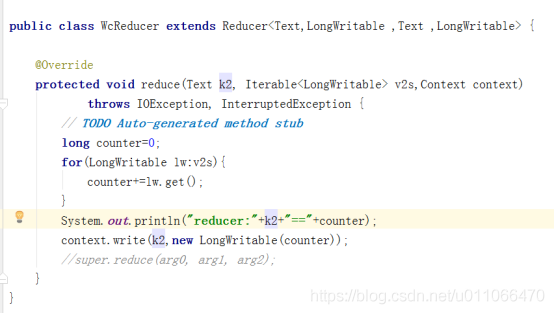
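The mapper source above is only a screenshot. A typical WordCount mapper extends `Mapper<LongWritable, Text, Text, IntWritable>` and, for each input line, calls `context.write(word, one)` once per word. The following plain-Java sketch mimics that logic without the Hadoop API so it can be run locally. The class name `WcMapperSketch` and the whitespace tokenization are assumptions, not the author's code.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Plain-Java sketch of the WordCount map step (hypothetical, no Hadoop dependency).
// Hadoop calls map() once per input line; this method returns the (word, 1)
// pairs that a real mapper would pass to context.write(...).
class WcMapperSketch {
    // Assumption: words are separated by whitespace.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String token : line.trim().split("\\s+")) {
            if (!token.isEmpty()) { // a blank line splits into [""], so skip it
                out.add(new SimpleEntry<>(token, 1));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(map("hello world hello")); // [hello=1, world=1, hello=1]
    }
}
```

Note that the mapper does no counting itself: repeated words on the same line produce repeated `(word, 1)` pairs, and summing is left to the reducer.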

2. Reducer code: `WcReducer`

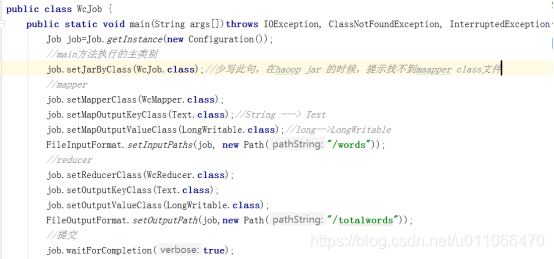
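Again the reducer is only shown as a screenshot. In a typical WordCount, the reducer receives one word together with all of its `1` values and writes the word with their sum. A minimal plain-Java sketch of that step, assuming the usual summing logic (`WcReducerSketch` is a hypothetical name):

```java
import java.util.Arrays;

// Plain-Java sketch of the WordCount reduce step (hypothetical, no Hadoop dependency).
// Hadoop calls reduce() once per distinct key with all values for that key.
class WcReducerSketch {
    static int reduce(String word, Iterable<Integer> counts) {
        int sum = 0;
        for (int c : counts) {
            sum += c; // add up the 1s emitted by the mappers
        }
        return sum; // a real reducer would call context.write(word, new IntWritable(sum))
    }

    public static void main(String[] args) {
        System.out.println("hello\t" + reduce("hello", Arrays.asList(1, 1)));
    }
}
```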

3. Job driver (configures and submits the job):

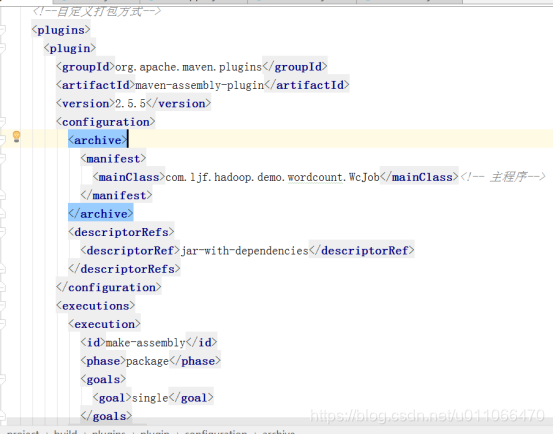
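Once the driver submits the job, the cluster maps every line, shuffles and sorts the `(word, 1)` pairs by key, and reduces each group. The single-process simulation below sketches that data flow; it does not use the Hadoop API. `WcJobSimulation` and its methods are hypothetical names, and whitespace tokenization is an assumption. The `partition` method uses the same formula as Hadoop's default `HashPartitioner`, but on `String.hashCode()` rather than `Text.hashCode()`, so real partition numbers will differ.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical single-process simulation of the WordCount job: map -> shuffle/sort -> reduce.
class WcJobSimulation {
    // HashPartitioner formula: picks the reducer for a key.
    // With one reduce task (as in this job) every key goes to partition 0.
    static int partition(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    static Map<String, Integer> run(List<String> lines) {
        // Map + shuffle/sort: collect the 1s per word; TreeMap keeps keys sorted,
        // which matches Hadoop's byte-wise Text ordering for ASCII words.
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (String word : line.trim().split("\\s+")) {
                if (word.isEmpty()) {
                    continue; // blank line
                }
                grouped.computeIfAbsent(word, k -> new ArrayList<>()).add(1);
            }
        }
        // Reduce: one call per distinct word, summing its values.
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            int sum = 0;
            for (int c : e.getValue()) {
                sum += c;
            }
            result.put(e.getKey(), sum);
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Integer> counts = run(Arrays.asList("hello world", "hello hadoop"));
        // "word<TAB>count", the layout of the default TextOutputFormat.
        counts.forEach((w, c) -> System.out.println(w + "\t" + c));
    }
}
```

Running `main` prints `hadoop 1`, `hello 2` and `world 1` (tab-separated) on separate lines, in sorted key order, because a reducer always receives its keys sorted.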

4. Configure the packaging in the pom file:

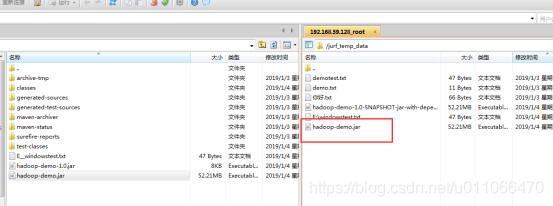

5. A jar is generated in the project; rename it to `hadoop-demo.jar`.

6. Upload the jar to the Linux machine:

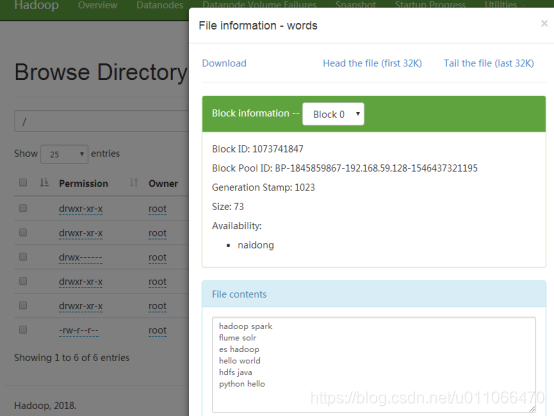

7. Upload the input file words.txt to HDFS:

`hadoop fs -put /jurf_temp_data/words.txt /words`

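8. Run the jar. No main class is given on the command line, so `hadoop jar` uses the `Main-Class` entry from the jar's manifest: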

[root@naidong jurf_temp_data]# hadoop jar hadoop-demo.jar

2019-01-04 11:59:50,916 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

2019-01-04 11:59:57,490 WARN mapreduce.JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

2019-01-04 11:59:57,578 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1546572216242_0003

2019-01-04 12:00:00,103 INFO input.FileInputFormat: Total input files to process : 1

2019-01-04 12:00:01,179 INFO mapreduce.JobSubmitter: number of splits:1

2019-01-04 12:00:01,692 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

2019-01-04 12:00:03,131 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1546572216242_0003

2019-01-04 12:00:03,135 INFO mapreduce.JobSubmitter: Executing with tokens: []

2019-01-04 12:00:04,502 INFO conf.Configuration: resource-types.xml not found

2019-01-04 12:00:04,503 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2019-01-04 12:00:05,256 INFO impl.YarnClientImpl: Submitted application application_1546572216242_0003

2019-01-04 12:00:05,504 INFO mapreduce.Job: The url to track the job: http://naidong:8088/proxy/application_1546572216242_0003/

2019-01-04 12:00:05,544 INFO mapreduce.Job: Running job: job_1546572216242_0003

2019-01-04 12:00:33,928 INFO mapreduce.Job: Job job_1546572216242_0003 running in uber mode : false

2019-01-04 12:00:33,952 INFO mapreduce.Job: map 0% reduce 0%

2019-01-04 12:00:58,116 INFO mapreduce.Job: map 100% reduce 0%

2019-01-04 12:01:30,074 INFO mapreduce.Job: map 100% reduce 100%

2019-01-04 12:01:32,169 INFO mapreduce.Job: Job job_1546572216242_0003 completed successfully

2019-01-04 12:01:32,782 INFO mapreduce.Job: Counters: 53

File System Counters

FILE: Number of bytes read=195

FILE: Number of bytes written=426255

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=170

HDFS: Number of bytes written=76

HDFS: Number of read operations=8

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=41852

Total time spent by all reduces in occupied slots (ms)=87912

Total time spent by all map tasks (ms)=20926

Total time spent by all reduce tasks (ms)=29304

Total vcore-milliseconds taken by all map tasks=20926

Total vcore-milliseconds taken by all reduce tasks=29304

Total megabyte-milliseconds taken by all map tasks=42856448

Total megabyte-milliseconds taken by all reduce tasks=90021888

Map-Reduce Framework

Map input records=6

Map output records=12

Map output bytes=165

Map output materialized bytes=195

Input split bytes=97

Combine input records=0

Combine output records=0

Reduce input groups=10

Reduce shuffle bytes=195

Reduce input records=12

Reduce output records=10

Spilled Records=24

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=461

CPU time spent (ms)=5640

Physical memory (bytes) snapshot=371195904

Virtual memory (bytes) snapshot=7103193088

Total committed heap usage (bytes)=165810176

Peak Map Physical memory (bytes)=229015552

Peak Map Virtual memory (bytes)=2739154944

Peak Reduce Physical memory (bytes)=142180352

Peak Reduce Virtual memory (bytes)=4364038144

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=73

File Output Format Counters

Bytes Written=76

2019-01-04 12:01:42,813 WARN util.ShutdownHookManager: ShutdownHook '' timeout, java.util.concurrent.TimeoutException

java.util.concurrent.TimeoutException

at java.util.concurrent.FutureTask.get(FutureTask.java:205)

at org.apache.hadoop.util.ShutdownHookManager$1.run(ShutdownHookManager.java:68)

[root@naidong jurf_temp_data]#
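Assuming the default `TextInputFormat` (one `map()` call per line), the record counters above can be read as follows: `Map input records=6` is the number of lines in words.txt, `Map output records=12` is the number of words emitted, `Reduce input groups=10` is the number of distinct words, and `Reduce output records=10` is the number of lines in the result file. `Combine input records=0` shows that no combiner ran. The sketch below recomputes these four counters for any list of lines; `WcCounterSketch` is a hypothetical name, and the toy input in `main` is made up, not the contents of words.txt.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch: recompute WordCount record counters for a given input,
// assuming one (word, 1) pair per whitespace-separated token and no combiner.
class WcCounterSketch {
    // Returns {mapInputRecords, mapOutputRecords, reduceInputGroups, reduceOutputRecords}.
    static long[] counters(List<String> lines) {
        long mapInputRecords = lines.size(); // one record per line, blank lines included
        long mapOutputRecords = 0;           // one (word, 1) pair per token
        Set<String> distinct = new HashSet<>();
        for (String line : lines) {
            for (String w : line.trim().split("\\s+")) {
                if (w.isEmpty()) {
                    continue;
                }
                mapOutputRecords++;
                distinct.add(w);
            }
        }
        long reduceInputGroups = distinct.size();     // one reduce() call per distinct word
        long reduceOutputRecords = reduceInputGroups; // WordCount writes one line per group
        return new long[] {mapInputRecords, mapOutputRecords, reduceInputGroups, reduceOutputRecords};
    }

    public static void main(String[] args) {
        long[] c = counters(Arrays.asList("a b", "b c", "c a")); // toy input
        System.out.println(Arrays.toString(c)); // [3, 6, 3, 3]
    }
}
```

Without a combiner, `Reduce input records` equals `Map output records` (12 in both rows of the report above).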

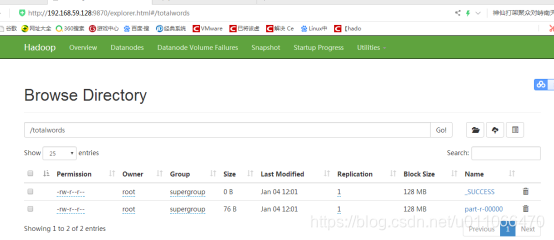

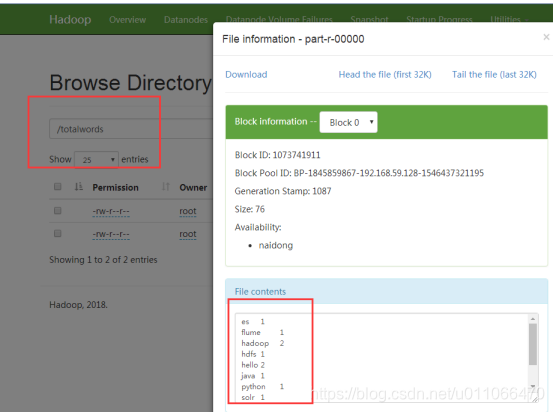

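9. View the results:

The output directory generally consists of three parts:

1) `_SUCCESS` file: indicates that the MapReduce job completed successfully.

2) `part-r-00000` file: holds the results; this is the default name of the output file.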