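I. Clusters

A cluster is a group of independent computers connected by a high-speed network to form a larger computing service system. Each cluster node is an independent server running its own services; the servers communicate with each other, cooperate to provide applications, system resources, and data to users, and are managed as a single system.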

II. Advantages of Clusters

- High performance

- Cost-effectiveness

- Scalability

- High availability

- Transparency

- Manageability

- Programmability

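III. RHCS Cluster Components

1. Cluster infrastructure manager

This is the base suite of an RHCS cluster. It provides the basic cluster functions that let the nodes work together as one cluster, and includes the distributed cluster manager (CMAN), membership management, the distributed lock manager (DLM), configuration file management (CCS), and fence devices (FENCE).

2. High-availability service manager

Monitors node services and provides service failover: when a service on one node fails, it is moved to another healthy node.

3. Cluster configuration and management tools

RHCS clusters are managed and configured through luci, a web-based cluster configuration tool that makes it easy to build a full-featured cluster. Node hosts use ricci to communicate with the luci management side.

4. Linux Virtual Server (LVS)

LVS is open-source load-balancing software. It distributes client requests across the nodes according to the configured load policy and scheduling algorithm, providing dynamic, intelligent load sharing.

5. Red Hat GFS (Global File System)

GFS is a cluster file system developed by Red Hat; the latest version is GFS2. GFS lets multiple services read and write a single disk partition at the same time, so data can be managed centrally without synchronization or copying. GFS cannot run on its own, however; it needs the underlying RHCS components.

6. Cluster Logical Volume Manager (CLVM)

CLVM, cluster logical volume management, is an extension of LVM that lets the machines in a cluster use LVM to manage shared storage.

7. iSCSI

iSCSI is a standard for transferring data over Internet protocols, Ethernet in particular. It is a storage technology based on IP storage, and RHCS can use iSCSI to export and allocate shared storage.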

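RHCS (Red Hat Cluster Suite)

- kvm: low-level virtualization

- qemu: I/O device virtualization

- libvirtd: virtualization interface

- HighAvailability: high availability

- LoadBalancer: load balancing

- ResilientStorage: storage

- ScalableFileSystem: file system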

IV. Set Up the Environment and Configure Services

First, configure the extended yum repositories on server1 and server2:

[rhel-source]

name=Red Hat Enterprise Linux $releasever - $basearch - Source

baseurl=http://172.25.254.70/rhel6.5

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[HighAvailability]

name=HighAvailability

baseurl=http://172.25.254.70/rhel6.5/HighAvailability

enabled=1

gpgcheck=0

[LoadBalancer]

name=LoadBalancer

baseurl=http://172.25.254.70/rhel6.5/LoadBalancer

enabled=1

gpgcheck=0

[ResilientStorage]

name=ResilientStorage

baseurl=http://172.25.254.70/rhel6.5/ResilientStorage

enabled=1

gpgcheck=0

[ScalableFileSystem]

name=ScalableFileSystem

baseurl=http://172.25.254.70/rhel6.5/ScalableFileSystem

enabled=1

gpgcheck=0
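The four add-on sections differ only in the channel name, so the file can also be generated with a loop. The following is a minimal sketch, not the author's method: it reuses the base URL from the listing above, names each section after its channel, and writes to a scratch file by default. The `REPO_FILE` variable is a hypothetical override; on a real node you would point it at a file under /etc/yum.repos.d/.

```shell
#!/bin/sh
# Sketch: build the yum repo file from a list of add-on channels.
base_url="http://172.25.254.70/rhel6.5"
# Hypothetical output path: a scratch file by default, so nothing under /etc is touched.
repo_file="${REPO_FILE:-$(mktemp)}"

{
  # Base repository (single-quoted so $releasever and $basearch stay literal for yum).
  printf '%s\n' '[rhel-source]' \
    'name=Red Hat Enterprise Linux $releasever - $basearch - Source' \
    "baseurl=$base_url" 'enabled=1' 'gpgcheck=0'
  # One section per add-on channel, named after the channel itself.
  for channel in HighAvailability LoadBalancer ResilientStorage ScalableFileSystem; do
    printf '\n[%s]\nname=%s\nbaseurl=%s/%s\nenabled=1\ngpgcheck=0\n' \
      "$channel" "$channel" "$base_url" "$channel"
  done
} > "$repo_file"

echo "wrote $repo_file"
```

After copying the result into place, `yum repolist` should list all five repositories.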

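server1: management node and high-availability node (configuration)

ricci: agent that runs on each high-availability node

luci: the RHCS web-based cluster management tool (graphical interface)

[root@server1 yum.repos.d]# yum install -y ricci luci

[root@server1 yum.repos.d]# passwd ricci    # set the ricci user's password

Password: redhat (enter it twice)

[root@server1 yum.repos.d]# /etc/init.d/ricci start

[root@server1 yum.repos.d]# /etc/init.d/luci start

[root@server1 yum.repos.d]# chkconfig ricci on    # start at boot

[root@server1 yum.repos.d]# chkconfig luci on

[root@server1 yum.repos.d]# netstat -tnlp    # check the listening ports

Next, open https://172.25.254.1:8084 in a browser. The high-availability cluster management interface appears; use it to create the cluster and add the primary and backup servers to it.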

Configure server2

[root@server2 ~]# yum install -y ricci

[root@server2 ~]# passwd ricci

Password: redhat (enter it twice)

[root@server2 ~]# /etc/init.d/ricci start

[root@server2 ~]# chkconfig ricci on

Open https://172.25.254.1:8084 and log in as root with the password redhat.

Reboot server1 and server2, then check the cluster state.

server1

[root@server1 ~]# cat /etc/cluster/cluster.conf

<?xml version="1.0"?>

<cluster config_version="1" name="westos_ls">

<clusternodes>

<clusternode name="server1" nodeid="1"/>

<clusternode name="server2" nodeid="2"/>

</clusternodes>

<cman expected_votes="1" two_node="1"/>

<fencedevices/>

<rm/>

</cluster>

[root@server1 ~]# clustat

Cluster Status for westos_ls @ Sat Feb 16 21:09:40 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, Local

server2 2 Online

server2

[root@server2 ~]# cat /etc/cluster/cluster.conf

<?xml version="1.0"?>

<cluster config_version="1" name="westos_ls">

<clusternodes>

<clusternode name="server1" nodeid="1"/>

<clusternode name="server2" nodeid="2"/>

</clusternodes>

<cman expected_votes="1" two_node="1"/>

<fencedevices/>

<rm/>

</cluster>

[root@server2 ~]# clustat

Cluster Status for westos_ls @ Sat Feb 16 21:10:11 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online

server2 2 Online, Local
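The membership check above can be scripted. The following is a small sketch under the assumption that member rows in `clustat` output have a numeric ID in the second column, as in the listings above; `count_not_online` is a hypothetical helper name, and the sample text stands in for real `clustat` output.

```shell
#!/bin/sh
# count_not_online: read `clustat` output on stdin and print how many
# member rows (name, numeric ID, status) do not report "Online".
count_not_online() {
  awk '$2 ~ /^[0-9]+$/ && $0 !~ /Online/ { n++ } END { print n + 0 }'
}

# Sample input copied from the clustat output above.
# On a cluster node you would run: clustat | count_not_online
sample='Cluster Status for westos_ls @ Sat Feb 16 21:09:40 2019
Member Status: Quorate
 Member Name   ID   Status
 ------ ----   ---- ------
 server1       1    Online, Local
 server2       2    Online'

printf '%s\n' "$sample" | count_not_online
```

A result of 0 means every listed member is online; any other number is the count of members to investigate.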

Physical host configuration:

[root@foundation150 ~]# yum search fence    # look up the available packages

[root@foundation150 ~]# yum install -y fence-virtd.x86_64 fence-virtd-libvirt.x86_64 fence-virtd-multicast.x86_64    # install the required packages

[root@foundation150 ~]# fence_virtd -c    # interactive initial configuration

Module search path [/usr/lib64/fence-virt]:

Available backends:

libvirt 0.1

Available listeners:

multicast 1.2

Listener modules are responsible for accepting requests

from fencing clients.

Listener module [multicast]:

The multicast listener module is designed for use environments

where the guests and hosts may communicate over a network using

multicast.

The multicast address is the address that a client will use to

send fencing requests to fence_virtd.

Multicast IP Address [225.0.0.12]:

Using ipv4 as family.

Multicast IP Port [1229]:

Setting a preferred interface causes fence_virtd to listen only

on that interface. Normally, it listens on all interfaces.

In environments where the virtual machines are using the host

machine as a gateway, this *must* be set (typically to virbr0).

Set to 'none' for no interface.

Interface [virbr0]: br0    # Note: enter br0 here rather than accepting the default

The key file is the shared key information which is used to

authenticate fencing requests. The contents of this file must

be distributed to each physical host and virtual machine within

a cluster.

Key File [/etc/cluster/fence_xvm.key]:

Backend modules are responsible for routing requests to

the appropriate hypervisor or management layer.

Backend module [libvirt]:

Configuration complete.

=== Begin Configuration ===

backends {

libvirt {

uri = "qemu:///system";

}

}

listeners {

multicast {

port = "1229";

family = "ipv4";

interface = "br0";

address = "225.0.0.12";

key_file = "/etc/cluster/fence_xvm.key";

}

}

fence_virtd {

module_path = "/usr/lib64/fence-virt";

backend = "libvirt";

listener = "multicast";

}

=== End Configuration ===

Replace /etc/fence_virt.conf with the above [y/N]? y

[root@foundation150 ~]# mkdir /etc/cluster    # create the directory that holds the key

[root@foundation150 ~]# cd /etc/cluster/

[root@foundation150 cluster]# dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=128 count=1    # read random bytes to generate the key

1+0 records in

1+0 records out

128 bytes (128 B) copied, 0.000152427 s, 840 kB/s

[root@foundation150 cluster]# hexdump -C fence_xvm.key

00000000 47 25 d8 eb 0b cb 1f 32 87 3e 40 6a bb 8f 1f 9d |G%.....2.>@j....|

00000010 ce b2 e2 35 5c 48 60 2a 8c 49 20 93 c4 1b e8 e9 |...5\H`*.I .....|

00000020 ad 1f f6 e4 6e 7b 56 45 f7 16 30 ea 2a c6 15 33 |....n{VE..0.*..3|

00000030 af 8c 19 11 51 35 6c ff 2f 76 2b a7 8a 4b 85 07 |....Q5l./v+..K..|

00000040 d7 e5 06 87 91 a2 eb 71 b0 23 80 be ea eb ae 90 |.......q.#......|

00000050 e0 76 e8 93 11 6d a7 4c f2 61 c3 a5 19 53 69 11 |.v...m.L.a...Si.|

00000060 a1 97 7f d1 6c e4 3a 67 47 a8 e5 51 12 3d ad 67 |....l.:gG..Q.=.g|

00000070 24 b7 ef 75 2e 7c 70 82 9a 11 52 6a ea ab 02 a1 |$..u.|p...Rj....|

00000080

[root@foundation150 cluster]# scp fence_xvm.key [email protected]:/etc/cluster    # send the key to the node

[root@foundation150 cluster]# scp fence_xvm.key [email protected]:/etc/cluster
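Because the host and every node must hold the identical key, it is worth checking the key size and comparing a checksum after copying. The following is a minimal sketch of that idea: `KEY_DIR` is a hypothetical override that defaults to a scratch directory (the walkthrough uses /etc/cluster), and the `chmod 600` step is an added precaution that does not appear in the original steps.

```shell
#!/bin/sh
# Sketch: create a 128-byte fence_xvm key and print its checksum for comparison.
# key_dir is a hypothetical scratch directory; the walkthrough uses /etc/cluster.
key_dir="${KEY_DIR:-$(mktemp -d)}"
key_file="$key_dir/fence_xvm.key"

# Same dd invocation as above, with the transfer statistics silenced.
dd if=/dev/urandom of="$key_file" bs=128 count=1 2>/dev/null
chmod 600 "$key_file"      # assumption: keep the shared key readable by root only

key_size=$(wc -c < "$key_file" | tr -d ' ')
echo "key size: $key_size bytes"
sha256sum "$key_file"      # run the same command on each node; the hashes should match
```

If the hash printed on a node differs from the one on the host, fencing requests from that node will fail authentication, so copy the key again.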

[root@foundation150 cluster]# systemctl status fence_virtd.service

[root@foundation150 cluster]# systemctl start fence_virtd.service

[root@foundation150 cluster]# systemctl status fence_virtd.service

Check on server1

[root@server1 ~]# cd /etc/cluster/

[root@server1 cluster]# ll

total 12

-rw-r----- 1 root root 263 Feb 16 21:07 cluster.conf

drwxr-xr-x 2 root root 4096 Sep 16 2013 cman-notify.d

-rw-r--r-- 1 root root 128 Feb 16 21:25 fence_xvm.key

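Next, associate the nodes with the fence device in the browser.

Fence Devices

Add

Fence virt (Multicast Mode)

Name: vmfence

Perform the following steps for both server1 and server2:

Nodes

Add Fence Method

Name: fence-1

Add Fence Instance

Domain: the virtual machine's UUID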

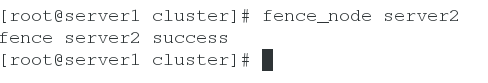
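For orientation, the luci steps above should leave a fencing section in /etc/cluster/cluster.conf along the following lines. This is a sketch, not a dump from the author's cluster: the fragment is written to a scratch file, `SERVER1-VM-UUID` is a placeholder for the real UUID, and only the names `vmfence` and `fence-1` come from the walkthrough. The check at the end confirms that every device referenced by a node is declared under `<fencedevices>`.

```shell
#!/bin/sh
# Sketch: the fencing part of cluster.conf that the steps above are expected to produce.
# SERVER1-VM-UUID is a placeholder, not a real value.
fragment="$(mktemp)"
cat > "$fragment" <<'EOF'
<clusternode name="server1" nodeid="1">
  <fence>
    <method name="fence-1">
      <device domain="SERVER1-VM-UUID" name="vmfence"/>
    </method>
  </fence>
</clusternode>
<fencedevices>
  <fencedevice agent="fence_xvm" name="vmfence"/>
</fencedevices>
EOF

# Names declared under <fencedevices> and names referenced by <device> entries.
declared=$(sed -n 's/.*<fencedevice [^>]*name="\([^"]*\)".*/\1/p' "$fragment")
referenced=$(sed -n 's/.*<device [^>]*name="\([^"]*\)".*/\1/p' "$fragment" | sort -u)

missing=0
for name in $referenced; do
  printf '%s\n' "$declared" | grep -qx "$name" || { echo "undeclared fence device: $name"; missing=1; }
done
[ "$missing" -eq 0 ] && echo "all fence device references resolve"
```

To run the same check on a node, point `fragment` at /etc/cluster/cluster.conf instead of the scratch file.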

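Test from server1 and check whether server2 reboots.

[root@server1 cluster]# fence_node server2

fence server2 success

After the command completes, server2 reboots.

IP failover after a server goes down

Open https://172.25.254.1:8084

Log in as root with the password redhat.

Failover Domains: failover domains (failover membership and priority)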

Add

Name:webfile

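Check the first and second checkboxes

No Failback: if this is left unchecked, the IP moves back to the higher-priority node once that node recovers

Priority: the lower the number, the higher the priority

Check Member for both server1 and server2, meaning both servers can run services in this failover domain

Resources (the conditions a service needs in order to run and work properly; each such condition is a resource)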

Add IP Address

IP Address 172.25.254.100

Netmask 24

Wait time 5 s

Add Script

Name httpd

Full Path... /etc/init.d/httpd

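Service Groups: combine the failover domain and resources created above so the service can run

Name: apache (any name works)

Check both boxes:

Automatically Start This Service: start the service automatically

Run Exclusive: run exclusively

Failover Domain: webfile

Add Resource

Add the two resources created above.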
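The failover domain, resources, and service group end up in the `<rm>` section of cluster.conf. The fragment below is a sketch of what that section may look like, written to a scratch file: the node priorities, `recovery="relocate"`, and the exact attribute set are assumptions, while the names, IP address, wait time, and script path come from the steps above. The check verifies that the failover domain the service refers to is actually declared.

```shell
#!/bin/sh
# Sketch: an <rm> section matching the luci steps above (attribute values partly assumed).
fragment="$(mktemp)"
cat > "$fragment" <<'EOF'
<rm>
  <failoverdomains>
    <failoverdomain name="webfile" nofailback="0" ordered="1" restricted="1">
      <failoverdomainnode name="server1" priority="2"/>
      <failoverdomainnode name="server2" priority="1"/>
    </failoverdomain>
  </failoverdomains>
  <resources>
    <ip address="172.25.254.100/24" sleeptime="5"/>
    <script file="/etc/init.d/httpd" name="httpd"/>
  </resources>
  <service domain="webfile" exclusive="1" name="apache" recovery="relocate">
    <ip ref="172.25.254.100/24"/>
    <script ref="httpd"/>
  </service>
</rm>
EOF

# Declared failover domain names, and the domain the service refers to.
domains=$(sed -n 's/.*<failoverdomain [^>]*name="\([^"]*\)".*/\1/p' "$fragment")
svc_domain=$(sed -n 's/.*<service [^>]*domain="\([^"]*\)".*/\1/p' "$fragment")

if printf '%s\n' "$domains" | grep -qx "$svc_domain"; then
  echo "service domain '$svc_domain' is declared"
else
  echo "service domain '$svc_domain' is NOT declared"
fi
```

The same two `sed` expressions can be run against /etc/cluster/cluster.conf on a node to compare the real file with this sketch.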

Install httpd on server1 and server2 and edit the default page.

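Test:

Because server2 has a higher priority than server1, curl requests to the virtual IP are answered by server2.

On server2, run echo c > /proc/sysrq-trigger to crash the server.

After 5 s the server reboots automatically. While it is down, requests are served by server1; once it recovers, they go back to server2.

When server2 is healthy

When server2 runs echo c > /proc/sysrq-trigger and crashes, the virtual IP moves to server1.

After server2 has rebooted, the virtual IP moves back to server2.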

High-availability MySQL

Shut down server3 and add an 8 GB disk.

Configure server3

[root@server3 ~]# yum install -y scsi-*

[root@server3 ~]# fdisk -l    # the new 8 GB disk shows up as /dev/vda

[root@server3 ~]# vim /etc/tgt/targets.conf

<target iqn.2019-02.com.example:server.target1>

backing-store /dev/vda

</target>

[root@server3 ~]# /etc/init.d/tgtd start

[root@server3 ~]# ps ax

Configure server1

[root@server1 ~]# yum install -y iscsi-*

[root@server1 ~]# iscsiadm -m discovery -t st -p 172.25.254.3

[root@server1 ~]# iscsiadm -m node -l

[root@server1 ~]# yum install -y mysql-server

[root@server1 ~]# fdisk -l    # the 8 GB iSCSI disk shows up as /dev/sdb

[root@server1 ~]# mkfs.ext4 /dev/sdb

[root@server1 ~]# chown mysql.mysql /var/lib/mysql/

Configure server2

[root@server2 ~]# yum install -y iscsi-*

[root@server2 ~]# iscsiadm -m discovery -t st -p 172.25.254.3

[root@server2 ~]# iscsiadm -m node -l

[root@server2 ~]# yum install -y mysql-server

[root@server2 ~]# chown mysql.mysql /var/lib/mysql/

Open https://172.25.254.1:8084

Log in as root with the password redhat.

Failover Domains

Add

Name:dbfile

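Check the first and second checkboxes

No Failback: if this is left unchecked, the IP moves back to the higher-priority node once that node recovers

Priority: the lower the number, the higher the priority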

Resources

Add IP Address

IP Address 172.25.254.200

Netmask 24

Wait time 10 s

Add Script

Name mysqld

Full Path... /etc/init.d/mysqld

Add Filesystem

Name:dbdata

Filesystem Type:ext4

Mount Point:/var/lib/mysql

Device,FS Label,or UUID:/dev/sdb

Check the first, fourth, and fifth checkboxes

Service Groups

Name: mysql

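Check both boxes

The second one is Run Exclusive: if checked, the service only runs on a node that has no other services running on it

Failover Domain: dbfile

Add Resource

Add the three resources created above.

Check:

RHCS storage

Configure server1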

[root@server1 mysql]# clustat

Cluster Status for westos_ls @ Sun Feb 17 13:38:17 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, Local, rgmanager

server2 2 Online, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server2 started

service:mysql server1 started

[root@server1 ~]# cd /var/lib/mysql/

[root@server1 mysql]# ll

total 20504

-rw-rw---- 1 mysql mysql 10485760 Feb 16 23:37 ibdata1

-rw-rw---- 1 mysql mysql 5242880 Feb 17 13:37 ib_logfile0

-rw-rw---- 1 mysql mysql 5242880 Feb 16 23:31 ib_logfile1

drwx------ 2 mysql mysql 16384 Feb 16 22:44 lost+found

drwx------ 2 mysql mysql 4096 Feb 16 23:31 mysql

srwxrwxrwx 1 mysql mysql 0 Feb 17 13:37 mysql.sock

drwx------ 2 mysql mysql 4096 Feb 16 23:31 test

[root@server1 mysql]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1153352 17009000 7% /

tmpfs 510200 25656 484544 6% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

/dev/sdb 8256952 170960 7666564 3% /var/lib/mysql

[root@server1 mysql]# clusvcadm -d apache

Local machine disabling service:apache...Success

[root@server1 ~]# /etc/init.d/mysqld stop

Stopping mysqld: [ OK ]

[root@server1 ~]# clusvcadm -d mysql

Local machine disabling service:mysql...Success

Check server1 and server2

server1

[root@server1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1153380 17008972 7% /

tmpfs 510200 25656 484544 6% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

[root@server1 ~]# clustat

Cluster Status for westos_ls @ Sun Feb 17 13:47:18 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, Local, rgmanager

server2 2 Online, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache (server2) disabled

service:mysql (server1) disabled

server2

[root@server2 mysql]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1098292 17064060 7% /

tmpfs 510200 25656 484544 6% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

[root@server1 ~]# /etc/init.d/clvmd status

clvmd (pid 1304) is running...

Clustered Volume Groups: (none)

Active clustered Logical Volumes: (none)

[root@server1 ~]# vim /etc/lvm/lvm.conf
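The edit made to lvm.conf is not shown. For clvmd, the setting that normally matters is `locking_type = 3` (clustered locking) in the `global` section; on RHEL 6 the `lvmconf --enable-cluster` command sets it. The following sketch assumes that this is the edit in question. `lvm_locking_type` is a hypothetical helper, demonstrated on a scratch file rather than the real /etc/lvm/lvm.conf.

```shell
#!/bin/sh
# lvm_locking_type: print the value of the first uncommented locking_type
# setting in the given lvm.conf-style file.
lvm_locking_type() {
  sed -n 's/^[[:space:]]*locking_type[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$1" | head -n 1
}

# Scratch file standing in for /etc/lvm/lvm.conf; the commented line is ignored.
sample_conf="$(mktemp)"
printf 'global {\n    # locking_type = 1\n    locking_type = 3\n}\n' > "$sample_conf"

if [ "$(lvm_locking_type "$sample_conf")" = "3" ]; then
  echo "clustered locking is enabled"
else
  echo "clustered locking is not enabled"
fi
```

On a node, pass /etc/lvm/lvm.conf to the helper on both server1 and server2 before creating the clustered volume group.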

[root@server1 ~]# fdisk -l

[root@server1 ~]# pvcreate /dev/sdb

[root@server1 ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda2 VolGroup lvm2 a-- 19.51g 0

/dev/sdb lvm2 a-- 8.00g 8.00g

[root@server1 ~]# vgcreate clustervg /dev/sdb

[root@server1 ~]# vgs

VG #PV #LV #SN Attr VSize VFree

VolGroup 1 2 0 wz--n- 19.51g 0

clustervg 1 0 0 wz--nc 8.00g 8.00g

[root@server1 ~]# lvcreate -L +4G -n demo clustervg

Logical volume "demo" created

[root@server1 ~]# lvs

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

lv_root VolGroup -wi-ao---- 18.54g

lv_swap VolGroup -wi-ao---- 992.00m

demo clustervg -wi-a----- 4.00g

[root@server1 ~]# mkfs.gfs2 -p lock_dlm -j 2 -t westos_ls:mygfs2 /dev/clustervg/demo

This will destroy any data on /dev/clustervg/demo.

It appears to contain: symbolic link to `../dm-2'

Are you sure you want to proceed? [y/n] y

Device: /dev/clustervg/demo

Blocksize: 4096

Device Size 4.00 GB (1048576 blocks)

Filesystem Size: 4.00 GB (1048575 blocks)

Journals: 2

Resource Groups: 16

Locking Protocol: "lock_dlm"

Lock Table: "westos_ls:mygfs2"

UUID: 2e9c0dc5-fe0b-8d87-c417-f9127cf29f4a
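In the mkfs.gfs2 command, `-t` takes a lock table of the form clustername:fsname, where the cluster name has to match the one in cluster.conf, and `-j` sets the number of journals, one for each node that mounts the file system. The following sketch derives both values from a sample cluster.conf. `cluster_name` is a hypothetical helper, and the command is only printed, never executed.

```shell
#!/bin/sh
# cluster_name: print the name attribute of the <cluster> element in a cluster.conf file.
cluster_name() {
  sed -n 's/.*<cluster [^>]*name="\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Sample file mirroring the cluster.conf shown earlier; on a node, use /etc/cluster/cluster.conf.
conf="$(mktemp)"
cat > "$conf" <<'EOF'
<?xml version="1.0"?>
<cluster config_version="1" name="westos_ls">
  <clusternodes>
    <clusternode name="server1" nodeid="1"/>
    <clusternode name="server2" nodeid="2"/>
  </clusternodes>
</cluster>
EOF

fs_name="mygfs2"                               # file system name used in the walkthrough
journals=$(grep -c '<clusternode ' "$conf")    # one journal per node that mounts the file system
lock_table="$(cluster_name "$conf"):$fs_name"

# Print the command instead of running it, since mkfs.gfs2 destroys existing data.
echo "mkfs.gfs2 -p lock_dlm -j $journals -t $lock_table /dev/clustervg/demo"
```

If the cluster name in the lock table does not match cluster.conf, the mount fails on the nodes, so it is safer to derive it than to type it.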

[root@server1 ~]# mount /dev/clustervg/demo /var/lib/mysql/

[root@server1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1153392 17008960 7% /

tmpfs 510200 31816 478384 7% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

/dev/mapper/clustervg-demo 4193856 264776 3929080 7% /var/lib/mysql

[root@server1 ~]# ll -d /var/lib/mysql/

drwxr-xr-x 2 root root 3864 Feb 17 13:57 /var/lib/mysql/

[root@server1 ~]# chown mysql.mysql /var/lib/mysql/

[root@server1 ~]# blkid

/dev/sda1: UUID="9d35754e-7f30-4131-a583-40ba3a356c7d" TYPE="ext4"

/dev/sda2: UUID="k809Gj-VsiO-wmFZ-tXDP-xNrb-NBNn-EV4ZNL" TYPE="LVM2_member"

/dev/mapper/VolGroup-lv_root: UUID="a0956d52-bed0-41df-b592-7033fe3bfcb5" TYPE="ext4"

/dev/mapper/VolGroup-lv_swap: UUID="bfd545d2-b6b1-4b06-af3a-f97d31623b47" TYPE="swap"

/dev/sdb: UUID="JVYGgZ-sq60-bO01-xrcM-SRDg-ATEI-695s9c" TYPE="LVM2_member"

/dev/mapper/clustervg-demo: LABEL="westos_ls:mygfs2" UUID="2e9c0dc5-fe0b-8d87-c417-f9127cf29f4a" TYPE="gfs2"

[root@server1 ~]# /etc/init.d/mysqld start

[root@server1 ~]# cd /var/lib/mysql/

[root@server1 mysql]# ll

total 20560

-rw-rw---- 1 mysql mysql 10485760 Feb 17 14:02 ibdata1

-rw-rw---- 1 mysql mysql 5242880 Feb 17 14:02 ib_logfile0

-rw-rw---- 1 mysql mysql 5242880 Feb 17 14:02 ib_logfile1

drwx------ 2 mysql mysql 2048 Feb 17 14:02 mysql

srwxrwxrwx 1 mysql mysql 0 Feb 17 14:02 mysql.sock

drwx------ 2 mysql mysql 3864 Feb 17 14:02 test

Configure server2

[root@server2 mysql]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1098320 17064032 7% /

tmpfs 510200 25656 484544 6% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

[root@server2 mysql]# cd

[root@server2 ~]# mount /dev/clustervg/demo /var/lib/mysql/

[root@server2 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1098320 17064032 7% /

tmpfs 510200 31816 478384 7% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

/dev/mapper/clustervg-demo 4193856 286516 3907340 7% /var/lib/mysql

[root@server2 ~]# cd /var/lib/mysql/

[root@server2 mysql]# ll

total 20560

-rw-rw---- 1 mysql mysql 10485760 Feb 17 14:02 ibdata1

-rw-rw---- 1 mysql mysql 5242880 Feb 17 14:02 ib_logfile0

-rw-rw---- 1 mysql mysql 5242880 Feb 17 14:02 ib_logfile1

drwx------ 2 mysql mysql 2048 Feb 17 14:02 mysql

srwxrwxrwx 1 mysql mysql 0 Feb 17 14:02 mysql.sock

drwx------ 2 mysql mysql 3864 Feb 17 14:02 test

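Add the storage manually (use clustat to check the status)

Log in to the web interface as root with the password redhat.

Service Groups

Remove the storage resource from the service and submit.

Go to Resources

Delete dbdata

Continue the configuration on server1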

[root@server1 mysql]# /etc/init.d/mysqld status

mysqld (pid 6547) is running...

[root@server1 mysql]# /etc/init.d/mysqld stop

Stopping mysqld: [ OK ]

[root@server1 mysql]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1153432 17008920 7% /

tmpfs 510200 31816 478384 7% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

/dev/mapper/clustervg-demo 4193856 286516 3907340 7% /var/lib/mysql

[root@server1 mysql]# cd

[root@server1 ~]# umount /var/lib/mysql/

[root@server1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1153432 17008920 7% /

tmpfs 510200 25656 484544 6% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

[root@server1 ~]# mount /dev/clustervg/demo /var/lib/mysql/

[root@server1 ~]# blkid

/dev/mapper/clustervg-demo: LABEL="westos_ls:mygfs2" UUID="2e9c0dc5-fe0b-8d87-c417-f9127cf29f4a" TYPE="gfs2"

[root@server1 ~]# vim /etc/fstab

UUID="2e9c0dc5-fe0b-8d87-c417-f9127cf29f4a" /var/lib/mysql gfs2 _netdev 0 0

[root@server1 ~]# umount /var/lib/mysql/

[root@server1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1153460 17008892 7% /

tmpfs 510200 25656 484544 6% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

[root@server1 ~]# mount -a

[root@server1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1153460 17008892 7% /

tmpfs 510200 31816 478384 7% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

/dev/mapper/clustervg-demo 4193856 286512 3907344 7% /var/lib/mysql

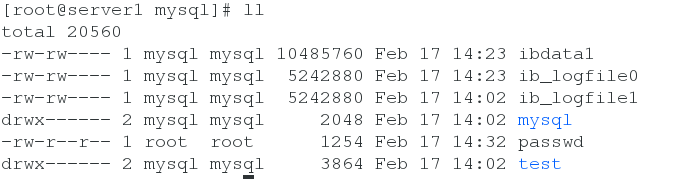

[root@server1 ~]# cd /var/lib/mysql/

[root@server1 mysql]# ll

total 20556

-rw-rw---- 1 mysql mysql 10485760 Feb 17 14:23 ibdata1

-rw-rw---- 1 mysql mysql 5242880 Feb 17 14:23 ib_logfile0

-rw-rw---- 1 mysql mysql 5242880 Feb 17 14:02 ib_logfile1

drwx------ 2 mysql mysql 2048 Feb 17 14:02 mysql

drwx------ 2 mysql mysql 3864 Feb 17 14:02 test

Continue the configuration on server2

[root@server2 mysql]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1098320 17064032 7% /

tmpfs 510200 31816 478384 7% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

/dev/mapper/clustervg-demo 4193856 286512 3907344 7% /var/lib/mysql

[root@server2 mysql]# cd

[root@server2 ~]# umount /var/lib/mysql/

[root@server2 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1098320 17064032 7% /

tmpfs 510200 25656 484544 6% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

[root@server2 ~]# vim /etc/fstab

UUID="2e9c0dc5-fe0b-8d87-c417-f9127cf29f4a" /var/lib/mysql gfs2 _netdev 0 0

[root@server2 ~]# mount -a

[root@server2 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1098336 17064016 7% /

tmpfs 510200 31816 478384 7% /dev/shm

/dev/sda1 495844 33454 436790 8% /boot

/dev/mapper/clustervg-demo 4193856 286512 3907344 7% /var/lib/mysql

[root@server2 ~]# cd /var/lib/mysql/

[root@server2 mysql]# ll

total 20556

-rw-rw---- 1 mysql mysql 10485760 Feb 17 14:23 ibdata1

-rw-rw---- 1 mysql mysql 5242880 Feb 17 14:23 ib_logfile0

-rw-rw---- 1 mysql mysql 5242880 Feb 17 14:02 ib_logfile1

drwx------ 2 mysql mysql 2048 Feb 17 14:02 mysql

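drwx------ 2 mysql mysql 3864 Feb 17 14:02 test

Test whether the storage is synchronized

On server2, create a file under /var/lib/mysql and check whether it appears in the same directory on server1.

On server1 the passwd file appears, so the storage is synchronized.

Check the logs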