Maven Configuration

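- Download Maven and extract it to /opt/module/ (the steps below assume the extracted directory is /opt/module/maven)

- Configure the environment variables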

$ sudo vim /etc/profile

export M2_HOME=/opt/module/maven

export PATH=$PATH:$M2_HOME/bin

$ source /etc/profile

$ mvn -v # verify the installation; if the command is not found, open a new shell or reboot

- Edit Maven's settings.xml and set the local repository location as follows:

<localRepository>/repository</localRepository>

- Switch to the Aliyun mirror (add this inside the <mirrors> element)

<mirror>
  <id>alimaven</id>
  <mirrorOf>central</mirrorOf>
  <name>aliyun maven</name>
  <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
</mirror>

- Configure JDK 8 (add this inside the <profiles> element)

<profile>
  <id>jdk-1.8</id>
  <activation>
    <activeByDefault>true</activeByDefault>
    <jdk>1.8</jdk>
  </activation>
  <properties>
    <maven.compiler.source>1.8</maven.compiler.source>
    <maven.compiler.target>1.8</maven.compiler.target>
    <maven.compiler.compilerVersion>1.8</maven.compiler.compilerVersion>
  </properties>
</profile>
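To double-check that the settings.xml fragments above ended up where Maven will read them, the file can be read back with a few lines of Java. This is only a minimal sketch using the JDK's XML parser: the class name SettingsCheck is hypothetical, and the default path /opt/module/maven/conf/settings.xml is an assumption based on the M2_HOME used above (pass another path, such as ~/.m2/settings.xml, as the first argument if that is the file you edited).

```java
import java.io.StringReader;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

/** Reads a few values back from a Maven settings.xml (illustrative helper, not part of Maven). */
class SettingsCheck {

    /** Parses the XML text and returns its root element. */
    static Element parse(String xml) {
        try {
            return DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)))
                    .getDocumentElement();
        } catch (Exception e) {
            throw new IllegalArgumentException("settings.xml is not well-formed", e);
        }
    }

    /** Returns the trimmed text of the first <tag> below scope, or null if there is none. */
    static String firstText(Element scope, String tag) {
        NodeList nodes = scope.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    public static void main(String[] args) throws Exception {
        // Assumed default location; override with the first command-line argument.
        String file = args.length > 0 ? args[0] : "/opt/module/maven/conf/settings.xml";
        String xml = new String(Files.readAllBytes(Paths.get(file)), StandardCharsets.UTF_8);
        Element root = parse(xml);

        System.out.println("localRepository = " + firstText(root, "localRepository"));

        // Look for <url> only inside the first <mirror>, since <url> also occurs elsewhere.
        NodeList mirrors = root.getElementsByTagName("mirror");
        if (mirrors.getLength() > 0) {
            System.out.println("mirror url      = " + firstText((Element) mirrors.item(0), "url"));
        }
        System.out.println("compiler source = " + firstText(root, "maven.compiler.source"));
    }
}
```

A value printed as null means the corresponding element is missing or still sits inside an XML comment.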

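Fixing the Repository Permissions

The Maven repository location configured above, /repository, is currently owned by root, so the logged-in hadoop user has no write permission to it.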

$ cd /

$ sudo chown -R hadoop:hadoop /repository

$ ll # check the new owner

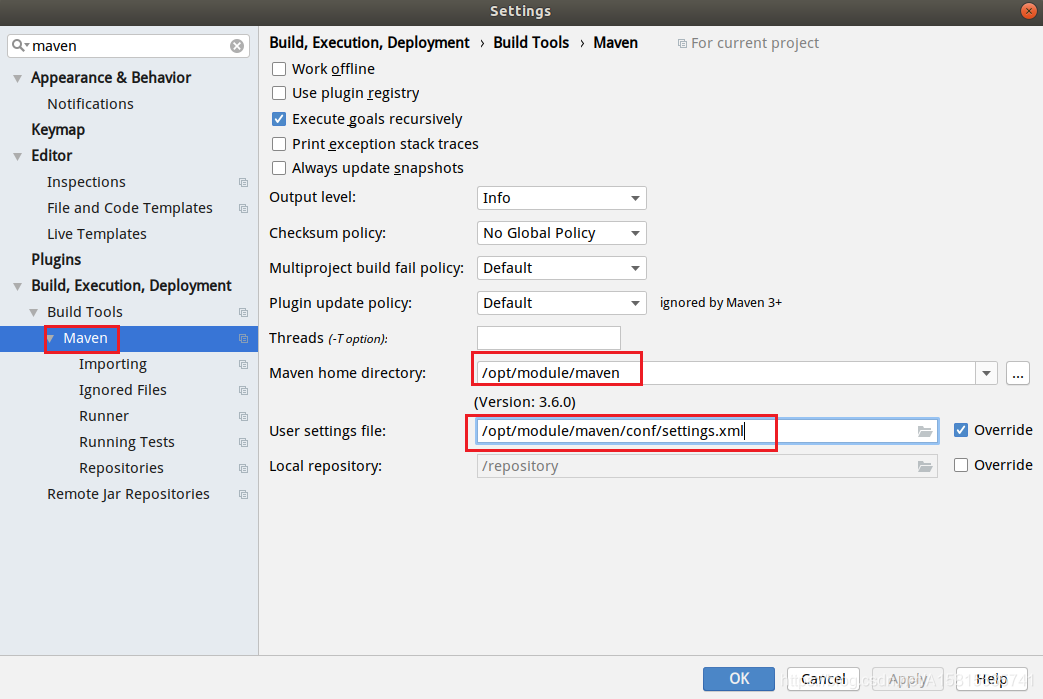
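Since Maven runs as a Java process, the result of the chown can also be confirmed from Java itself. The following is a small sketch, assuming the /repository path from above; the class name RepoPermissionCheck is hypothetical.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Checks whether the current user can write to the local Maven repository directory. */
class RepoPermissionCheck {

    /** True if the path is an existing directory that the current user may write to. */
    static boolean isWritableDir(Path dir) {
        return Files.isDirectory(dir) && Files.isWritable(dir);
    }

    public static void main(String[] args) throws Exception {
        // /repository is the location configured in settings.xml above.
        Path repo = Paths.get(args.length > 0 ? args[0] : "/repository");
        System.out.println("user     = " + System.getProperty("user.name"));
        System.out.println("exists   = " + Files.exists(repo));
        if (Files.exists(repo)) {
            System.out.println("owner    = " + Files.getOwner(repo).getName());
        }
        System.out.println("writable = " + isWritableDir(repo));
    }
}
```

Run it as the hadoop user; if writable is still false, the chown did not take effect for that account.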

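Creating a Maven Project in IDEA

- First, configure IDEA to use the custom Maven installation set up above rather than its bundled one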

- pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>com.hadoop</groupId>
  <artifactId>hdfs</artifactId>
  <version>1.0</version>

  <!-- Define the Hadoop version -->
  <properties>
    <hadoop-version>2.6.0-cdh5.15.1</hadoop-version>
  </properties>

  <!-- Add the CDH repository -->
  <repositories>
    <repository>
      <id>cloudera</id>
      <url>https://repository.cloudera.com/artifactory/cloudera-repos</url>
    </repository>
  </repositories>

  <dependencies>
    <!-- JUnit dependency -->
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>4.10</version>
      <scope>test</scope>
    </dependency>

    <!-- Hadoop dependency -->
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
      <version>${hadoop-version}</version>
    </dependency>
  </dependencies>
</project>

- log4j.properties (placed under src/main/resources so that it is on the classpath)

log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

log4j.appender.logfile=org.apache.log4j.FileAppender

log4j.appender.logfile.File=target/spring.log

log4j.appender.logfile.layout=org.apache.log4j.PatternLayout

log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n
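Note that the rootLogger line above lists only stdout. In log4j 1.x an appender takes effect only when some logger references it, so the logfile appender is defined but apparently unused, and nothing would be written to target/spring.log unless it is added (for example log4j.rootLogger=INFO, stdout, logfile). The sketch below shows the mismatch using only java.util.Properties; it does not call log4j, and the class and method names are hypothetical.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;
import java.util.Set;
import java.util.TreeSet;

/** Compares the appenders defined in a log4j 1.x properties file with those the root logger uses. */
class Log4jAppenderCheck {

    private static final String PREFIX = "log4j.appender.";

    /** Parses properties text such as the log4j.properties shown above. */
    static Properties load(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return p;
    }

    /** Names declared as log4j.appender.<name>=<class> (keys with no further dot). */
    static Set<String> defined(Properties p) {
        Set<String> names = new TreeSet<>();
        for (String key : p.stringPropertyNames()) {
            if (key.startsWith(PREFIX) && key.indexOf('.', PREFIX.length()) < 0) {
                names.add(key.substring(PREFIX.length()));
            }
        }
        return names;
    }

    /** Names listed after the level in log4j.rootLogger. */
    static Set<String> attachedToRoot(Properties p) {
        Set<String> names = new TreeSet<>();
        String[] parts = p.getProperty("log4j.rootLogger", "").split(",");
        for (int i = 1; i < parts.length; i++) { // parts[0] is the level, e.g. INFO
            String name = parts[i].trim();
            if (!name.isEmpty()) {
                names.add(name);
            }
        }
        return names;
    }

    public static void main(String[] args) {
        Properties p = load(String.join("\n",
                "log4j.rootLogger=INFO, stdout",
                "log4j.appender.stdout=org.apache.log4j.ConsoleAppender",
                "log4j.appender.stdout.layout=org.apache.log4j.PatternLayout",
                "log4j.appender.logfile=org.apache.log4j.FileAppender",
                "log4j.appender.logfile.File=target/spring.log",
                "log4j.appender.logfile.layout=org.apache.log4j.PatternLayout"));
        Set<String> unused = new TreeSet<>(defined(p));
        unused.removeAll(attachedToRoot(p));
        System.out.println("defined  = " + defined(p));        // [logfile, stdout]
        System.out.println("attached = " + attachedToRoot(p)); // [stdout]
        System.out.println("unused   = " + unused);            // [logfile]
    }
}
```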

Testing HDFS Access

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.net.URI;

/**
 * Operates on the HDFS file system through the Java API.
 */
public class HDFSApp {

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        // Connect to the NameNode as user "hadoop"; try-with-resources closes the FileSystem.
        try (FileSystem fs = FileSystem.get(new URI("hdfs://localhost:9000"), conf, "hadoop")) {
            // Create the directory /user/hadoop/hdfsapi/test on HDFS
            // (the relative path is resolved against the user's home directory).
            boolean result = fs.mkdirs(new Path("hdfsapi/test"));
            System.out.println(result);
        }
    }
}
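The relative path hdfsapi/test ends up under /user/hadoop because HDFS resolves relative paths against the connecting user's home directory, which by default is /user/<username>. The sketch below imitates that rule with the JDK's java.net.URI only. It illustrates the resolution and is not Hadoop's own implementation; the class name HdfsPathDemo is hypothetical.

```java
import java.net.URI;

/** Illustrates how a relative HDFS path is resolved against /user/<user>. */
class HdfsPathDemo {

    /** Resolves path against the assumed default home directory of the given user. */
    static URI resolve(String fsUri, String user, String path) {
        URI home = URI.create(fsUri + "/user/" + user + "/");
        return home.resolve(path);
    }

    public static void main(String[] args) {
        // Relative path: lands below the user's home directory.
        System.out.println(resolve("hdfs://localhost:9000", "hadoop", "hdfsapi/test"));
        // prints hdfs://localhost:9000/user/hadoop/hdfsapi/test

        // Absolute path: the home directory is ignored.
        System.out.println(resolve("hdfs://localhost:9000", "hadoop", "/hdfsapi/test"));
        // prints hdfs://localhost:9000/hdfsapi/test
    }
}
```

Passing an absolute path such as /hdfsapi/test to mkdirs would therefore create the directory at the root of HDFS instead.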

- Inspect HDFS from the command line

$ hadoop fs -ls -R / # -lsr is deprecated in favor of -ls -R

- Inspect HDFS through the web UI

http://localhost:50070
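The same NameNode HTTP port also serves the WebHDFS REST API when it is enabled (dfs.webhdfs.enabled), so the directory created by HDFSApp can be listed without a browser. This is a minimal sketch using only the JDK. The class name WebHdfsList is hypothetical; the host, port, user and path are taken from the examples above and are assumptions about your setup.

```java
import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

/** Lists an HDFS directory through the WebHDFS REST API (LISTSTATUS operation). */
class WebHdfsList {

    /** Builds the LISTSTATUS URL for an absolute HDFS path. */
    static String listStatusUrl(String host, int port, String path, String user) {
        return "http://" + host + ":" + port + "/webhdfs/v1" + path
                + "?op=LISTSTATUS&user.name=" + user;
    }

    public static void main(String[] args) throws Exception {
        URL url = new URL(listStatusUrl("localhost", 50070, "/user/hadoop/hdfsapi", "hadoop"));
        HttpURLConnection conn = (HttpURLConnection) url.openConnection();
        conn.setRequestMethod("GET");
        System.out.println("HTTP " + conn.getResponseCode());
        // On success the body is a JSON document describing the directory entries.
        try (BufferedReader in = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = in.readLine()) != null) {
                System.out.println(line);
            }
        }
    }
}
```

If the directory does not exist or WebHDFS is disabled, the request fails with an HTTP error and getInputStream throws, which is acceptable for a quick check.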