Code:

    # Simple distributed crawler: server side
    from multiprocessing.managers import BaseManager
    from queue import Queue
    import os


    class ServiceSpider(BaseManager):

        def __init__(self, address='', port=8007, authkey=b'abc'):
            super().__init__(address=(address, port), authkey=authkey)
            # Queue of tasks handed out to the clients
            self.__task_queue = Queue()
            # Queue of results sent back by the clients
            self.__result_queue = Queue()

        # Entry point: expose the queues, start the manager, run the main loop
        def runStart(self, urls=(), path=None):
            # Register the callables that expose the two queues.
            # Lambdas cannot be pickled, so this works with the "fork" start
            # method but not with "spawn" (e.g. on Windows).
            self.register("get_task_queue", callable=lambda: self.__task_queue)
            self.register("get_result_queue", callable=lambda: self.__result_queue)
            # Start the manager's server process
            self.start()
            self.task = self.get_task_queue()
            self.result = self.get_result_queue()
            self.funcRun(urls, path)

        # Main loop: send the tasks, then collect the results
        def funcRun(self, urls, path):
            for url in urls:
                senddata = {'title': 'spider', 'content': url}
                # Send the task as a dict
                self.task.put(senddata)
                print("Sent data: {}".format(senddata))
            print("Receiving data...")
            for _ in range(10):
                try:
                    data = self.result.get(timeout=10)
                except Exception as e:
                    # Usually queue.Empty after the 10-second timeout
                    print("Finished receiving data {}".format(e))
                    self.task.put("quit")
                    break
                if data == 'nodata':
                    # A client reported that the task queue is empty
                    print("Finished receiving data")
                    self.task.put("quit")
                    break
                if data["title"] == "quitSir":
                    # Error report from a client
                    print(data["content"])
                    continue
                self.writeFile(path, data)
                print("Wrote data: {}".format(data['title']))
            self.task.put("quit")
            self.shutdown()
            print("Service Exit!")

        # Append the page to <path>/<title>/<title>.txt
        def writeFile(self, path, data):
            savePath = os.path.join(path, data['title'])
            # exist_ok avoids a separate existence check before creating
            os.makedirs(savePath, exist_ok=True)
            with open(os.path.join(savePath, data['title'] + '.txt'), 'a+', encoding='utf-8') as f:
                f.write(data['title'] + '\n' + data['content'])


    if __name__ == '__main__':
        urls = (r'http://www.xbiquge.la/0/499/', r'http://www.xbiquge.la/15/15410/')
        path = r'/home/oliver/Documents'
        servicer = ServiceSpider()
        servicer.runStart(urls=urls, path=path)
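The server's `writeFile` method uses the page title directly as a directory name and file name. A title scraped from HTML can contain characters such as `/` that are not valid inside a single path component. Below is a minimal sketch of one way to guard against that. The helper name `safe_name` and its `fallback` parameter are hypothetical and not part of the original code.

```python
import re


def safe_name(title, fallback="untitled"):
    """Return a version of `title` usable as a single path component.

    Hypothetical helper, shown only as an illustration.
    """
    # Replace path separators, control characters and other characters
    # that common file systems reject.
    cleaned = re.sub(r'[\\/:*?"<>|\x00-\x1f]', "_", title)
    # Strip leading/trailing spaces and dots so names like ".." disappear.
    cleaned = cleaned.strip(" .")
    # Fall back to a fixed name when nothing usable is left.
    return cleaned or fallback
```

Under this assumption, `writeFile` could build its directory with `os.path.join(path, safe_name(data['title']))` instead of joining the raw title.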

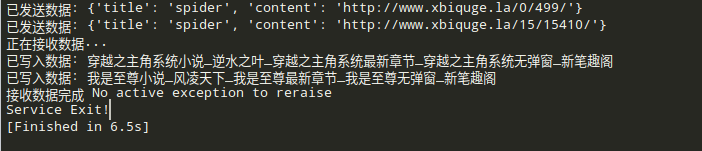

Server screenshot:

    # Simple distributed crawler: client side
    from multiprocessing.managers import BaseManager
    import re, random
    import requests


    class ClientSpider(BaseManager):

        def __init__(self, address='192.168.1.102', port=8007, authkey=b'abc'):
            super().__init__(address=(address, port), authkey=authkey)

        # Entry point: register the queue names, connect, run the main loop
        def runStart(self, headers):
            self.register("get_task_queue")
            self.register("get_result_queue")
            self.connect()
            print("Connected to the server!")
            self.funcRun(headers)

        # Main loop: fetch tasks, crawl, send the results back
        def funcRun(self, headers):
            self.task = self.get_task_queue()
            self.result = self.get_result_queue()
            print("Waiting for tasks...")
            while True:
                try:
                    dictData = self.task.get(timeout=1)
                except Exception:
                    # No task arrived within one second
                    print("The server has stopped sending data!")
                    self.result.put('nodata')
                    continue
                if dictData == "quit":
                    break
                if dictData['title'] == 'spider':
                    try:
                        content = self.spiderBody(dictData['content'], headers)
                    except Exception as e:
                        self.result.put({"title": "quitSir", "content": "Crawler error: {}".format(e)})
                    else:
                        self.result.put(content)
                        print("Sent page data {}".format(content['title']))
                else:
                    self.result.put({"title": "quitSir", "content": "The requested client module does not exist! {}".format(dictData["content"])})

        # Crawler body: fetch one page and extract its title and paragraphs.
        # Any error propagates to funcRun, which reports it to the server.
        def spiderBody(self, url, headers):
            header = random.choice(headers)
            # The timeout keeps a stalled request from hanging the client
            res = requests.get(url, headers=header, timeout=10)
            res.encoding = res.apparent_encoding
            title = re.findall(r"<title>(.*?)</title>", res.text, re.I | re.S)[0].replace('|', '').replace(' ', '')
            # Without re.S, only paragraphs that fit on one line are matched
            content = re.findall(r"<p>(.*?)</p>", res.text, re.M)
            return {'title': title, 'content': '\n'.join(content)}


    if __name__ == '__main__':
        headers = [{"User-Agent": "UCWEB7.0.2.37/28/999"}]
        client = ClientSpider()
        client.runStart(headers=headers)
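The extraction step in `spiderBody` can be checked without touching the network by running the same two regular expressions against a small HTML string. The following is a sketch only: the function name `extract` and the sample HTML are made up for illustration.

```python
import re


def extract(html):
    """Apply the title and paragraph patterns used by spiderBody."""
    # Title: case-insensitive, may span lines; '|' and spaces are removed.
    title = re.findall(r"<title>(.*?)</title>", html, re.I | re.S)[0]
    title = title.replace('|', '').replace(' ', '')
    # Paragraphs: '.' does not match a newline here, so only <p>...</p>
    # pairs that sit on a single line are captured.
    paragraphs = re.findall(r"<p>(.*?)</p>", html, re.M)
    return {'title': title, 'content': '\n'.join(paragraphs)}


# Invented sample page, not taken from the crawled site.
sample = (
    "<html><head><TITLE>My | Book</TITLE></head>"
    "<body><p>one</p>\n<p>two</p></body></html>"
)
page = extract(sample)
print(page)
```

One consequence worth knowing: a paragraph whose text contains a line break is skipped entirely by this pattern, so pages with multi-line `<p>` elements would come back with less content than expected.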

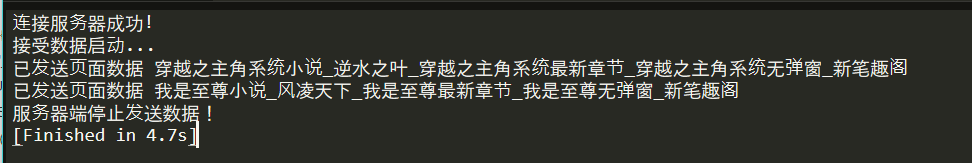

Run results:

Data saved on the server: