local mode

package com.imooc.spark.Test

import org.apache.spark.sql.types.{StringType, StructField, StructType}
import org.apache.spark.sql.{Row, SaveMode, SparkSession}

/**
 * SparkContext test case
 */
object TestOfSparkContext2 {

  def main(args: Array[String]): Unit = {
    //System.setProperty("hadoop.home.dir", "E:\\soft\\winutils\\hadoop-common-2.2.0-bin-master")
    val spark = SparkSession.builder()
      .appName("TestOfSparkContext2")
      .master("local[2]").getOrCreate()

    // RDD => DF via reflection
    // reflection(spark)

    // RDD => DF programmatically
    program(spark)

    spark.stop()
  }

  private def reflection(spark: SparkSession): Unit = {
    // Local files need the full file:/// scheme (the colon is required)
    val rdd = spark.sparkContext
      .textFile("file:///home/hadoop/data/test-data-spark/emp-forSpark.txt")
      .map(w => w.split("\t"))

    import spark.implicits._
    // The schema is inferred from the fields of the EMP case class
    val empDF = rdd.map(line =>
      EMP(line(0), line(1), line(2), line(3),
        line(4), line(5), line(6), line(7))).toDF()

    empDF.printSchema()
    empDF.show()
  }

  def program(spark: SparkSession): Unit = {
    val infoRdd = spark.sparkContext
      .textFile("/home/hadoop/data/test-data-spark/emp-forSpark.txt")
      .map(w => w.split("\t"))
      .map(line => Row(line(0), line(1), line(2), line(3), line(4), line(5), line(6), line(7)))

    // The schema is declared explicitly, one StructField per column
    val fieldNames = Array(
      StructField("empNo", StringType, true),
      StructField("ename", StringType, true), StructField("job", StringType, true),
      StructField("mgr", StringType, true), StructField("hireDate", StringType, true),
      StructField("sal", StringType, true), StructField("comm", StringType, true),
      StructField("deptNo", StringType, true))
    val schema = StructType(fieldNames)

    val empDf = spark.createDataFrame(infoRdd, schema)
    empDf.printSchema()
    empDf.show(false)

    // Register a temporary view
    empDf.createOrReplaceTempView("emp")
    val sqlDF = spark.sql("select ename,job,comm from emp")
    // sqlDF.printSchema()
    // sqlDF.show()

    /*empDf.write.format("json")
      .mode(SaveMode.Overwrite).save("E:///testData/empTable3")*/
    empDf.coalesce(1).write.format("json").mode(SaveMode.Overwrite)
      .partitionBy("deptno").save("/home/hadoop/app/spark-2.1.1-bin-2.6.0-cdh5.7.0/testData/emp-jsonTable")
  }

  case class EMP(empNo: String, empName: String, job: String, mgr: String, hiredate: String,
                 sal: String, comm: String, deptNo: String)

}
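The listing indexes `line(0)` through `line(7)` right after `split("\t")`. One thing worth guarding against: Java's `String.split` drops trailing empty strings, so a row whose last columns are empty produces fewer than eight fields and the indexing would throw. A minimal sketch of a more defensive parse is shown here; the object `EmpLineParser`, the helper `parseEmpLine`, and the sample rows are made up for illustration and are not part of the original code.

```scala
object EmpLineParser {
  // The emp file in this post has eight tab-separated columns
  val ExpectedFields = 8

  /** Splits a tab-separated line, keeping trailing empty columns (limit = -1).
    * Returns None when the line does not have exactly eight fields. */
  def parseEmpLine(line: String): Option[Array[String]] = {
    val fields = line.split("\t", -1)
    if (fields.length == ExpectedFields) Some(fields) else None
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical row whose last two columns (comm, deptNo) are empty
    val trailingEmpty = "7369\tSMITH\tCLERK\t7902\t1980-12-17\t800.00\t\t"

    println(trailingEmpty.split("\t").length)           // default split drops the two empty columns
    println(parseEmpLine(trailingEmpty).map(_.length))  // all eight columns are kept
    println(parseEmpLine("broken\trow").isDefined)      // malformed rows are rejected
  }
}
```

In the Spark job this could replace the plain `map`, for example `textFile(path).flatMap(line => EmpLineParser.parseEmpLine(line))`, so that malformed rows are skipped instead of failing the task. Whether skipping is the right policy depends on the data.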

Package

Submit

Run from $SPARK_HOME:

./bin/spark-submit \
--class com.imooc.spark.Test.TestOfSparkContext2 \
--master local[2] \
/home/hadoop/data/test-jar/sql-1.0.jar

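Parameter explanation

The parameters of the write call

empDf.coalesce(1).write.format("json").mode(SaveMode.Overwrite)
.partitionBy("deptno").save("/home/hadoop/app/spark-2.1.1-bin-2.6.0-cdh5.7.0/testData/emp-jsonTable")

have the following meaning.

write.format("json"): the format of the output files.

mode(SaveMode.Overwrite): the save mode. Overwrite replaces the output if it already exists. Other modes include Append (add to the existing output) and ErrorIfExists (fail with an error if the output already exists).

empDf.coalesce(1): the number of partitions of the DataFrame before it is written. coalesce(n) can only reduce the partition count. With coalesce(1), each department directory holds a single file; with 10 partitions, each department directory could hold up to 10 files.

partitionBy("deptno"): the column by which the output is partitioned into directories.

save("/home/hadoop/app/spark-2.1.1-bin-2.6.0-cdh5.7.0/testData/emp-jsonTable"): the output path. Note: to save to the local filesystem, write the path as file://path…; to save to HDFS, write it as hdfs://ip:port followed by the path. A path without a scheme is resolved against the default filesystem of the Hadoop configuration. In particular, when submitting in yarn mode, write the path directly as an HDFS path, and only an HDFS path, such as /user/hadoop/…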
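To make the interplay of `mode`, `coalesce`, and `partitionBy` concrete, here is a small sketch that writes the same made-up rows twice into a temporary directory, first with `SaveMode.Overwrite` and then with `SaveMode.Append`, and reads the result back. The object name `PartitionedWriteSketch`, the helper `writeTwice`, the three sample rows, and the temporary output location are assumptions for illustration. It needs Spark on the classpath and runs in local mode.

```scala
import java.nio.file.Files
import org.apache.spark.sql.{SaveMode, SparkSession}

object PartitionedWriteSketch {

  /** Writes three sample rows with Overwrite, then the same rows with Append,
    * and returns the number of rows read back from the output directory. */
  def writeTwice(spark: SparkSession, out: String): Long = {
    import spark.implicits._

    // Made-up rows standing in for the emp file used in this post
    val emp = Seq(
      ("7369", "SMITH", "20"),
      ("7499", "ALLEN", "30"),
      ("7782", "CLARK", "10")
    ).toDF("empNo", "ename", "deptNo")

    // Overwrite: any existing output under `out` is replaced
    emp.coalesce(1).write.format("json")
      .mode(SaveMode.Overwrite)
      .partitionBy("deptNo")
      .save(out)

    // Append: new files are added next to the existing ones
    emp.coalesce(1).write.format("json")
      .mode(SaveMode.Append)
      .partitionBy("deptNo")
      .save(out)

    // Reading the directory picks up the deptNo=... subdirectories as a column
    spark.read.json(out).count()
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("PartitionedWriteSketch")
      .master("local[2]")
      .getOrCreate()

    // A file:/// URI, so the output lands on the local filesystem
    val out = Files.createTempDirectory("emp-json").toUri.toString
    println(s"rows after overwrite + append: ${writeTwice(spark, out)}")

    spark.stop()
  }
}
```

Because the second write appends rather than replaces, the directory ends up holding both copies of the rows. Replacing `SaveMode.Append` with `SaveMode.ErrorIfExists` would make the second write fail instead.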

Submitting in yarn client mode

package com.imooc.spark.Test

import org.apache.spark.sql.types.{StringType, StructField, StructType}
import org.apache.spark.sql.{Row, SaveMode, SparkSession}

/**
 * SparkContext test case
 */
object TestOfSparkContext2OnYarn {

  def main(args: Array[String]): Unit = {
    //System.setProperty("hadoop.home.dir", "E:\\soft\\winutils\\hadoop-common-2.2.0-bin-master")
    /*val spark = SparkSession.builder()
      .appName("TestOfSparkContext2OnYarn")
      .master("local[2]").getOrCreate()*/

    val path = "/user/hadoop/data/test-data-forSpark/emp-forSpark.txt"
    // No master or app name is set here; they are supplied by spark-submit
    val spark = SparkSession.builder().getOrCreate()

    // RDD => DF via reflection
    // reflection(spark, path)

    // RDD => DF programmatically
    program(spark, path)

    spark.stop()
  }

  private def reflection(spark: SparkSession, path: String): Unit = {
    val rdd = spark.sparkContext
      .textFile(path)
      .map(w => w.split("\t"))

    import spark.implicits._
    // The schema is inferred from the fields of the EMP case class
    val empDF = rdd.map(line =>
      EMP(line(0), line(1), line(2), line(3),
        line(4), line(5), line(6), line(7))).toDF()

    empDF.printSchema()
    empDF.show()
  }

  def program(spark: SparkSession, path: String): Unit = {
    val infoRdd = spark.sparkContext
      .textFile(path)
      .map(w => w.split("\t"))
      .map(line => Row(line(0), line(1), line(2), line(3), line(4), line(5), line(6), line(7)))

    // The schema is declared explicitly, one StructField per column
    val fieldNames = Array(
      StructField("empNo", StringType, true),
      StructField("ename", StringType, true), StructField("job", StringType, true),
      StructField("mgr", StringType, true), StructField("hireDate", StringType, true),
      StructField("sal", StringType, true), StructField("comm", StringType, true),
      StructField("deptNo", StringType, true))
    val schema = StructType(fieldNames)

    val empDf = spark.createDataFrame(infoRdd, schema)
    empDf.printSchema()
    empDf.show(false)

    // Register a temporary view
    empDf.createOrReplaceTempView("emp")
    val sqlDF = spark.sql("select ename,job,comm from emp")
    // sqlDF.printSchema()
    // sqlDF.show()

    /*empDf.write.format("json")
      .mode(SaveMode.Overwrite).save("E:///testData/empTable3")*/
    empDf.coalesce(1).write.format("json").mode(SaveMode.Overwrite)
      .partitionBy("deptno").save("hdfs://hadoop001:9000/user/hadoop/emp-spark-test/emp-jsonOnYarnTable")
  }

  case class EMP(empNo: String, empName: String, job: String, mgr: String, hiredate: String,
                 sal: String, comm: String, deptNo: String)

}

Submit

./bin/spark-submit \
--class com.imooc.spark.Test.TestOfSparkContext2OnYarn \
--master yarn \
/home/hadoop/data/jar-test/sql-3.0-onYarn.jar

or, with the deploy mode stated explicitly:

./bin/spark-submit \
--class com.imooc.spark.Test.TestOfSparkContext2OnYarn \
--master yarn \
--deploy-mode client \
/home/hadoop/data/jar-test/sql-3.0-onYarn.jar

Note: when a jar is submitted to YARN with spark-submit, the input and output paths must both be HDFS paths; otherwise the job fails with the error: Input path does not exist

Viewing the job

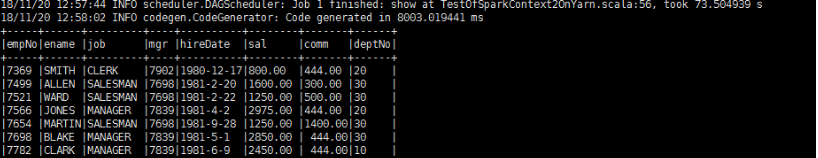

The logs are printed directly to the console.

Result

[hadoop@hadoop001 ~]$ hadoop fs -ls /user/hadoop/emp-spark-test/emp-jsonOnYarnTable
18/11/20 17:42:27 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Found 5 items
-rw-r--r-- 1 hadoop supergroup 0 2018-11-20 17:33 /user/hadoop/emp-spark-test/emp-jsonOnYarnTable/_SUCCESS
drwxr-xr-x - hadoop supergroup 0 2018-11-20 17:33 /user/hadoop/emp-spark-test/emp-jsonOnYarnTable/deptno=10
drwxr-xr-x - hadoop supergroup 0 2018-11-20 17:33 /user/hadoop/emp-spark-test/emp-jsonOnYarnTable/deptno=20
drwxr-xr-x - hadoop supergroup 0 2018-11-20 17:33 /user/hadoop/emp-spark-test/emp-jsonOnYarnTable/deptno=30
drwxr-xr-x - hadoop supergroup 0 2018-11-20 17:33 /user/hadoop/emp-spark-test/emp-jsonOnYarnTable/deptno=44

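Notes

Local submission: for input and output files on the local filesystem, write the path as file:///

For input and output on HDFS, write the path as hdfs://ip:port/

When the submission mode is yarn, input and output can only be on HDFS.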
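The path forms above differ only in their URI scheme, and a small typo there is easy to miss: `file///…` without the colon has no scheme at all and is treated as an ordinary relative path. The following sketch uses plain `java.net.URI` to show which scheme each form carries. The object name `PathSchemes` and the helper `schemeOf` are made up for illustration, and Spark itself resolves paths through Hadoop's filesystem classes, so this is only an approximation of that behaviour.

```scala
import java.net.URI

object PathSchemes {

  /** Returns the URI scheme of a path, or None when the path has no scheme
    * and would therefore be resolved against the default filesystem. */
  def schemeOf(path: String): Option[String] =
    Option(new URI(path).getScheme)

  def main(args: Array[String]): Unit = {
    val paths = Seq(
      "file:///home/hadoop/data/test-data-spark/emp-forSpark.txt",              // local filesystem
      "hdfs://hadoop001:9000/user/hadoop/emp-spark-test/emp-jsonOnYarnTable",   // HDFS with host and port
      "/user/hadoop/data/test-data-forSpark/emp-forSpark.txt",                  // no scheme: default filesystem
      "file///home/hadoop/data/test-data-spark/emp-forSpark.txt"                // typo: missing colon, no scheme
    )

    paths.foreach { p =>
      println(s"${schemeOf(p).getOrElse("(none)")} <- $p")
    }
  }
}
```

A path with no scheme ends up on whatever filesystem the Hadoop configuration names as default, which is why the same unqualified path can point to a local file in one setup and to HDFS in another.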

yarn cluster mode

Submit command

sql-1.0-yarnCluster.jar

./bin/spark-submit \
--class com.imooc.spark.Test.TestOfSparkContext2OnYarn \
--master yarn \
--deploy-mode cluster \
/home/hadoop/data/jar-test/sql-3.0-onYarn.jar

To see the output, look at the YARN logs.

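Spark on YARN: differences between client and cluster mode

| yarn-client | The driver runs on the client. The client requests containers and schedules and runs the job, so the client must not exit. Logs are printed to the console, which makes them easy to read. |
| yarn-cluster | The driver runs in the ApplicationMaster. The client can be closed as soon as the job is submitted, because the job is already running on YARN. Logs are not visible on the client, since the job runs on YARN; view them with yarn logs -applicationId <application_id> |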

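standalone mode

The code is the same as in local mode, except that the hard-coded .master("local[2]") should be removed: a master set in code takes precedence over the --master flag passed to spark-submit.

Submit

./bin/spark-submit \
--class com.imooc.spark.Test.TestOfSparkContext2 \
--master spark://192.168.52.130:7077 \
--executor-memory 4G \
--total-executor-cores 6 \
/home/hadoop/data/jar-test/sql-1.0-yarnCluster.jar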

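Mode comparison

| Mode | Description |
| --- | --- |
| local | Used for development |
| standalone | Ships with Spark; a Standalone cluster requires Spark to be deployed on every machine |
| YARN | Used in production; YARN schedules resources for all jobs on the cluster (MR/Spark) |
| Mesos | |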

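Submit parameters in detail

| Parameter | Purpose |
| --- | --- |
| --class | Fully qualified name of the main class in the jar, e.g. com.imooc.spark.Test.TestOfSparkContext2OnYarn |
| --master | 1. local[k]: local mode with k cores. 2. yarn mode: (1) --master yarn --deploy-mode client; (2) --master yarn --deploy-mode cluster. Note: if --deploy-mode is omitted, client mode is the default. |
| application jar | The jar to run, e.g. /home/hadoop/data/jar-test/sql-3.0-onYarn.jar |
| --executor-memory | Memory allocated to each executor |
| --master local[8] | Local mode with 8 cores |
| 1000 | 1000 tasks (an application argument, passed after the jar) |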
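The order of the pieces matters as much as the flags themselves: spark-submit options come first, then the application jar, then any arguments for the application. As a rough illustration, the sketch below assembles such a command line from a small description. `SubmitCommand`, `SubmitSpec`, and `toArgs` are hypothetical names invented for this example and are not part of Spark; only the flag names and values are taken from the commands in this post.

```scala
object SubmitCommand {

  /** Hypothetical description of one spark-submit invocation. */
  final case class SubmitSpec(
      mainClass: String,
      master: String,
      jar: String,
      deployMode: Option[String] = None,       // "client" or "cluster"; client is the default when omitted
      executorMemory: Option[String] = None,   // e.g. "4G"
      totalExecutorCores: Option[Int] = None,  // used in the standalone example of this post
      appArgs: Seq[String] = Nil)              // passed to the application's main method

  /** Builds the argument list: options first, then the jar, then application arguments. */
  def toArgs(spec: SubmitSpec): Seq[String] = {
    val options =
      Seq("--class", spec.mainClass, "--master", spec.master) ++
        spec.deployMode.toSeq.flatMap(m => Seq("--deploy-mode", m)) ++
        spec.executorMemory.toSeq.flatMap(m => Seq("--executor-memory", m)) ++
        spec.totalExecutorCores.toSeq.flatMap(n => Seq("--total-executor-cores", n.toString))

    ("./bin/spark-submit" +: options) ++ (spec.jar +: spec.appArgs)
  }

  def main(args: Array[String]): Unit = {
    val yarnCluster = SubmitSpec(
      mainClass = "com.imooc.spark.Test.TestOfSparkContext2OnYarn",
      master = "yarn",
      jar = "/home/hadoop/data/jar-test/sql-3.0-onYarn.jar",
      deployMode = Some("cluster"))

    println(toArgs(yarnCluster).mkString(" "))
  }
}
```

Keeping the optional flags as `Option` values means a flag that is not set is simply left out, which mirrors how omitting --deploy-mode falls back to client mode.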

Original: https://blog.csdn.net/huonan_123/article/details/84282843