1. Install Hadoop

2. Install MySQL

3. Install HAProxy

4. Download and extract Hive

cd /usr/local

wget http://mirror.bit.edu.cn/apache/hive/hive-1.2.2/apache-hive-1.2.2-bin.tar.gz

tar -zxvf apache-hive-1.2.2-bin.tar.gz

#Rename the extracted directory

mv apache-hive-1.2.2-bin hive

#Create the Hive directories on HDFS

hdfs dfs -mkdir -p /usr/local/hive/warehouse

hdfs dfs -mkdir -p /usr/local/hive/tmp

hdfs dfs -chmod 777 /usr/local/hive/warehouse

hdfs dfs -chmod 777 /usr/local/hive/tmp
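The four HDFS commands above can also be written as one loop. This is a minimal sketch. `create_hive_hdfs_dirs`, `HIVE_HDFS_ROOT` and the `HDFS_CMD` override are hypothetical names added for illustration. `HDFS_CMD` defaults to the real `hdfs` client and exists only so the loop can be dry-run.

```shell
# Hypothetical helper: create the Hive directories on HDFS and open up their permissions.
# HIVE_HDFS_ROOT and HDFS_CMD are illustrative variables, not Hive settings.
HIVE_HDFS_ROOT=${HIVE_HDFS_ROOT:-/usr/local/hive}
HDFS_CMD=${HDFS_CMD:-hdfs}

create_hive_hdfs_dirs() {
    for d in warehouse tmp; do
        # -p creates missing parents and does not fail if the directory already exists
        $HDFS_CMD dfs -mkdir -p "$HIVE_HDFS_ROOT/$d" || return 1
        # 777 matches the permissions used in the steps above
        $HDFS_CMD dfs -chmod 777 "$HIVE_HDFS_ROOT/$d" || return 1
    done
}
```

Run `create_hive_hdfs_dirs` on a node that has the Hadoop client on its PATH. Setting `HDFS_CMD="echo hdfs"` beforehand prints the commands instead of running them.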

#Configure environment variables (add these lines to /etc/profile, which is copied to dn2 and dn3 later)

export HIVE_HOME=/usr/local/hive

export PATH=$PATH:$HIVE_HOME/bin
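The guide later copies /etc/profile to dn2 and dn3, so the two exports presumably belong in that file. The sketch below appends them only if they are not already present, so rerunning it does not duplicate the lines. `add_hive_env` is a hypothetical helper name.

```shell
# Hypothetical helper: append the Hive exports to a profile file, at most once.
# The profile path defaults to /etc/profile (assumption based on the later scp step).
add_hive_env() {
    profile=${1:-/etc/profile}
    if ! grep -q '^export HIVE_HOME=' "$profile" 2>/dev/null; then
        {
            # Single quotes keep $PATH and $HIVE_HOME unexpanded in the file
            echo 'export HIVE_HOME=/usr/local/hive'
            echo 'export PATH=$PATH:$HIVE_HOME/bin'
        } >> "$profile"
    fi
}
```

Run `add_hive_env /etc/profile` as root, then run `source /etc/profile` to load the variables into the current shell.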

#Configure HADOOP_HOME: copy the template, then set HADOOP_HOME in hive-env.sh

cp /usr/local/hive/conf/hive-env.sh.template /usr/local/hive/conf/hive-env.sh

HADOOP_HOME=/usr/local/hadoop-2.7.1
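Instead of editing hive-env.sh by hand, the HADOOP_HOME line can be written non-interactively. This is a sketch with a hypothetical helper, `set_hadoop_home`. It drops any existing uncommented `HADOOP_HOME=` line and leaves commented-out lines from the template in place.

```shell
# Hypothetical helper: set HADOOP_HOME in hive-env.sh without opening an editor.
# Usage: set_hadoop_home /usr/local/hive/conf/hive-env.sh /usr/local/hadoop-2.7.1
set_hadoop_home() {
    env_file=$1
    hadoop_home=$2
    # Keep every line except an existing uncommented HADOOP_HOME= assignment;
    # grep -v exits 1 when no lines remain, which is not an error here
    grep -v '^HADOOP_HOME=' "$env_file" > "$env_file.tmp" || true
    echo "HADOOP_HOME=$hadoop_home" >> "$env_file.tmp"
    mv "$env_file.tmp" "$env_file"
}
```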

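#Configure logging: create the log directory, then set hive.log.dir and hive.log.file in hive-log4j.properties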

mkdir /usr/local/hive/log

cp /usr/local/hive/conf/hive-log4j.properties.template /usr/local/hive/conf/hive-log4j.properties

vi $HIVE_HOME/conf/hive-log4j.properties

hive.log.dir=/usr/local/hive/log

hive.log.file=hive.log
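The two log4j properties can also be set without `vi`. This is a minimal sketch assuming GNU `sed` (for `-i`); `set_log4j_prop` is a hypothetical helper. It replaces the key's value if the key exists and appends it otherwise.

```shell
# Hypothetical helper: set key=value in a .properties file.
# Assumes GNU sed; values must not contain "|" or "&" (special in the sed expression).
set_log4j_prop() {
    file=$1; key=$2; value=$3
    if grep -q "^$key=" "$file"; then
        # "|" is the sed delimiter because the values contain "/"
        sed -i "s|^$key=.*|$key=$value|" "$file"
    else
        echo "$key=$value" >> "$file"
    fi
}
```

For this guide, run `set_log4j_prop $HIVE_HOME/conf/hive-log4j.properties hive.log.dir /usr/local/hive/log`, then repeat it for `hive.log.file`.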

#Configure hive-site.xml

cp /usr/local/hive/conf/hive-default.xml.template /usr/local/hive/conf/hive-site.xml

Edit hive-site.xml: for each property below, find the entry with the same name and change its value.

<property>

<name>hive.exec.scratchdir</name>

<value>/usr/local/hive/tmp</value>

<description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission. For each connecting user, an HDFS scratch dir: ${hive.exec.scratchdir}/<username> is created, with ${hive.scratch.dir.permission}.</description>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/usr/local/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://dn1:3306/hive?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8&amp;useSSL=false</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>Username to use against metastore database</description>

</property>

<property>

<name>hive.exec.local.scratchdir</name>

<value>/usr/local/hive/log</value>

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/usr/local/hive/tmp</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>
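Note the `ConnectionURL` value. hive-site.xml is XML, so each `&` that separates JDBC URL parameters must be written as `&amp;`, otherwise the file fails to parse. The hypothetical helper below is a small sketch that converts a plain URL into an XML-safe value.

```shell
# Hypothetical helper: escape a string for use inside an XML element such as <value>.
xml_escape() {
    # Escape & first so the entities produced for < and > are not escaped again
    printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}
```

For example, `xml_escape 'jdbc:mysql://dn1:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false'` prints the string to paste between `<value>` and `</value>`.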

#Download mysql-connector-java-5.1.46.jar into /usr/local/hive/lib

cd /usr/local/hive/lib

wget http://central.maven.org/maven2/mysql/mysql-connector-java/5.1.46/mysql-connector-java-5.1.46.jar

#Initialize the metastore schema in the MySQL database

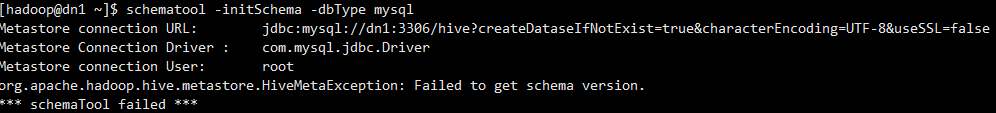

schematool -initSchema -dbType mysql

If schematool reports an error at this step, create the hive database in MySQL yourself first, then run the command again.

#Run a Hive statement directly to check that Hive works (it succeeded if the output shows OK)

hive -e "show tables"

#Copy /etc/profile and the hive directory to the two nodes dn2 and dn3

scp /etc/profile hadoop@dn2:/etc

scp /etc/profile hadoop@dn3:/etc

scp -r /usr/local/hive hadoop@dn2:/usr/local

scp -r /usr/local/hive hadoop@dn3:/usr/local
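The copy step can also be written as a loop over the worker nodes. Note the `-r` flag, which `scp` needs in order to copy a directory. This is a sketch: `distribute_hive` and the `SCP_CMD` override are hypothetical names, and the `hadoop` account and target paths mirror the commands above.

```shell
# Hypothetical helper: copy the profile and the Hive directory to each worker node.
# SCP_CMD is an illustrative override (defaults to scp) so the loop can be dry-run.
SCP_CMD=${SCP_CMD:-scp}

distribute_hive() {
    for host in "$@"; do
        $SCP_CMD /etc/profile "hadoop@$host:/etc" || return 1
        # -r is required because /usr/local/hive is a directory
        $SCP_CMD -r /usr/local/hive "hadoop@$host:/usr/local" || return 1
    done
}
```

Usage: `distribute_hive dn2 dn3`.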

#Start the hiveserver2 service on each of dn2 and dn3

source /etc/profile

hive --service hiveserver2 &
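A process started with a plain `&` may stop when the login session ends. The sketch below starts HiveServer2 on both workers from dn1 over SSH, using `nohup` and redirecting its output to a log file. `start_hiveserver2`, the `SSH_CMD` override and the log path `/tmp/hiveserver2.log` are assumptions for illustration.

```shell
# Hypothetical helper: start HiveServer2 on each worker node from dn1 over SSH.
# SSH_CMD is an illustrative override (defaults to ssh); the log path is an assumption.
SSH_CMD=${SSH_CMD:-ssh}

start_hiveserver2() {
    for host in "$@"; do
        # nohup plus redirected stdin/stdout/stderr keeps the service running after
        # the SSH session closes and lets ssh return immediately
        $SSH_CMD "hadoop@$host" \
            '. /etc/profile; nohup hive --service hiveserver2 > /tmp/hiveserver2.log 2>&1 < /dev/null &' \
            || return 1
    done
}
```

Usage: `start_hiveserver2 dn2 dn3`.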

#Start the haproxy service on dn1

cd /usr/local/haproxy

haproxy -f config.cfg
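The guide does not show the contents of config.cfg. Below is a minimal sketch of what it might contain, written out by a hypothetical helper. It assumes HiveServer2 listens on its default port 10000 on dn2 and dn3, and that HAProxy on dn1 exposes port 10001 (an arbitrary free port). The frontend port and server names are assumptions, not the author's configuration.

```shell
# Hypothetical helper: write a minimal HAProxy config that spreads JDBC connections
# across the HiveServer2 instances on dn2 and dn3 (ports are assumptions).
write_haproxy_cfg() {
    cfg=${1:-/usr/local/haproxy/config.cfg}
    cat > "$cfg" <<'EOF'
global
    daemon
    maxconn 4096

defaults
    # HiveServer2 speaks Thrift over TCP, so HAProxy proxies at layer 4
    mode tcp
    timeout connect 5s
    # long idle timeouts so slow queries are not cut off
    timeout client 1h
    timeout server 1h

listen hiveserver2
    bind 0.0.0.0:10001
    balance roundrobin
    server hs2_dn2 dn2:10000 check
    server hs2_dn3 dn3:10000 check
EOF
}
```

With this config, clients connect to `jdbc:hive2://dn1:10001` (for example `beeline -u jdbc:hive2://dn1:10001`). HAProxy then hands each new connection to dn2 or dn3 in turn. A session stays on the same server for the lifetime of its connection.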