Volume

1. What Is a Volume

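By default, data inside a container is not persistent: when the container dies, its data is lost with it. Docker therefore provides the Volume mechanism for persisting data. Kubernetes provides a more powerful Volume mechanism with a rich set of plugins. It solves both persisting container data and sharing data between containers.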

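Unlike Docker, a Kubernetes Volume's lifecycle is bound to the Pod. When a container crashes and the kubelet restarts it, the data in the Volume is still there. The Volume is cleaned up only when the Pod is deleted. Whether the data itself is lost at that point depends on the Volume type: emptyDir data is lost, while data on a PV (PersistentVolume) is not.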

2. Volume Types

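The commonly used Volume types are:

- emptyDir: mounts an empty directory from the host into the Pod. A volume directory is created on the host and mounted into the container. With this type, the data is lost when the Pod is deleted.
- hostPath: mounts a directory that already exists on the host into the Pod.
- nfs: mounts a directory from NFS shared storage into the Pod.
- glusterfs: mounts a volume of a GlusterFS cluster file system into the Pod.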

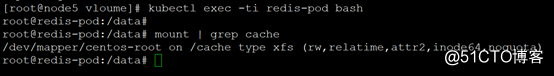
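The introduction says Volumes also let containers share data. Below is a minimal sketch, not part of the original demos, of one Pod whose two containers share a single emptyDir volume. The Pod name `shared-cache-demo`, the container names, the busybox image and the shell loops are all illustrative assumptions.

```yaml
# Hypothetical example: two containers in one Pod share an emptyDir volume
apiVersion: v1
kind: Pod
metadata:
  name: shared-cache-demo          # hypothetical name
spec:
  containers:
  - name: writer
    image: busybox
    # Appends the current date to a file in the shared volume every 5 seconds
    command: ["sh", "-c", "while true; do date >> /data/log.txt; sleep 5; done"]
    volumeMounts:
    - name: shared-data
      mountPath: /data
  - name: reader
    image: busybox
    # Sees the same file through its own mount point and follows it
    command: ["sh", "-c", "touch /shared/log.txt; tail -f /shared/log.txt"]
    volumeMounts:
    - name: shared-data
      mountPath: /shared
  volumes:
  - name: shared-data
    emptyDir: {}                   # exists as long as the Pod does
```

After the Pod starts, `kubectl logs shared-cache-demo -c reader` should show the lines written by the `writer` container. The two mount paths differ on purpose, to show that the volume, not the path, is what is shared.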

3. emptyDir Demo

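Create emptyDir.yaml with the following content:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: redis-pod
spec:
  containers:
  - image: redis
    name: redis
    volumeMounts:
    - mountPath: /cache
      name: cache-volume
  volumes:
  - name: cache-volume
    emptyDir: {}
```

This uses the redis image to create a Pod named redis-pod. It creates an empty directory on the host and mounts it at /cache inside the Pod.

Create the Pod:

```bash
kubectl create -f emptyDir.yaml
```

Exec into the container to take a look: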

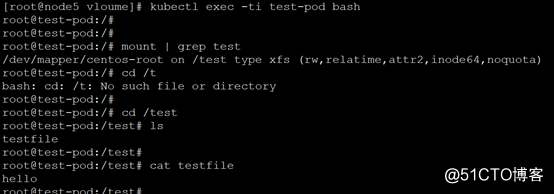
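The `emptyDir` in this demo is empty (`{}`), but the emptyDir source also accepts two optional fields:

- `medium: Memory` backs the volume with tmpfs (RAM) instead of node disk.
- `sizeLimit` caps how much the volume may hold.

Below is a sketch of the same redis Pod using both. The Pod name `redis-pod-mem` and the 64Mi limit are arbitrary assumptions.

```yaml
# Hypothetical variant of emptyDir.yaml with a memory-backed emptyDir
apiVersion: v1
kind: Pod
metadata:
  name: redis-pod-mem              # hypothetical name, avoids clashing with redis-pod
spec:
  containers:
  - image: redis
    name: redis
    volumeMounts:
    - mountPath: /cache
      name: cache-volume
  volumes:
  - name: cache-volume
    emptyDir:
      medium: Memory               # tmpfs; written files count against the container's memory limit
      sizeLimit: 64Mi              # assumed upper bound on the volume's usage
```

A memory-backed emptyDir is faster, but it is lost on a node reboot as well as on Pod deletion. It suits scratch data only.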

4. hostPath Demo

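On every node, create the /k8sdata directory and a test file in it. This is needed on all nodes because the Pod may be scheduled onto any of them.

```bash
mkdir /k8sdata
echo "hello" > /k8sdata/testfile
```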

Create hostPath.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pod
spec:
  containers:
  - image: nginx
    name: test-container
    volumeMounts:
    - mountPath: /test
      name: test-volume
  volumes:
  - name: test-volume
    hostPath:
      path: /k8sdata
```

This mounts the host's /k8sdata directory at /test inside the Pod.

Create the Pod:

```bash
kubectl create -f hostPath.yaml
```

Exec into the Pod to take a look.
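With the manifest above, hostPath performs no check on /k8sdata before mounting. If /k8sdata was forgotten on some node, the Pod can still start there, possibly with an empty directory. The optional `hostPath.type` field makes the requirement explicit. Below is a sketch of the same Pod with `type: Directory`; the Pod name `test-pod-checked` is a hypothetical choice.

```yaml
# Hypothetical variant of hostPath.yaml that refuses to start without /k8sdata
apiVersion: v1
kind: Pod
metadata:
  name: test-pod-checked           # hypothetical name
spec:
  containers:
  - image: nginx
    name: test-container
    volumeMounts:
    - mountPath: /test
      name: test-volume
  volumes:
  - name: test-volume
    hostPath:
      path: /k8sdata
      type: Directory              # a directory must already exist at this path on the node
```

If you would rather have the kubelet create the directory when it is missing, `type: DirectoryOrCreate` is the alternative. In that case the test file will of course not be there.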

5. NFS Demo

Install NFS on a node outside the cluster. Here 192.168.117.90 is used as the NFS server; run the following commands on it:

```bash
yum install -y rpcbind
yum install -y nfs-utils
echo "/nfs *(rw,no_root_squash)" >> /etc/exports
mkdir /nfs
echo "hello nfs" > /nfs/index.html
```

Start NFS:

```bash
systemctl restart rpcbind
systemctl status rpcbind
systemctl enable rpcbind
systemctl restart nfs
systemctl status nfs
systemctl enable nfs
```

在master上创建nginx-nfs.ymal文件

cat nginx-nfs.ymal

apiVersion: v1

kind: ReplicationController

metadata:

name: nginx

spec:

replicas: 1

selector:

app: web01

template:

metadata:

name: nginx

labels:

app: web01

spec:

containers:

- name: nginx

image: nginx

ports:

- containerPort: 80

volumeMounts:

- mountPath: /usr/share/nginx/html

readOnly: false

name: nginx-data

volumes:

- name: nginx-data

nfs:

server: 192.168.117.90

path: "/nfs"

创建Pod

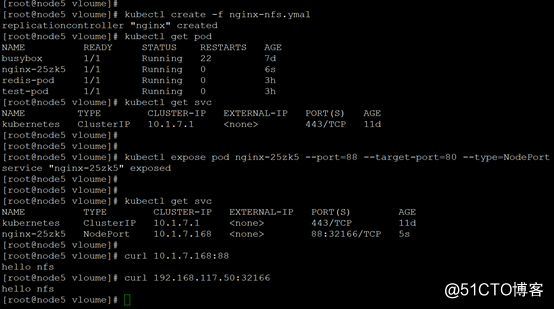

kubectl create -f nginx-nfs.ymal

Verify:

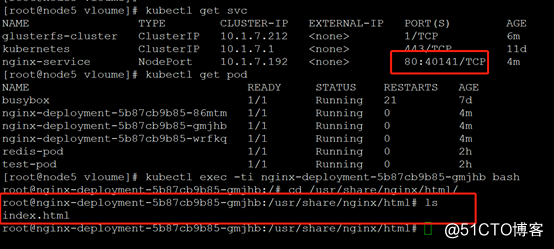

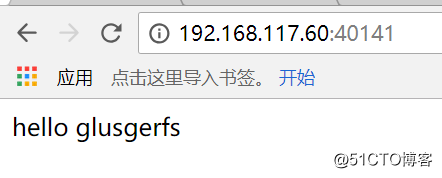
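The introduction notes that data on a PV outlives Pods. A common alternative is to expose the same NFS export as a PersistentVolume (PV) and let the workload claim it through a PersistentVolumeClaim (PVC), instead of writing the server address into every Pod spec. Below is a minimal sketch. The names `nfs-pv`/`nfs-pvc`, the 1Gi size and the empty `storageClassName` (static binding, no provisioner) are assumptions.

```yaml
# Hypothetical static PV/PVC pair for the NFS export used above
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv                     # hypothetical name
spec:
  capacity:
    storage: 1Gi                   # assumed size; used for matching, not enforced by NFS
  accessModes:
  - ReadWriteMany                  # NFS can be mounted read-write from many nodes
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""             # no dynamic provisioning
  nfs:
    server: 192.168.117.90
    path: /nfs
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc                    # hypothetical name
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: ""
  volumeName: nfs-pv               # bind explicitly to the PV above
  resources:
    requests:
      storage: 1Gi
```

In nginx-nfs.yaml, the `nfs:` block under `volumes` would then be replaced by a reference to the claim:

```yaml
      volumes:
      - name: nginx-data
        persistentVolumeClaim:
          claimName: nfs-pvc       # hypothetical claim defined above
```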

6. GlusterFS Demo

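Deploy GlusterFS on two additional machines. Note that the in-tree glusterfs volume plugin used below was deprecated in Kubernetes v1.25 and removed in v1.26. This demo therefore needs a cluster older than v1.26.

Here 192.168.117.90 and 192.168.117.100 serve as node9 and node10 for GlusterFS. The two machines must be able to resolve each other's hostnames, for example through /etc/hosts.

Run the following on both node9 and node10: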

```bash
yum install -y epel-release
yum install -y centos-release-gluster
yum install -y glusterfs-server
systemctl restart glusterd
systemctl status glusterd
systemctl enable glusterd
mkdir /data
echo "hello glusterfs" > /data/index.html
```

Run the following on node9:

```bash
gluster peer probe node10
gluster peer probe node9
gluster peer status
gluster volume create gv0 replica 2 node9:/data node10:/data force
gluster volume start gv0
gluster volume info
```

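The `force` flag is needed because the bricks (/data) sit on the root partition, which `gluster volume create` rejects by default. GlusterFS is now deployed; next, test it from Kubernetes. Every Kubernetes node also needs the GlusterFS client (the glusterfs-fuse package) so that the kubelet can mount the volume.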

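On the master, create glusterfs-endpoints.json. It lists the two Gluster servers. The `port` value is only a placeholder required by the Endpoints schema; the glusterfs volume plugin uses just the IP addresses.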

```json
{
  "kind": "Endpoints",
  "apiVersion": "v1",
  "metadata": {
    "name": "glusterfs-cluster"
  },
  "subsets": [
    {
      "addresses": [
        { "ip": "192.168.117.90" }
      ],
      "ports": [
        { "port": 1 }
      ]
    },
    {
      "addresses": [
        { "ip": "192.168.117.100" }
      ],
      "ports": [
        { "port": 1 }
      ]
    }
  ]
}
```

Create it:

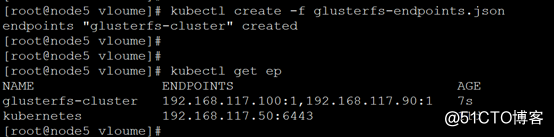

```bash
kubectl create -f glusterfs-endpoints.json
```

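On the master, create glusterfs-service.json. This Service has the same name as the Endpoints object and no selector, which keeps the manually created Endpoints object persistent: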

```json
{
  "kind": "Service",
  "apiVersion": "v1",
  "metadata": {
    "name": "glusterfs-cluster"
  },
  "spec": {
    "ports": [
      { "port": 1 }
    ]
  }
}
```

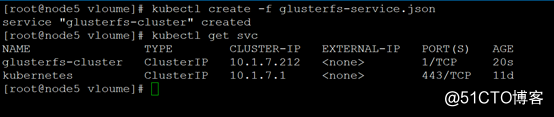

```bash
kubectl create -f glusterfs-service.json
```

On the master, create nginx-deployment.yaml:

```yaml
# apps/v1 replaces extensions/v1beta1, which was removed for Deployments in Kubernetes 1.16
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  # apps/v1 requires an explicit selector that matches the template labels
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: glusterfsvol
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      volumes:
      - name: glusterfsvol
        glusterfs:
          endpoints: glusterfs-cluster   # the Endpoints object created above
          path: gv0                      # the Gluster volume name
          readOnly: false
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  type: NodePort
```

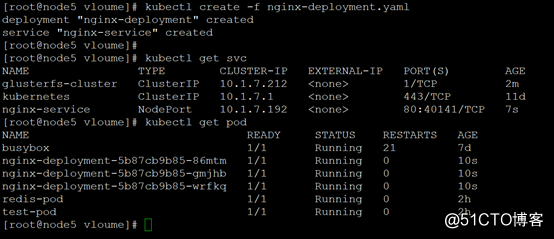

```bash
kubectl create -f nginx-deployment.yaml
```

Verify:
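Besides going through the NodePort Service, the mount itself can be checked with a throwaway Pod. The Pod below mounts gv0 read-only, lists it and prints index.html once, then exits. It is a sketch: the Pod name `glusterfs-check`, the busybox image and the /mnt/gv0 mount path are assumptions.

```yaml
# Hypothetical one-shot Pod for checking the Gluster volume directly
apiVersion: v1
kind: Pod
metadata:
  name: glusterfs-check            # hypothetical name
spec:
  restartPolicy: Never             # run the command once, do not restart
  containers:
  - name: check
    image: busybox
    # List the volume and print the test page as seen through GlusterFS
    command: ["sh", "-c", "ls -l /mnt/gv0 && cat /mnt/gv0/index.html"]
    volumeMounts:
    - name: glusterfsvol
      mountPath: /mnt/gv0
      readOnly: true
  volumes:
  - name: glusterfsvol
    glusterfs:
      endpoints: glusterfs-cluster # same Endpoints object as the Deployment uses
      path: gv0
      readOnly: true
```

Once the Pod has completed, `kubectl logs glusterfs-check` shows the directory listing and file contents. If the Pod stays in `ContainerCreating`, `kubectl describe pod glusterfs-check` usually reports the mount error. A missing GlusterFS client on the node is a common cause.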