Running tests from the pytest command line

    # test_two.py
    from collections import namedtuple

    Task = namedtuple('Task', ['summary', 'owner', 'done', 'id'])
    # Setting __new__.__defaults__ gives every Task field a default value
    Task.__new__.__defaults__ = (None, None, False, None)


    def test_default():
        """
        With no arguments, Task() falls back to the defaults set in
        Task.__new__.__defaults__ = (None, None, False, None)
        """
        t1 = Task()
        t2 = Task(None, None, False, None)
        assert t1 == t2


    def test_member_access():
        """
        Access the members of the object by field name
        """
        t = Task('buy milk', 'brian')
        assert t.summary == 'buy milk'
        assert t.owner == 'brian'
        assert (t.done, t.id) == (False, None)


    def test_asdict():
        """
        _asdict() returns a dictionary
        """
        t_task = Task('do something', 'okken', True, 21)
        t_dict = t_task._asdict()
        expected_dict = {'summary': 'do something',
                         'owner': 'okken',
                         'done': True,
                         'id': 21}
        assert t_dict == expected_dict


    def test_replace():
        """
        _replace() returns a copy with the given members replaced
        """
        t_before_replace = Task('finish book', 'brian', False)
        t_after_replace = t_before_replace._replace(id=10, done=True)
        t_expected = Task('finish book', 'brian', True, 10)
        assert t_after_replace == t_expected

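pytest execution rules

The pytest command accepts options and file or directory arguments. With no arguments, pytest looks for test files in the current directory and its subdirectories and runs the tests it finds. Given one or more file names or directories, pytest searches each of them in turn, recursing into every subdirectory. In either case it only collects files named test_*.py or *_test.py, and inside those files only the functions and methods whose names start with test_.

The process by which pytest finds test files and test cases is called test discovery. Code that follows these naming rules will be discovered:

- Test files should be named test_(something).py or (something)_test.py

- Test functions and test class methods should be named test_(something)

- Test classes should be named Test(something)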
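The naming rules above can be sketched in a few lines of Python. This is only an illustration of the default conventions, not pytest's actual collector; the helper names `looks_like_test_file`, `looks_like_test_function` and `looks_like_test_class` are made up for this example, and a project can change the real patterns through the `python_files`, `python_classes` and `python_functions` ini options.

```python
from fnmatch import fnmatchcase

# Default file-name patterns described above (assumed defaults; a project
# may override them with the python_files ini option).
FILE_PATTERNS = ("test_*.py", "*_test.py")


def looks_like_test_file(filename: str) -> bool:
    """Return True if the file name matches one of the default patterns."""
    return any(fnmatchcase(filename, pattern) for pattern in FILE_PATTERNS)


def looks_like_test_function(name: str) -> bool:
    """Test functions and test methods are expected to start with 'test_'."""
    return name.startswith("test_")


def looks_like_test_class(name: str) -> bool:
    """Test classes are expected to start with 'Test'."""
    return name.startswith("Test")
```

Under these rules `test_two.py` and `two_test.py` would be picked up, while a helper module such as `two.py` would be ignored.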

Running test_two.py, the file that holds the namedtuple tests at the top of this article, produces:

    D:\PythonPrograms\PythonPytest\TestScripts>pytest test_two.py
    ============================= test session starts =============================
    platform win32 -- Python 3.7.2, pytest-4.0.2, py-1.8.0, pluggy-0.12.0
    rootdir: D:\PythonPrograms\PythonPytest\TestScripts, inifile:
    plugins: allure-adaptor-1.7.10
    collected 4 items

    test_two.py ....                                                         [100%]

    ========================== 4 passed in 0.04 seconds ===========================

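- The first line of the session shows the operating system, the Python version and the pytest version: platform win32 -- Python 3.7.2, pytest-4.0.2, py-1.8.0, pluggy-0.12.0

- The second line shows the starting directory of the search (rootdir) and the configuration file. This example has no configuration file, so inifile is empty: rootdir: D:\PythonPrograms\PythonPytest\TestScripts, inifile:

- The third line lists the installed pytest plugins

- The fourth line, collected 4 items, means that 4 test functions were found.

- test_two.py .... shows the test file name followed by one dot per passing test. Besides the dot, the other outcomes are failure, error (an exception raised outside the test body, for example in a fixture), skip, xfail (expected to fail and did fail) and xpass (expected to fail but passed, so the expectation was wrong). They are shown as F, E, s, x and X respectively

- 4 passed in 0.04 seconds is the overall result and the execution time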
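As a reading aid, the progress characters listed above can be decoded with a small lookup table. This is a sketch for illustration only; `OUTCOMES` and `summarize` are names invented here and are not part of pytest.

```python
from collections import Counter

# One-character progress markers and the outcome each one stands for,
# as described in the list above.
OUTCOMES = {
    ".": "passed",
    "F": "failed",
    "E": "error",
    "s": "skipped",
    "x": "xfailed",
    "X": "xpassed",
}


def summarize(progress: str) -> dict:
    """Count the outcomes in a progress string such as '...F..F'."""
    return dict(Counter(OUTCOMES[char] for char in progress))
```

For the line `test_two.py ....` the progress string is `....`, which decodes to four passed tests.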

Running a single test case

Use the command pytest -v path/file_name::test_function_name. The result looks like this:

    D:\PythonPrograms\Python_Pytest\TestScripts>pytest -v test_two.py::test_replace
    ============================= test session starts =============================
    platform win32 -- Python 3.7.2, pytest-4.0.2, py-1.8.0, pluggy-0.12.0 -- c:\python37\python.exe
    cachedir: .pytest_cache
    rootdir: D:\PythonPrograms\Python_Pytest\TestScripts, inifile:
    plugins: allure-adaptor-1.7.10
    collected 1 item

    test_two.py::test_replace PASSED                                         [100%]

    ========================== 1 passed in 0.04 seconds ===========================

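The command-line rules are as follows.

Run one test function in a module:

    pytest test_mod.py::test_func

Run one test method of a class in a module:

    pytest test_mod.py::TestClass::test_method

Run a single test module:

    pytest test_module.py

Run all tests under a directory:

    pytest test/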
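The `::` notation used in these commands is simply the file path joined with the class and function names. The sketch below builds such targets and an argument list that could be handed to `subprocess.run`. The helpers `node_id` and `pytest_command` are hypothetical names for this example; `python -m pytest` is used as the launcher on the assumption that pytest is installed for the current interpreter.

```python
import sys


def node_id(path: str, *names: str) -> str:
    """Join a file path with class/function names using '::'."""
    return "::".join([path, *names])


def pytest_command(*targets: str, verbose: bool = False) -> list:
    """Build an argv list that runs pytest on the given targets."""
    argv = [sys.executable, "-m", "pytest"]
    if verbose:
        argv.append("-v")
    argv.extend(targets)
    return argv


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch has no
    # side effects; pass the list to subprocess.run() to execute it.
    print(pytest_command(node_id("test_two.py", "test_replace"), verbose=True))
```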

The --collect-only option

Before running a batch of tests, we often want to know which tests will be run and whether that matches our expectation. The --collect-only option covers this case:

    D:\PythonPrograms\Python_Pytest\TestScripts>pytest --collect-only
    ============================= test session starts =============================
    platform win32 -- Python 3.7.2, pytest-4.0.2, py-1.8.0, pluggy-0.12.0
    rootdir: D:\PythonPrograms\Python_Pytest\TestScripts, inifile:
    plugins: allure-adaptor-1.7.10
    collected 17 items
    <Package 'D:\\PythonPrograms\\Python_Pytest\\TestScripts'>
      <Module 'test_asserts.py'>
        <Function 'test_add'>
        <Function 'test_add2'>
        <Function 'test_add3'>
        <Function 'test_add4'>
        <Function 'test_in'>
        <Function 'test_not_in'>
        <Function 'test_true'>
      <Module 'test_fixture1.py'>
        <Function 'test_numbers_3_4'>
        <Function 'test_strings_a_3'>
      <Module 'test_fixture2.py'>
        <Class 'TestUM'>
          <Function 'test_numbers_5_6'>
          <Function 'test_strings_b_2'>
      <Module 'test_one.py'>
        <Function 'test_equal'>
        <Function 'test_not_equal'>
      <Module 'test_two.py'>
        <Function 'test_default'>
        <Function 'test_member_access'>
        <Function 'test_asdict'>
        <Function 'test_replace'>

    ======================== no tests ran in 0.09 seconds =========================

The -k option

This option selects the tests to run with an expression whose names are matched as substrings of the test names. It is handy when a test name is unique, or when several test names share a prefix or suffix:

    D:\PythonPrograms\Python_Pytest\TestScripts>pytest -k "asdict or default" --collect-only
    ============================= test session starts =============================
    platform win32 -- Python 3.7.2, pytest-4.0.2, py-1.8.0, pluggy-0.12.0
    rootdir: D:\PythonPrograms\Python_Pytest\TestScripts, inifile:
    plugins: allure-adaptor-1.7.10
    collected 17 items / 15 deselected
    <Package 'D:\\PythonPrograms\\Python_Pytest\\TestScripts'>
      <Module 'test_two.py'>
        <Function 'test_default'>
        <Function 'test_asdict'>

    ======================== 15 deselected in 0.06 seconds ========================

The result shows that combining -k with --collect-only previews which tests the expression will select.

Removing --collect-only from the command line and keeping only -k then runs test_default and test_asdict:

    D:\PythonPrograms\Python_Pytest\TestScripts>pytest -k "asdict or default"
    ============================= test session starts =============================
    platform win32 -- Python 3.7.2, pytest-4.0.2, py-1.8.0, pluggy-0.12.0
    rootdir: D:\PythonPrograms\Python_Pytest\TestScripts, inifile:
    plugins: allure-adaptor-1.7.10
    collected 17 items / 15 deselected

    test_two.py ..                                                           [100%]

    =================== 2 passed, 15 deselected in 0.07 seconds ===================

With a little care in naming tests, -k can run a whole family of test cases at once. The expression may contain and, or and not.
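To make the substring matching concrete, here is a deliberately simplified sketch of how an expression like `asdict or default` could be evaluated against a test name. It is not pytest's implementation (real matching also considers class names, extra keywords and other details); the function name `matches` is an assumption of this example.

```python
import re

OPERATORS = {"and", "or", "not"}


def matches(expression: str, test_name: str) -> bool:
    """Evaluate a -k style expression against a single test name.

    Every word that is not an operator is replaced by True or False,
    depending on whether it occurs as a substring of test_name.
    """
    def substitute(match):
        word = match.group(0)
        if word in OPERATORS:
            return word
        return str(word in test_name)

    boolean_expr = re.sub(r"[A-Za-z0-9_]+", substitute, expression)
    # Only the literals True/False, the boolean operators and parentheses
    # remain, so evaluating without builtins is enough for this sketch.
    return bool(eval(boolean_expr, {"__builtins__": {}}, {}))


if __name__ == "__main__":
    names = ["test_default", "test_member_access", "test_asdict", "test_replace"]
    print([name for name in names if matches("asdict or default", name)])
```

Applied to the four tests in test_two.py, the expression keeps test_default and test_asdict, which agrees with the transcript above.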

The -m option

Marks label tests and group them. The -m option then runs only the tests that carry a given mark, which is how a chosen set of tests is executed. The code below adds a mark to two of the earlier test functions:

    import pytest  # needed at the top of test_two.py for the marks below


    @pytest.mark.run_these_cases
    def test_member_access():
        """
        Access the members of the object by field name
        """
        t = Task('buy milk', 'brian')
        assert t.summary == 'buy milk'
        assert t.owner == 'brian'
        assert (t.done, t.id) == (False, None)


    @pytest.mark.run_these_cases
    def test_asdict():
        """
        _asdict() returns a dictionary
        """
        t_task = Task('do something', 'okken', True, 21)
        t_dict = t_task._asdict()
        expected_dict = {'summary': 'do something',
                         'owner': 'okken',
                         'done': True,
                         'id': 21}
        assert t_dict == expected_dict

Run the command pytest -v -m run_these_cases. The result:

    D:\PythonPrograms\Python_Pytest\TestScripts>pytest -v -m run_these_cases
    ============================= test session starts =============================
    platform win32 -- Python 3.7.2, pytest-4.0.2, py-1.8.0, pluggy-0.12.0 -- c:\python37\python.exe
    cachedir: .pytest_cache
    rootdir: D:\PythonPrograms\Python_Pytest\TestScripts, inifile:
    plugins: allure-adaptor-1.7.10
    collected 17 items / 15 deselected

    test_two.py::test_member_access PASSED                                   [ 50%]
    test_two.py::test_asdict PASSED                                          [100%]

    =================== 2 passed, 15 deselected in 0.07 seconds ===================

The -m option also accepts an expression over several mark names, for example -m "mark1 and mark2", -m "mark1 and not mark2" or -m "mark1 or mark2".
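Several of the later transcripts in this article print a PytestUnknownMarkWarning for run_these_cases and suggest registering custom marks. One way to do that, sketched here under the assumption that a conftest.py file sits next to test_two.py, is to add the marker description in the `pytest_configure` hook. The description text is made up for this example.

```python
# conftest.py (assumed location: the directory that contains test_two.py)


def pytest_configure(config):
    """Register the custom mark so pytest no longer reports it as unknown."""
    config.addinivalue_line(
        "markers",
        "run_these_cases: tests selected with `pytest -m run_these_cases`",
    )
```

Registered marks are listed by `pytest --markers`. The same registration can be done declaratively with the `markers` ini option that appears in the `pytest --help` output at the end of this article.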

The -x option

pytest runs every test it discovers. When an assertion fails in a test function, or the test raises an unexpected exception, that test stops; pytest marks it as failed and moves on to the next test. When debugging, however, we often want the whole session to stop at the first failure. The -x option does exactly that:

    E:\Programs\Python\Python_Pytest\TestScripts>pytest -x
    ============================= test session starts =============================
    platform win32 -- Python 3.7.3, pytest-4.5.0, py-1.8.0, pluggy-0.11.0
    rootdir: E:\Programs\Python\Python_Pytest\TestScripts
    plugins: allure-pytest-2.6.3
    collected 17 items

    test_asserts.py ...F

    ================================== FAILURES ===================================
    __________________________________ test_add4 __________________________________

        def test_add4():
    >       assert add(17,22) >= 50
    E       assert 39 >= 50
    E        +  where 39 = add(17, 22)

    test_asserts.py:34: AssertionError
    ============================== warnings summary ===============================
    c:\python37\lib\site-packages\_pytest\mark\structures.py:324
      c:\python37\lib\site-packages\_pytest\mark\structures.py:324: PytestUnknownMarkWarning: Unknown pytest.mark.run_these_cases - is this a typo?  You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/latest/mark.html
        PytestUnknownMarkWarning,

    -- Docs: https://docs.pytest.org/en/latest/warnings.html
    =============== 1 failed, 3 passed, 1 warnings in 0.41 seconds ================

The result shows that 17 tests were collected but only 4 were run: 3 passed, 1 failed, and the session stopped there.

Running again without the -x option gives:

    E:\Programs\Python\Python_Pytest\TestScripts>pytest --tb=no
    ============================= test session starts =============================
    platform win32 -- Python 3.7.3, pytest-4.5.0, py-1.8.0, pluggy-0.11.0
    rootdir: E:\Programs\Python\Python_Pytest\TestScripts
    plugins: allure-pytest-2.6.3
    collected 17 items

    test_asserts.py ...F..F                                                  [ 41%]
    test_fixture1.py ..                                                      [ 52%]
    test_fixture2.py ..                                                      [ 64%]
    test_one.py .F                                                           [ 76%]
    test_two.py ....                                                         [100%]

    ============================== warnings summary ===============================
    c:\python37\lib\site-packages\_pytest\mark\structures.py:324
      c:\python37\lib\site-packages\_pytest\mark\structures.py:324: PytestUnknownMarkWarning: Unknown pytest.mark.run_these_cases - is this a typo?  You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/latest/mark.html
        PytestUnknownMarkWarning,

    -- Docs: https://docs.pytest.org/en/latest/warnings.html
    =============== 3 failed, 14 passed, 1 warnings in 0.31 seconds ===============

This time all 17 collected tests ran: 14 passed and 3 failed. The --tb=no option turns off the traceback output; use it when only the results matter and the detailed error output is not needed.

The --maxfail=num option

-x stops the whole session at the first failure. What if we want to stop only after a certain number of failures? The --maxfail option covers this case:

    E:\Programs\Python\Python_Pytest\TestScripts>pytest --maxfail=2 --tb=no
    ============================= test session starts =============================
    platform win32 -- Python 3.7.3, pytest-4.5.0, py-1.8.0, pluggy-0.11.0
    rootdir: E:\Programs\Python\Python_Pytest\TestScripts
    plugins: allure-pytest-2.6.3
    collected 17 items

    test_asserts.py ...F..F

    ============================== warnings summary ===============================
    c:\python37\lib\site-packages\_pytest\mark\structures.py:324
      c:\python37\lib\site-packages\_pytest\mark\structures.py:324: PytestUnknownMarkWarning: Unknown pytest.mark.run_these_cases - is this a typo?  You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/latest/mark.html
        PytestUnknownMarkWarning,

    -- Docs: https://docs.pytest.org/en/latest/warnings.html
    =============== 2 failed, 5 passed, 1 warnings in 0.22 seconds ================

The result shows that 17 tests were collected and 7 were run; the session stopped as soon as the number of failures reached 2.
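The stopping rule behind -x and --maxfail can be modelled as a loop that counts failures. The sketch below is a toy model rather than pytest code: `run_with_maxfail` and `SESSION` are names invented here, and `SESSION` simply replays the pass/fail sequence of the full 17-test run shown earlier.

```python
def run_with_maxfail(outcomes: str, maxfail: int = 0) -> str:
    """Return the outcomes that would actually be executed.

    outcomes is a progress string ('.' = pass, 'F' = fail).
    maxfail=0 means no limit; maxfail=1 behaves like -x.
    """
    executed = []
    failures = 0
    for outcome in outcomes:
        executed.append(outcome)
        if outcome == "F":
            failures += 1
            if maxfail and failures >= maxfail:
                break  # stop the whole session
    return "".join(executed)


# Outcome sequence of the full run: test_asserts.py, test_fixture1.py,
# test_fixture2.py, test_one.py, test_two.py.
SESSION = "...F..F" + ".." + ".." + ".F" + "...."

if __name__ == "__main__":
    print(run_with_maxfail(SESSION, maxfail=1))  # like -x
    print(run_with_maxfail(SESSION, maxfail=2))  # like --maxfail=2
```

With a limit of 1 the model stops after `...F`, and with a limit of 2 after `...F..F`, matching the two transcripts.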

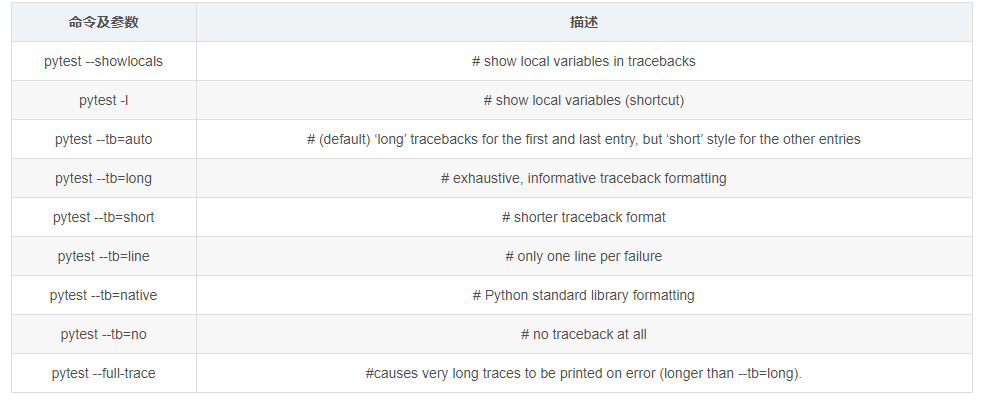

--tb=

-v (--verbose)

-v, --verbose: increase verbosity.

-q (--quiet)

-q, --quiet: decrease verbosity.

--lf (--last-failed)

--lf, --last-failed: rerun only the tests that failed at the last run (or all if none failed)

    E:\Programs\Python\Python_Pytest\TestScripts>pytest --lf --tb=no
    ============================= test session starts =============================
    platform win32 -- Python 3.7.3, pytest-4.5.0, py-1.8.0, pluggy-0.11.0
    rootdir: E:\Programs\Python\Python_Pytest\TestScripts
    plugins: allure-pytest-2.6.3
    collected 9 items / 6 deselected / 3 selected
    run-last-failure: rerun previous 3 failures (skipped 7 files)

    test_asserts.py FF                                                       [ 66%]
    test_one.py F                                                            [100%]

    ==================== 3 failed, 6 deselected in 0.15 seconds ====================

--ff (--failed-first)

--ff, --failed-first: run all tests but run the last failures first. This may re-order tests and thus lead to repeated fixture setup/teardown

    E:\Programs\Python\Python_Pytest\TestScripts>pytest --ff --tb=no
    ============================= test session starts =============================
    platform win32 -- Python 3.7.3, pytest-4.5.0, py-1.8.0, pluggy-0.11.0
    rootdir: E:\Programs\Python\Python_Pytest\TestScripts
    plugins: allure-pytest-2.6.3
    collected 17 items
    run-last-failure: rerun previous 3 failures first

    test_asserts.py FF                                                       [ 11%]
    test_one.py F                                                            [ 17%]
    test_asserts.py .....                                                    [ 47%]
    test_fixture1.py ..                                                      [ 58%]
    test_fixture2.py ..                                                      [ 70%]
    test_one.py .                                                            [ 76%]
    test_two.py ....                                                         [100%]

    ============================== warnings summary ===============================
    c:\python37\lib\site-packages\_pytest\mark\structures.py:324
      c:\python37\lib\site-packages\_pytest\mark\structures.py:324: PytestUnknownMarkWarning: Unknown pytest.mark.run_these_cases - is this a typo?  You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/latest/mark.html
        PytestUnknownMarkWarning,

    -- Docs: https://docs.pytest.org/en/latest/warnings.html
    =============== 3 failed, 14 passed, 1 warnings in 0.25 seconds ===============
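The difference between --lf and --ff comes down to selection versus reordering. The following sketch models both on plain lists of test IDs. It is an illustration only: the function names `last_failed` and `failed_first` are assumptions, and pytest itself remembers the previous failures in its cache directory rather than taking them as an argument.

```python
def last_failed(collected, previous_failures):
    """Model of --lf: keep only the tests that failed last time.

    If nothing failed previously, all collected tests are run.
    """
    selected = [test for test in collected if test in previous_failures]
    return selected or list(collected)


def failed_first(collected, previous_failures):
    """Model of --ff: run everything, previous failures first."""
    failed = [test for test in collected if test in previous_failures]
    rest = [test for test in collected if test not in previous_failures]
    return failed + rest


if __name__ == "__main__":
    # A shortened, illustrative selection of the tests in this article.
    collected = [
        "test_asserts.py::test_add3",
        "test_asserts.py::test_add4",
        "test_one.py::test_equal",
        "test_one.py::test_not_equal",
    ]
    failures = {"test_asserts.py::test_add4", "test_one.py::test_not_equal"}
    print(last_failed(collected, failures))
    print(failed_first(collected, failures))
```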

-s and --capture=method

-s is equivalent to --capture=no. With capturing turned off, anything the tests and fixtures print appears directly in the console, interleaved with the progress dots:

    (venv) D:\Python_Pytest\TestScripts>pytest -s
    ============================= test session starts =============================
    platform win32 -- Python 3.7.3, pytest-4.0.2, py-1.8.0, pluggy-0.12.0
    rootdir: D:\Python_Pytest\TestScripts, inifile:
    plugins: allure-adaptor-1.7.10
    collected 18 items

    test_asserts.py ...F...F
    test_fixture1.py setup_module================>
    setup_function------>
    test_numbers_3_4
    .teardown_function--->
    setup_function------>
    test_strings_a_3
    .teardown_function--->
    teardown_module=============>

    test_fixture2.py setup_class=========>
    setup_method----->>
    setup----->
    test_numbers_5_6
    .teardown-->
    teardown_method-->>
    setup_method----->>
    setup----->
    test_strings_b_2
    .teardown-->
    teardown_method-->>
    teardown_class=========>

    test_one.py .F
    test_two.py ....

    ================================== FAILURES ===================================
    __________________________________ test_add4 __________________________________

        @pytest.mark.aaaa
        def test_add4():
    >       assert add(17, 22) >= 50
    E       assert 39 >= 50
    E        +  where 39 = add(17, 22)

    test_asserts.py:36: AssertionError
    ________________________________ test_not_true ________________________________

        def test_not_true():
    >       assert not is_prime(7)
    E       assert not True
    E        +  where True = is_prime(7)

    test_asserts.py:70: AssertionError
    _______________________________ test_not_equal ________________________________

        def test_not_equal():
    >       assert (1, 2, 3) == (3, 2, 1)
    E       assert (1, 2, 3) == (3, 2, 1)
    E         At index 0 diff: 1 != 3
    E         Use -v to get the full diff

    test_one.py:9: AssertionError
    ===================== 3 failed, 15 passed in 0.15 seconds =====================

--capture=method: per-test capturing method: one of fd|sys|no.
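The printed lines in the transcript come from print calls inside the tests and their setup and teardown functions. The article does not show test_fixture1.py, so the module below is a hypothetical reconstruction of a small part of it: the function names and printed markers follow the transcript, but the test body is an assumption.

```python
# Hypothetical excerpt in the style of test_fixture1.py.
# setup_function / teardown_function are pytest's module-level
# xunit-style hooks; they run before and after each test function.


def setup_function(function):
    print("setup_function------>")


def teardown_function(function):
    print("teardown_function--->")


def test_numbers_3_4():
    print("test_numbers_3_4")
    assert 3 * 4 == 12  # assumed body, not taken from the article
```

Run normally, pytest captures these prints and only shows them for failing tests. Run with `pytest -s`, they appear in the console as in the transcript.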

-l (--showlocals)

-l, --showlocals show locals in tracebacks (disabled by default).

--durations=N

--durations=N: show N slowest setup/test durations (N=0 for all).

This option is mostly used when tuning slow test code. It lists the N slowest test phases; with N=0 it lists all of them, slowest first. Note that the option is spelled --durations. The transcripts below use the shortened form --duration, which this pytest version accepted as an unambiguous prefix; newer pytest releases may reject abbreviated option names, so prefer the full spelling.

    (venv) D:\Python_Pytest\TestScripts>pytest --duration=5
    ============================= test session starts =============================
    platform win32 -- Python 3.7.3, pytest-4.0.2, py-1.8.0, pluggy-0.12.0
    rootdir: D:\Python_Pytest\TestScripts, inifile:
    plugins: allure-adaptor-1.7.10
    collected 18 items

    test_asserts.py ...F...F                                                 [ 44%]
    test_fixture1.py ..                                                      [ 55%]
    test_fixture2.py ..                                                      [ 66%]
    test_one.py .F                                                           [ 77%]
    test_two.py ....                                                         [100%]

    ================================== FAILURES ===================================
    __________________________________ test_add4 __________________________________

        @pytest.mark.aaaa
        def test_add4():
    >       assert add(17, 22) >= 50
    E       assert 39 >= 50
    E        +  where 39 = add(17, 22)

    test_asserts.py:36: AssertionError
    ________________________________ test_not_true ________________________________

        def test_not_true():
    >       assert not is_prime(7)
    E       assert not True
    E        +  where True = is_prime(7)

    test_asserts.py:70: AssertionError
    _______________________________ test_not_equal ________________________________

        def test_not_equal():
    >       assert (1, 2, 3) == (3, 2, 1)
    E       assert (1, 2, 3) == (3, 2, 1)
    E         At index 0 diff: 1 != 3
    E         Use -v to get the full diff

    test_one.py:9: AssertionError
    ========================== slowest 5 test durations ===========================
    0.01s call     test_asserts.py::test_add4

    (0.00 durations hidden.  Use -vv to show these durations.)
    ===================== 3 failed, 15 passed in 0.27 seconds =====================

The result contains the hint (0.00 durations hidden. Use -vv to show these durations.). Adding -vv gives:

    (venv) D:\Python_Pytest\TestScripts>pytest --duration=5 -vv
    ============================= test session starts =============================
    platform win32 -- Python 3.7.3, pytest-4.0.2, py-1.8.0, pluggy-0.12.0 -- c:\python37\python.exe
    cachedir: .pytest_cache
    rootdir: D:\Python_Pytest\TestScripts, inifile:
    plugins: allure-adaptor-1.7.10
    collected 18 items

    test_asserts.py::test_add PASSED                                         [  5%]
    test_asserts.py::test_add2 PASSED                                        [ 11%]
    test_asserts.py::test_add3 PASSED                                        [ 16%]
    test_asserts.py::test_add4 FAILED                                        [ 22%]
    test_asserts.py::test_in PASSED                                          [ 27%]
    test_asserts.py::test_not_in PASSED                                      [ 33%]
    test_asserts.py::test_true PASSED                                        [ 38%]
    test_asserts.py::test_not_true FAILED                                    [ 44%]
    test_fixture1.py::test_numbers_3_4 PASSED                                [ 50%]
    test_fixture1.py::test_strings_a_3 PASSED                                [ 55%]
    test_fixture2.py::TestUM::test_numbers_5_6 PASSED                        [ 61%]
    test_fixture2.py::TestUM::test_strings_b_2 PASSED                        [ 66%]
    test_one.py::test_equal PASSED                                           [ 72%]
    test_one.py::test_not_equal FAILED                                       [ 77%]
    test_two.py::test_default PASSED                                         [ 83%]
    test_two.py::test_member_access PASSED                                   [ 88%]
    test_two.py::test_asdict PASSED                                          [ 94%]
    test_two.py::test_replace PASSED                                         [100%]

    ================================== FAILURES ===================================
    __________________________________ test_add4 __________________________________

        @pytest.mark.aaaa
        def test_add4():
    >       assert add(17, 22) >= 50
    E       assert 39 >= 50
    E        +  where 39 = add(17, 22)

    test_asserts.py:36: AssertionError
    ________________________________ test_not_true ________________________________

        def test_not_true():
    >       assert not is_prime(7)
    E       assert not True
    E        +  where True = is_prime(7)

    test_asserts.py:70: AssertionError
    _______________________________ test_not_equal ________________________________

        def test_not_equal():
    >       assert (1, 2, 3) == (3, 2, 1)
    E       assert (1, 2, 3) == (3, 2, 1)
    E         At index 0 diff: 1 != 3
    E         Full diff:
    E         - (1, 2, 3)
    E         ?  ^     ^
    E         + (3, 2, 1)
    E         ?  ^     ^

    test_one.py:9: AssertionError
    ========================== slowest 5 test durations ===========================
    0.00s setup    test_one.py::test_not_equal
    0.00s setup    test_fixture1.py::test_strings_a_3
    0.00s setup    test_asserts.py::test_add3
    0.00s call     test_fixture2.py::TestUM::test_strings_b_2
    0.00s call     test_asserts.py::test_in
    ===================== 3 failed, 15 passed in 0.16 seconds =====================
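All durations in the transcripts are close to zero because the example tests do almost no work. To see the report list something meaningful, a deliberately slow test helps. The module below is a made-up example (`slow_operation`, `test_fast` and `test_slow` do not appear in the article's test suite) that sleeps for a short, arbitrary delay.

```python
import time


def slow_operation(delay: float = 0.05) -> str:
    """Stand-in for slow work such as a network call (illustrative only)."""
    time.sleep(delay)
    return "done"


def test_fast():
    assert 1 + 1 == 2


def test_slow():
    # This test should appear at the top of the slowest-durations report.
    assert slow_operation() == "done"
```

Saved under a name such as test_slow_example.py (a hypothetical file name) and run with `pytest --durations=2`, the call phase of test_slow would be expected to head the report.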

-r

Produces a short summary report at the end of the session. -r is followed by one or more characters that choose which outcomes to list, as described in the pytest --help output:

- f: failed

- E: error

- s: skipped

- x: xfailed

- X: xpassed

- p: passed

- P: passed with output

- a: all except p and P

For example, to see only the failed and skipped tests:

    (venv) E:\Python_Pytest\TestScripts>pytest -rfs
    ============================= test session starts =============================
    platform win32 -- Python 3.7.3, pytest-4.0.2, py-1.8.0, pluggy-0.12.0
    rootdir: E:\Python_Pytest\TestScripts, inifile:
    plugins: allure-adaptor-1.7.10
    collected 18 items

    test_asserts.py ...F...F                                                 [ 44%]
    test_fixture1.py ..                                                      [ 55%]
    test_fixture2.py ..                                                      [ 66%]
    test_one.py .F                                                           [ 77%]
    test_two.py ....                                                         [100%]

    ================================== FAILURES ===================================
    __________________________________ test_add4 __________________________________

        @pytest.mark.aaaa
        def test_add4():
    >       assert add(17, 22) >= 50
    E       assert 39 >= 50
    E        +  where 39 = add(17, 22)

    test_asserts.py:36: AssertionError
    ________________________________ test_not_true ________________________________

        def test_not_true():
    >       assert not is_prime(7)
    E       assert not True
    E        +  where True = is_prime(7)

    test_asserts.py:70: AssertionError
    _______________________________ test_not_equal ________________________________

        def test_not_equal():
    >       assert (1, 2, 3) == (3, 2, 1)
    E       assert (1, 2, 3) == (3, 2, 1)
    E         At index 0 diff: 1 != 3
    E         Use -v to get the full diff

    test_one.py:9: AssertionError
    =========================== short test summary info ===========================
    FAIL test_asserts.py::test_add4
    FAIL test_asserts.py::test_not_true
    FAIL test_one.py::test_not_equal
    ===================== 3 failed, 15 passed in 0.10 seconds =====================

pytest --help: more options

Running pytest --help on the command line prints the usage line, usage: pytest [options] [file_or_dir] [file_or_dir] [...], followed by the full list of options and their descriptions:

    C:\Users\Administrator>pytest --help
    usage: pytest [options] [file_or_dir] [file_or_dir] [...]

    positional arguments:
      file_or_dir

    general:
      -k EXPRESSION         only run tests which match the given substring
                            expression. An expression is a python evaluatable
                            expression where all names are substring-matched
                            against test names and their parent classes. Example:
                            -k 'test_method or test_other' matches all test
                            functions and classes whose name contains
                            'test_method' or 'test_other', while -k 'not
                            test_method' matches those that don't contain
                            'test_method' in their names. Additionally keywords
                            are matched to classes and functions containing extra
                            names in their 'extra_keyword_matches' set, as well as
                            functions which have names assigned directly to them.
      -m MARKEXPR           only run tests matching given mark expression.
                            example: -m 'mark1 and not mark2'.
      --markers             show markers (builtin, plugin and per-project ones).
      -x, --exitfirst       exit instantly on first error or failed test.
      --maxfail=num         exit after first num failures or errors.
      --strict              marks not registered in configuration file raise
                            errors.
      -c file               load configuration from `file` instead of trying to
                            locate one of the implicit configuration files.
      --continue-on-collection-errors
                            Force test execution even if collection errors occur.
      --rootdir=ROOTDIR     Define root directory for tests. Can be relative path:
                            'root_dir', './root_dir', 'root_dir/another_dir/';
                            absolute path: '/home/user/root_dir'; path with
                            variables: '$HOME/root_dir'.
      --fixtures, --funcargs
                            show available fixtures, sorted by plugin appearance
                            (fixtures with leading '_' are only shown with '-v')
      --fixtures-per-test   show fixtures per test
      --import-mode={prepend,append}
                            prepend/append to sys.path when importing test
                            modules, default is to prepend.
      --pdb                 start the interactive Python debugger on errors or
                            KeyboardInterrupt.
      --pdbcls=modulename:classname
                            start a custom interactive Python debugger on errors.
                            For example:
                            --pdbcls=IPython.terminal.debugger:TerminalPdb
      --trace               Immediately break when running each test.
      --capture=method      per-test capturing method: one of fd|sys|no.
      -s                    shortcut for --capture=no.
      --runxfail            run tests even if they are marked xfail
      --lf, --last-failed   rerun only the tests that failed at the last run (or
                            all if none failed)
      --ff, --failed-first  run all tests but run the last failures first. This
                            may re-order tests and thus lead to repeated fixture
                            setup/teardown
      --nf, --new-first     run tests from new files first, then the rest of the
                            tests sorted by file mtime
      --cache-show          show cache contents, don't perform collection or tests
      --cache-clear         remove all cache contents at start of test run.
      --lfnf={all,none}, --last-failed-no-failures={all,none}
                            change the behavior when no test failed in the last
                            run or no information about the last failures was
                            found in the cache
      --sw, --stepwise      exit on test fail and continue from last failing test
                            next time
      --stepwise-skip       ignore the first failing test but stop on the next
                            failing test
      --allure_severities=SEVERITIES_SET
                            Comma-separated list of severity names. Tests only
                            with these severities will be run. Possible values
                            are:blocker, critical, minor, normal, trivial.
      --allure_features=FEATURES_SET
                            Comma-separated list of feature names. Run tests that
                            have at least one of the specified feature labels.
      --allure_stories=STORIES_SET
                            Comma-separated list of story names. Run tests that
                            have at least one of the specified story labels.

    reporting:
      -v, --verbose         increase verbosity.
      -q, --quiet           decrease verbosity.
      --verbosity=VERBOSE   set verbosity
      -r chars              show extra test summary info as specified by chars
                            (f)ailed, (E)error, (s)skipped, (x)failed, (X)passed,
                            (p)passed, (P)passed with output, (a)all except pP.
                            Warnings are displayed at all times except when
                            --disable-warnings is set
      --disable-warnings, --disable-pytest-warnings
                            disable warnings summary
      -l, --showlocals      show locals in tracebacks (disabled by default).
      --tb=style            traceback print mode (auto/long/short/line/native/no).
      --show-capture={no,stdout,stderr,log,all}
                            Controls how captured stdout/stderr/log is shown on
                            failed tests. Default is 'all'.
      --full-trace          don't cut any tracebacks (default is to cut).
      --color=color         color terminal output (yes/no/auto).
      --durations=N         show N slowest setup/test durations (N=0 for all).
      --pastebin=mode       send failed|all info to bpaste.net pastebin service.
      --junit-xml=path      create junit-xml style report file at given path.
      --junit-prefix=str    prepend prefix to classnames in junit-xml output
      --result-log=path     DEPRECATED path for machine-readable result log.

    collection:
      --collect-only        only collect tests, don't execute them.
      --pyargs              try to interpret all arguments as python packages.
      --ignore=path         ignore path during collection (multi-allowed).
      --deselect=nodeid_prefix
                            deselect item during collection (multi-allowed).
      --confcutdir=dir      only load conftest.py's relative to specified dir.
      --noconftest          Don't load any conftest.py files.
      --keep-duplicates     Keep duplicate tests.
      --collect-in-virtualenv
                            Don't ignore tests in a local virtualenv directory
      --doctest-modules     run doctests in all .py modules
      --doctest-report={none,cdiff,ndiff,udiff,only_first_failure}
                            choose another output format for diffs on doctest
                            failure
      --doctest-glob=pat    doctests file matching pattern, default: test*.txt
      --doctest-ignore-import-errors
                            ignore doctest ImportErrors
      --doctest-continue-on-failure
                            for a given doctest, continue to run after the first
                            failure

    test session debugging and configuration:
      --basetemp=dir        base temporary directory for this test run.(warning:
                            this directory is removed if it exists)
      --version             display pytest lib version and import information.
      -h, --help            show help message and configuration info
      -p name               early-load given plugin (multi-allowed). To avoid
                            loading of plugins, use the `no:` prefix, e.g.
                            `no:doctest`.
      --trace-config        trace considerations of conftest.py files.
      --debug               store internal tracing debug information in
                            'pytestdebug.log'.
      -o OVERRIDE_INI, --override-ini=OVERRIDE_INI
                            override ini option with "option=value" style, e.g.
                            `-o xfail_strict=True -o cache_dir=cache`.
      --assert=MODE         Control assertion debugging tools. 'plain' performs no
                            assertion debugging. 'rewrite' (the default) rewrites
                            assert statements in test modules on import to provide
                            assert expression information.
      --setup-only          only setup fixtures, do not execute tests.
      --setup-show          show setup of fixtures while executing tests.
      --setup-plan          show what fixtures and tests would be executed but
                            don't execute anything.

    pytest-warnings:
      -W PYTHONWARNINGS, --pythonwarnings=PYTHONWARNINGS
                            set which warnings to report, see -W option of python
                            itself.

    logging:
      --no-print-logs       disable printing caught logs on failed tests.
      --log-level=LOG_LEVEL
                            logging level used by the logging module
      --log-format=LOG_FORMAT
                            log format as used by the logging module.
      --log-date-format=LOG_DATE_FORMAT
                            log date format as used by the logging module.
      --log-cli-level=LOG_CLI_LEVEL
                            cli logging level.
      --log-cli-format=LOG_CLI_FORMAT
                            log format as used by the logging module.
      --log-cli-date-format=LOG_CLI_DATE_FORMAT
                            log date format as used by the logging module.
      --log-file=LOG_FILE   path to a file when logging will be written to.
      --log-file-level=LOG_FILE_LEVEL
                            log file logging level.
      --log-file-format=LOG_FILE_FORMAT
                            log format as used by the logging module.
      --log-file-date-format=LOG_FILE_DATE_FORMAT
                            log date format as used by the logging module.

    reporting:
      --alluredir=DIR       Generate Allure report in the specified directory (may
                            not exist)

    [pytest] ini-options in the first pytest.ini|tox.ini|setup.cfg file found:

      markers (linelist)       markers for test functions
      empty_parameter_set_mark (string) default marker for empty parametersets
      norecursedirs (args)     directory patterns to avoid for recursion
      testpaths (args)         directories to search for tests when no files or dire
      console_output_style (string) console output: classic or with additional progr
      usefixtures (args)       list of default fixtures to be used with this project
      python_files (args)      glob-style file patterns for Python test module disco
      python_classes (args)    prefixes or glob names for Python test class discover
      python_functions (args)  prefixes or glob names for Python test function and m
      xfail_strict (bool)      default for the strict parameter of xfail markers whe
      junit_suite_name (string) Test suite name for JUnit report
      junit_logging (string)   Write captured log messages to JUnit report: one of n
      doctest_optionflags (args) option flags for doctests
      doctest_encoding (string) encoding used for doctest files
      cache_dir (string)       cache directory path.
      filterwarnings (linelist) Each line specifies a pattern for warnings.filterwar
      log_print (bool)         default value for --no-print-logs
      log_level (string)       default value for --log-level
      log_format (string)      default value for --log-format
      log_date_format (string) default value for --log-date-format
      log_cli (bool)           enable log display during test run (also known as "li
      log_cli_level (string)   default value for --log-cli-level
      log_cli_format (string)  default value for --log-cli-format
      log_cli_date_format (string) default value for --log-cli-date-format
      log_file (string)        default value for --log-file
      log_file_level (string)  default value for --log-file-level
      log_file_format (string) default value for --log-file-format
      log_file_date_format (string) default value for --log-file-date-format
      addopts (args)           extra command line options
      minversion (string)      minimally required pytest version

    environment variables:
      PYTEST_ADDOPTS           extra command line options
      PYTEST_PLUGINS           comma-separated plugins to load during startup
      PYTEST_DISABLE_PLUGIN_AUTOLOAD set to disable plugin auto-loading
      PYTEST_DEBUG             set to enable debug tracing of pytest's internals

    to see available markers type: pytest --markers
    to see available fixtures type: pytest --fixtures
    (shown according to specified file_or_dir or current dir if not specified; fixtures with leading '_' are only shown with the '-v' option