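Preface: For the past month I have been competing in the Smart Education competition hosted by Shanghai Telecom. I was lucky to get through the preliminary round, but in the second round I repeated a mistake from the preliminary round: severe overfitting dropped me from 7th on the public leaderboard to 11th on the private leaderboard. This post is a retrospective. It records a month of hard work, and it is a reminder to myself not to make the same kind of mistake again.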

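I. The Competition Task

With the development of artificial intelligence (AI), terms such as "AI + education" and "smart classroom" have entered public view. More and more schools are bringing AI assistants into the classroom, and China is gradually entering the era of "smart education". In a traditional classroom, limited time and energy mean that teachers and parents cannot keep track of both a student's learning state and academic progress, so much of the data that reflects a student's real problems and situation goes unexamined.

Participants are asked to build a model from the student information, exam knowledge-point information and total exam scores provided in the training set, and to predict the total scores of the students in the test set on the specified exams. The prediction targets are:

Preliminary round: use the exam and knowledge-point information from the last year of junior high to predict the scores of the second-to-last and third-to-last exams of the final semester.

Second round: use the exam and knowledge-point information from all 4 years of junior high to predict the score of the last exam of the final semester.

Analysis of the second-round task: there are two points to focus on here, and they are the key to a high score in the second round. Unfortunately I only grasped both of them superficially, and one badly overfitting feature blinded me.

The two key points:

1. The final semester: the knowledge points across the 4 years are not related to one another, so extracting the final semester's data and analysing it on its own might work better;

2. Knowledge points: every knowledge point belongs to a section and a category. I was too fixated on solving everything with a model and overlooked what the knowledge points could do by themselves. The second-place finisher did not score well at first either; after computing a rule-based prediction from the knowledge points and blending it with the original model, the score jumped. The lesson for me: do not ignore rules of thumb.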
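The blending step mentioned in point 2 can be sketched as a weighted average of two prediction vectors. This is a minimal illustration only: the post does not say how the second-place solution was actually blended, and the function name `blend`, the parameter `rule_weight` and the numbers are all made up for the example.

```python
import numpy as np

def blend(model_pred, rule_pred, rule_weight=0.5):
    """Weighted average of model predictions and rule-based predictions.

    rule_weight is a hypothetical knob: 0 keeps only the model, 1 keeps only the rule.
    """
    model_pred = np.asarray(model_pred, dtype=float)
    rule_pred = np.asarray(rule_pred, dtype=float)
    if model_pred.shape != rule_pred.shape:
        raise ValueError("prediction arrays must have the same shape")
    return rule_weight * rule_pred + (1.0 - rule_weight) * model_pred

# Toy numbers, not competition data
model_pred = [80.0, 65.0, 90.0]  # e.g. output of a gradient-boosting model
rule_pred = [84.0, 61.0, 90.0]   # e.g. output of a knowledge-point rule
blended = blend(model_pred, rule_pred, rule_weight=0.5)
print(blended)
```

In practice the weight would be chosen on a held-out validation split rather than on the public leaderboard.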

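II. Table Structures

1. all_knowledge.csv

Analysis: every course has knowledge points. Each knowledge point belongs to a section and a category, and has a difficulty level (complexity).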

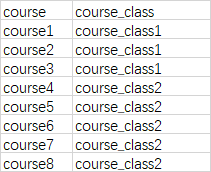

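2. course.csv

Analysis: I consider this table basically useless, because in practice course is always included as a feature anyway, which already splits the data into 8 classes.

3. course1_exams.csv…course8_exams.csv

Analysis: combined with all_knowledge.csv, this table supports many operations. The simplest is to compute a difficulty value for each exam. A bit more involved is to collapse the knowledge points of each exam into sections or categories. The most important step, though, is to work out a score for each knowledge point. I had thought of this earlier but did not know how to do it. Only afterwards did I learn that a rule of thumb was enough: simple arithmetic on the information from earlier exams produces the final-exam score directly. Painful!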
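One way such a rule of thumb could look is sketched below. It is an assumption, not the rule the winning teams used: it supposes that each K:n column holds the share of that knowledge point in an exam, estimates a student's scoring rate on each knowledge point from past exams, and sums share times rate for the target exam. The function `rule_predict` and all data are hypothetical.

```python
import pandas as pd

# Toy exam blueprint: one row per exam, one K:n column per knowledge point,
# values are the share of the exam (out of 100) given to that point.
exams = pd.DataFrame(
    {"K:0": [60, 0, 50], "K:1": [40, 100, 50]},
    index=["exam_a", "exam_b", "exam_target"],
)
# One student's known total scores on the past exams
past_scores = pd.Series({"exam_a": 80.0, "exam_b": 60.0})

def rule_predict(exams, past_scores, target_exam):
    """Predict a total score from per-knowledge-point scoring rates."""
    past = exams.loc[past_scores.index]
    # Scoring rate of each past exam (score divided by the exam's total share)
    rate = past_scores / past.sum(axis=1)
    # Mastery of a point: share-weighted mean rate over the exams that cover it
    mastery = past.mul(rate, axis=0).sum(axis=0) / past.sum(axis=0)
    # Points never examined before fall back to the student's average rate
    mastery = mastery.fillna(rate.mean())
    return float((exams.loc[target_exam] * mastery).sum())

predicted = rule_predict(exams, past_scores, "exam_target")
print(round(predicted, 2))
```

The sketch attributes an exam's overall scoring rate to every knowledge point in it, which is crude; its appeal is that it needs no model fitting at all.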

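4. exam_score.csv

Analysis: this is effectively the training set. It has only 4 fields and has to be joined with the tables above for feature engineering.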

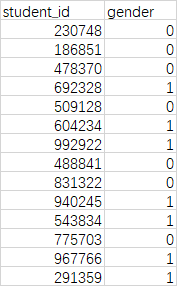

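5. gender.csv

Analysis: the students' gender table. I think it has some effect, but a very weak one, and it is also a distracting feature.

III. Some of My Code

Having written this much code, I want to keep a record of it, so that it is easy to look up when a similar problem comes along.

Some of the features below turned out to be useless, of course; focus on how the code is implemented.

1. Get the score of the most recent exam (this feature overfits badly)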

# In effect this drops the rows whose score is 0: every column in those rows becomes NaN
exam_score[exam_score.score == 0] = np.nan
# exam_score['score'] = exam_score['score'].replace(0, np.nan)  # this is the line that really replaces only the 0 scores with NaN
exam_score = exam_score.sort_values(by=['student_id', 'course'])
exam_score['last_score'] = 0
new_exam_score = pd.DataFrame(columns=('student_id', 'course', 'exam_id', 'score', 'last_score'))
for student_id in student['student_id']:
    for course_id in course_list:
        exam_score_temp = exam_score[(exam_score.student_id == student_id) & (exam_score.course == course_id)]
        # Fill missing values with this student's mean score in this course
        exam_score_temp = exam_score_temp.fillna(exam_score_temp['score'].mean())
        exam_score_temp.reset_index(drop=True, inplace=True)
        temp_length = len(exam_score_temp)
        # Column 3 is score, column 4 is last_score
        for i in range(temp_length):
            if i == 0:
                # The first exam has no predecessor, so it reuses its own score
                exam_score_temp.iloc[i, 4] = exam_score_temp.iloc[i, 3]
            else:
                exam_score_temp.iloc[i, 4] = exam_score_temp.iloc[(i-1), 3]
        # The most recent known score becomes last_score in the submission table
        last_score = exam_score_temp.iloc[temp_length-1, 3]
        submission_s2.loc[(submission_s2['student_id'] == student_id) & (submission_s2['course'] == course_id), 'last_score'] = last_score
        new_exam_score = pd.concat([new_exam_score, exam_score_temp], axis=0)
new_exam_score.to_csv('new_exam_score.csv', encoding='utf-8', index=False)
new_submission_s2 = submission_s2
new_submission_s2.to_csv('new_submission_s2.csv', encoding='utf-8', index=False)
new_exam_score.head()

Analysis: this code runs slowly, and this feature is one of the main culprits behind my overfitting.
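The nested loop above can usually be replaced by a grouped shift, which is much faster. The sketch below is an alternative under stated assumptions, not the code used in the competition: rows are assumed to be in chronological order within each (student_id, course) group, the data is a toy frame, and the per-group mean imputation from the original is left out.

```python
import pandas as pd

# Toy data standing in for exam_score
exam_score = pd.DataFrame({
    "student_id": [1, 1, 1, 2, 2],
    "course": ["course1"] * 5,
    "exam_id": ["e1", "e2", "e3", "e1", "e2"],
    "score": [70.0, 80.0, 90.0, 60.0, 65.0],
})

keys = ["student_id", "course"]
# Previous score within each (student, course) group
exam_score["last_score"] = exam_score.groupby(keys)["score"].shift(1)
# The first exam of a group has no predecessor; like the loop, reuse its own score
exam_score["last_score"] = exam_score["last_score"].fillna(exam_score["score"])

# Most recent score per group, to be merged into the submission table
latest = exam_score.groupby(keys)["score"].last().rename("last_score").reset_index()
print(exam_score)
print(latest)
```

`latest` can then be joined onto the submission frame with `merge(..., on=["student_id", "course"], how="left")` instead of assigning row by row.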

2. Get the rank of the previous exam, for every exam

# 1.1 Collect every exam_id in exam_score
train_exam_id_list = list(exam_score['exam_id'].unique())
# 1.2 Rank students by their previous score within each exam
# exam_score = exam_score[~exam_score['score'].isin([0])]  # drop rows whose score is 0
exam_score_rank = pd.DataFrame(columns=['student_id', 'course', 'exam_id', 'score', 'last_score', 'last_rank'])
for exam_id in train_exam_id_list:
    # .copy() avoids pandas' SettingWithCopyWarning on the assignment below
    exam_score_temp = new_exam_score[new_exam_score.loc[:, 'exam_id'] == exam_id].copy()
    exam_score_temp.loc[:, 'last_rank'] = exam_score_temp.loc[:, 'last_score'].rank(method='min', ascending=False)
    exam_score_rank = pd.concat([exam_score_rank, exam_score_temp[['student_id', 'course', 'exam_id', 'score', 'last_score', 'last_rank']]], axis=0, sort=False)
exam_score_rank = exam_score_rank[['student_id', 'course', 'exam_id', 'score', 'last_score', 'last_rank']]
exam_score_rank.head()

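Analysis: a supplement to the previous-score feature. It was not really useful either; the public leaderboard just gave me a false impression.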
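The per-exam loop can also be written as a single grouped rank. A minimal sketch on toy data, keeping the same `method='min', ascending=False` settings as the code above:

```python
import pandas as pd

# Toy data standing in for new_exam_score
df = pd.DataFrame({
    "exam_id": ["e1", "e1", "e1", "e2", "e2"],
    "student_id": [1, 2, 3, 1, 2],
    "last_score": [90.0, 80.0, 90.0, 70.0, 75.0],
})

# Rank last_score within each exam; ties share the best (smallest) rank
df["last_rank"] = df.groupby("exam_id")["last_score"].rank(method="min", ascending=False)
print(df)
```

With `method="min"`, two students tied for first both get rank 1 and the next student gets rank 3.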

3. Work out which semester each exam belongs to, its sequence number within the semester, and so on

# 2. Work out which semester each exam belongs to, its sequence number within the semester, and so on
def get_previous_big_exam(course_exam_number, max_row, max_col, this_big_exam_row, exams_level):
    '''
    :param course_exam_number: exam table of one course
    :param max_row: largest row number for this course
    :param max_col: largest column number for this course
    :param this_big_exam_row: row number of the current big exam
    :param exams_level: level of the exam, e.g. first half of term 1 (term1_1) ... second half of term 4 (term4_2)
    :return: row number of the previous big exam
    '''
    # Negative column positions used below:
    # -5 knowledge_count, -4 exam_type, -3 exam_range, -2 term, -1 term_course_sn
    # Find the earliest knowledge point covered in this row
    for i in range(1, max_col):
        if course_exam_number.iloc[this_big_exam_row, i] > 0:
            earliest_col = i
            break
    # Find the earliest exam covering that knowledge point, then infer the row of the previous big exam
    for j in range(0, max_row):
        if course_exam_number.iloc[j, earliest_col] > 1:
            if j == 0: previous_big_exam_row = 0
            elif course_exam_number.iloc[j-1, -5] < course_exam_number.iloc[j, -5]: previous_big_exam_row = j-2
            else: previous_big_exam_row = j-1
            if previous_big_exam_row > 1:
                course_exam_number.iloc[previous_big_exam_row, -4] = 'big'
                course_exam_number.iloc[previous_big_exam_row, -3] = 'term' + str(exams_level-1) + '_2'  # second half of the semester the previous big exam belongs to
                course_exam_number.iloc[previous_big_exam_row, -2] = 'term' + str(exams_level-1)  # semester the previous big exam belongs to
            break
    # Semester (term) information for the current big exam
    if previous_big_exam_row == 0: previous_big_exam_row = -1
    course_exam_number.iloc[this_big_exam_row, -1] = this_big_exam_row - previous_big_exam_row  # sequence number within the semester
    course_exam_number.iloc[this_big_exam_row, -2] = 'term' + str(exams_level)  # which semester
    # The medium exam between two big exams is the one covering the most knowledge points
    this_median_exam_row = course_exam_number.iloc[previous_big_exam_row+1:this_big_exam_row-1, -5].idxmax()
    course_exam_number.iloc[this_median_exam_row, -4] = 'median'
    course_exam_number.iloc[this_median_exam_row, -3] = 'term' + str(exams_level) + '_1'
    course_exam_number.iloc[this_median_exam_row, -2] = 'term' + str(exams_level)  # which semester
    course_exam_number.iloc[this_median_exam_row, -1] = this_median_exam_row - previous_big_exam_row  # sequence number within the semester
    # Fill in the information for every small exam
    for k in range(previous_big_exam_row+1, this_median_exam_row):
        course_exam_number.iloc[k, -4] = 'small'
        course_exam_number.iloc[k, -3] = 'term' + str(exams_level) + '_1'
        course_exam_number.iloc[k, -2] = 'term' + str(exams_level)
        course_exam_number.iloc[k, -1] = k - previous_big_exam_row
    for l in range(this_median_exam_row+1, this_big_exam_row):
        course_exam_number.iloc[l, -4] = 'small'
        course_exam_number.iloc[l, -3] = 'term' + str(exams_level) + '_2'
        course_exam_number.iloc[l, -2] = 'term' + str(exams_level)
        course_exam_number.iloc[l, -1] = l - previous_big_exam_row
    return previous_big_exam_row

# A list (not a set) keeps the column order deterministic
course_exam_total = pd.DataFrame(columns=['exam_id', 'exam_number', 'knowledge_count', 'exam_type', 'exam_range', 'term', 'term_course_sn'])
for course_id in course_list:
    course_exam_number = pd.read_csv('/home/kesci/input/smart_edu7557/' + course_id + '_exams.csv', engine='python')
    max_row, max_col = course_exam_number.shape
    course_exam_number['exam_number'] = range(len(course_exam_number))
    course_exam_number['exam_number'] = course_exam_number['exam_number'] + 1
    course_exam_number['knowledge_count'] = (course_exam_number.iloc[:, 1:max_col] > 0).sum(axis=1)
    course_exam_number['exam_type'] = 'exam_type'
    course_exam_number['exam_range'] = 'exam_range'
    course_exam_number['term'] = 'term'
    course_exam_number['term_course_sn'] = 1
    eight_big_exam_row = max_row - 1  # row of the 8th big exam
    course_exam_number.iloc[0, -4] = 'small'  # exam_type of the first row
    course_exam_number.iloc[-1, -4] = 'big'  # exam_type of the last row
    course_exam_number.iloc[-1, -2] = 'term8'  # term of the last row
    course_exam_number.iloc[-1, -3] = 'term8_2'  # exam_range of the last row
    seven_big_exam_row = get_previous_big_exam(course_exam_number, max_row, max_col, eight_big_exam_row, 8)  # row of the 7th big exam
    six_big_exam_row = get_previous_big_exam(course_exam_number, max_row, max_col, seven_big_exam_row, 7)  # row of the 6th big exam
    five_big_exam_row = get_previous_big_exam(course_exam_number, max_row, max_col, six_big_exam_row, 6)  # row of the 5th big exam
    four_big_exam_row = get_previous_big_exam(course_exam_number, max_row, max_col, five_big_exam_row, 5)  # row of the 4th big exam
    three_big_exam_row = get_previous_big_exam(course_exam_number, max_row, max_col, four_big_exam_row, 4)  # row of the 3rd big exam
    two_big_exam_row = get_previous_big_exam(course_exam_number, max_row, max_col, three_big_exam_row, 3)  # row of the 2nd big exam
    one_big_exam_row = get_previous_big_exam(course_exam_number, max_row, max_col, two_big_exam_row, 2)  # row of the 1st big exam
    get_previous_big_exam(course_exam_number, max_row, max_col, one_big_exam_row, 1)  # label the exams of the first semester
    course_exam_total = pd.concat([course_exam_total, course_exam_number[['exam_id', 'exam_number', 'knowledge_count', 'exam_type', 'exam_range', 'term', 'term_course_sn']]], axis=0, sort=False)
# print(course_exam_total['exam_type'].value_counts())
# Join the new fields onto exam_score
new_exam_score_1 = pd.merge(exam_score_rank, course_exam_total, how='left', left_on='exam_id', right_on='exam_id')
new_exam_score_1 = new_exam_score_1[['student_id', 'course', 'exam_id', 'score', 'exam_number', 'exam_type', 'knowledge_count',
                                     'exam_range', 'term', 'term_course_sn', 'last_score', 'last_rank']]
new_submission_1 = pd.merge(submission_score_rank, course_exam_total, how='left', left_on='exam_id', right_on='exam_id')
# print(new_exam_score_1.shape)
new_exam_score_1.head()

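Analysis: this is the code I am proudest of. It works out which semester each exam belongs to, whether it is a monthly test, a midterm or a final, and which exam of the semester it is.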

4. Add school-year information (not much use)

# Add a year column derived from term
def get_year(dataframe):
    year_dict = {'term1':'year1', 'term2':'year1', 'term3':'year2', 'term4':'year2',
                 'term5':'year3', 'term6':'year3', 'term7':'year4', 'term8':'year4'}
    this_year = dataframe['term']
    return year_dict[this_year]

new_exam_score_1['year'] = new_exam_score_1.apply(get_year, axis=1)
new_submission_1['year'] = new_submission_1.apply(get_year, axis=1)
new_submission_1.head()

5. Bin the scores into grades (also not much use)

def score_category(score):
    '''Bin a score into one of five grades: A, B, C, D, E'''
    if score>=90:
        return 'A'
    elif score>=80:
        return 'B'
    elif score>=70:
        return 'C'
    elif score>=60:
        return 'D'
    else:
        return 'E'

new_exam_score_1['score_grade'] = new_exam_score_1['score'].apply(score_category)
new_exam_score_1.head()
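The same binning can be expressed with `pd.cut`, which avoids calling a Python function once per row. A minimal sketch on toy scores; `right=False` makes each bin include its lower edge, which matches the `>=` comparisons in `score_category`:

```python
import numpy as np
import pandas as pd

scores = pd.Series([95, 90, 89.5, 70, 59, 0])  # toy scores

# Bins: [-inf, 60) -> E, [60, 70) -> D, [70, 80) -> C, [80, 90) -> B, [90, inf) -> A
bins = [-np.inf, 60, 70, 80, 90, np.inf]
labels = ["E", "D", "C", "B", "A"]
grades = pd.cut(scores, bins=bins, labels=labels, right=False).astype(str)
print(grades.tolist())
```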

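6. Data cleaning: drop the rows whose score is 0

# Drop rows whose score is 0; .copy() so later column assignments do not act on a view
new_exam_score_2 = new_exam_score_1[~new_exam_score_1['score'].isin([0])].copy()
print(new_exam_score_2.shape)
new_exam_score_2.head()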

7. For each student and course, find the most frequent grade (also not much use)

def get_mode(student_score_mode):
    '''Return the grade the student obtains most often'''
    score_list = ['A', 'B', 'C', 'D', 'E']
    the_mode = 0
    for key in score_list:
        # On ties, >= lets the later (lower) grade win
        if student_score_mode[key] >= the_mode:
            the_mode = student_score_mode[key]
            best = key
        else:
            continue
    return best

student_score_mode = new_exam_score_1.pivot_table(index=['student_id', 'course'], values='score', columns=['score_grade'], aggfunc='count', fill_value=0).reset_index()
student_score_mode['mode'] = student_score_mode.apply(get_mode, axis=1)
student_score_mode = student_score_mode[['student_id', 'course', 'mode']]
student_score_mode.head()

8. Try KMeans to derive a cluster feature for student_id, splitting the students into n groups (it helps, but not by much)

from sklearn.cluster import KMeans
from sklearn import preprocessing

student_type_score = new_exam_score_2.pivot_table(index=['student_id'], values='score', columns='course', aggfunc=[np.mean, np.std])
# Standardise first
# scaler = preprocessing.StandardScaler()
# student_type_score.iloc[:, :] = scaler.fit_transform(student_type_score)
# Then cluster to get the labels
estimator = KMeans(n_clusters=8, random_state=36).fit(student_type_score)
labelPred = estimator.labels_
student_type_score['student_type'] = labelPred
# Finally keep only the columns that are needed
student_type_score = student_type_score.reset_index().rename(columns={'index': 'student_id'})
student_type_score = student_type_score[['student_id', 'student_type']]
student_type_score = pd.concat([student_type_score['student_id'], student_type_score['student_type']], axis=1)
student_type_score.head()

9. Use pivot tables to get the mean and standard deviation of scores per half-semester, student and course

range_avg_score = new_exam_score_2.pivot_table(index=['student_id', 'course', 'exam_range'],
                                               values='score', aggfunc=np.mean).reset_index().rename(columns={'score': 'range_avg_score'})
range_std_score = new_exam_score_2.pivot_table(index=['student_id', 'course', 'exam_range'],
                                               values='score', aggfunc=np.std).reset_index().rename(columns={'score': 'range_std_score'})
range_avg_score.head()

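Analysis: the biggest culprit behind my overfitting. I actually realised early on that this feature would overfit, but the public score rose a lot because of it, which gave me the false impression that the feature was useful. In reality it did not hold up on the private leaderboard.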
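One plausible reason this feature overfits is target leakage: for a training row, the half-semester mean includes that row's own score, whereas for the exam to be predicted it cannot. The sketch below contrasts the leaky mean with a mean over strictly earlier exams. It is my reading of the problem on toy data, not something the competition confirmed, and the column names `range_avg_leaky` and `range_avg_prior` are made up.

```python
import pandas as pd

# Toy data: one student, one course, four exams in the same half-semester,
# already in chronological order
df = pd.DataFrame({
    "student_id": [1, 1, 1, 1],
    "course": ["course1"] * 4,
    "exam_range": ["term8_1"] * 4,
    "score": [60.0, 80.0, 100.0, 70.0],
})
keys = ["student_id", "course", "exam_range"]

# Leaky version: every row's feature contains the row's own target
df["range_avg_leaky"] = df.groupby(keys)["score"].transform("mean")

# Leak-free version: mean of the exams that came before this one
df["range_avg_prior"] = df.groupby(keys)["score"].transform(
    lambda s: s.shift(1).expanding().mean()
)
print(df)
```

The first exam of each group has no history, so `range_avg_prior` is NaN there; gradient-boosting libraries such as lightgbm can take NaN inputs directly.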

10. student_id has different dtypes in some tables, so make them consistent

new_exam_score_2['student_id'] = new_exam_score_2['student_id'].astype(int)
print('student_id dtype updated!')

11. Get the difficulty value of each exam

course_exams_final = pd.DataFrame(columns=['exam_id', 'sum_complexity'])
for i in range(1, 9):
    all_knowledge_temp = all_knowledge[all_knowledge['course'] == 'course' + str(i)]
    sum_complexity = all_knowledge_temp['complexity']
    course_exams_temp = pd.read_csv('/home/kesci/input/smart_edu7557/' + 'course'+str(i)+'_exams.csv')
    # Dot product of each exam's knowledge-point weights with the complexity vector;
    # this relies on the rows of all_knowledge being in the same order as the K:n columns
    course_exams_temp['sum_complexity'] = np.dot(course_exams_temp.iloc[:, 1:], sum_complexity)
    course_exams_temp = course_exams_temp[['exam_id', 'sum_complexity']]
    course_exams_final = pd.concat([course_exams_final, course_exams_temp], axis=0)
course_exams_final.head()

Analysis: this feature is actually quite useful, and it uses a dot product.
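To make the dot product concrete, here is a small worked example on made-up data. Unlike the loop above, it aligns the complexity vector to the exam columns by name with `reindex`, so the result does not depend on row order in all_knowledge; that alignment step is my addition.

```python
import pandas as pd

# Toy stand-ins for one courseN_exams.csv and the matching rows of all_knowledge.csv
exams = pd.DataFrame({
    "exam_id": ["e1", "e2"],
    "K:0": [50, 0],
    "K:1": [50, 20],
    "K:2": [0, 80],
})
all_knowledge = pd.DataFrame({
    "knowledge_point": ["K:2", "K:0", "K:1"],  # deliberately out of order
    "complexity": [4, 1, 2],
})

complexity = all_knowledge.set_index("knowledge_point")["complexity"]
kp_cols = [c for c in exams.columns if c != "exam_id"]

# (n_exams x n_points) @ (n_points,) -> one difficulty value per exam
weights = exams[kp_cols].to_numpy()
exams["sum_complexity"] = weights @ complexity.reindex(kp_cols).to_numpy()
print(exams[["exam_id", "sum_complexity"]])
```

For e1 this is 50·1 + 50·2 + 0·4 = 150, and for e2 it is 0·1 + 20·2 + 80·4 = 360.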

12. Get the share of each difficulty level in every exam (out of 100)

total_exam_level = pd.DataFrame(columns=['level_0', 'level_1', 'level_2', 'level_3', 'level_4'])
for course_Number in course_list:
    course_temp_exams = pd.read_csv('/home/kesci/input/smart_edu7557/' + course_Number + '_exams.csv', engine='python')
    # Transpose so that knowledge points become rows and exams become columns
    course_temp_exams_T = pd.DataFrame(course_temp_exams.values.T, index=course_temp_exams.columns, columns=course_temp_exams.exam_id)
    all_knowledge_temp = all_knowledge[all_knowledge.course == course_Number][['knowledge_point', 'complexity']].set_index('knowledge_point')
    # Attach each knowledge point's complexity as the last column
    course_temp_exams_T = pd.merge(course_temp_exams_T, all_knowledge_temp, left_index=True, right_index=True)
    level_sum = pd.DataFrame(columns=course_temp_exams_T.columns)
    # For every complexity level, sum the weights of its knowledge points per exam
    level_sum['level_0'] = course_temp_exams_T[course_temp_exams_T['complexity'] == 0].apply(lambda x: x.sum())
    level_sum['level_1'] = course_temp_exams_T[course_temp_exams_T['complexity'] == 1].apply(lambda x: x.sum())
    level_sum['level_2'] = course_temp_exams_T[course_temp_exams_T['complexity'] == 2].apply(lambda x: x.sum())
    level_sum['level_3'] = course_temp_exams_T[course_temp_exams_T['complexity'] == 3].apply(lambda x: x.sum())
    level_sum['level_4'] = course_temp_exams_T[course_temp_exams_T['complexity'] == 4].apply(lambda x: x.sum())
    # Drop the complexity row and keep only the five level columns
    level_sum = level_sum.iloc[:-1, -5:]
    total_exam_level = pd.concat([total_exam_level, level_sum], axis=0)
total_exam_level = total_exam_level.reset_index().rename(columns={'index': 'exam_id'})
total_exam_level.head()

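Analysis: another piece of code I am pleased with. It took a long time to work out, it performs well in practice, and it uses matrix multiplication, a transpose, and a transpose back.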
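The transpose, sum per level, transpose back idea can be written compactly with a grouped sum. A sketch on toy data, assuming complexity takes integer levels 0 to 4 as in the code above:

```python
import pandas as pd

# Toy exam table: rows are exams, columns are knowledge-point weights
exams = pd.DataFrame(
    {"K:0": [50, 0], "K:1": [50, 20], "K:2": [0, 80]},
    index=pd.Index(["e1", "e2"], name="exam_id"),
)
# Complexity level of each knowledge point (made-up values)
complexity = pd.Series({"K:0": 0, "K:1": 2, "K:2": 2})

# Transpose so knowledge points are rows, sum the rows sharing a complexity
# level, then transpose back so exams are rows again
level = exams.T.groupby(complexity).sum().T
# Make sure all five levels exist as columns, even those no point uses
level = level.reindex(columns=range(5), fill_value=0).add_prefix("level_")
print(level)
```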

13. Get the categories of each exam (version one: shares only)

'''First add n category columns named C:n, all set to 0. Then walk through the original columns (all named K:n), look each one up in the dict {knowledge_point : category}, and add that column's values to the matching C:n'''
category_cols = ['C:' + str(i) for i in range(35)]
total_category = pd.DataFrame(columns=['exam_id'] + category_cols)
for course_lv in range(1, 9):
    course_temp = 'course' + str(course_lv)
    course_exams_temp = pd.read_csv('/home/kesci/input/smart_edu7557/' + course_temp + '_exams.csv', engine='python')
    old_length = course_exams_temp.shape[1]  # number of columns originally in course_exams
    all_knowledge_temp = all_knowledge[all_knowledge['course'] == course_temp][['knowledge_point', 'category']].set_index(
        'knowledge_point')
    length = len(all_knowledge_temp['category'].unique())  # number of categories
    temp_dict = all_knowledge_temp.to_dict()['category']
    for i in range(0, 35):  # add the C:n columns
        course_exams_temp['C:' + str(i)] = 0
    for j in range(1, old_length):  # walk through the original course_exams columns
        for k in range(0, length):  # walk through the categories
            if 'C:' + str(k) == temp_dict['K:' + str(j-1)]:
                temp_C = k+old_length
                course_exams_temp['C:' + str(k)] = course_exams_temp['C:' + str(k)] + course_exams_temp['K:' + str(j-1)]
                break
    course_exams_temp = pd.concat([course_exams_temp['exam_id'], course_exams_temp.iloc[:, old_length:]], axis=1)
    total_category = pd.concat([total_category, course_exams_temp], axis=0)
total_category.head()

Analysis: this code was rather tedious to write, but it is quite useful.
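The column-by-column accumulation above is equivalent to multiplying the exam matrix by a knowledge-point to category indicator matrix. The sketch below shows that view on toy data. Scaling each knowledge-point column by its complexity before the multiplication gives a per-category difficulty instead of a plain share. The variable names are illustrative.

```python
import pandas as pd

# Toy exam table: rows are exams, columns are knowledge-point weights
exams = pd.DataFrame(
    {"K:0": [50, 0], "K:1": [50, 20], "K:2": [0, 80]},
    index=pd.Index(["e1", "e2"], name="exam_id"),
)
# Toy knowledge table indexed by knowledge point
knowledge = pd.DataFrame(
    {"category": ["C:0", "C:1", "C:1"], "complexity": [1, 2, 3]},
    index=["K:0", "K:1", "K:2"],
)

# Indicator matrix: rows are knowledge points, columns are categories
onehot = pd.get_dummies(knowledge["category"]).astype(int)

# Share of each category per exam
share_by_category = exams @ onehot

# Scale every knowledge-point column by its complexity, then aggregate
weighted = exams * knowledge["complexity"]
difficulty_by_category = weighted @ onehot
print(share_by_category)
print(difficulty_by_category)
```

`DataFrame @ DataFrame` aligns the left frame's columns with the right frame's index by label, so the knowledge points do not need to be in the same order in both tables.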

14. Get the categories of each exam (version two: also yields the difficulty value per category)

'''First add n category columns named C:n, all set to 0. Then walk through the original columns (all named K:n), look each one up in the dict {knowledge_point : category}, and add that column's values to the matching C:n'''
category_cols = ['C:' + str(i) for i in range(35)]
total_category = pd.DataFrame(columns=['exam_id', 'course'] + category_cols)
for course_lv in range(1, 9):
    course_temp = 'course' + str(course_lv)
    course_temp_exams = pd.read_csv('/home/kesci/input/smart_edu7557/' + course_temp + '_exams.csv', engine='python')
    # The steps below compute weight * complexity for every knowledge point of every exam
    course_temp_exams_T = pd.DataFrame(course_temp_exams.values.T, index=course_temp_exams.columns, columns=course_temp_exams.exam_id)
    all_knowledge_temp_1 = all_knowledge[all_knowledge.course == course_temp][['knowledge_point', 'complexity']].set_index('knowledge_point')
    course_temp_exams_T = pd.merge(course_temp_exams_T, all_knowledge_temp_1, left_index=True, right_index=True)
    temp_columns_length = course_temp_exams_T.shape[1]
    for i in range(0, temp_columns_length-1):
        course_temp_exams_T.iloc[:, i] = course_temp_exams_T.iloc[:, i] * course_temp_exams_T.iloc[:, -1]
    new_course_temp_exams = course_temp_exams_T.T.iloc[:-1, :]
    new_course_temp_exams = new_course_temp_exams.reset_index().rename(columns={'index': 'exam_id'})
    # The steps below collapse each exam's knowledge points into categories
    old_length = new_course_temp_exams.shape[1]  # number of columns originally in course_exams
    all_knowledge_temp_2 = all_knowledge[all_knowledge['course'] == course_temp][['knowledge_point', 'category']].set_index(
        'knowledge_point')
    length = len(all_knowledge_temp_2['category'].unique())  # number of categories
    temp_dict = all_knowledge_temp_2.to_dict()['category']
    for i in range(0, 35):  # add the C:n columns
        new_course_temp_exams['C:' + str(i)] = 0
    for j in range(1, old_length):  # walk through the original course_exams columns
        for k in range(0, length):  # walk through the categories
            if 'C:' + str(k) == temp_dict['K:' + str(j-1)]:
                temp_C = k+old_length
                new_course_temp_exams['C:' + str(k)] = new_course_temp_exams['C:' + str(k)] + new_course_temp_exams['K:' + str(j-1)]
                break
    course_exams_temp = pd.concat([new_course_temp_exams['exam_id'], new_course_temp_exams.iloc[:, old_length:]], axis=1)
    course_exams_temp['course'] = course_temp
    total_category = pd.concat([total_category, course_exams_temp], axis=0, sort=False)
# If no aggregation is wanted, drop course. Skip this line when running the
# term aggregation below, because that code selects the 'course' column.
del total_category['course']

# Condense C:0-C:34 into all_term_category
term_category = course_exam_total.merge(total_category, how='left', left_on='exam_id', right_on='exam_id')
term_category = term_category[['exam_id', 'course', 'term'] + category_cols]
term_list = ['term1', 'term2', 'term3', 'term4', 'term5', 'term6', 'term7', 'term8']
all_term_category = pd.DataFrame(columns=['exam_id', 'course', 'term', 'term_C1', 'term_C2', 'term_C3', 'term_C4', 'term_C5'])
for course_id in course_list:
    for term_id in term_list:
        temp_term_category = term_category[(term_category['term'] == term_id) & (term_category['course'] == course_id)]
        # Drop the columns whose values are all 0 (.ix was removed from pandas; .loc does the same here)
        temp_term_category = temp_term_category.loc[:, ~(temp_term_category == 0).all()]
        temp_length = temp_term_category.shape[1]
        # Pad with zero columns up to 8 columns in total
        for i in range(temp_length, 8):
            temp_term_category[str(i)] = 0
        temp_term_category.columns = ['exam_id', 'course', 'term', 'term_C1', 'term_C2', 'term_C3', 'term_C4', 'term_C5']
        # print(temp_term_category)
        all_term_category = pd.concat([all_term_category, temp_term_category], axis=0, sort=False)
all_term_category = all_term_category[['exam_id', 'term_C1', 'term_C2', 'term_C3', 'term_C4', 'term_C5']]
print(all_term_category.shape)
all_term_category.head()

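Analysis: I still think this should be of some use. It was simply overshadowed by the overfitting feature described earlier, so its value never showed.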

15. LabelEncoder

# Everything above is mainly feature engineering; next come LabelEncoder, scaling and model building
# So first tidy up the data above and save it as final_exam_score and final_submission
# Then load it back
import pandas as pd
import numpy as np

pd.set_option('display.max_columns', None)  # show all columns
final_exam_score = pd.read_csv('/home/kesci/work/final_exam_score.csv')
# final_exam_score = final_exam_score[final_exam_score['exam_number'] != 1]  # exclude the first exam
final_submission = pd.read_csv('/home/kesci/work/final_submission.csv')

# 1. Encode some string columns as numbers
from sklearn.preprocessing import LabelEncoder

for col in ['course', 'course_class', 'exam_type', 'exam_range', 'term']:
    le = LabelEncoder()
    # Fit on the training set, then apply the same mapping to both sets
    le.fit(final_exam_score[col])
    final_exam_score[col] = le.transform(final_exam_score[col])
    final_submission[col] = le.transform(final_submission[col])
final_exam_score.head()

16. lightgbm: simple prediction

# 10. Prediction (lightgbm), method one: run it directly, mainly to test the data
import lightgbm as lgb
from sklearn.model_selection import train_test_split
from sklearn import metrics
from sklearn.model_selection import GridSearchCV
import matplotlib.pyplot as plt

dataSet_new = ['course', 'exam_number', 'exam_type', 'gender', 'student_type', 'course_class', 'term',
               'last_score', 'last_rank', 'exam_range', 'sum_complexity', 'term_course_sn',
               'term_std_score', 'term_avg_score', 'term_avg_score_rank',
               'total_rank', 'range_avg_score', 'range_std_score',
               'total_avg_score', 'total_std_score',
               'level_0', 'level_1', 'level_2', 'level_3', 'level_4'] + ['C:' + str(i) for i in range(35)]
seed = 36
X_train, X_val, y_train, y_val = train_test_split(final_exam_score[dataSet_new], final_exam_score['score'],
                                                  test_size=0.2, random_state=seed, stratify=final_exam_score['exam_type'])
train_data = lgb.Dataset(X_train, label=y_train)
val_data = lgb.Dataset(X_val, label=y_val, reference=train_data)
params = {
    'task': 'train',
    'boosting_type': 'gbdt',
    'objective': 'regression',
    'metric': 'rmse',
    'num_leaves': 35,  # best so far: seed=36, num_leaves=35
    'learning_rate': 0.01,
    'is_unbalance': True  # only relevant to classification; it has no effect on a regression objective
}
evals_result = {}  # stores the training history
# Note: in LightGBM 4.0 and later, early_stopping_rounds, evals_result and verbose_eval
# are passed as callbacks (lgb.early_stopping, lgb.record_evaluation, lgb.log_evaluation)
model = lgb.train(params,
                  train_data,
                  num_boost_round=30000,
                  valid_sets=val_data,
                  early_stopping_rounds=10,
                  categorical_feature=['course', 'exam_type', 'gender', 'exam_range', 'student_type', 'term', 'course_class'],
                  evals_result=evals_result,
                  verbose_eval=500)
pred1 = model.predict(final_submission[dataSet_new])
print('lightgbm prediction done')

Analysis: look mainly at the code structure and ignore the features. Thinking back on them now brings tears.

17. catboost

import catboost as cb

cat_model = cb.CatBoostRegressor(n_estimators=3000,
                                 learning_rate=0.1,
                                 loss_function='RMSE',
                                 logging_level='Verbose',
                                 eval_metric='RMSE',
                                 random_seed=36)
cat_model.fit(X_train, y_train, eval_set=(X_val, y_val), verbose=500)
cat_pred = cat_model.predict(final_submission[dataSet_new])
cat_pred

18. Get lightgbm's feature_importance

fig,ax = plt.subplots(figsize=(15,15))
lgb.plot_importance(model,
                    height=0.5,
                    ax=ax,
                    max_num_features=64)
plt.title("Feature importances")

19. StandardScaler

normal_list = ['exam_number', 'knowledge_count', 'range_avg_score', 'term_avg_score', 'range_std_score','term_std_score',
               'last_score', 'last2_score', 'last3_score', 'sum_complexity']

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()
# Fit on the training set only, then apply the same scaling to both sets
scaler.fit(final_exam_score[normal_list])
final_exam_score[normal_list] = scaler.transform(final_exam_score[normal_list])
final_submission[normal_list] = scaler.transform(final_submission[normal_list])
final_submission.head()

20. xgboost

from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
import xgboost

xg_reg = xgboost.XGBRegressor(eval_metric='rmse',
                              nthread=-1,
                              learning_rate=0.1,
                              n_estimators=2500,
                              max_depth=5,
                              min_child_weight=5,
                              seed=36,
                              subsample=0.9,
                              colsample_bytree=1,
                              gamma=0,
                              reg_alpha=5,
                              reg_lambda=5,
                              verbose=True,
                              silent=True)
eval_set =[(X_val, y_val)]
xg_reg.fit(X_train, y_train, eval_set=eval_set, verbose=500)
# Evaluate on the validation set
test_pred = xg_reg.predict(X_val)
mse = mean_squared_error(y_pred=test_pred, y_true=y_val)
rmse = np.sqrt(mse)
final_score = 10 * np.log10(rmse)
print('xgboost val rmse: {}, val final_score: {}'.format(rmse, final_score))
# Predict on the real submission set
pred2 = xg_reg.predict(final_submission[dataSet_new])
print('xgboost prediction done')
pred2
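The quantity printed as `final_score` above, 10·log10(RMSE), can be wrapped in a small helper so that every model is scored the same way. This is a sketch with only numpy as a dependency; the helper name `log_rmse_score` is made up, and the code above does not say whether this formula is the official leaderboard metric.

```python
import numpy as np

def log_rmse_score(y_true, y_pred):
    """Return 10 * log10(RMSE); lower is better."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    rmse = np.sqrt(np.mean((y_true - y_pred) ** 2))
    return 10 * np.log10(rmse)

# Toy check: every prediction is off by exactly 10 points, so RMSE = 10
score = log_rmse_score([80, 70, 90], [70, 80, 100])
print(score)
```

Because of the logarithm, halving the RMSE lowers the score by about 3 regardless of where it started.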

21. lightgbm (with cross-validation)

import lightgbm as lgb
from sklearn.model_selection import train_test_split
from sklearn import metrics
from sklearn.model_selection import GridSearchCV
from sklearn.model_selection import KFold
from sklearn.model_selection import StratifiedKFold
import matplotlib.pyplot as plt

dataSet_new = ['course', 'exam_number', 'exam_type', 'gender', 'student_type',
               'last_score', 'last_rank', 'exam_range', 'sum_complexity', 'term_course_sn',
               'term_std_score', 'term_avg_score', 'term_avg_score_rank',
               'total_rank', 'range_avg_score', 'range_std_score',
               'total_avg_score', 'total_std_score', 'term', 'course_class',
               'level_0', 'level_1', 'level_2', 'level_3', 'level_4'] + ['C:' + str(i) for i in range(35)]
train_x = final_exam_score[dataSet_new]
train_y = final_exam_score['score']
test_x = final_submission[dataSet_new]
X, y, X_test = train_x.values, train_y.values, test_x.values  # convert to np.array
param = {
    'boosting_type': 'gbdt',
    'objective': 'regression',
    'metric': 'rmse',
    'num_leaves': 35,
    'learning_rate': 0.01,
    'verbose': -1
}
# 5-fold cross-validation
folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=36)
predictions = []  # test-set predictions, one array per fold
for fold_, (train_index, test_index) in enumerate(folds.split(X, y)):
    print("Cross-validation fold {}:".format(fold_+1))
    X_train, X_valid, y_train, y_valid = X[train_index], X[test_index], y[train_index], y[test_index]
    train_data = lgb.Dataset(X_train, label=y_train)  # training data
    validation_data = lgb.Dataset(X_valid, label=y_valid)  # validation data
    # As in section 16, LightGBM 4.0+ expects verbose_eval and early_stopping_rounds as callbacks
    clf = lgb.train(param,
                    train_data,
                    num_boost_round=50000,
                    valid_sets=[validation_data],
                    # categorical_feature=['course', 'exam_type', 'gender', 'exam_range', 'student_type', 'term', 'course_class'],
                    verbose_eval=1000,
                    early_stopping_rounds=100,
                    )
    x_pred = clf.predict(X_valid, num_iteration=clf.best_iteration)
    y_test = clf.predict(X_test, num_iteration=clf.best_iteration)  # predict the test set
    print(y_test[:10])
    predictions.append(y_test)
    # oof[val_idx] = clf.predict(X_train[val_idx], num_iteration=clf.best_iteration)
# print(predictions)
# Average the five folds' predictions row by row (4000 rows are hard-coded here)
final_scoreList = []
for i in range(0, 4000):
    final_score = (predictions[0][i] + predictions[1][i] + predictions[2][i] + predictions[3][i] + predictions[4][i]) / 5
    final_scoreList.append(final_score)
print(final_scoreList[:10])
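The row-by-row averaging at the end of the loop can be done in one call with `np.mean(..., axis=0)`, which also removes the hard-coded row count and the fixed number of folds. A minimal sketch with toy fold predictions:

```python
import numpy as np

# Toy stand-in for the per-fold test predictions collected in `predictions`
predictions = [
    np.array([70.0, 80.0, 90.0]),
    np.array([72.0, 78.0, 90.0]),
    np.array([74.0, 82.0, 93.0]),
]

# Stack the folds as rows and average down each column (one column per test row)
final_scores = np.mean(predictions, axis=0)
print(final_scores)
```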

22. xgboost (with cross-validation)

import xgboost as xgb
from sklearn.model_selection import StratifiedKFold

# Note: this dict is not passed to the model below, which sets the same values explicitly
param = {'max_depth': 5,
         'learning_rate': 0.1,  # the original key had a trailing space ('learning_rate ')
         'silent': 1,
         'min_child_weight': 5,
         'subsample':0.9,
         'gamma': 0,
         'reg_alpha':5,
         'reg_lambda':5,
         'colsample_bytree':1,
         'seed':36,
         }
train_x = final_exam_score[dataSet_new]
train_y = final_exam_score['score']
test_x = final_submission[dataSet_new]
# 5-fold cross-validation (X, y and X_test come from section 21)
folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=36)
predictions2 = []  # test-set predictions, one array per fold
for fold_, (train_index, test_index) in enumerate(folds.split(X, y)):
    print("Cross-validation fold {}:".format(fold_+1))
    X_train, X_valid, y_train, y_valid = X[train_index], X[test_index], y[train_index], y[test_index]
    xg_reg = xgb.XGBRegressor(eval_metric='rmse',
                              nthread=-1,
                              learning_rate=0.1,
                              n_estimators=4000,
                              max_depth=5,
                              min_child_weight=5,
                              seed=36,
                              subsample=0.9,
                              colsample_bytree=1,
                              gamma=0,
                              reg_alpha=5,
                              reg_lambda=5,
                              early_stopping_rounds=10,
                              verbose=True,
                              silent=True)
    eval_set =[(X_valid, y_valid)]
    xg_reg.fit(X_train, y_train, eval_set=eval_set, verbose=500)
    y_test = xg_reg.predict(X_test)  # predict the test set
    print(y_test[:10])
    predictions2.append(y_test)
# Average the five folds' predictions row by row
final_scoreList2 = []
for i in range(0, 4000):
    final_score = (predictions2[0][i] + predictions2[1][i] + predictions2[2][i] + predictions2[3][i] + predictions2[4][i]) / 5
    final_scoreList2.append(final_score)
print(final_scoreList2[:10])

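Closing remarks:

Although I did not make the final this time, I grew a lot through this competition. It was my first competition since I started machine learning, so reaching the second round was already lucky.

First, my coding ability improved substantially. pandas operations I was not familiar with before I can now type out directly.

Next, I can now use machine-learning libraries such as xgboost, lightgbm and catboost, and I implemented 5-fold cross-validation in code (in practice it did work much better than going without cross-validation).

Finally, I now have a fairly clear picture of how this kind of competition runs: what should be done, and what must never be used even when it looks good on the surface. And rules of thumb really do matter!

Last of all: thanks to myself for more than a month of effort. I hope to reach the final in the next competition!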