Dependency (Maven)

    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>2.3.0</version>
    </dependency>

Producer

    import org.apache.kafka.clients.producer.*;
    import org.apache.kafka.common.serialization.StringSerializer;

    import java.util.Properties;
    import java.util.concurrent.CountDownLatch;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    public class KafkaConProducer {

        // Number of messages to send
        private static final int MSG_SIZE = 1000;

        // Thread pool whose threads send the messages
        private static final ExecutorService executorService =
                Executors.newFixedThreadPool(Runtime.getRuntime().availableProcessors());

        // Counted down once per send task so that main() can wait for all of them
        private static final CountDownLatch countDownLatch = new CountDownLatch(MSG_SIZE);

        /* Task that sends one message; all tasks share the same KafkaProducer */
        private static class ProduceWorker implements Runnable {

            private final ProducerRecord<String, String> record;
            private final KafkaProducer<String, String> producer;

            public ProduceWorker(ProducerRecord<String, String> record,
                                 KafkaProducer<String, String> producer) {
                this.record = record;
                this.producer = producer;
            }

            @Override
            public void run() {
                final String id = Thread.currentThread().getId()
                        + "-" + System.identityHashCode(producer);
                try {
                    // send() is asynchronous: it buffers the record and returns at once;
                    // the callback runs when the broker acknowledges (or rejects) the record
                    producer.send(record, new Callback() {
                        @Override
                        public void onCompletion(RecordMetadata metadata, Exception exception) {
                            if (null != exception) {
                                exception.printStackTrace();
                            }
                            if (null != metadata) {
                                System.out.println(id + "|"
                                        + String.format("offset:%s, partition:%s",
                                        metadata.offset(), metadata.partition()));
                            }
                        }
                    });
                    System.out.println(id + ": record [" + record.key() + "-" + record.value()
                            + "] submitted for sending.");
                } catch (Exception e) {
                    e.printStackTrace();
                } finally {
                    // Count down even if send() throws; otherwise main() would wait forever
                    countDownLatch.countDown();
                }
            }
        }

        public static void main(String[] args) {
            Properties properties = new Properties();
            properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.100.14:9092");
            properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
            properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
            // KafkaProducer is thread-safe, so one instance is shared by all worker threads
            KafkaProducer<String, String> producer = new KafkaProducer<>(properties);
            try {
                // Send in a loop, submitting each record to the thread pool
                for (int i = 0; i < MSG_SIZE; i++) {
                    // The key is null, so the partitioner chooses the partition
                    ProducerRecord<String, String> record =
                            new ProducerRecord<>("concurrent-test", null, "value" + i);
                    executorService.submit(new ProduceWorker(record, producer));
                }
                countDownLatch.await();
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                // close() blocks until previously sent records have completed
                producer.close();
                executorService.shutdown();
            }
        }
    }
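Every record above has a null key. For such records the `DefaultPartitioner` in kafka-clients 2.3.0 picks partitions round-robin with a per-topic atomic counter (releases from 2.4 on use a "sticky" strategy instead). The stdlib-only sketch below imitates that arithmetic to show how 1000 null-key records would split over a two-partition topic. `RoundRobinSketch` and its methods are hypothetical, not part of the Kafka API, and the sketch assumes every partition is available and starts the counter at 0 (Kafka seeds it randomly).

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical illustration, NOT Kafka's DefaultPartitioner: it only mimics the
// round-robin partition choice made for records whose key is null.
public class RoundRobinSketch {

    // Kafka keeps one counter per topic; a single topic is assumed here.
    // Starting at 0 (instead of a random seed) keeps the output deterministic.
    private final AtomicInteger counter = new AtomicInteger(0);

    // Clears the sign bit so the modulo below is never negative after overflow
    static int toPositive(int n) {
        return n & 0x7fffffff;
    }

    // Picks a partition in [0, numPartitions) for a record with a null key
    public int partitionForNullKey(int numPartitions) {
        return toPositive(counter.getAndIncrement()) % numPartitions;
    }

    // Counts how many of `messages` null-key records land on each partition
    public static int[] distribute(int messages, int numPartitions) {
        RoundRobinSketch partitioner = new RoundRobinSketch();
        int[] counts = new int[numPartitions];
        for (int i = 0; i < messages; i++) {
            counts[partitioner.partitionForNullKey(numPartitions)]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        int[] counts = distribute(1000, 2);
        for (int p = 0; p < counts.length; p++) {
            System.out.println("partition " + p + ": " + counts[p] + " records");
        }
    }
}
```

Because the counter is atomic, the thread pool in `KafkaConProducer` only changes which value lands on which partition, not how evenly the records are spread.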

Consumer

    import org.apache.kafka.clients.consumer.ConsumerConfig;
    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;
    import org.apache.kafka.common.serialization.StringDeserializer;

    import java.time.Duration;
    import java.util.Collections;
    import java.util.Properties;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    public class KafkaConConsumer {

        // Number of consumer threads; extra consumers beyond the topic's partition count
        // stay idle, because a partition is assigned to at most one consumer in a group
        private static final int CONCURRENT_PARTITIONS_COUNT = 2;

        private static final ExecutorService executorService =
                Executors.newFixedThreadPool(CONCURRENT_PARTITIONS_COUNT);

        // KafkaConsumer is NOT thread-safe, so each worker owns its own consumer instance
        private static class ConsumerWorker implements Runnable {

            private final KafkaConsumer<String, String> consumer;

            public ConsumerWorker(Properties properties, String topic) {
                this.consumer = new KafkaConsumer<>(properties);
                consumer.subscribe(Collections.singletonList(topic));
            }

            @Override
            public void run() {
                final String id = Thread.currentThread().getId()
                        + "-" + System.identityHashCode(consumer);
                try {
                    while (true) {
                        ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(500));
                        for (ConsumerRecord<String, String> record : records) {
                            System.out.println(id + "|" + String.format(
                                    "topic:%s, partition:%d, offset:%d, key:%s, value:%s",
                                    record.topic(), record.partition(),
                                    record.offset(), record.key(), record.value()));
                            // do our work
                        }
                    }
                } finally {
                    consumer.close();
                }
            }
        }

        public static void main(String[] args) {
            Properties properties = new Properties();
            properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.100.14:9092");
            properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
            properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
            // All workers join the same group, so the topic's partitions are divided among them
            properties.put(ConsumerConfig.GROUP_ID_CONFIG, "concurrent");
            for (int i = 0; i < CONCURRENT_PARTITIONS_COUNT; i++) {
                executorService.submit(new ConsumerWorker(properties, "concurrent-test"));
            }
        }
    }
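All workers join the group `concurrent`, so Kafka splits the partitions of `concurrent-test` among them. In kafka-clients 2.3.0 the default `partition.assignment.strategy` is `RangeAssignor`, which gives each consumer a contiguous range of partitions and hands the remainder to the first consumers. The sketch below is a hypothetical, simplified re-implementation of that per-topic arithmetic (it ignores member ids and assumes one subscribed topic). It shows why a thread count above the partition count only adds idle consumers.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the per-topic arithmetic behind Kafka's RangeAssignor;
// it is not Kafka's class and assumes a single topic with consumers indexed 0..n-1.
public class RangeAssignmentSketch {

    // Returns, for each consumer index, the partition numbers it would own
    public static List<List<Integer>> assign(int numPartitions, int numConsumers) {
        List<List<Integer>> result = new ArrayList<>();
        int perConsumer = numPartitions / numConsumers;
        int withExtra = numPartitions % numConsumers;
        for (int i = 0; i < numConsumers; i++) {
            // The first `withExtra` consumers each take one additional partition
            int start = perConsumer * i + Math.min(i, withExtra);
            int length = perConsumer + (i < withExtra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int p = start; p < start + length; p++) {
                owned.add(p);
            }
            result.add(owned);
        }
        return result;
    }

    public static void main(String[] args) {
        // 2 partitions, 2 consumer threads: one partition each
        System.out.println(assign(2, 2)); // [[0], [1]]
        // 2 partitions, 3 consumers: the third consumer receives nothing
        System.out.println(assign(2, 3)); // [[0], [1], []]
        // 5 partitions, 2 consumers: consumer 0 takes the extra partition
        System.out.println(assign(5, 2)); // [[0, 1, 2], [3, 4]]
    }
}
```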

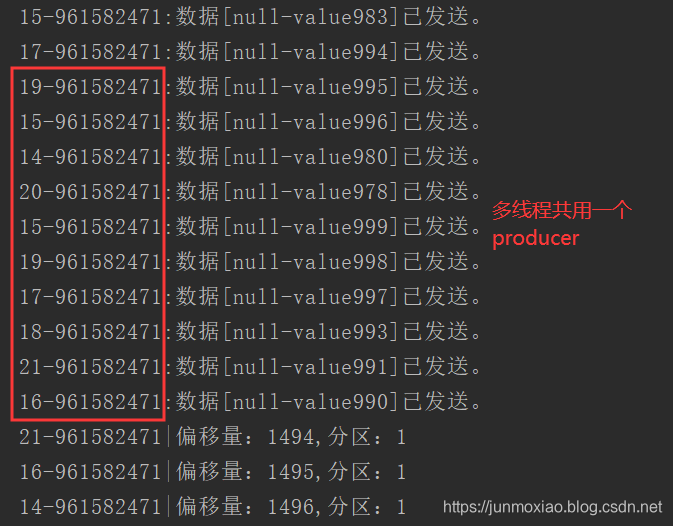

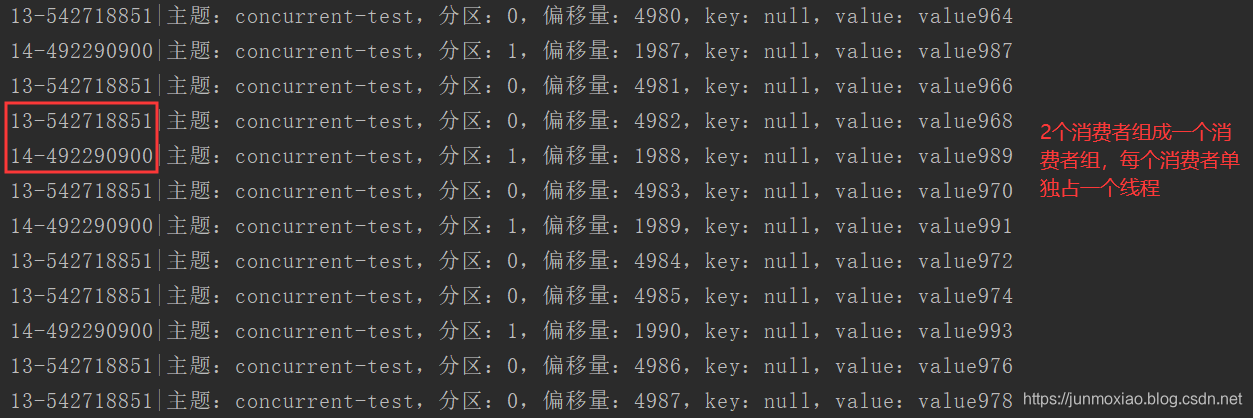

Results

Producer

Consumer

Reference: King, "Notes - Kafka" (笔记-Kafka)