机器翻译和数据集

机器翻译(MT):将一段文本从一种语言自动翻译为另一种语言,用神经网络解决这个问题通常称为神经机器翻译(NMT)。 主要特征:输出是单词序列而不是单个单词。 输出序列的长度可能与源序列的长度不同。

数据预处理

将数据集清洗、转化为神经网络的输入minbatch

with open('/home/kesci/input/fraeng6506/fra.txt', 'r') as f:

raw_text = f.read()

print(raw_text[0:1000])

def preprocess_raw(text):

text = text.replace('\u202f', ' ').replace('\xa0', ' ')

out = ''

for i, char in enumerate(text.lower()):

if char in (',', '!', '.') and i > 0 and text[i-1] != ' ':

out += ' '

out += char

return out

text = preprocess_raw(raw_text)

print(text[0:1000])

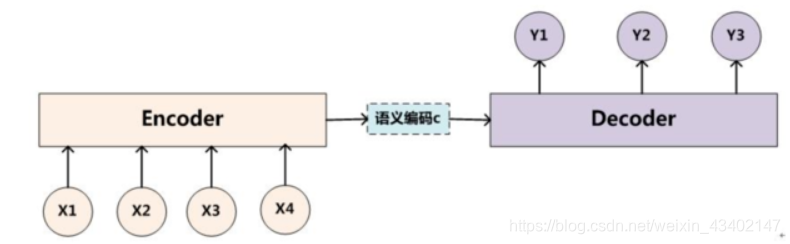

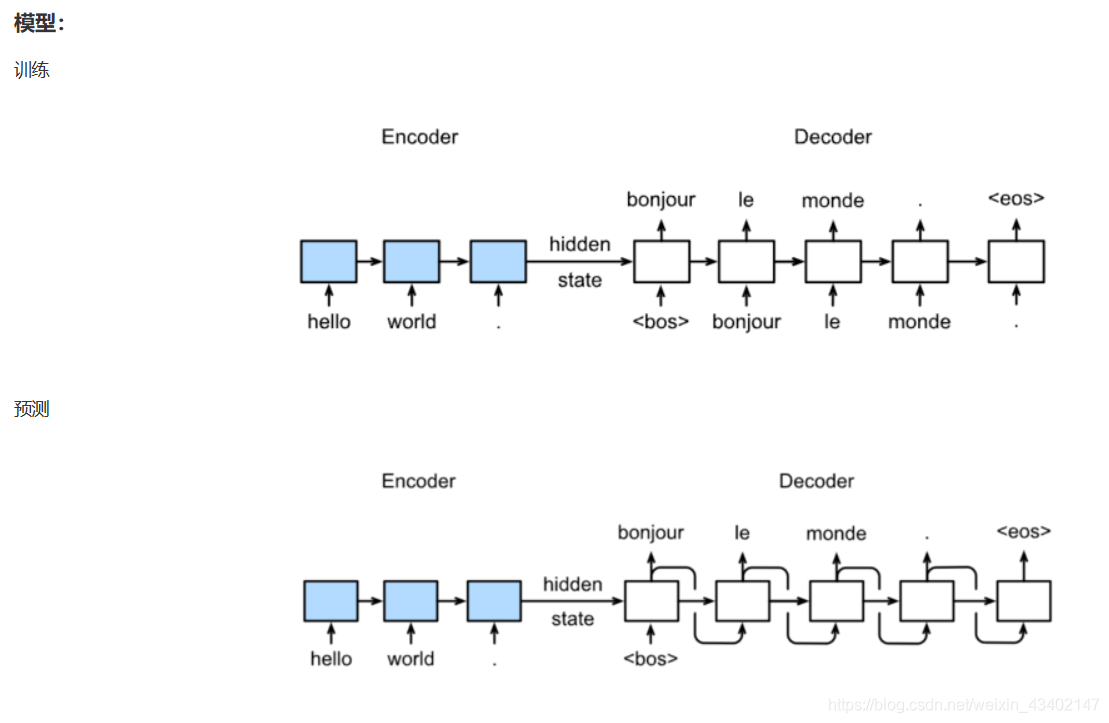

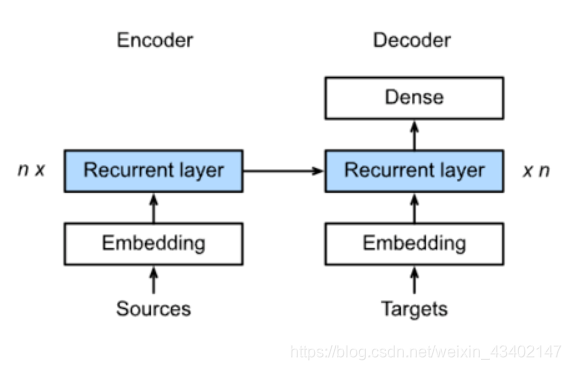

Encoder-Decoder

class Encoder(nn.Module):

def __init__(self, **kwargs):

super(Encoder, self).__init__(**kwargs)

def forward(self, X, *args):

raise NotImplementedError

class Decoder(nn.Module):

def __init__(self, **kwargs):

super(Decoder, self).__init__(**kwargs)

def init_state(self, enc_outputs, *args):

raise NotImplementedError

def forward(self, X, state):

raise NotImplementedError

class EncoderDecoder(nn.Module):

def __init__(self, encoder, decoder, **kwargs):

super(EncoderDecoder, self).__init__(**kwargs)

self.encoder = encoder

self.decoder = decoder

def forward(self, enc_X, dec_X, *args):

enc_outputs = self.encoder(enc_X, *args)

dec_state = self.decoder.init_state(enc_outputs, *args)

return self.decoder(dec_X, dec_state)

Sequence to Sequence模型

class Seq2SeqEncoder(d2l.Encoder):

def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,

dropout=0, **kwargs):

super(Seq2SeqEncoder, self).__init__(**kwargs)

self.num_hiddens=num_hiddens

self.num_layers=num_layers

self.embedding = nn.Embedding(vocab_size, embed_size)

self.rnn = nn.LSTM(embed_size,num_hiddens, num_layers, dropout=dropout)

def begin_state(self, batch_size, device):

return [torch.zeros(size=(self.num_layers, batch_size, self.num_hiddens), device=device),

torch.zeros(size=(self.num_layers, batch_size, self.num_hiddens), device=device)]

def forward(self, X, *args):

X = self.embedding(X) # X shape: (batch_size, seq_len, embed_size)

X = X.transpose(0, 1) # RNN needs first axes to be time

# state = self.begin_state(X.shape[1], device=X.device)

out, state = self.rnn(X)

# The shape of out is (seq_len, batch_size, num_hiddens).

# state contains the hidden state and the memory cell

# of the last time step, the shape is (num_layers, batch_size, num_hiddens)

return out, state

class Seq2SeqDecoder(d2l.Decoder):

def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,

dropout=0, **kwargs):

super(Seq2SeqDecoder, self).__init__(**kwargs)

self.embedding = nn.Embedding(vocab_size, embed_size)

self.rnn = nn.LSTM(embed_size,num_hiddens, num_layers, dropout=dropout)

self.dense = nn.Linear(num_hiddens,vocab_size)

def init_state(self, enc_outputs, *args):

return enc_outputs[1]

def forward(self, X, state):

X = self.embedding(X).transpose(0, 1)

out, state = self.rnn(X, state)

# Make the batch to be the first dimension to simplify loss computation.

out = self.dense(out).transpose(0, 1)

return out, state

Beam Search