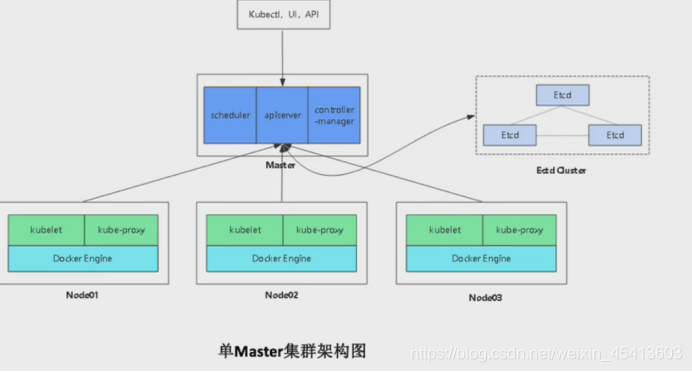

192.168.74.229 k8s-master

kube-apiserver kube-controller-manager kube-scheduler etcd

192.168.74.238 k8s-node1 kubelet kube-proxy docker flannel

192.168.74.248 k8s-node2

https://github.com/kubernetes/kubernetes/tags

I. Pre-installation preparation

1. Disable the firewall, SELinux, and swap

systemctl stop firewalld && systemctl disable firewalld

iptables -F -t nat && iptables -F

sed -i 's/enforcing/disabled/' /etc/selinux/config && setenforce 0

sed -i '/swap/ s/^/# /' /etc/fstab && swapoff -a
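Before editing the real /etc/fstab, the swap edit can be rehearsed on a scratch copy. The sketch below is only an illustration: `comment_out_swap` is a hypothetical helper name, the two fstab lines are made-up sample data, and the sed expression is a variant of the one above that skips lines already commented out, so running it twice is harmless.

```shell
# comment_out_swap FILE: prefix uncommented lines that mention "swap" with "# ".
# Hypothetical helper; relies on GNU sed's -i option.
comment_out_swap() {
    sed -i '/^#/!s/^\(.*swap.*\)$/# \1/' "$1"
}

# Sample data standing in for /etc/fstab (assumed, not taken from a real host).
fstab_copy=$(mktemp)
printf '%s\n' \
    '/dev/mapper/centos-root / xfs defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' > "$fstab_copy"

comment_out_swap "$fstab_copy"
comment_out_swap "$fstab_copy"   # the second run changes nothing
cat "$fstab_copy"
```

Once the result looks right, the same function could be pointed at /etc/fstab, followed by swapoff -a.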

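2. Set the hostname (run each command on its own machine; hostnamectl makes the change persistent)

hostnamectl set-hostname k8s-master

hostnamectl set-hostname k8s-node1

hostnamectl set-hostname k8s-node2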

3. Configure a static IP (the steps are identical on every host, so only one is shown)

vim /etc/sysconfig/network-scripts/ifcfg-ens33

BOOTPROTO=static

NETMASK=255.255.255.0

GATEWAY=192.168.74.2

IPADDR=192.168.74.229

systemctl restart network

vim /etc/resolv.conf

nameserver 202.106.0.20

nameserver 192.168.74.2

4. Configure name resolution in /etc/hosts

192.168.74.229 k8s-master

192.168.74.238 k8s-node1

192.168.74.248 k8s-node2
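Since the same three entries go into /etc/hosts on every machine, appending them with a small idempotent helper avoids duplicates when the step is repeated. This is a sketch under assumptions: `add_host_entry` is a hypothetical name, and a temporary file stands in for /etc/hosts.

```shell
# add_host_entry FILE IP NAME: append "IP NAME" unless that exact line exists.
add_host_entry() {
    grep -qxF "$2 $3" "$1" 2>/dev/null || printf '%s %s\n' "$2" "$3" >> "$1"
}

hosts_file=$(mktemp)   # stand-in for /etc/hosts
for round in 1 2; do   # the second round adds nothing
    add_host_entry "$hosts_file" 192.168.74.229 k8s-master
    add_host_entry "$hosts_file" 192.168.74.238 k8s-node1
    add_host_entry "$hosts_file" 192.168.74.248 k8s-node2
done
cat "$hosts_file"
```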

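5. Configure time synchronization

# Set the system time zone to Asia/Shanghai

timedatectl set-timezone Asia/Shanghai

# Keep the hardware clock (RTC) in UTC

timedatectl set-local-rtc 0

# Restart the services that depend on the system time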

systemctl restart rsyslog

systemctl restart crond

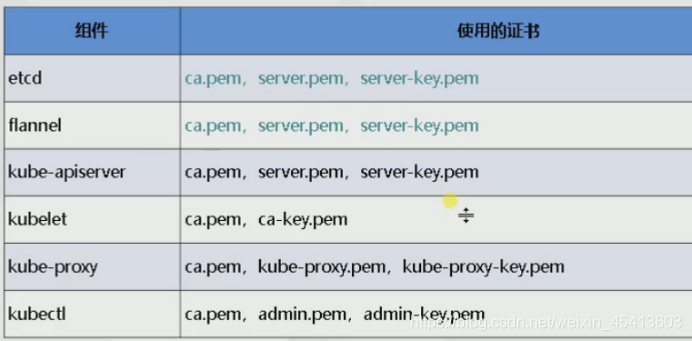

II. Issue certificates

1. Issue certificates for etcd

mkdir -p k8s/{etcd-cert,k8s-cert}

cd k8s/etcd-cert/

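Two scripts are used here

# Script 1

# cfssl.sh

# Certificates can be issued with either openssl or cfssl; cfssl is used here

# cfssl is a certificate tool driven by JSON files; the commands below download it and make it executable

# They can also be run directly; I put them in a script and ran that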

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

Script 2

etcd-cert.sh

# CA configuration; the lifetime is arbitrary, 87600h here is 10 years

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json <<EOF # CA certificate signing request

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

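# Step 1: paste and run everything above; it produces the two JSON files

cfssl gencert -initca ca-csr.json | cfssljson -bare ca - # generate the CA certificate

# Step 2: generate the etcd server certificate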

#-----------------------

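# Certificate for etcd. Production clusters usually run 5 members; this one has 3.

# Members talk to each other by IP, so "hosts" must list the IP of every etcd host.

# Keep comments out of the JSON itself; cfssl cannot parse them.

cat > server-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"192.168.74.229",

"192.168.74.238",

"192.168.74.248"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [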

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
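Because the "hosts" list must match the etcd members exactly, one way to avoid a mismatch is to generate server-csr.json from a single list of IPs. The following is a minimal sketch, not part of the original scripts: `render_etcd_csr` is a hypothetical helper, and the field values are copied from the request above.

```shell
# render_etcd_csr IP...: print a server-csr.json with one "hosts" entry per IP.
render_etcd_csr() {
    hosts=""
    for ip in "$@"; do
        hosts="${hosts:+$hosts, }\"$ip\""
    done
    cat <<EOF
{
  "CN": "etcd",
  "hosts": [ $hosts ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "L": "BeiJing", "ST": "BeiJing" } ]
}
EOF
}

csr=$(render_etcd_csr 192.168.74.229 192.168.74.238 192.168.74.248)
printf '%s\n' "$csr"
```

Redirecting the output to server-csr.json would feed the cfssl gencert command above unchanged.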

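III. Deploy the etcd cluster

Download the release package from: https://github.com/etcd-io/etcd/releases

The linux-amd64 build is usually the right one.

mkdir soft # create a directory and upload the etcd tar.gz package into it

mkdir -p /opt/etcd/{cfg,ssl,bin} # directories for the configuration file, certificates, and binaries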

mv etcd etcdctl /opt/etcd/bin/

cd k8s/

chmod +x etcd.sh # this script deploys etcd

#!/bin/bash

# example: ./etcd.sh etcd01 192.168.1.10 etcd02=https://192.168.1.11:2380,etcd03=https://192.168.1.12:2380

# The script takes positional arguments: the name (etcd01) and IP of the member being deployed, then the second and third etcd members

ETCD_NAME=$1

ETCD_IP=$2

ETCD_CLUSTER=$3

WORK_DIR=/opt/etcd

cat <<EOF >$WORK_DIR/cfg/etcd

#[Member]

ETCD_NAME="${ETCD_NAME}"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

# peer (cluster) communication port

ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"

# client port for reading and writing data, comparable to MySQL's 3306

ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"

ETCD_INITIAL_CLUSTER="${ETCD_NAME}=https://${ETCD_IP}:2380,${ETCD_CLUSTER}"

# every member must use the same token to join the cluster

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

# create a new cluster

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

cat <<EOF >/usr/lib/systemd/system/etcd.service # systemd unit file for etcd

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=${WORK_DIR}/cfg/etcd

ExecStart=${WORK_DIR}/bin/etcd \

--name=\${ETCD_NAME} \

--data-dir=\${ETCD_DATA_DIR} \

--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=\${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=new \

--cert-file=${WORK_DIR}/ssl/server.pem \

--key-file=${WORK_DIR}/ssl/server-key.pem \

--peer-cert-file=${WORK_DIR}/ssl/server.pem \

--peer-key-file=${WORK_DIR}/ssl/server-key.pem \

--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \

--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF


systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd

./etcd.sh etcd01 192.168.74.229 etcd02=https://192.168.74.238:2380,etcd03=https://192.168.74.248:2380

# This fails because the certificates have not been copied yet, but the configuration files are generated; take a look

cat /opt/etcd/cfg/etcd

cat /usr/lib/systemd/system/etcd.service

In effect, the variables in /opt/etcd/cfg/etcd are substituted into etcd.service.

Copy the certificates and check them

cp /root/k8s/etcd-cert/{ca,server-key,server}.pem /opt/etcd/ssl/

ls /opt/etcd/ssl/

etcd can now be started (I configured etcd02 and etcd03 first, then started the members in the order 01, 02, 03)

systemctl restart etcd

# It cannot find the other two members yet, so etcd must be installed on them as well; copying with scp is enough

scp -r /opt/etcd k8s-node1:/opt/

scp -r /opt/etcd k8s-node2:/opt/

scp /usr/lib/systemd/system/etcd.service k8s-node1:/usr/lib/systemd/system

scp /usr/lib/systemd/system/etcd.service k8s-node2:/usr/lib/systemd/system

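# After copying, check that the files arrived intact and the paths are right; the paths are hard-coded, so etcd will not start if they differ

# The copied configuration still holds the first member's values, so edit it on each node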

vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.74.238:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.74.238:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.74.238:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.74.238:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.74.229:2380,etcd02=https://192.168.74.238:2380,etcd03=https://192.168.74.248:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

systemctl daemon-reload

systemctl start etcd

systemctl enable etcd

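# Do the same on etcd03; if etcd does not start, the edited configuration file is wrong

ETCD_NAME member name

ETCD_DATA_DIR data directory

ETCD_LISTEN_PEER_URLS listen address for peer (cluster) traffic

ETCD_LISTEN_CLIENT_URLS listen address for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS peer address advertised to the cluster

ETCD_ADVERTISE_CLIENT_URLS client address advertised to clients

ETCD_INITIAL_CLUSTER addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN cluster token

ETCD_INITIAL_CLUSTER_STATE state when joining: new creates a new cluster, existing joins an existing one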
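ETCD_INITIAL_CLUSTER must be identical on all three members, so deriving it from one list of name=IP pairs is less error-prone than editing it by hand on each node. A small sketch, assuming a hypothetical helper called `initial_cluster` and the peer port 2380 used throughout this guide:

```shell
# initial_cluster NAME=IP...: print the ETCD_INITIAL_CLUSTER value for these members.
initial_cluster() {
    out=""
    for pair in "$@"; do
        # ${pair%%=*} is the member name, ${pair#*=} is its IP
        out="${out:+$out,}${pair%%=*}=https://${pair#*=}:2380"
    done
    printf '%s\n' "$out"
}

cluster=$(initial_cluster etcd01=192.168.74.229 etcd02=192.168.74.238 etcd03=192.168.74.248)
echo "ETCD_INITIAL_CLUSTER=\"$cluster\""
```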

Check the health of the etcd cluster

/opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.74.229:2379,https://192.168.74.238:2379,https://192.168.74.248:2379" cluster-health

IV. Install Docker on the nodes

1. Install the dependencies

yum install -y yum-utils device-mapper-persistent-data lvm2

2. Download the repo file

wget -P /etc/yum.repos.d/ http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

3. Install and start Docker, then configure a registry mirror

yum install docker-ce -y

systemctl start docker

echo '{"registry-mirrors": ["https://h5d0mk6d.mirror.aliyuncs.com"]}' > /etc/docker/daemon.json

systemctl restart docker && systemctl enable docker

V. Deploy flannel

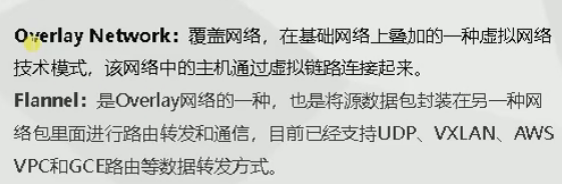

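Kubernetes pod networking is usually implemented in one of two ways: a tunnel (overlay) scheme or a routing scheme.

flannel is the common choice for clusters of up to about 100 nodes. It supports several encapsulation types and forwarding backends. Its route-table mode requires all nodes to be on the same LAN, while its tunnel modes also work across networks, including over the Internet, on top of whatever connectivity already exists. The tunnel scheme builds an overlay network: each existing packet is wrapped in another packet before it is sent, so both ends pay for an encapsulation and a decapsulation step, and the performance overhead is higher.

Calico is used for clusters of hundreds of nodes. It communicates using BGP, so it does not fit every network environment: the network must support BGP, and nodes learn pod IPs through the routing table. Large companies generally use Calico. The routing scheme forwards by routing table without encapsulating or decapsulating packets, so it performs well; it works at layer 3.

Overlay network: a virtual network layered on top of the underlying network, in which hosts are connected by virtual links.

Flannel: a kind of overlay network. It encapsulates the original packet inside another network packet for routing and forwarding, and currently supports UDP, VXLAN, AWS VPC, GCE routes, and other forwarding backends.

1. Write the network configuration key into etcd. It defines the large subnet for the Kubernetes nodes; flannel carves a smaller subnet out of it for each node. The forwarding backend here is vxlan (it can also be changed to host-gw, and there is a mode that combines vxlan and host-gw; see the networking notes for details)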

/opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.74.229:2379,https://192.168.74.238:2379,https://192.168.74.248:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

Use get to read back the configured subnet range (get takes only the key)

/opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.74.229:2379,https://192.168.74.238:2379,https://192.168.74.248:2379" get /coreos.com/network/config

Binary download location

https://github.com/coreos/flannel/releases

2. Deploy and configure flannel

Upload the binary package and the script

cat flannel.sh # flannel runs on the nodes; it can also be deployed on the master if needed

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/flanneld # generate the configuration file

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/opt/etcd/ssl/ca.pem \

-etcd-certfile=/opt/etcd/ssl/server.pem \

-etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service # generate the systemd unit file

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

# mk-docker-opts.sh (ExecStartPost above) is the script that hands the allocated subnet to Docker

[Install]

WantedBy=multi-user.target

EOF

cat <<EOF >/usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd \$DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP \$MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

systemctl restart docker

mkdir /opt/kubernetes/{bin,cfg,ssl} -p

tar zxvf flannel-v0.10.0-linux-amd64.tar.gz

mv flanneld mk-docker-opts.sh /opt/kubernetes/bin

chmod +x flannel.sh

./flannel.sh https://192.168.74.229:2379,https://192.168.74.238:2379,https://192.168.74.248:2379

# these are the addresses of the three etcd cluster members

systemctl start flanneld && systemctl enable flanneld

systemctl restart docker

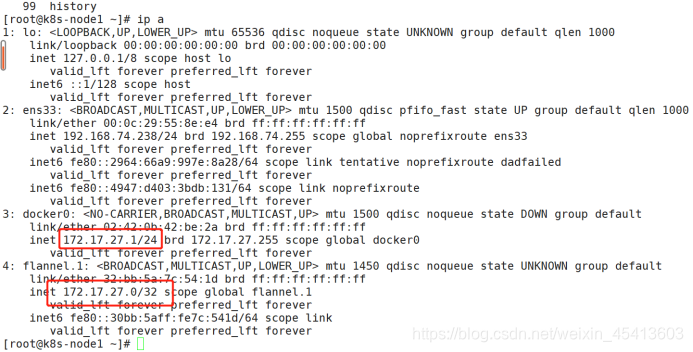

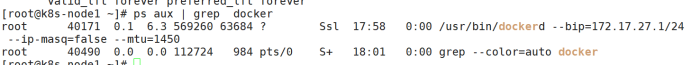

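Then check the IP addresses

They should be in the same network, and each node should have a different subnet

To verify: containers on different nodes can reach one another, and a node can reach the containers on another node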
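A quick way to confirm that Docker picked up the flannel subnet is to read the --bip value out of /run/flannel/subnet.env and check that it falls inside 172.17.0.0/16. The sketch below makes two assumptions: `bip_of` is a hypothetical helper, and the sample line only imitates what mk-docker-opts.sh writes, so compare it with the real file on a node.

```shell
# bip_of FILE: print the address/prefix given to --bip in a Docker options file.
bip_of() {
    sed -n 's/.*--bip=\([0-9.]*\/[0-9]*\).*/\1/p' "$1"
}

# Assumed sample content; on a node, pass /run/flannel/subnet.env instead.
env_file=$(mktemp)
printf '%s\n' 'DOCKER_NETWORK_OPTIONS=" --bip=172.17.38.1/24 --ip-masq=false --mtu=1450"' > "$env_file"

bip=$(bip_of "$env_file")
case "$bip" in
    172.17.*) echo "docker0 address $bip is inside 172.17.0.0/16" ;;
    *)        echo "unexpected docker0 address: $bip" ;;
esac
```

Running it on both nodes should print two different /24 subnets from the same /16.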

Deploy flannel on node2

[root@k8s-node1 ~]# scp -r /opt/kubernetes/ root@192.168.74.248:/opt

[root@k8s-node1 ~]# scp /usr/lib/systemd/system/{flanneld,docker}.service root@192.168.74.248:/usr/lib/systemd/system/

[root@k8s-node2 ~]# systemctl start flanneld

[root@k8s-node2 ~]# systemctl restart docker

Check with ip a

VI. Deploy the master

1. Generate the kube-apiserver configuration

mkdir -p /opt/kubernetes/{bin,cfg,ssl}

[root@k8s-master bin]# cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin

[root@k8s-master bin]# pwd

/root/soft/kubernetes/server/bin

./apiserver.sh 192.168.74.229 https://192.168.74.229:2379,https://192.168.74.238:2379,https://192.168.74.248:2379

Note: apiserver.sh is a script, not the kube-apiserver binary

Configure the Kubernetes log path

vim /opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=false \

--log-dir=/opt/kubernetes/logs \

mkdir /opt/kubernetes/logs

apiserver.sh

#!/bin/bash

MASTER_ADDRESS=$1

ETCD_SERVERS=$2

cat <<EOF >/opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \\

--v=4 \\

--etcd-servers=${ETCD_SERVERS} \\

--bind-address=${MASTER_ADDRESS} \\

--secure-port=6443 \\

--advertise-address=${MASTER_ADDRESS} \\

--allow-privileged=true \\

--service-cluster-ip-range=10.0.0.0/24 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--kubelet-https=true \\

--enable-bootstrap-token-auth \\

--token-auth-file=/opt/kubernetes/cfg/token.csv \\

--service-node-port-range=30000-50000 \\

--tls-cert-file=/opt/kubernetes/ssl/server.pem \\

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\

--client-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--etcd-cafile=/opt/etcd/ssl/ca.pem \\

--etcd-certfile=/opt/etcd/ssl/server.pem \\

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl restart kube-apiserver

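Configuration flags

--logtostderr log to stderr

--v log verbosity level

--etcd-servers etcd cluster addresses

--bind-address listen address

--secure-port HTTPS port

--advertise-address address advertised to the cluster

--allow-privileged allow privileged containers

--service-cluster-ip-range virtual IP range for Services

--enable-admission-plugins admission control plugins

--authorization-mode authorization modes; enables RBAC and Node authorization

--enable-bootstrap-token-auth enables TLS bootstrapping, covered later

--token-auth-file token file

--service-node-port-range port range allocated to NodePort Services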

2. Generate the certificates

The certificate generation script (k8s-cert.sh)

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

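# Certificate for the API server. 10.0.0.1 is the first address of the Service range.

# List the master and load balancer IPs (spare addresses can be added); node IPs are not needed.

cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.74.229",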

"192.168.74.231",

"192.168.74.232",

"192.168.74.233",

"192.168.74.234",

"192.168.74.235",

"192.168.74.236",

"192.168.74.237",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

#-----------------------

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

#-----------------------

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

bash k8s-cert.sh

cp ca.pem server.pem server-key.pem ca-key.pem /opt/kubernetes/ssl

3. Generate the token

# Create the TLS bootstrapping token

#BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

BOOTSTRAP_TOKEN=0fb61c46f8991b718eb38d27b605b008 # use the command above, or write your own value in the same format

cat > token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF
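For a fresh cluster it is safer to generate a token than to reuse the one printed in this guide. A minimal sketch follows; `new_bootstrap_token` is a hypothetical helper name, and the output goes to a temporary file rather than the real token.csv.

```shell
# new_bootstrap_token: print 16 random bytes as 32 lowercase hex characters.
new_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

token=$(new_bootstrap_token)
token_file=$(mktemp)   # stand-in for token.csv
# Columns: token, user name, user ID, group
printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "$token_file"
cat "$token_file"
```

The same value has to be used as BOOTSTRAP_TOKEN when the bootstrap kubeconfig is generated later.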


The kubelet presents this token when it asks for a certificate to be issued.

mv token.csv /opt/kubernetes/cfg

systemctl restart kube-apiserver

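By default the apiserver listens on ports 8080 and 6443

4. Deploy the scheduler

./scheduler.sh 127.0.0.1

The log directory can be changed here too, in the same way as for the apiserver

The script: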

#!/bin/bash

MASTER_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-scheduler

KUBE_SCHEDULER_OPTS="--logtostderr=true \\

--v=4 \\

--master=${MASTER_ADDRESS}:8080 \\

--leader-elect"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler

ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl restart kube-scheduler

--master connects to the local apiserver

--leader-elect elects a leader automatically when several instances of the component run (HA)

5. Deploy the controller-manager

./controller-manager.sh 127.0.0.1

The script:

#!/bin/bash

MASTER_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \\

--v=4 \\

--master=${MASTER_ADDRESS}:8080 \\

--leader-elect=true \\

--address=127.0.0.1 \\

--service-cluster-ip-range=10.0.0.0/24 \\

--cluster-name=kubernetes \\

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--root-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--experimental-cluster-signing-duration=87600h0m0s"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager

ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl restart kube-controller-manager

cp soft/kubernetes/server/bin/kubectl /usr/bin

Check the component status

kubectl get cs

VII. Deploy the nodes

1. Generate the authentication files

[root@k8s-master1 cfg]# cat token.csv

aa70bb385b5a864e477b8c641fbef3d0,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

Bind the kubelet-bootstrap user to the system cluster role

[root@k8s-master1 ~]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

APISERVER=$1

SSL_DIR=$2

BOOTSTRAP_TOKEN=0fb61c46f8991b718eb38d27b605b008

# Create the kubelet bootstrapping kubeconfig

export KUBE_APISERVER="https://$APISERVER:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=$SSL_DIR/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=bootstrap.kubeconfig

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=$SSL_DIR/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=$SSL_DIR/kube-proxy.pem \

--client-key=$SSL_DIR/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

bash kubeconfig.sh 192.168.74.229 /root/k8s/k8s-cert # master IP and certificate directory

scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.74.238:/opt/kubernetes/cfg

scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.74.248:/opt/kubernetes/cfg

2. Deploy the kubelet

bash kubelet.sh 192.168.74.238

The script:

#!/bin/bash

NODE_ADDRESS=$1

DNS_SERVER_IP=${2:-"10.0.0.2"}

cat <<EOF >/opt/kubernetes/cfg/kubelet

KUBELET_OPTS="--logtostderr=true \\

--v=4 \\

--hostname-override=${NODE_ADDRESS} \\

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\

--config=/opt/kubernetes/cfg/kubelet.config \\

--cert-dir=/opt/kubernetes/ssl \\

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

EOF

cat <<EOF >/opt/kubernetes/cfg/kubelet.config

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: ${NODE_ADDRESS}

port: 10250

readOnlyPort: 10255

cgroupDriver: cgroupfs

clusterDNS:

- ${DNS_SERVER_IP}

clusterDomain: cluster.local.

failSwapOn: false

authentication:

anonymous:

enabled: true

EOF

cat <<EOF >/usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet

ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet

The kubelet may not start at this point

Change the log path

vim /opt/kubernetes/cfg/kubelet

KUBELET_OPTS="--logtostderr=false \

--log-dir=/opt/kubernetes/logs \

mkdir /opt/kubernetes/logs

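Configuration file

Flag reference:

--hostname-override host name shown in the cluster

--kubeconfig location of the kubeconfig file; it is generated automatically

--bootstrap-kubeconfig the bootstrap.kubeconfig file generated earlier

--cert-dir directory where issued certificates are stored

--pod-infra-container-image image of the pause container that holds each Pod's network namespace

Copy the binaries to the nodes:

scp kubelet kube-proxy 192.168.74.238:/opt/kubernetes/bin/

scp kubelet kube-proxy 192.168.74.248:/opt/kubernetes/bin/

Then start the kubelet: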

systemctl restart kubelet

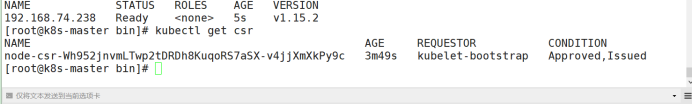

Approve the certificate request on the master

kubectl get csr

kubectl certificate approve node-csr-Wh952jnvmLTwp2tDRDh8KuqoRS7aSX-v4jjXmXkPy9c
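Copying the CSR name by hand gets tedious with more nodes. The pending names can be filtered out of the kubectl get csr listing instead. This is a sketch only: `pending_csrs` is a hypothetical helper, and the sample listing is invented to resemble the command's table output (condition in the last column), so check it against the real output of your kubectl version.

```shell
# pending_csrs: read a "kubectl get csr" table on stdin and
# print the names of the requests whose last column is "Pending".
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Invented sample listing (assumption), used here instead of a live cluster.
sample='NAME AGE REQUESTOR CONDITION
node-csr-aaa 2m kubelet-bootstrap Pending
node-csr-bbb 9m kubelet-bootstrap Approved,Issued'

pending=$(printf '%s\n' "$sample" | pending_csrs)
echo "$pending"

# On a live master the filter could feed the approval, for example:
#   kubectl get csr | pending_csrs | xargs kubectl certificate approve
```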

3. Deploy kube-proxy

bash proxy.sh 192.168.74.238

The argument is the address of the current node

The script:

#!/bin/bash

NODE_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-proxy

KUBE_PROXY_OPTS="--logtostderr=true \\

--masquerade-all=true \\

--v=4 \\

--hostname-override=${NODE_ADDRESS} \\

--cluster-cidr=10.0.0.0/24 \\

--proxy-mode=ipvs \\

--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy

ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-proxy

systemctl restart kube-proxy

4. Add another node

Copy everything that is needed with scp

scp -r /opt/kubernetes/ 192.168.74.248:/opt/

scp /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.74.248:/usr/lib/systemd/system/

Adjust the configuration

Change the node-specific IP

[root@k8s-node2 cfg]# pwd

/opt/kubernetes/cfg

[root@k8s-node2 cfg]# grep 238 *

flanneld:FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.74.229:2379,https://192.168.74.238:2379,https://192.168.74.248:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem"

kubelet:--hostname-override=192.168.74.238

kubelet.config:address: 192.168.74.238

kube-proxy:--hostname-override=192.168.74.238

In the kubelet, kubelet.config, and kube-proxy files, change the IP to the current node's IP (the flanneld match is only the etcd endpoint list and stays as it is)

[root@k8s-node2 cfg]# grep 248 *

flanneld:FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.74.229:2379,https://192.168.74.238:2379,https://192.168.74.248:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem"

kubelet:--hostname-override=192.168.74.248

kubelet.config:address: 192.168.74.248

kube-proxy:--hostname-override=192.168.74.248
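The three edits can be scripted so that only the node-specific files are touched and the etcd endpoint list in flanneld is left alone. A sketch under assumptions: `retarget_node_cfg` is a hypothetical helper, a temporary directory stands in for /opt/kubernetes/cfg, and GNU sed's -i option is required.

```shell
# retarget_node_cfg DIR OLD_IP NEW_IP: replace OLD_IP with NEW_IP in the
# node-specific files only (kubelet, kubelet.config, kube-proxy).
retarget_node_cfg() {
    old_re=$(printf '%s' "$2" | sed 's/\./\\./g')   # escape dots for sed
    for f in kubelet kubelet.config kube-proxy; do
        sed -i "s/$old_re/$3/g" "$1/$f"
    done
}

# Scratch copy with one representative line per file.
cfg=$(mktemp -d)
printf '%s\n' '--hostname-override=192.168.74.238' > "$cfg/kubelet"
printf '%s\n' 'address: 192.168.74.238' > "$cfg/kubelet.config"
printf '%s\n' '--hostname-override=192.168.74.238' > "$cfg/kube-proxy"
printf '%s\n' '--etcd-endpoints=https://192.168.74.238:2379' > "$cfg/flanneld"

retarget_node_cfg "$cfg" 192.168.74.238 192.168.74.248
grep -r '192.168.74' "$cfg"
```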

After the change,

delete the certificates that were copied over (they were issued to the first node)

[root@k8s-node2 ssl]# pwd

/opt/kubernetes/ssl

[root@k8s-node2 ssl]# rm -rf *

Start the services

systemctl restart kubelet

systemctl restart kube-proxy

Approve the certificate on the master

kubectl certificate approve node-csr-bbLj42R2XtlG00cFDDiqXJNQSWmh0NaVP2jzJ3Hb6kY

Create the kubeconfig file for the cluster user

kubectl config set-cluster kubernetes --kubeconfig=/root/test.conf --embed-certs=true --certificate-authority=/opt/kubernetes/ssl/ca.pem --server="https://192.168.74.229:6443"

kubectl config set-credentials kubernetes-admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=/root/test.conf

admin.pem is the cluster user certificate that exists once the cluster certificates have been generated

kubectl config set-context kubernetes-admin@kubernetes --cluster=kubernetes --user=kubernetes-admin --kubeconfig=/root/test.conf

mv test.conf .kube/config

kubectl config use-context kubernetes-admin@kubernetes

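Bind the user so that kubectl exec can enter containers (this gives unauthenticated requests cluster-admin rights, so use it only in a lab)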

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

If the ingress controller does not start, edit the kube-proxy configuration file on every node as follows

KUBE_PROXY_OPTS="--logtostderr=true \

--masquerade-all=true \

--v=4 \

--hostname-override=192.168.190.134 \

--cluster-cidr=10.0.0.0/24 \

--proxy-mode=ipvs \

--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"