Cloning the other nodes after installing CentOS 7

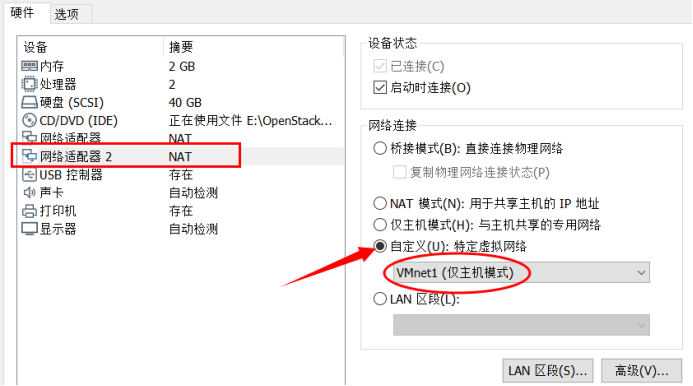

First, in VMware, right-click the controller VM --> Settings --> Add --> Network Adapter, then configure it as follows:

In VMware:

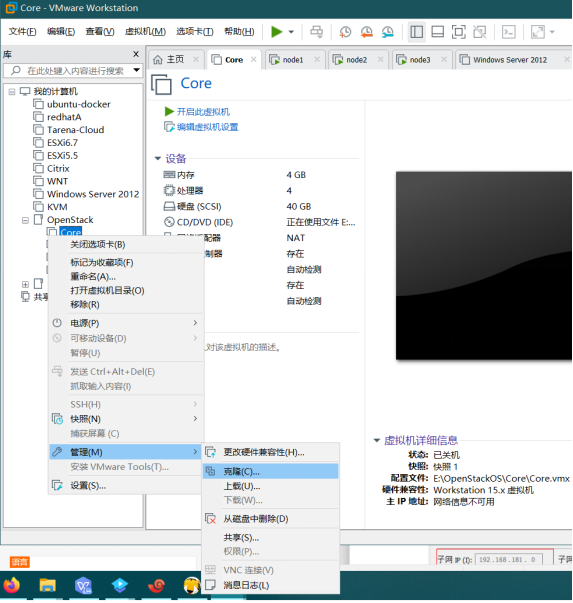

Click: Clone --> Next --> The current state in the virtual machine --> Create a full clone --> Next (clone controller, compute, storage)

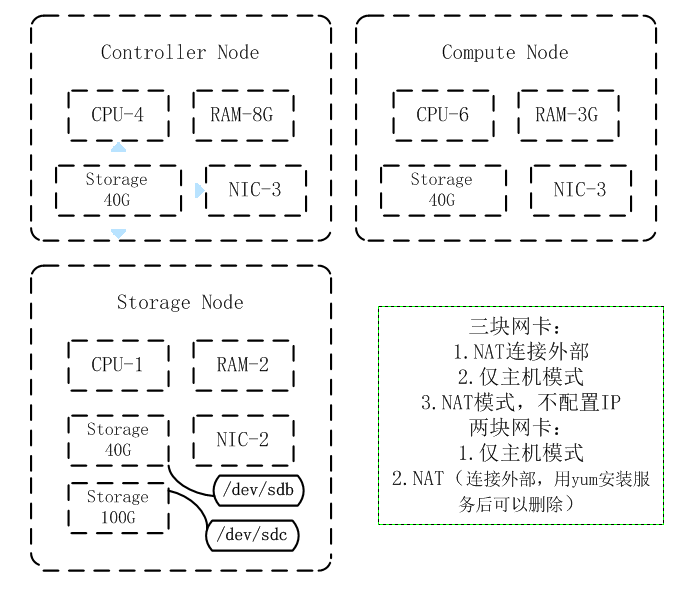

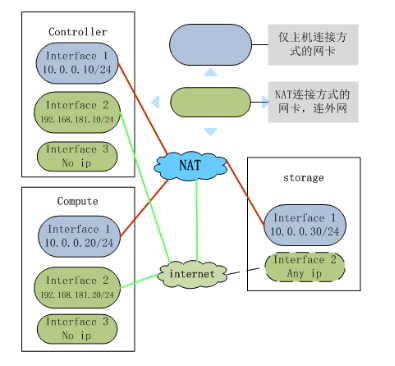

Overall hardware architecture:

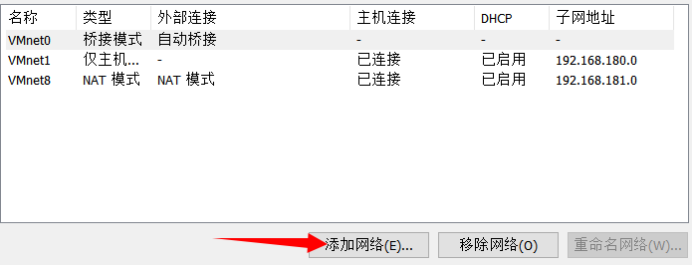

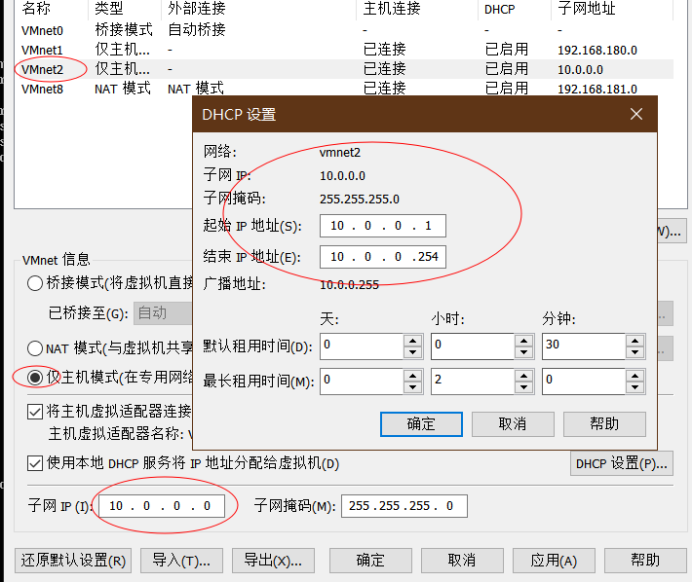

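Adding a virtual network

Steps: open VMware --> Edit --> Virtual Network Editor --> Change Settings --> Add Network (a spare network)

Once it has been added you can change its subnet or leave it as is; here I changed it to the 10.0.0.0 subnet.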

Final result:

Controller

Compute

Storage

Changing the hostname on Linux

- Using commands

[root@Core ~]# hostnamectl set-hostname compute

[root@Core ~]# hostname compute

[root@Core ~]# exit   # log in again and the new hostname is shown

- Editing the configuration file

[root@Core ~]# echo "storage" > /etc/hostname

[root@Core ~]# hostname storage

[root@Core ~]# exit   # log in again and the new hostname is shown

Finally, make sure that after a reboot no two hosts share a hostname and that the following three hosts exist:

controller compute storage
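To check the uniqueness requirement from one place, here is a minimal sketch. `check_unique_hostnames` is a hypothetical helper, not part of CentOS; it assumes you have collected each node's hostname yourself (for example by running `hostname` on every node) and passes only if no name appears twice.

```shell
# Hypothetical helper: succeeds (exit 0) only if every argument is unique.
# Pass it the hostnames collected from all nodes.
check_unique_hostnames() {
    local dups
    # sort the names, then let uniq -d print each name that occurs more than once
    dups=$(printf '%s\n' "$@" | sort | uniq -d)
    if [ -n "$dups" ]; then
        echo "duplicate hostnames: $dups" >&2
        return 1
    fi
    return 0
}
```

For example, `check_unique_hostnames controller compute storage` succeeds, while `check_unique_hostnames controller compute compute` prints the duplicate name and returns 1.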

Configuring static IP addresses on Linux

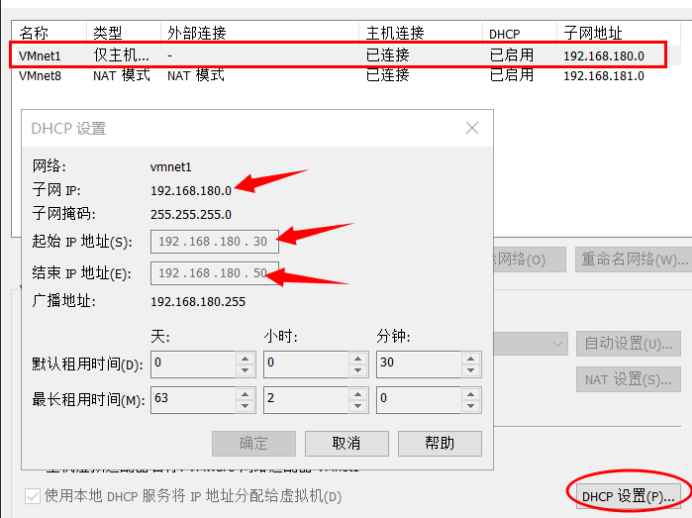

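First, in the VMware menu, click Edit --> Virtual Network Editor and note the NAT subnet settings (subnet mask, gateway, and the start and end of the IP range).

The figure below shows the adapter that can reach the external network:

The figure below shows the internal-only adapter. I set up two subnets; this one is not used in the lab:

- Configuring IP addresses with nmcli (this first method is recommended)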

Configure the first NIC for external access:

[root@controller ~]# nmcli connection show

NAME UUID TYPE DEVICE

ens33 3a90c11e-a36f-401e-ba9d-e7961cea63ca ethernet ens33

[root@controller ~]# nmcli connection modify ens33 ipv4.method manual \

> ipv4.addresses 192.168.181.10/24 ipv4.gateway 192.168.181.2 \

> ipv4.dns 8.8.8.8 autoconnect yes

Verify:

[root@controller ~]# nmcli connection up ens33

[root@controller ~]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.181.10 netmask 255.255.255.0 broadcast 192.168.181.255

[root@controller ~]# ping -c 3 g.cn

PING g.cn (203.208.50.63) 56(84) bytes of data.

64 bytes from g.cn (203.208.50.63): icmp_seq=1 ttl=128 time=40.8 ms

64 bytes from g.cn (203.208.50.63): icmp_seq=2 ttl=128 time=41.6 ms

64 bytes from g.cn (203.208.50.63): icmp_seq=3 ttl=128 time=41.6 ms

Configure the second NIC for the internal network:

[root@controller ~]# nmcli connection add con-name ens37 ifname ens37 type ethernet ipv4.method manual ipv4.addresses 10.0.0.10/24 autoconnect yes

[root@controller ~]# nmcli connection up ens37

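Finally, make sure that after a reboot each host has the following IP addresses:

| Host | External IP (ens33) | Internal IP (ens37) |
| --- | --- | --- |
| controller | 192.168.181.10 | 10.0.0.10 |
| compute | 192.168.181.20 | 10.0.0.20 |
| storage | | 10.0.0.30 |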
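The addressing plan above can also be written down once and turned into commands. A minimal sketch, assuming the interface names ens33/ens37 and the 192.168.181.0/24 and 10.0.0.0/24 subnets used in this article; `print_nmcli_plan` is a hypothetical helper that only prints the nmcli commands for one node, so you can review them before running anything. It uses the full property name `connection.autoconnect`.

```shell
# Hypothetical helper: print (not run) the nmcli commands that apply the
# addressing plan to one node. Review the output before executing it.
print_nmcli_plan() {
    local node=$1 octet
    # last octet of both addresses, taken from the plan
    case "$node" in
        controller) octet=10 ;;
        compute)    octet=20 ;;
        storage)    octet=30 ;;
        *) echo "unknown node: $node" >&2; return 1 ;;
    esac
    # storage has no external address in the plan, so skip ens33 for it
    if [ "$node" != storage ]; then
        echo "nmcli connection modify ens33 ipv4.method manual" \
             "ipv4.addresses 192.168.181.$octet/24 ipv4.gateway 192.168.181.2" \
             "ipv4.dns 8.8.8.8 connection.autoconnect yes"
    fi
    echo "nmcli connection add con-name ens37 ifname ens37 type ethernet" \
         "ipv4.method manual ipv4.addresses 10.0.0.$octet/24 connection.autoconnect yes"
}
```

On compute, `print_nmcli_plan compute` shows the commands and `print_nmcli_plan compute | bash` would run them. Apply it only once: running `nmcli connection add` again creates a second ens37 profile.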

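Configure the third NIC on controller and compute so that it comes up without an IP address:

[root@controller ~]# nmcli connection add con-name ens38 ifname ens38 type ethernet ipv4.method manual ipv4.addresses 192.168.181.41/24 autoconnect yes

[root@controller ~]# sed -i '2,3d;5,13d' /etc/sysconfig/network-scripts/ifcfg-ens38   # delete lines 2-3 and 5-13 (the address settings); check the file first, the numbers depend on its layout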
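Deleting lines by number depends on the exact layout NetworkManager wrote. A sketch of a more explicit alternative, assuming the classic initscripts `ifcfg` format: write the file for the third NIC directly with `BOOTPROTO=none` and no address. As far as I know this matches how the OpenStack installation guide for CentOS configures the provider interface. `write_noip_ifcfg` is a hypothetical helper.

```shell
# Hypothetical helper: write an ifcfg file that brings an interface up at boot
# without assigning it an IPv4 address (BOOTPROTO=none, no IPADDR/PREFIX).
# Usage: write_noip_ifcfg <config-dir> <device>
write_noip_ifcfg() {
    local dir=$1 dev=$2
    cat > "$dir/ifcfg-$dev" <<EOF
TYPE=Ethernet
NAME=$dev
DEVICE=$dev
BOOTPROTO=none
ONBOOT=yes
EOF
}
```

For example, `write_noip_ifcfg /etc/sysconfig/network-scripts ens38` followed by `systemctl restart network`. Creating the profile with `ipv4.method disabled` in nmcli should also leave the interface without an IPv4 address, but I have not checked that against the setup described here.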

- Setting the IP address by editing the configuration file

[root@controller ~]# sed -i 's/dhcp/static/' /etc/sysconfig/network-scripts/ifcfg-ens33

[root@controller ~]# sed -i '$a IPADDR="192.168.181.10"' /etc/sysconfig/network-scripts/ifcfg-ens33

[root@controller ~]# sed -i '$a PREFIX="24"' /etc/sysconfig/network-scripts/ifcfg-ens33

[root@controller ~]# sed -i '$a GATEWAY="192.168.181.2"' /etc/sysconfig/network-scripts/ifcfg-ens33

[root@controller ~]# sed -i '$a DNS1="114.114.114.114"' /etc/sysconfig/network-scripts/ifcfg-ens33

[root@controller ~]# systemctl restart network
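The `sed -i '$a ...'` commands above append a new line every time they run, so running them twice leaves duplicate keys in `ifcfg-ens33`. A minimal sketch of an idempotent alternative; `set_ifcfg_key` is a hypothetical helper that replaces the key if it already exists and appends it otherwise. It assumes GNU `sed -i` (as on CentOS 7) and values that contain no `|` or `&` characters.

```shell
# Hypothetical helper: set KEY="VALUE" in an ifcfg-style file,
# replacing an existing KEY line or appending one if there is none.
set_ifcfg_key() {
    local file=$1 key=$2 value=$3
    if grep -q "^${key}=" "$file"; then
        # replace the existing line in place ("|" as delimiter so "/" in values is fine)
        sed -i "s|^${key}=.*|${key}=\"${value}\"|" "$file"
    else
        # key not present yet: append it
        printf '%s="%s"\n' "$key" "$value" >> "$file"
    fi
}
```

For example, `set_ifcfg_key /etc/sysconfig/network-scripts/ifcfg-ens33 BOOTPROTO static`, then the same for IPADDR, PREFIX, GATEWAY and DNS1, followed by `systemctl restart network`.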

Verify:

[root@controller ~]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.181.10 netmask 255.255.255.0 broadcast 192.168.181.255

[root@controller ~]# ping -c 3 g.cn

PING g.cn (203.208.50.55) 56(84) bytes of data.

64 bytes from g.cn (203.208.50.55): icmp_seq=1 ttl=128 time=46.9 ms

64 bytes from g.cn (203.208.50.55): icmp_seq=2 ttl=128 time=46.8 ms

Make sure every node can ping g.cn.

Overall network architecture:

Configuring local name resolution

- Configure local name resolution on the controller node

[root@controller ~]# sed -i '3,100d' /etc/hosts

[root@controller ~]# sed -i 's/^:/#/' /etc/hosts

[root@controller ~]# echo "10.0.0.10 controller" >> /etc/hosts

[root@controller ~]# echo "10.0.0.20 compute" >> /etc/hosts

[root@controller ~]# echo "10.0.0.30 storage" >> /etc/hosts

[root@controller ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.10 controller

10.0.0.20 compute

10.0.0.30 storage
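The `echo ... >> /etc/hosts` commands above add a duplicate entry each time they are re-run. A minimal sketch of an idempotent version; `add_host_entry` is a hypothetical helper that appends `IP NAME` only when NAME is not already listed in the file (commented lines are ignored).

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already
# mapped there. It does not change an existing entry that uses a different IP.
# Usage: add_host_entry <hosts-file> <ip> <name>
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # awk exits 0 if NAME appears as a host-name field on a non-comment line
    if awk -v n="$name" '$1 ~ /^#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, `add_host_entry /etc/hosts 10.0.0.10 controller`, and likewise for compute and storage.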

Verify:

[root@controller ~]# ping compute

PING controller (192.168.181.10) 56(84) bytes of data.

64 bytes from controller (192.168.181.10): icmp_seq=1 ttl=64 time=0.036 ms

64 bytes from controller (192.168.181.10): icmp_seq=2 ttl=64 time=0.096 ms

Goal of the IP configuration on every node:

Make sure the nodes can all ping one another, for example:

[root@controller ~]# ping controller

PING controller (192.168.181.10) 56(84) bytes of data.

64 bytes from controller (192.168.181.10): icmp_seq=1 ttl=64 time=5.02 ms

64 bytes from controller (192.168.181.10): icmp_seq=2 ttl=64 time=0.940 ms

- Configure passwordless SSH login

[root@controller ~]# ssh-keygen   # without special requirements, just press Enter at every prompt

[root@controller ~]# ssh-copy-id compute

Are you sure you want to continue connecting (yes/no)? yes

root@compute's password:   # enter the root password of compute here

Verify:

[root@controller ~]# ssh root@compute

Last login: Fri Feb 14 19:00:39 2020

[root@compute ~]# exit   # return to the original node

Repeat the same steps for storage.

- Send the hosts file to the other nodes (compute, storage)

[root@controller ~]# scp /etc/hosts root@compute:/etc/hosts

hosts 100% 241 74.4KB/s 00:00

Repeat for storage (root@storage:/etc/hosts).
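Instead of repeating `ssh-copy-id` and `scp` by hand for each node, the two steps can be looped. A minimal sketch; `distribute_to_nodes` and `run_or_print` are hypothetical helpers, and setting `DRY_RUN=1` only prints the commands. Run it on controller after `ssh-keygen`; `ssh-copy-id` will still ask for each node's root password once.

```shell
# Hypothetical helper: run a command, or only print it when DRY_RUN=1.
run_or_print() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: copy the SSH key and /etc/hosts to each node given.
distribute_to_nodes() {
    local node
    for node in "$@"; do
        run_or_print ssh-copy-id "root@$node" || return 1
        run_or_print scp /etc/hosts "root@$node:/etc/hosts" || return 1
    done
}
```

For example, `DRY_RUN=1 distribute_to_nodes compute storage` to preview the four commands, then `distribute_to_nodes compute storage` to run them.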

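Disabling SELinux

- Disable SELinux temporarily (do the same on all three nodes)

[root@controller ~]# getenforce   # check the current mode

Enforcing

[root@controller ~]# setenforce 0   # switch SELinux to permissive mode until the next reboot

[root@controller ~]# getenforce   # check the mode again

Permissive

- Disable SELinux permanently (if you do this step, the temporary step above can be skipped)

[root@controller ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@controller ~]# reboot   # the permanent setting only takes effect after a reboot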
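The `sed` command above only changes the file when it currently says `SELINUX=enforcing`. A slightly more general sketch, with `disable_selinux_config` as a hypothetical helper, rewrites whatever value the `SELINUX=` line has; the `SELINUXTYPE=` line is left alone because the pattern requires `=` directly after `SELINUX`.

```shell
# Hypothetical helper: set SELINUX=disabled in an SELinux config file,
# whatever the current value is (enforcing or permissive).
# Usage: disable_selinux_config [file]   (defaults to /etc/selinux/config)
disable_selinux_config() {
    local file=${1:-/etc/selinux/config}
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}
```

As with the original command, a reboot is still needed for the change to take effect.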

Disabling the firewall

Do the same on all three machines:

[root@controller ~]# systemctl stop firewalld.service   # stop it now

[root@controller ~]# systemctl disable firewalld.service   # do not start it at boot

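Setting up the yum repositories for OpenStack

- Back up the existing yum repositories, then remove them

[root@controller ~]# tar -zcf /etc/yum.repos.d/yum.repo.bak.gz /etc/yum.repos.d/*

[root@controller ~]# rm -rf /etc/yum.repos.d/CentOS-*

- Build a local OpenStack yum repository (choose either this or the online repository below)

Based on: https://blog.51cto.com/12114052/2383171

- Build an online OpenStack yum repository

Alibaba Cloud mirrors for many kinds of yum repositories: https://developer.aliyun.com/mirror/

Add the Alibaba Cloud EPEL and CentOS repositories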

[root@controller yum.repos.d]# wget -O /etc/yum.repos.d/CentOS-epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@controller yum.repos.d]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

Add a repository for the installation DVD

[root@controller yum.repos.d]# sed -i '$a /dev/cdrom /mnt/ iso9660 defaults 0 0' /etc/fstab

[root@controller yum.repos.d]# mount -a

[root@controller yum.repos.d]# tee /etc/yum.repos.d/CentOS-7.repo <<-'EOF'

> [centos]

> name=centos7

> baseurl=file:///mnt/

> enabled=1

> gpgcheck=0

> EOF

[root@controller yum.repos.d]# yum clean all

[root@controller yum.repos.d]# yum repolist
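The option yum reads in a repository section is `enabled` (not `enable`). A minimal sketch that writes a repository file like the one above from parameters; `write_repo_file` is a hypothetical helper, and it names the file after the repository id rather than `CentOS-7.repo`. yum picks up any `*.repo` file in `/etc/yum.repos.d/`.

```shell
# Hypothetical helper: write a minimal yum .repo file.
# Usage: write_repo_file <repo-dir> <id> <name> <baseurl>
write_repo_file() {
    local dir=$1 id=$2 name=$3 url=$4
    cat > "$dir/$id.repo" <<EOF
[$id]
name=$name
baseurl=$url
enabled=1
gpgcheck=0
EOF
}
```

For example, `write_repo_file /etc/yum.repos.d centos centos7 file:///mnt/` followed by `yum clean all` and `yum repolist`.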

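4. Apply the same configuration on all three nodes (controller, compute, storage)

Here I chose the online OpenStack yum repository, i.e. I repeated steps 1 and 3; the commands below can be copied and run as-is: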

echo "nameserver 8.8.8.8

nameserver 119.29.29.29

nameserver 114.114.114.114" > /etc/resolv.conf

cd /etc/yum.repos.d/

tar -zcf yum.repo.tar.gz *

rm -rf /etc/yum.repos.d/CentOS-*

wget -O /etc/yum.repos.d/CentOS-epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

sed -i '$a /dev/cdrom /mnt/ iso9660 defaults 0 0' /etc/fstab

mount -a

tee /etc/yum.repos.d/CentOS-7.repo <<-'EOF'

[centos]

name=centos7

baseurl=file:///mnt/

enabled=1

gpgcheck=0

EOF

yum -y install centos-release-ceph-jewel centos-release-qemu-ev

wget -O /opt/centos-release-openstack-ocata-1-2.el7.noarch.rpm https://mirrors.aliyun.com/centos-vault/altarch/7.6.1810/extras/ppc64le/Packages/centos-release-openstack-ocata-1-2.el7.noarch.rpm

wget -O /opt/rdo-release-ocata-3.noarch.rpm https://repos.fedorapeople.org/repos/openstack/EOL/openstack-ocata/rdo-release-ocata-3.noarch.rpm

rpm -ivh /opt/centos-release-openstack-ocata-1-2.el7.noarch.rpm

rpm -ivh /opt/rdo-release-ocata-3.noarch.rpm

echo "[openstack-ocata]

name=ocata

baseurl=https://buildlogs.cdn.centos.org/centos/7/cloud/x86_64/openstack-ocata/

enabled=1

gpgcheck=0" >> /etc/yum.repos.d/CentOS-7.repo

yum clean all

yum repolist

yum makecache

ls
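After the script finishes, it is worth confirming that the expected repositories are actually listed. A minimal sketch; `check_repos_present` is a hypothetical helper that reads `yum repolist` output on stdin and reports any id it cannot find. It assumes repo ids appear at the start of a line, possibly followed by a `/7/x86_64`-style suffix or preceded by a `!` or `*` marker.

```shell
# Hypothetical helper: fail if any given repo id is missing from the
# `yum repolist` output read on stdin.
# Usage: yum repolist | check_repos_present centos openstack-ocata
check_repos_present() {
    local listing id status=0
    listing=$(cat)
    for id in "$@"; do
        # id at line start, optional !/* marker, then "/", whitespace or end of line
        if ! printf '%s\n' "$listing" | grep -Eq '^[!*]?'"$id"'([/[:space:]]|$)'; then
            echo "missing repo: $id" >&2
            status=1
        fi
    done
    return $status
}
```

For example, `yum repolist | check_repos_present centos openstack-ocata` prints nothing and returns 0 when both repositories are present.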

Service deployment architecture: