foreword

After reading so many chatGPT articles, as a developer who is not proficient in algorithms, I also feel itchy for large models. But if you want to deploy your own large model, not to mention that you have no algorithm-related experience, it is actually difficult to deploy it independently on your personal computer just because of the computing resources occupied by the large model. Even if the large model compressed by the algorithm is deployed on a personal computer, it still suffers from extremely slow calculation speed and a model effect that is far from that of chatGPT.

Is there any way to deploy our own large model? It's actually very simple, let's break down the goal:

- Have a programming foundation: As a qualified programmer, this should be a necessary quality.

- Sufficient computing power resources: What if you can’t afford a professional GPU yourself? Alibaba Cloud recently launched the “Feitian” free trial plan, AI model training, and GPU resources for free!

- To understand large models: Do you really need to be proficient in large models? unnecessary. If it is just simple model deployment and use, the current open source model deployment is very simple, and you only need to master basic Python knowledge.

With the popularity of chatGPT, many open source enthusiasts have poured into the AI field, and further encapsulated many tools related to large models, so that us AI beginners can also build a private large language model with a small amount of work. Moreover, there are many mature tools at our disposal that can help us further use and fine-tune large models.

Therefore, this article is a nanny-level large-scale model deployment and usage guide written for AI beginners (including myself). Now it's Alibaba Cloud's free trial plan, and we can experience the fun of deploying our own large-scale models without spending a penny.

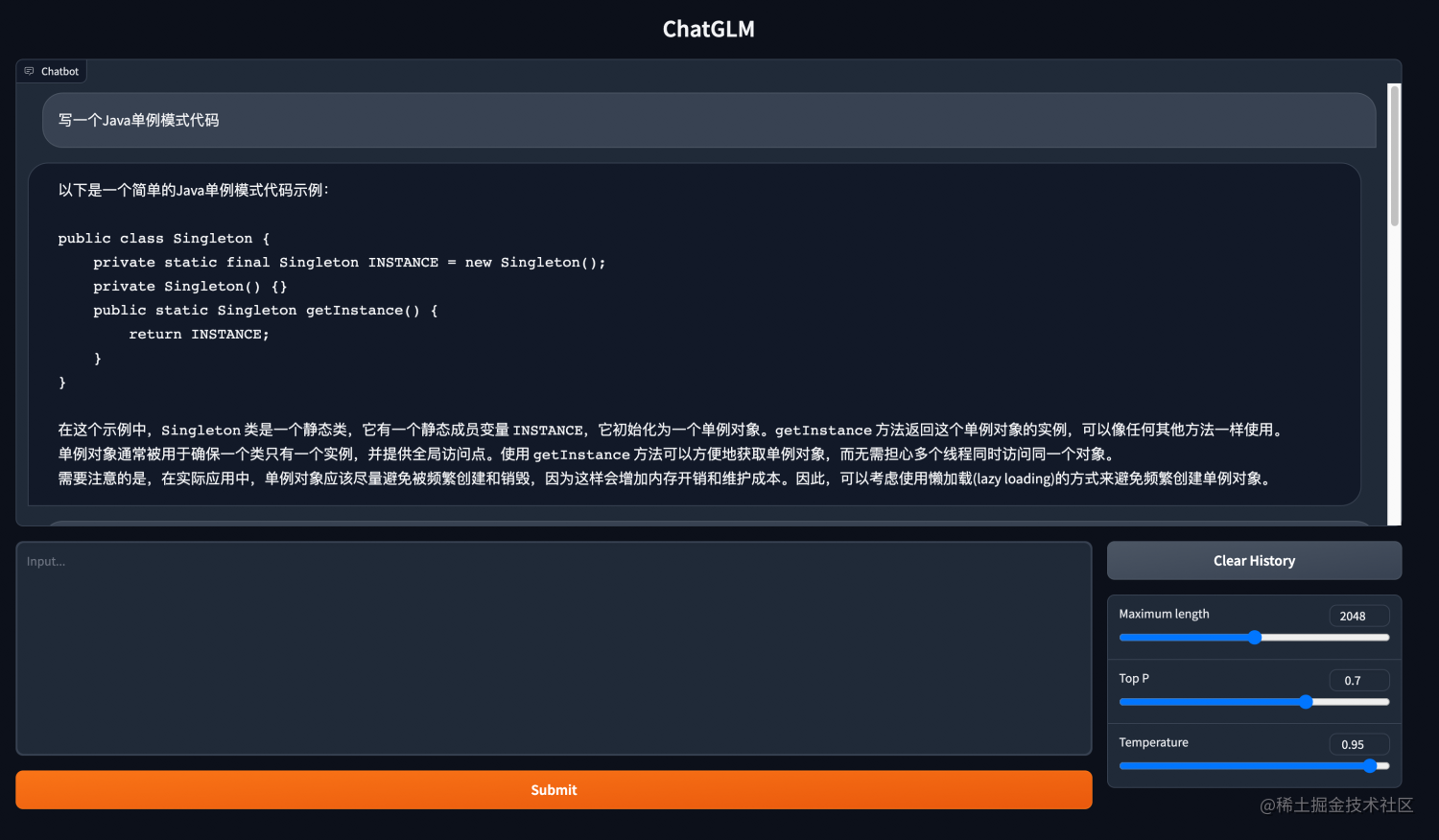

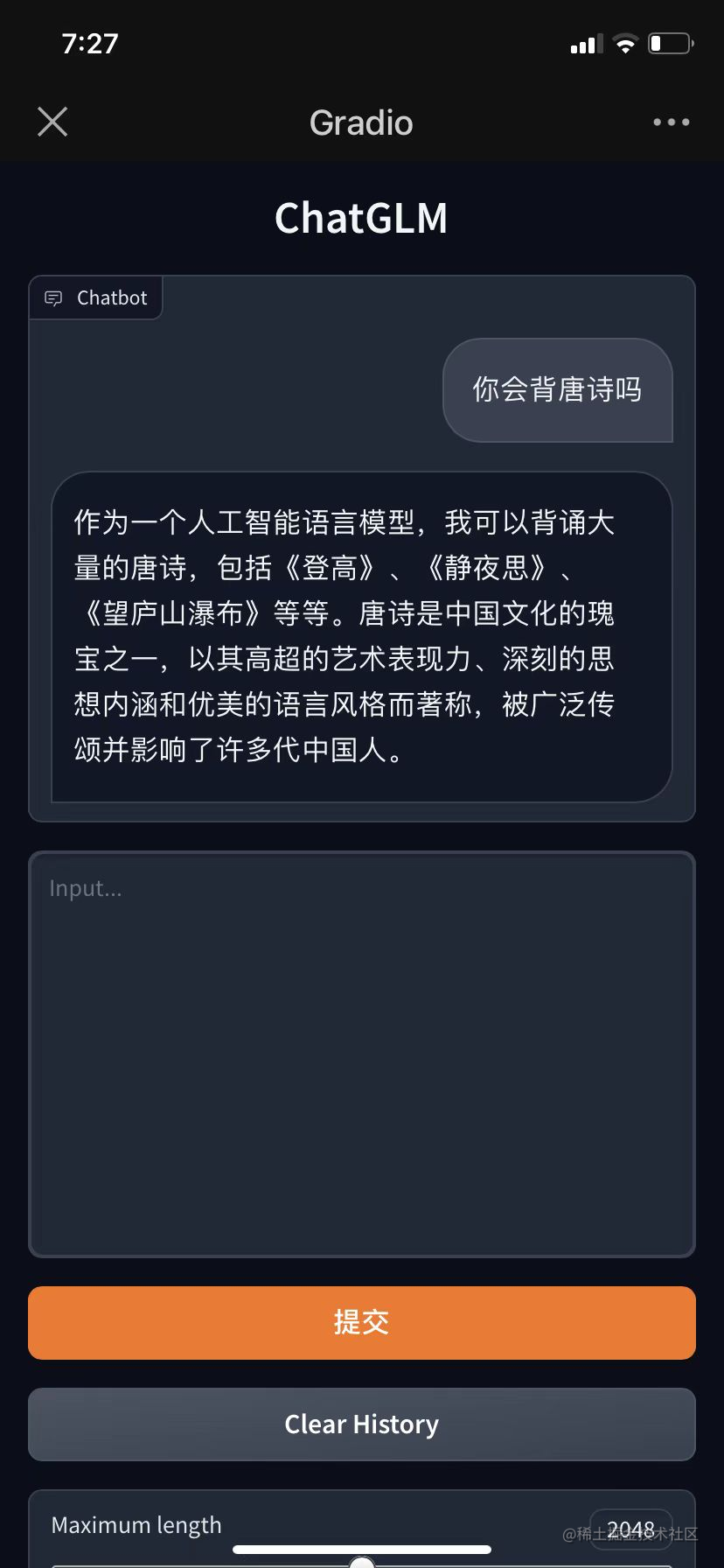

The picture below shows the PAI platform resources I applied for free through Alibaba Cloud (the graphics card is Nvidia V100), and the chatGLM dialogue model of Tsinghua University deployed, which can be directly experienced on the web and mobile terminals:

computer

Mobile terminal

The following is about how to build a large model demo by hand. The main content of the article:

- Get free resources from Alibaba Cloud

- Create and use a PAI platform instance

- Deploy Tsinghua ChatGLM large model

- Additional Meals: Free Quota Usage Inquiry

- Summary and Outlook

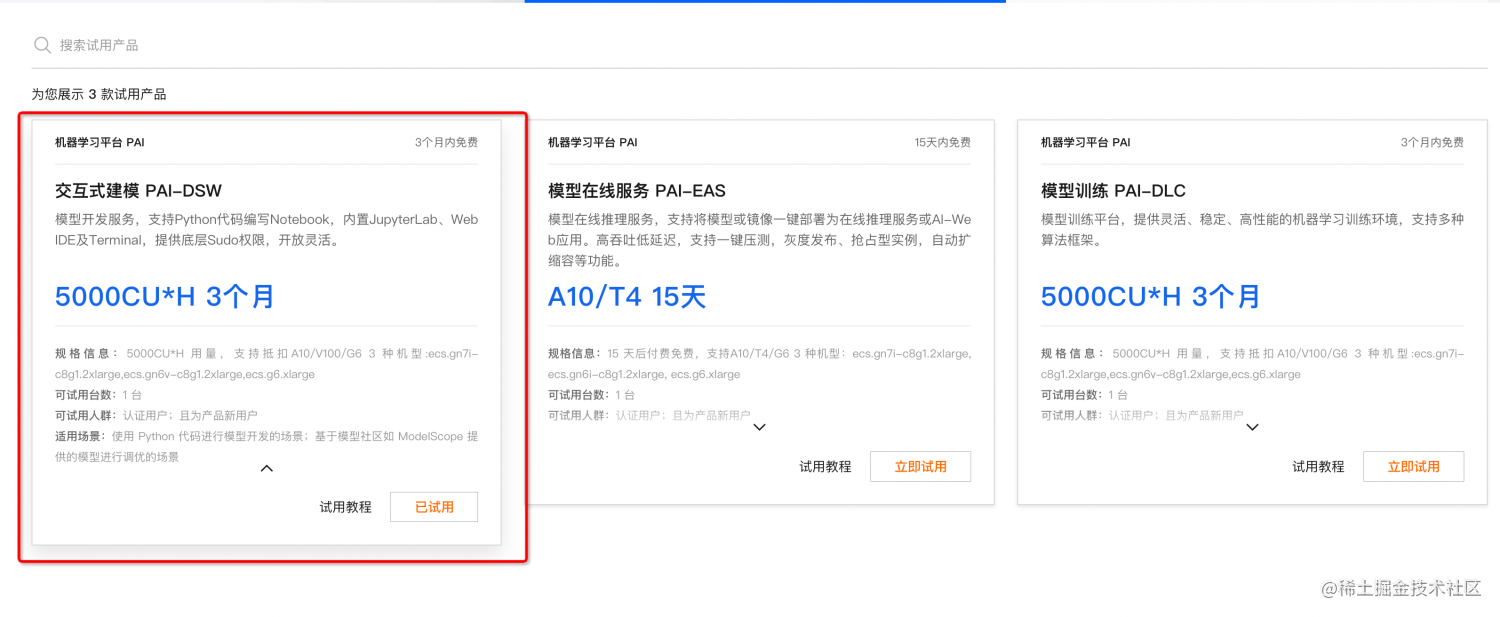

Get free resources from Alibaba Cloud

Free Trial Campaign Page

New and old users who have not applied for PAI-DSW resources can apply for a free quota of 5000CU, which can be used within 3 months.

As for how long the 5000CU can be used, it is related to the performance of the actual application instance, which will be explained below.

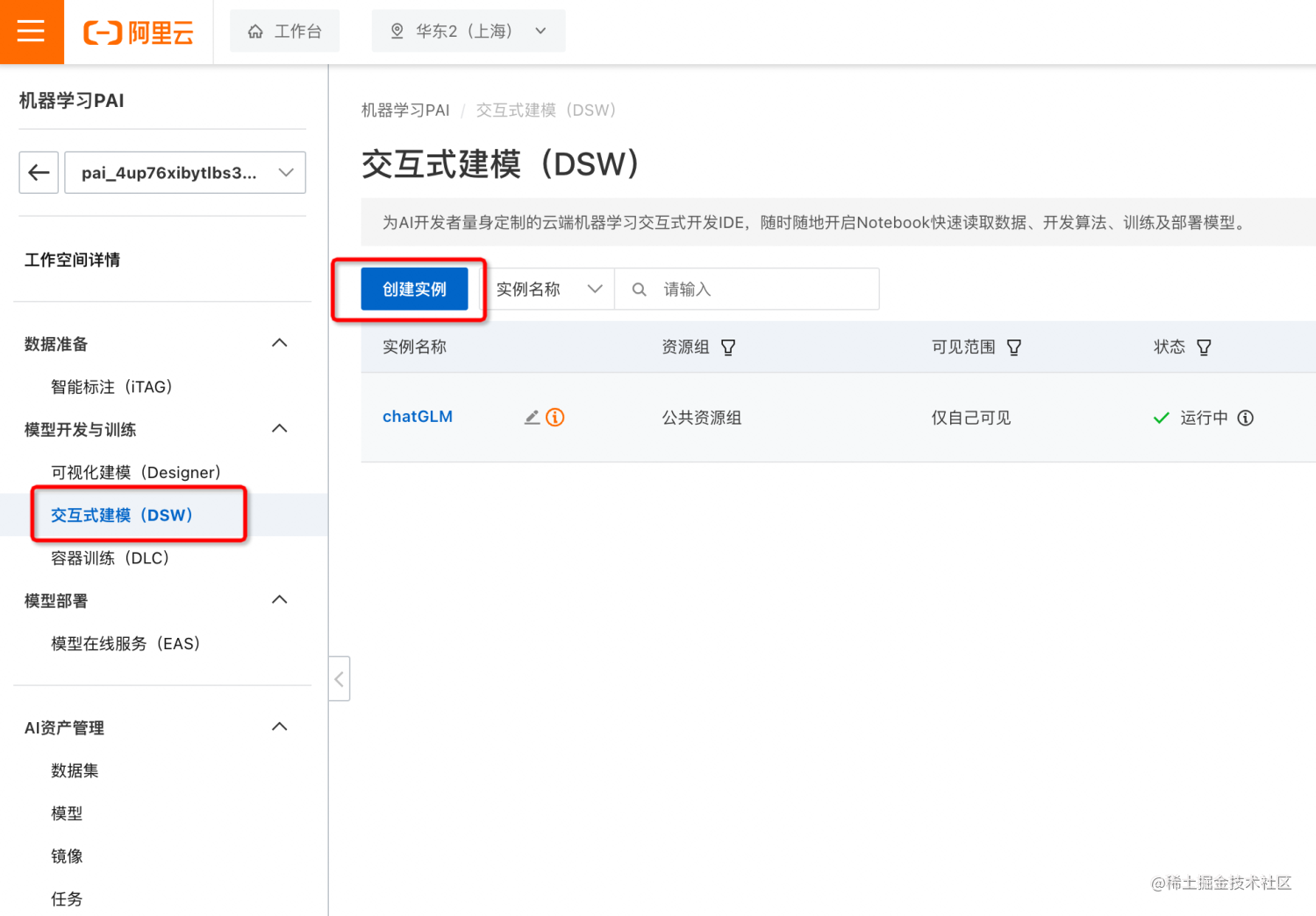

Create and use a PAI platform instance

There is an official tutorial on how to use PAI-DSW, which will teach you how to use the free resources you received to build a Stable Diffusion for AI drawing. If you are interested in SD, you can follow the official tutorial to practice.

https://help.aliyun.com/document_detail/615220.html

After we receive the quota, it arrives in seconds. After that, search for the PAI platform on the Alibaba Cloud page, click Activate Now, and activate the PAI console.

There is no screenshot of the activation page, and there are some optional activation items, such as NAS, gateway, etc., which can be selected according to your needs. For example, if you want to save your own model , you can associate NAS resources. I did not choose other resources at that time, but only activated PAI, so there was no additional charge.

Then enter the console and create a DSW instance.

Select resources here, pay attention to select GPU resources, and select resources that support resource package deduction. For example, ecs.gn6v-c8g1.2xlarg in the figure below. You can see that in their price, the CU consumed per hour is stated. You can roughly calculate how long 5000 CU can be used. The model ecs.gn6v-c8g1.2xlarg can run for 333 hours, about 13 consecutive days.

The system can be chosen arbitrarily. In this paper, in order to deploy chatGLM, choose pytorch1.12

Of course, you can stop the machine at any time in the middle, and you will not continue to deduct fees . Note that the machine here only has a system disk. If the machine is stopped, the mounted system disk will be recycled, and all kinds of files and models you downloaded on it will be recycled. You restart, it is a new system disk, and the files need to be downloaded again. (Don't ask me how I know - -!)

After the creation is complete, click Open to enter the interactive web page, where you can start your model development journey.

Deploy Tsinghua ChatGLM large model

The above has finished the application of resources and the creation and use of instances. Afterwards, small partners can play by themselves and deploy their own large models (or any AI-related resources). In the second half of this article, I will introduce my own process of deploying the ChatGLM dialogue large model, and give a sample to the small partners who do not understand the large model at all.

ChatGLM code repository:

https://github.com/THUDM/ChatGLM-6B

You can deploy it yourself according to the official documents, and ignore my tutorial below. You can also follow the process below me to avoid stepping on me again.

download model

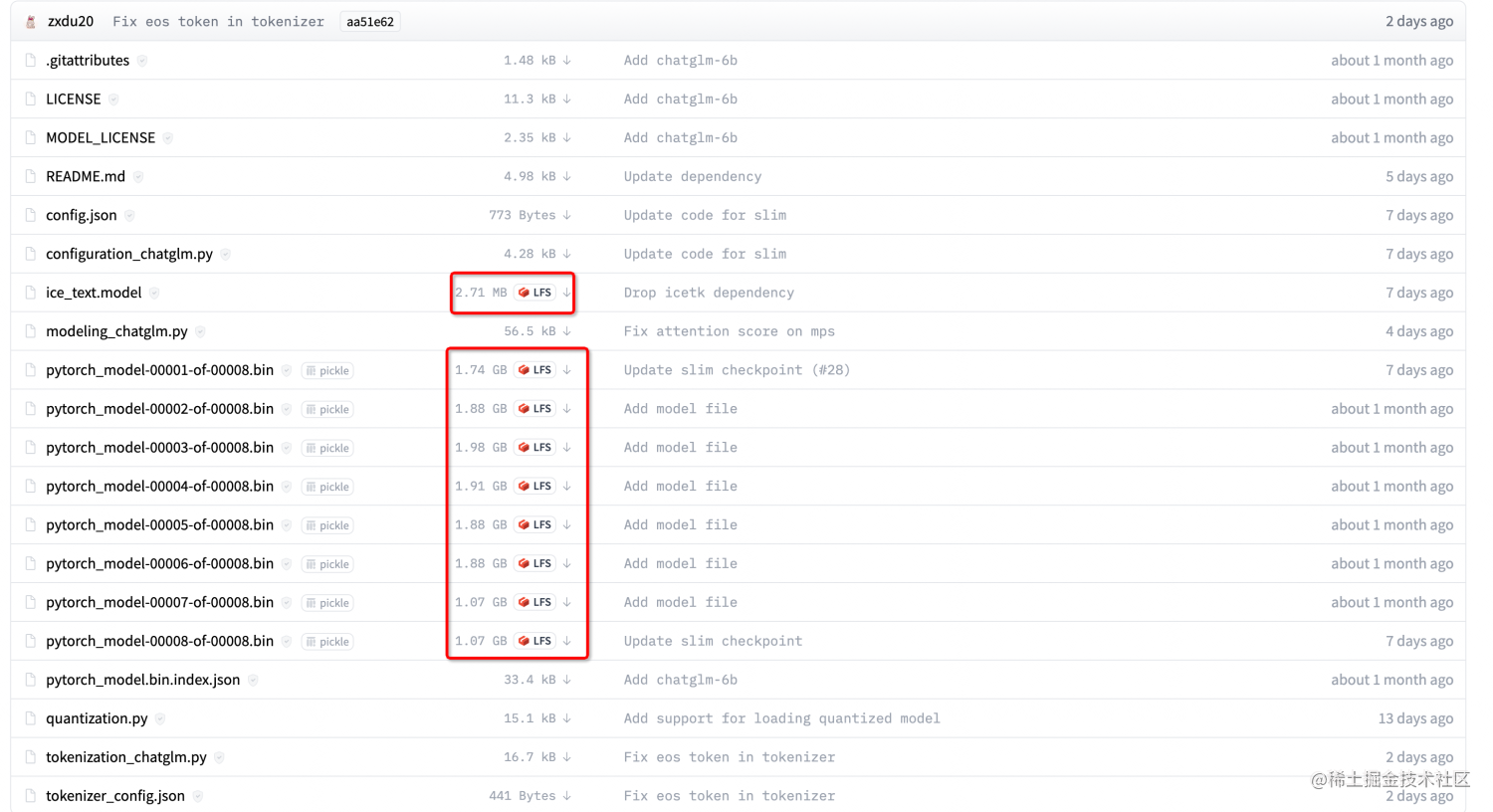

Due to the large size of the model (about 13G), we'd better pull the model locally before running it.

Of course, if you do not download the offline model, you can directly pull the model file at runtime.

Model file repository:

https://huggingface.co/THUDM/chatglm-6b

To download the model warehouse, you need to install Git LFS (Large File Storage), which is used to pull large files in the Git warehouse, such as the model bin file used this time.

Since the system we applied for is Ubuntu, to install Git LFS under Ubuntu, you can use the following command:

sudo apt-get update

sudo apt-get install git-lfs

Once done, clone the model repository:

git clone https://huggingface.co/THUDM/chatglm-6b

There may be network fluctuations, which will cause the pull to be stuck. You can stop it manually, and then enter the folder:

git lfs pull

This will pull the LFS file in the red box above.

deployment model

After the model download is complete, we download the model and run the code:

git clone https://github.com/THUDM/ChatGLM-6B.git

Enter the folder and create a virtual environment for python:

virtualenv -p /usr/bin/python3.8 venv

Activate the Python virtual environment:

source venv/bin/activate

If you encounter the following error, you need to install python3-dev additionally:

Could not build wheels for pycocotools, which is required to install pyproject.toml-based projects

error: command ‘/usr/bin/x86_64-linux-gnu-gcc’ failed with exit code 1

sudo apt-get install python3-dev

Since we downloaded the model locally in advance, let's modify the code to use the path of the local model.

In the code used, the path is changed from THUDM/chatglm-6b to your path. For example, our model is downloaded at /mnt/workspace/chatglm-6b. We will modify the main code files of the two deployment models. All are modified to the paths shown in the following figure:

- cli_demo.py: command line interactive interface

- web_demo.py: Web graphical interactive interface

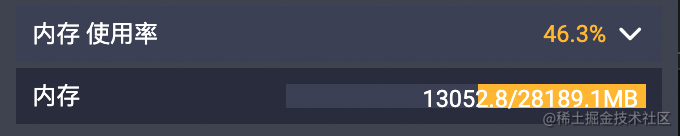

We directly use the official web version Demo to run the model, start web_demo.py, and start model deployment. First, the model will be read into memory, which takes up about 13 G.

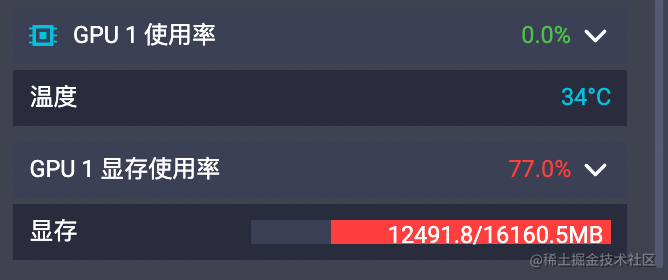

After completion, the memory will be released and start to occupy 13g of GPU memory.

Finally, after the deployment is complete, the service public network and local URLs will be obtained.

Dialogue effect display

The answer is relatively fast. On our V100 graphics card machine, the question will be answered within 1-2 seconds after the question is asked.

Here's a full conversation:

Me: write a Java singleton pattern code

ChatGLM: The following is a simple Java singleton pattern code example:

public class Singleton {

private static final Singleton INSTANCE = new Singleton();

private Singleton() {}

public static Singleton getInstance() {

return INSTANCE;

}

}

In this example, the Singleton class is a static class with a static member variable INSTANCE initialized to a singleton object. The getInstance method returns an instance of this singleton object, which can be used like any other method.

Singleton objects are often used to ensure that there is only one instance of a class and to provide a global point of access. Use the getInstance method to easily obtain a singleton object without worrying about multiple threads accessing the same object at the same time.

It should be noted that in practical applications, singleton objects should try to avoid frequent creation and destruction, because this will increase memory overhead and maintenance costs. Therefore, you can consider using lazy loading to avoid frequent creation of singleton objects.

Me: Can it be realized in another way, such as lazy style?

ChatGLM: Of course, the specific implementation of the lazy man's singleton mode is as follows:

- Make the Singleton class an interface.

- There can be more than one class that implements the Singleton interface, but they all should use the getInstance method to obtain a singleton object and return a reference to the object.

- In the class that implements the Singleton interface, there can be an inner class responsible for creating a singleton object.

- Inner classes should implement the static keyword and use the synchronized keyword for thread safety.

- Inner classes should override the getInstance method to implement lazy loading. For example, you can create a static variable to store the singleton object and only create that variable when needed.

- Where you need to use a singleton object, you can call the method of the inner class to obtain the singleton object.

The following is a sample code for a lazy singleton pattern:

public interface Singleton {

static Singleton INSTANCE;

}

public class ConcreteSingleton implements Singleton {

private static Singleton INSTANCE = new ConcreteSingleton();

@Override

public Singleton getInstance() {

return INSTANCE;

}

}

public class LazySingleton implements Singleton {

private static Singleton INSTANCE;

private Singleton() {}

public static Singleton getInstance() {

synchronized (LazySingleton.class) {

return INSTANCE;

}

}

}

In this example, the ConcreteSingleton class uses the getInstance method to create a singleton object and uses the synchronized keyword for thread safety. The LazySingleton class uses the synchronized keyword to implement lazy loading, creating a singleton object only when needed.

It should be noted that the lazy singleton mode still needs to ensure thread safety, so thread safety needs to be considered when using lazy loading. In addition, the lazy singleton pattern may not be able to make full use of the space of the class, because it needs to use shared objects to achieve lazy loading, so it is necessary to consider how to improve the performance and space utilization of the class.

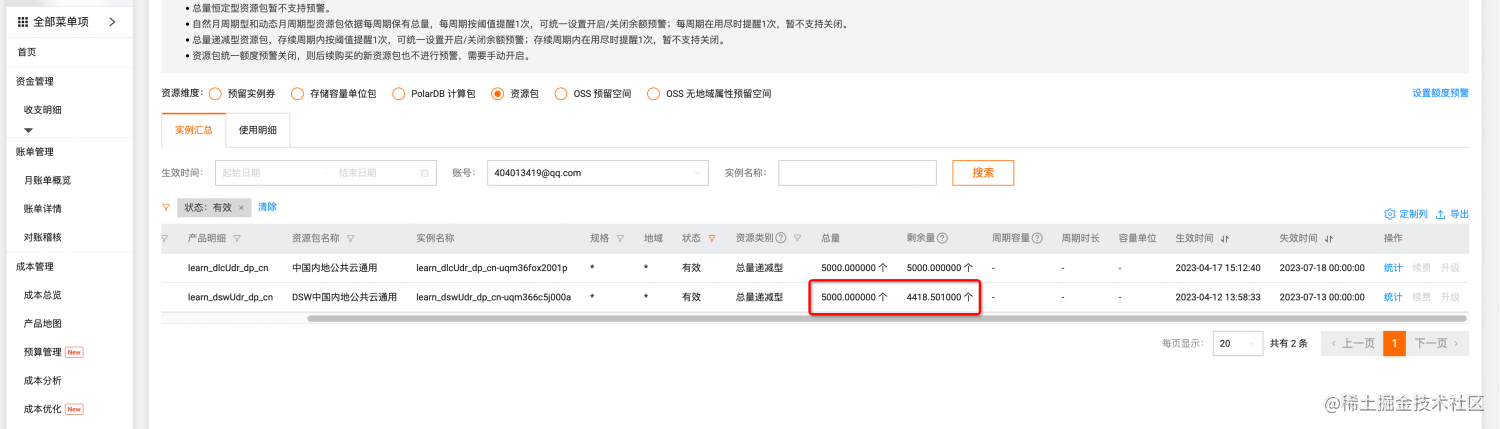

View free credit usage

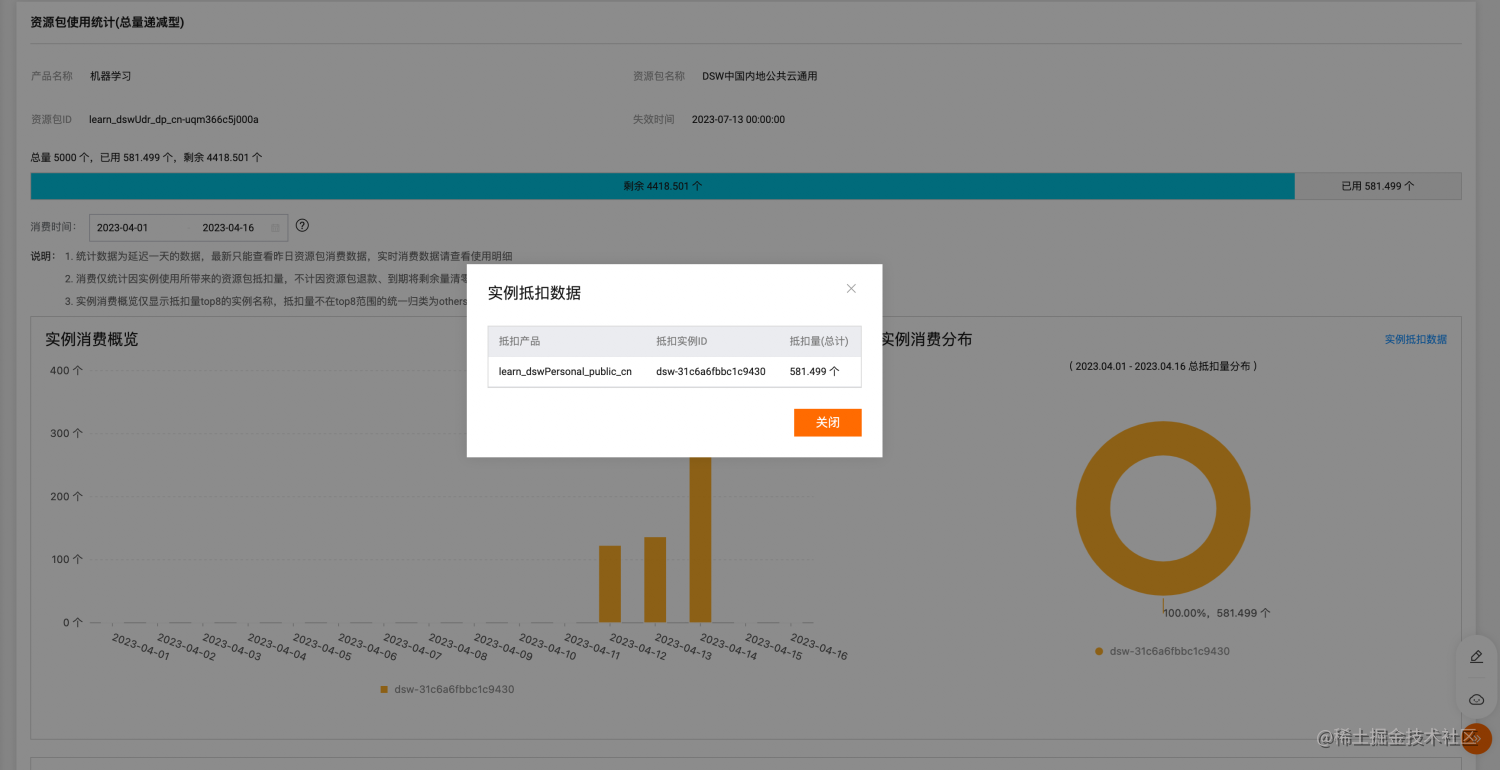

The free 5000CU quota is valid for three months, and it will expire directly after three months. I want to be aware of it and prevent the deduction of fees due to exceeding the free quota. We can check the usage of the free quota. In the upper right corner of the page, there is a "Cost" tab, select "Resource Instance Management", click "Resource Packs", and you can see the usage of your free resource packs.

Click the statistics in each row to see which instance is consuming CU.

Summarize

It took me only half a day to build a complete and usable demo in the entire deployment process.

It has to be said that when AI gradually breaks the circle and becomes an outlet, even pigs can fly, and the difficulty for ordinary programmers to get started with AI is instantly reduced by an order of magnitude. Continuous contributions from open source developers make the various tools and documentation easier to use. Tens of thousands of large model-related issues and PRs every day have brought Github a long-lost prosperity.

At this moment when AI is sweeping the world, as a programmer, we are undoubtedly the group of people who are more aware of this era. At this moment, none other than me. Pay full attention to the application scenarios and development trends of AI technology, actively explore the combination of AI and other fields, and provide more possibilities for your career development and future planning.