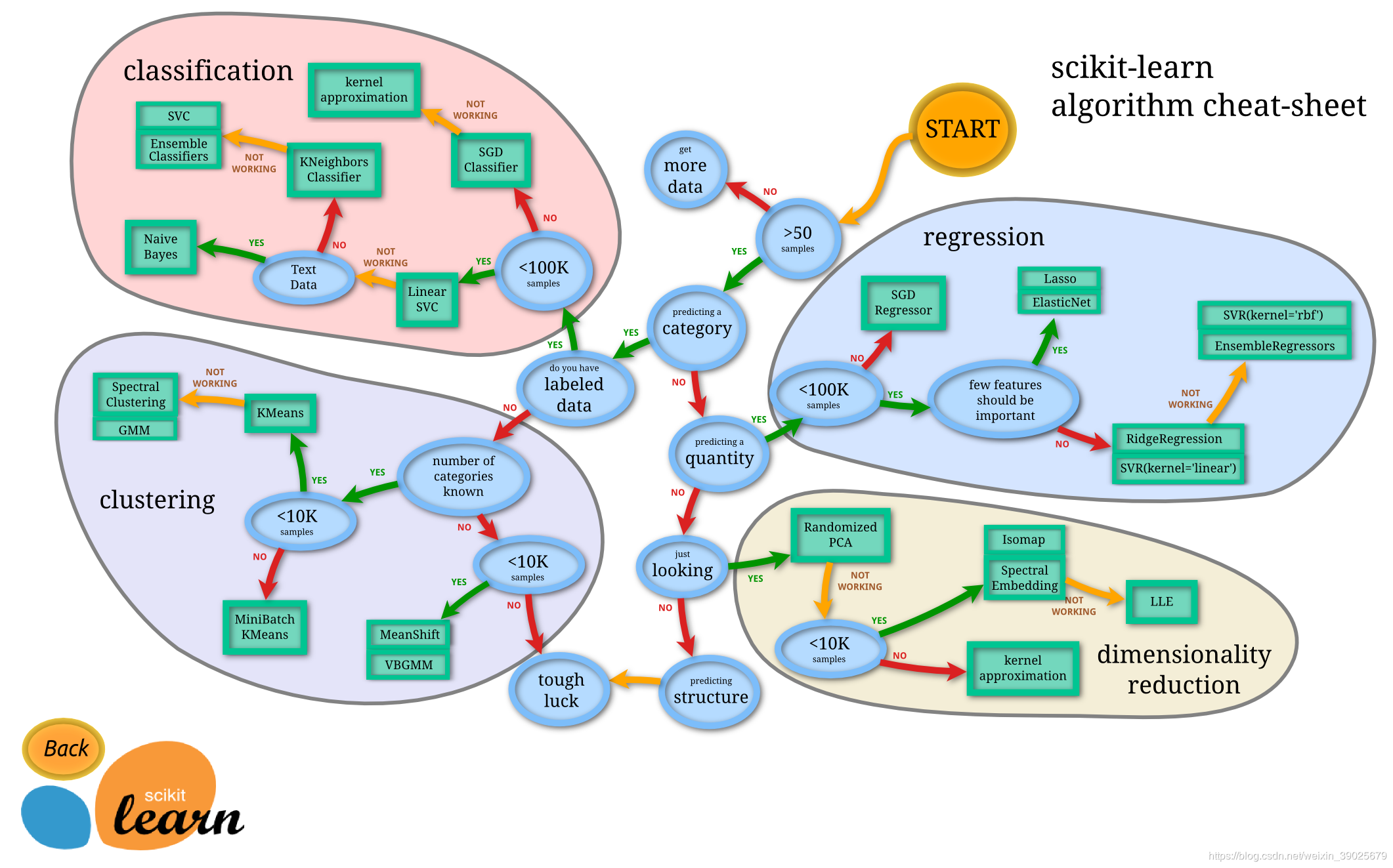

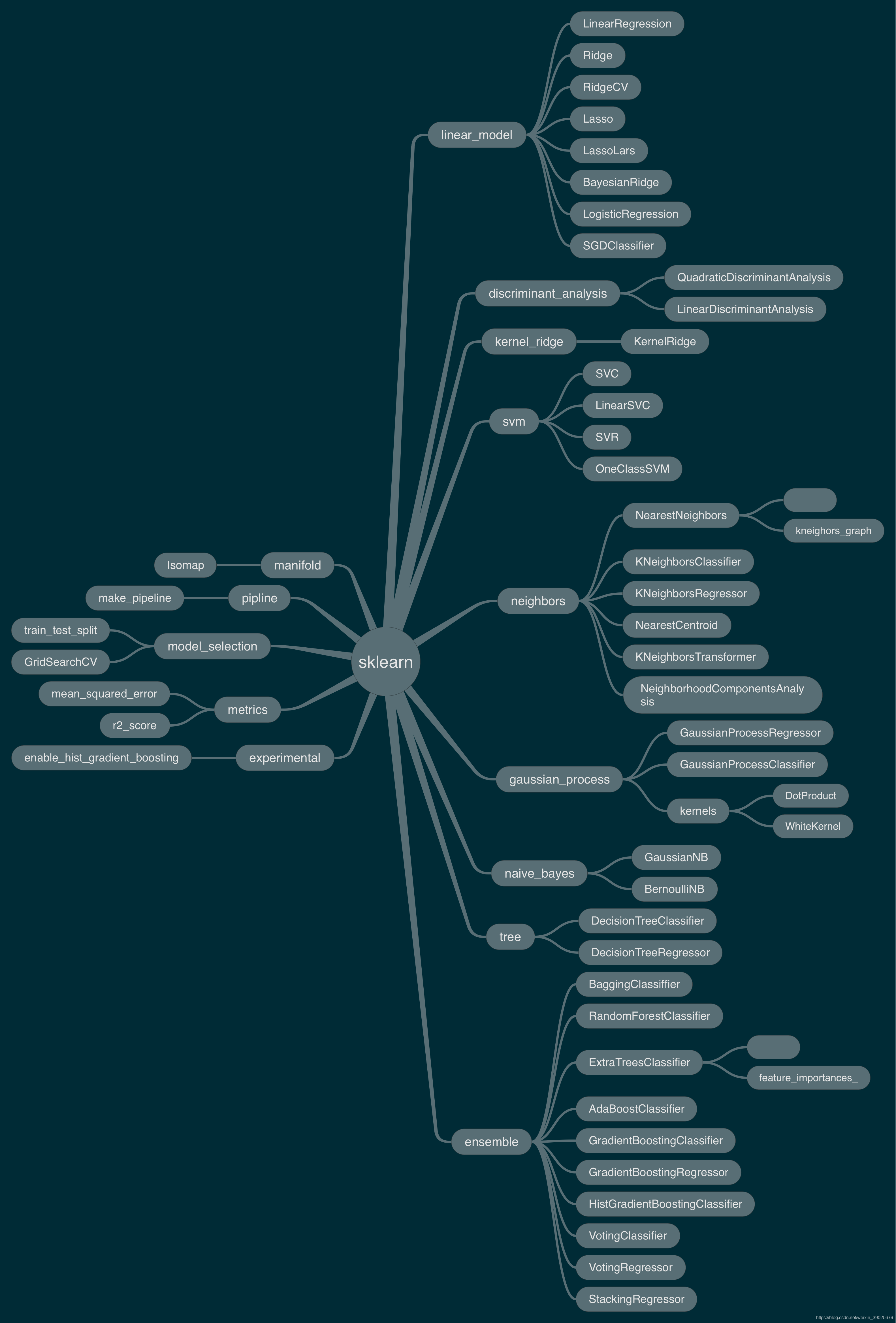

sklearn framework

Mapping function

1.11. Ensemble methods

1.11.1. Bagging meta-estimator

from sklearn.ensemble import BaggingClassifier
from sklearn.neighbors import KNeighborsClassifier
bagging = BaggingClassifier(KNeighborsClassifier(),
                            max_samples=0.5, max_features=0.5)
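The snippet above only constructs the ensemble. The sketch below fits it and inspects what `max_samples=0.5, max_features=0.5` produced. It assumes the iris data and `random_state=0`, neither of which is in the original. Each of the default 10 k-NN members is trained on a random half of the rows and a random half of the columns, and `estimators_features_` records which columns each member saw.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import BaggingClassifier
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)  # 150 samples, 4 features (assumed example data)

bagging = BaggingClassifier(KNeighborsClassifier(),
                            max_samples=0.5, max_features=0.5,
                            random_state=0).fit(X, y)

# One fitted k-NN per bag (n_estimators defaults to 10).
n_members = len(bagging.estimators_)
# max_features=0.5 of 4 columns -> each member sees 2 distinct feature indices.
feature_subsets = [sorted(f.tolist()) for f in bagging.estimators_features_]
print(n_members, feature_subsets[:3])
print(bagging.score(X, y))
```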

1.11.2. Forests of randomized trees

from sklearn.ensemble import RandomForestClassifier
X = [[0, 0], [1, 1]]
Y = [0, 1]
clf = RandomForestClassifier(n_estimators=10)
clf = clf.fit(X, Y)
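A `RandomForestClassifier` combines its trees by averaging their predicted class probabilities rather than by counting hard votes. The sketch below makes that visible by recomputing `predict_proba` from the fitted trees in `estimators_`. It swaps the two-point toy data for the iris dataset (an assumption) so there is something meaningful to average.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)
clf = RandomForestClassifier(n_estimators=10, random_state=0).fit(X, y)

# Average the class-probability estimates of the individual trees by hand.
manual = np.mean([tree.predict_proba(X) for tree in clf.estimators_], axis=0)
print(np.allclose(manual, clf.predict_proba(X)))
```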

1.11.2.2. Extremely Randomized Trees

from sklearn.model_selection import cross_val_score
from sklearn.datasets import make_blobs
from sklearn.ensemble import RandomForestClassifier
from sklearn.ensemble import ExtraTreesClassifier
from sklearn.tree import DecisionTreeClassifier
X, y = make_blobs(n_samples=10000, n_features=10, centers=100,
                  random_state=0)
# A single, fully grown decision tree
clf = DecisionTreeClassifier(max_depth=None, min_samples_split=2,
                             random_state=0)
scores = cross_val_score(clf, X, y, cv=5)
scores.mean()
# A random forest of 10 trees
clf = RandomForestClassifier(n_estimators=10, max_depth=None,
                             min_samples_split=2, random_state=0)
scores = cross_val_score(clf, X, y, cv=5)
scores.mean()
# 10 extremely randomized trees
clf = ExtraTreesClassifier(n_estimators=10, max_depth=None,
                           min_samples_split=2, random_state=0)
scores = cross_val_score(clf, X, y, cv=5)
scores.mean() > 0.999
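What sets extremely randomized trees apart is how a node is split. Instead of searching for the best threshold of each candidate feature, one threshold is drawn at random per candidate feature and the best of those random splits is kept. The NumPy sketch below illustrates that single-node rule with Gini impurity on synthetic data. The function names `gini` and `random_threshold_split` are hypothetical, and this is not scikit-learn's internal splitter.

```python
import numpy as np

def gini(labels):
    """Gini impurity of a non-negative integer label array."""
    if labels.size == 0:
        return 0.0
    p = np.bincount(labels) / labels.size
    return 1.0 - np.sum(p ** 2)

def random_threshold_split(X, y, n_candidates, rng):
    """Extra-Trees style split: one random cut per candidate feature, keep the best.

    Returns (feature, threshold, weighted_child_impurity), or None if every
    candidate feature is constant.
    """
    n_samples, n_features = X.shape
    candidates = rng.choice(n_features, size=n_candidates, replace=False)
    best = None
    for f in candidates:
        lo, hi = X[:, f].min(), X[:, f].max()
        if lo == hi:
            continue  # a constant feature cannot be split
        threshold = rng.uniform(lo, hi)  # drawn at random, not optimized
        left = X[:, f] <= threshold
        impurity = (left.sum() * gini(y[left])
                    + (~left).sum() * gini(y[~left])) / n_samples
        if best is None or impurity < best[2]:
            best = (int(f), float(threshold), float(impurity))
    return best

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = (X[:, 2] > 0).astype(int)  # only feature 2 is informative
feature, threshold, impurity = random_threshold_split(X, y, n_candidates=5, rng=rng)
print(feature, round(threshold, 3), round(impurity, 3))
```

Here `n_candidates` plays the role of `max_features`; a full Extra-Trees learner would apply the same rule recursively to each child node.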

1.11.3. AdaBoost

1.11.3.1. Usage

from sklearn.model_selection import cross_val_score
from sklearn.datasets import load_iris
from sklearn.ensemble import AdaBoostClassifier
X, y = load_iris(return_X_y=True)
clf = AdaBoostClassifier(n_estimators=100)
scores = cross_val_score(clf, X, y, cv=5)
scores.mean()
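AdaBoost fits its weak learners sequentially, re-weighting the training samples after each round so that the next learner concentrates on the examples the previous ones got wrong. The sketch below implements the classic two-class discrete AdaBoost with decision stumps on labels in {-1, +1}. It illustrates the re-weighting idea and is not a copy of scikit-learn's `AdaBoostClassifier` (which implements the multi-class SAMME variant). The helper names `adaboost_fit` and `adaboost_predict` and the choice of `make_hastie_10_2` data are assumptions.

```python
import numpy as np
from sklearn.datasets import make_hastie_10_2
from sklearn.tree import DecisionTreeClassifier

def adaboost_fit(X, y, n_rounds=100):
    """Two-class discrete AdaBoost (hypothetical helper); y must be -1 or +1."""
    w = np.full(len(y), 1.0 / len(y))  # uniform initial sample weights
    stumps, alphas = [], []
    for _ in range(n_rounds):
        stump = DecisionTreeClassifier(max_depth=1).fit(X, y, sample_weight=w)
        pred = stump.predict(X)
        # Weighted training error (w sums to 1), clipped to avoid log(0).
        err = np.clip(w[pred != y].sum(), 1e-10, 1 - 1e-10)
        alpha = 0.5 * np.log((1 - err) / err)  # this learner's vote weight
        w *= np.exp(-alpha * y * pred)  # up-weight mistakes, down-weight hits
        w /= w.sum()
        stumps.append(stump)
        alphas.append(alpha)
    return stumps, alphas

def adaboost_predict(stumps, alphas, X):
    """Sign of the alpha-weighted sum of stump predictions."""
    return np.sign(sum(a * s.predict(X) for s, a in zip(stumps, alphas)))

X, y = make_hastie_10_2(random_state=0)  # labels are -1.0 / +1.0
X_train, X_test, y_train, y_test = X[:2000], X[2000:], y[:2000], y[2000:]
stumps, alphas = adaboost_fit(X_train, y_train)
accuracy = np.mean(adaboost_predict(stumps, alphas, X_test) == y_test)
print(round(accuracy, 3))
```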

1.11.4. Gradient Tree Boosting

1.11.4.1. Classification

from sklearn.datasets import make_hastie_10_2
from sklearn.ensemble import GradientBoostingClassifier
X, y = make_hastie_10_2(random_state=0)
X_train, X_test = X[:2000], X[2000:]
y_train, y_test = y[:2000], y[2000:]
clf = GradientBoostingClassifier(n_estimators=100, learning_rate=1.0,
                                 max_depth=1, random_state=0).fit(X_train, y_train)
clf.score(X_test, y_test)
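Because gradient boosting builds the model stage by stage, `staged_predict` shows how test accuracy evolves as trees are added, without refitting. The sketch below repeats the setup of the classification snippet so it runs on its own; tracking the curve this way is one common way to choose `n_estimators`, not something the original example does.

```python
import numpy as np
from sklearn.datasets import make_hastie_10_2
from sklearn.ensemble import GradientBoostingClassifier

X, y = make_hastie_10_2(random_state=0)
X_train, X_test = X[:2000], X[2000:]
y_train, y_test = y[:2000], y[2000:]

clf = GradientBoostingClassifier(n_estimators=100, learning_rate=1.0,
                                 max_depth=1, random_state=0).fit(X_train, y_train)

# staged_predict yields the ensemble's predictions after 1, 2, ..., 100 trees.
test_accuracy = [np.mean(pred == y_test) for pred in clf.staged_predict(X_test)]
best_stage = int(np.argmax(test_accuracy)) + 1
print(len(test_accuracy), best_stage, round(max(test_accuracy), 3))
```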

1.11.4.2. Regression

import numpy as np
from sklearn.metrics import mean_squared_error
from sklearn.datasets import make_friedman1
from sklearn.ensemble import GradientBoostingRegressor
X, y = make_friedman1(n_samples=1200, random_state=0, noise=1.0)
X_train, X_test = X[:200], X[200:]
y_train, y_test = y[:200], y[200:]
est = GradientBoostingRegressor(n_estimators=100, learning_rate=0.1,
                                max_depth=1, random_state=0,
                                loss='squared_error').fit(X_train, y_train)
mean_squared_error(y_test, est.predict(X_test))
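With the squared-error loss, each boosting stage fits a regression tree to the current residuals and adds a shrunken copy of its predictions to the model. The sketch below spells that loop out with plain `DecisionTreeRegressor` stumps on the same Friedman #1 split. The helper names `ls_boost_fit` and `ls_boost_predict` are hypothetical, and although the procedure follows the squared-error case conceptually, it is not guaranteed to reproduce `GradientBoostingRegressor`'s numbers exactly.

```python
import numpy as np
from sklearn.datasets import make_friedman1
from sklearn.metrics import mean_squared_error
from sklearn.tree import DecisionTreeRegressor

def ls_boost_fit(X, y, n_estimators=100, learning_rate=0.1, max_depth=1):
    """Least-squares boosting (hypothetical helper): start at the mean, fit residuals."""
    init = y.mean()
    pred = np.full(len(y), init)
    trees = []
    for _ in range(n_estimators):
        residual = y - pred  # negative gradient of 0.5 * (y - F(x))**2
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residual)
        pred += learning_rate * tree.predict(X)  # shrunken update
        trees.append(tree)
    return init, learning_rate, trees

def ls_boost_predict(model, X):
    init, learning_rate, trees = model
    return init + learning_rate * np.sum([t.predict(X) for t in trees], axis=0)

X, y = make_friedman1(n_samples=1200, random_state=0, noise=1.0)
X_train, X_test = X[:200], X[200:]
y_train, y_test = y[:200], y[200:]

model = ls_boost_fit(X_train, y_train)
mse_boost = mean_squared_error(y_test, ls_boost_predict(model, X_test))
# Baseline: always predict the training mean.
mse_mean = mean_squared_error(y_test, np.full(len(y_test), y_train.mean()))
print(round(mse_boost, 3), round(mse_mean, 3))
```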

1.11.4.3. Fitting additional weak-learners

_ = est.set_params(n_estimators=200, warm_start=True)  # enable warm_start and set the new number of trees
_ = est.fit(X_train, y_train)  # fit 100 additional trees on top of the existing ones
mean_squared_error(y_test, est.predict(X_test))
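`warm_start=True` makes it cheap to grow the ensemble in steps while watching a held-out error, which is one way to pick the number of trees. The sketch below does that on the Friedman #1 data used above, treating the last 1000 rows as a validation set. The step of 50 trees and the 500-tree ceiling are arbitrary assumptions.

```python
from sklearn.datasets import make_friedman1
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_squared_error

X, y = make_friedman1(n_samples=1200, random_state=0, noise=1.0)
X_train, X_val = X[:200], X[200:]
y_train, y_val = y[:200], y[200:]

est = GradientBoostingRegressor(learning_rate=0.1, max_depth=1,
                                random_state=0, warm_start=True)
history = []  # (number of trees, validation MSE)
for n_trees in range(50, 501, 50):
    est.set_params(n_estimators=n_trees)
    est.fit(X_train, y_train)  # with warm_start, only the extra trees are fitted
    history.append((n_trees, mean_squared_error(y_val, est.predict(X_val))))

best_n, best_mse = min(history, key=lambda item: item[1])
print(best_n, round(best_mse, 3))
```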

1.11.4.7.1. Feature importance

from sklearn.datasets import make_hastie_10_2
from sklearn.ensemble import GradientBoostingClassifier
X, y = make_hastie_10_2(random_state=0)
clf = GradientBoostingClassifier(n_estimators=100, learning_rate=1.0,
                                 max_depth=1, random_state=0).fit(X, y)
clf.feature_importances_
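`feature_importances_` is the impurity-based (mean decrease in impurity) importance, normalized to sum to one. A model-agnostic cross-check is `permutation_importance` from `sklearn.inspection`, which measures how much a held-out score drops when one column is shuffled. The sketch below compares the two; the train/test split and `n_repeats=5` are assumptions.

```python
import numpy as np
from sklearn.datasets import make_hastie_10_2
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.inspection import permutation_importance

X, y = make_hastie_10_2(random_state=0)
X_train, X_test = X[:2000], X[2000:]
y_train, y_test = y[:2000], y[2000:]

clf = GradientBoostingClassifier(n_estimators=100, learning_rate=1.0,
                                 max_depth=1, random_state=0).fit(X_train, y_train)

# Impurity-based importances from the training fit (they sum to 1).
mdi = clf.feature_importances_
# Drop in held-out accuracy when each column is shuffled, averaged over 5 shuffles.
perm = permutation_importance(clf, X_test, y_test, n_repeats=5, random_state=0)
for j in np.argsort(mdi)[::-1]:
    print(f"x{j}: MDI={mdi[j]:.3f}  permutation={perm.importances_mean[j]:.3f}")
```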

1.11.5. Histogram-Based Gradient Boosting

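# explicitly require this experimental feature
# (needed only before scikit-learn 1.0; from 1.0 on the estimator is stable
# and this import is a no-op that can be dropped)
from sklearn.experimental import enable_hist_gradient_boosting  # noqa
# now you can import normally from ensemble
from sklearn.ensemble import HistGradientBoostingClassifier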

1.11.5.1. Usage

from sklearn.experimental import enable_hist_gradient_boosting
from sklearn.ensemble import HistGradientBoostingClassifier
from sklearn.datasets import make_hastie_10_2
X, y = make_hastie_10_2(random_state=0)
X_train, X_test = X[:2000], X[2000:]
y_train, y_test = y[:2000], y[2000:]
clf = HistGradientBoostingClassifier(max_iter=100).fit(X_train, y_train)
clf.score(X_test, y_test)
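The speed of the histogram-based estimators comes from binning: each continuous feature is first mapped to a small number of integer bins (at most 256, so the binned data fits in `uint8`), and split finding then scans per-bin histograms instead of sorted raw values. The NumPy sketch below shows a simplified quantile binning of one feature. The helper `quantile_bin` is hypothetical, and scikit-learn's own bin mapper differs in its details (for example, it reserves a bin for missing values).

```python
import numpy as np

def quantile_bin(x, max_bins=255):
    """Map a 1-D float array to integer bin indices using quantile edges."""
    # Interior edges at evenly spaced quantiles; np.unique drops duplicates,
    # so a feature with few distinct values ends up with fewer bins.
    quantiles = np.linspace(0, 100, max_bins + 1)[1:-1]
    edges = np.unique(np.percentile(x, quantiles))
    # side='right' puts value v in bin i where edges[i-1] <= v < edges[i].
    return np.searchsorted(edges, x, side='right').astype(np.uint8), edges

rng = np.random.default_rng(0)
x = rng.normal(size=10_000)
binned, edges = quantile_bin(x)
counts = np.bincount(binned)
print(binned.dtype, binned.max() + 1, counts.min(), counts.max())
```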

1.11.5.2. Missing values support

from sklearn.experimental import enable_hist_gradient_boosting  # noqa
from sklearn.ensemble import HistGradientBoostingClassifier
import numpy as np
X = np.array([0, 1, 2, np.nan]).reshape(-1, 1)
y = [0, 0, 1, 1]
gbdt = HistGradientBoostingClassifier(min_samples_leaf=1).fit(X, y)
gbdt.predict(X)

1.11.6. Voting Classifier

1.11.6.1.1. Usage

from sklearn import datasets
from sklearn.model_selection import cross_val_score
from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import GaussianNB
from sklearn.ensemble import RandomForestClassifier
from sklearn.ensemble import VotingClassifier
iris = datasets.load_iris()
X, y = iris.data[:, 1:3], iris.target
clf1 = LogisticRegression(random_state=1)
clf2 = RandomForestClassifier(n_estimators=50, random_state=1)
clf3 = GaussianNB()
eclf = VotingClassifier(
    estimators=[('lr', clf1), ('rf', clf2), ('gnb', clf3)],
    voting='hard')
# Compare each individual classifier with the hard-voting ensemble
for clf, label in zip([clf1, clf2, clf3, eclf],
                      ['Logistic Regression', 'Random Forest', 'naive Bayes', 'Ensemble']):
    scores = cross_val_score(clf, X, y, scoring='accuracy', cv=5)
    print("Accuracy: %0.2f (+/- %0.2f) [%s]" % (scores.mean(), scores.std(), label))
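In hard voting each member casts one vote (its predicted label) and the most frequent label wins; ties go to the class that comes first in ascending order. The sketch below, a self-contained variant of the snippet above, recomputes that majority vote from the fitted members in `eclf.estimators_` and checks it against `eclf.predict`. The iris classes are already encoded as 0, 1, 2, so `np.bincount(...).argmax()` can be applied directly.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import GaussianNB

X, y = load_iris(return_X_y=True)
X = X[:, 1:3]

members = [('lr', LogisticRegression(random_state=1)),
           ('rf', RandomForestClassifier(n_estimators=50, random_state=1)),
           ('gnb', GaussianNB())]
eclf = VotingClassifier(estimators=members, voting='hard').fit(X, y)

# Each fitted member predicts a label; votes has shape (n_samples, n_members).
votes = np.column_stack([est.predict(X) for est in eclf.estimators_])
# Majority vote per row; argmax of bincount breaks ties toward the smaller label.
manual = np.array([np.bincount(row, minlength=3).argmax() for row in votes])
print((manual == eclf.predict(X)).all())
```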

1.11.6.2. Weighted Average Probabilities (Soft Voting)

from sklearn import datasets
from sklearn.tree import DecisionTreeClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.ensemble import VotingClassifier
# Loading some example data
iris = datasets.load_iris()
X = iris.data[:, [0, 2]]
y = iris.target
# Training classifiers
clf1 = DecisionTreeClassifier(max_depth=4)
clf2 = KNeighborsClassifier(n_neighbors=7)
clf3 = SVC(kernel='rbf', probability=True)
eclf = VotingClassifier(estimators=[('dt', clf1), ('knn', clf2), ('svc', clf3)],
                        voting='soft', weights=[2, 1, 2])
clf1 = clf1.fit(X, y)
clf2 = clf2.fit(X, y)
clf3 = clf3.fit(X, y)
eclf = eclf.fit(X, y)
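With `voting='soft'`, the ensemble averages the members' `predict_proba` outputs using `weights` and predicts the class with the highest averaged probability. The sketch below recomputes that weighted average from the fitted members in `eclf.estimators_`. The `random_state=0` values on the tree and the SVC are assumptions added so that the run is repeatable.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import VotingClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X = X[:, [0, 2]]
weights = [2, 1, 2]
eclf = VotingClassifier(
    estimators=[('dt', DecisionTreeClassifier(max_depth=4, random_state=0)),
                ('knn', KNeighborsClassifier(n_neighbors=7)),
                ('svc', SVC(kernel='rbf', probability=True, random_state=0))],
    voting='soft', weights=weights).fit(X, y)

# Stack member probabilities: shape (n_members, n_samples, n_classes).
probas = np.stack([est.predict_proba(X) for est in eclf.estimators_])
avg = np.average(probas, axis=0, weights=weights)  # weighted mean over members
manual_pred = eclf.classes_[avg.argmax(axis=1)]
print(np.allclose(avg, eclf.predict_proba(X)), (manual_pred == eclf.predict(X)).all())
```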

1.11.6.3. Using the VotingClassifier with GridSearchCV

from sklearn.model_selection import GridSearchCV
clf1 = LogisticRegression(random_state=1)
clf2 = RandomForestClassifier(random_state=1)
clf3 = GaussianNB()
eclf = VotingClassifier(
    estimators=[('lr', clf1), ('rf', clf2), ('gnb', clf3)],
    voting='soft'
)
params = {'lr__C': [1.0, 100.0], 'rf__n_estimators': [20, 200]}
grid = GridSearchCV(estimator=eclf, param_grid=params, cv=5)
grid = grid.fit(iris.data, iris.target)
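Parameters of the ensemble members are addressed as `<estimator name>__<parameter>`, which is why the grid above uses keys such as `lr__C`. The self-contained sketch below confirms those keys via `get_params()` and reads back the winning combination. It uses a smaller forest grid than the original and raises the logistic regression's `max_iter`; both are assumptions made only to keep the run quick and free of convergence warnings.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.naive_bayes import GaussianNB

X, y = load_iris(return_X_y=True)
eclf = VotingClassifier(
    estimators=[('lr', LogisticRegression(random_state=1, max_iter=1000)),
                ('rf', RandomForestClassifier(random_state=1)),
                ('gnb', GaussianNB())],
    voting='soft')

# Nested parameter names follow the '<name>__<param>' pattern.
all_params = eclf.get_params()
print('lr__C' in all_params, 'rf__n_estimators' in all_params)

params = {'lr__C': [1.0, 100.0], 'rf__n_estimators': [20, 50]}
grid = GridSearchCV(estimator=eclf, param_grid=params, cv=5).fit(X, y)
print(grid.best_params_, round(grid.best_score_, 3))
```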

1.11.7. Voting Regressor

from sklearn.datasets import load_diabetes
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.ensemble import VotingRegressor
# Loading some example data
X, y = load_diabetes(return_X_y=True)
# Training regressors
reg1 = GradientBoostingRegressor(random_state=1, n_estimators=10)
reg2 = RandomForestRegressor(random_state=1, n_estimators=10)
reg3 = LinearRegression()
ereg = VotingRegressor(estimators=[('gb', reg1), ('rf', reg2), ('lr', reg3)])
ereg = ereg.fit(X, y)
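A `VotingRegressor` without `weights` predicts the plain average of its members' predictions. The sketch below checks this against the fitted members in `ereg.estimators_`. It uses the `load_diabetes` dataset as an assumption; any regression data would do.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor, VotingRegressor
from sklearn.linear_model import LinearRegression

X, y = load_diabetes(return_X_y=True)
ereg = VotingRegressor(estimators=[
    ('gb', GradientBoostingRegressor(random_state=1, n_estimators=10)),
    ('rf', RandomForestRegressor(random_state=1, n_estimators=10)),
    ('lr', LinearRegression())]).fit(X, y)

# Unweighted mean of the fitted members' predictions for the first 5 rows.
member_preds = np.column_stack([est.predict(X[:5]) for est in ereg.estimators_])
manual = member_preds.mean(axis=1)
print(np.round(manual, 1))
print(np.round(ereg.predict(X[:5]), 1))
```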

1.11.8. Stacked generalization

from sklearn.linear_model import RidgeCV, LassoCV
from sklearn.svm import SVR
estimators = [('ridge', RidgeCV()),
              ('lasso', LassoCV(random_state=42)),
              ('svr', SVR(C=1, gamma=1e-6))]
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.ensemble import StackingRegressor
reg = StackingRegressor(
    estimators=estimators,
    final_estimator=GradientBoostingRegressor(random_state=42))
from sklearn.datasets import load_diabetes
X, y = load_diabetes(return_X_y=True)
from sklearn.model_selection import train_test_split
X_train, X_test, y_train, y_test = train_test_split(X, y,
                                                    random_state=42)
reg.fit(X_train, y_train)
y_pred = reg.predict(X_test)
from sklearn.metrics import r2_score
print('R2 score: {:.2f}'.format(r2_score(y_test, y_pred)))
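Stacking trains the final estimator on cross-validated (out-of-fold) predictions of the base estimators, so the meta-model never learns from predictions a base model made on its own training rows; the base estimators used at predict time are fitted on the full training set. The sketch below reproduces that recipe manually with `cross_val_predict` (5-fold, an assumption mirroring the default `cv`). It uses the `load_diabetes` data as an assumption and is meant to illustrate the procedure, not to match `StackingRegressor`'s output digit for digit.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import load_diabetes
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.linear_model import LassoCV, RidgeCV
from sklearn.metrics import r2_score
from sklearn.model_selection import cross_val_predict, train_test_split
from sklearn.svm import SVR

X, y = load_diabetes(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)
base = [RidgeCV(), LassoCV(random_state=42), SVR(C=1, gamma=1e-6)]

# 1. Out-of-fold predictions of each base model become the meta-features.
meta_train = np.column_stack(
    [cross_val_predict(clone(est), X_train, y_train, cv=5) for est in base])
# 2. The final estimator learns how to combine those predictions.
final = GradientBoostingRegressor(random_state=42).fit(meta_train, y_train)
# 3. For new data, base models refit on the whole training set supply the meta-features.
fitted = [clone(est).fit(X_train, y_train) for est in base]
meta_test = np.column_stack([est.predict(X_test) for est in fitted])
y_pred = final.predict(meta_test)
print(meta_train.shape, 'R2 score: {:.2f}'.format(r2_score(y_test, y_pred)))
```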

Source:

https://scikit-learn.org/stable/modules/ensemble.html