https://ci.apache.org/projects/flink/flink-docs-release-1.7/

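1. Introduction to Flink

1.1 What is Flink

Apache Flink is a distributed big data processing engine for stateful computations over bounded and unbounded data streams. It can be deployed in many cluster environments and computes quickly over data of any size.

1.2 History of Flink

Flink's predecessor started as a research project at the Technical University of Berlin as early as 2008. It was accepted into the Apache Incubator in 2014 and quickly became one of the top-level projects of the ASF (Apache Software Foundation). Alibaba built Blink on top of Flink and promoted it in China, which made Flink widely popular there.

1.3 Stream processing and batch processing

- Batch processing works on data that is bounded, persistent, and large. It is well suited to computations that need access to the complete set of records, and is typically used for offline statistics.

- Stream processing works on data that is unbounded and arrives in real time. Instead of operating on the whole dataset, it operates on each data item as it passes through the system, and is typically used for real-time statistics.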
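To make the contrast concrete, here is a plain-Scala sketch (no Flink involved; the object and method names are hypothetical) that counts words both ways. The batch version needs the whole bounded dataset before producing one final result; the streaming version updates its state per element and emits an updated result for every input.

```scala
// Plain-Scala illustration only; BatchVsStream and its methods are hypothetical names.
object BatchVsStream {
  // Batch: the complete, bounded dataset is available up front,
  // so one final result is computed over all records.
  def batchWordCount(words: Seq[String]): Map[String, Int] =
    words.groupBy(identity).map { case (w, ws) => w -> ws.size }

  // Stream: records arrive one at a time; state is updated per record and an
  // updated (word, count) pair is emitted immediately for every input.
  def streamWordCount(words: Iterator[String]): Iterator[(String, Int)] = {
    val state = scala.collection.mutable.Map.empty[String, Int]
    words.map { w =>
      val c = state.getOrElse(w, 0) + 1
      state(w) = c
      (w, c)
    }
  }

  def main(args: Array[String]): Unit = {
    val input = Seq("a", "b", "a")
    println(batchWordCount(input))                   // one final result
    println(streamWordCount(input.iterator).toList)  // one result per input element
  }
}
```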

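1.4 Big data stream processing engines

1.5 Flink compared with Spark

Spark was designed for offline (batch) computation. In the Spark ecosystem, both stream and batch processing run on the same underlying engine, Spark Core. Spark Streaming continuously submits small micro-batch jobs to the Spark engine to achieve near-real-time computation, so Spark Streaming is essentially a special kind of batch processing.

Flink was designed for real-time computation. It supports both batch and stream processing, and treats batch processing (that is, bounded data) as a special case of stream processing.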

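2 Flink architecture

2.1 Key roles in Flink

- JobManager:

Also called the master. It coordinates distributed execution: it schedules tasks, coordinates checkpoints, and coordinates recovery on failure. A Flink runtime has at least one master; in high-availability mode there are several, one of which is the leader while the others are standby.

- TaskManager:

Also called a worker. It executes the tasks (more precisely, the subtasks) of a dataflow, and buffers and exchanges the data streams. A Flink runtime has at least one worker. JobManagers and TaskManagers can be started directly on physical machines or through a resource scheduling framework such as YARN. A TaskManager connects to the JobManager, reports its availability over RPC, and is then assigned work.

- TaskManagers and slots:

Each TaskManager (worker) is a JVM process and may execute one or more subtasks in separate threads. To control how many tasks a worker accepts, a worker has task slots (at least one per worker).

Each task slot represents a fixed-size subset of the TaskManager's resources. For example, a TaskManager with three slots divides its managed memory into three parts, one per slot. Slotting resources means a subtask does not compete for managed memory with subtasks from other jobs; instead it has a reserved amount of memory. Note that there is no CPU isolation: currently, slots only separate the managed memory of tasks.

By adjusting the number of task slots, users define how subtasks are isolated from each other. One slot per TaskManager means each task group runs in its own JVM (which may be started in a dedicated container), while several slots per TaskManager mean more subtasks share the same JVM. Tasks in the same JVM share TCP connections (via multiplexing) and heartbeat messages, and may also share data sets and data structures, which reduces per-task overhead.

A task slot is a static concept: it is the concurrent execution capacity a TaskManager has, configured with taskmanager.numberOfTaskSlots. Parallelism is a dynamic concept: it is the concurrency a program actually uses at run time, configured with parallelism.default. For example, with 3 TaskManagers and 3 task slots each, every TaskManager can accept 3 tasks, for 9 task slots in total. If parallelism.default=1, programs run with parallelism 1 by default, so only 1 of the 9 slots is used and 8 stay idle. Setting an appropriate parallelism is therefore necessary to use the cluster efficiently.

- Programs and dataflows: The basic building blocks of a Flink program are streams and transformations (note that the DataSets used by Flink's DataSet API are also streams internally). A stream can be thought of as an intermediate result, and a transformation is an operation that takes one or more streams as input and computes one or more result streams from them. When executed, Flink programs are mapped to streaming dataflows consisting of streams and transformation operators. Each dataflow starts with one or more sources and ends with one or more sinks. A dataflow resembles Spark's DAG, although special forms of cycles can be built through iterations. In most cases there is a one-to-one correspondence between the transformations in a program and the operators in the dataflow, but sometimes one transformation consists of several operators.

- Tasks and operator chains

For distributed execution, Flink chains operator subtasks together into tasks, and each task is executed by one thread. Chaining operators into tasks is a useful optimization: it reduces thread-to-thread handover and buffer-based data exchange, lowering latency while increasing throughput. Chaining behavior can be configured in the programming API.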
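The slot example above is simple arithmetic: total slots = number of TaskManagers × slots per TaskManager, and a job running with parallelism p occupies p of them (this sketch assumes one slot per parallel instance, as in the text's example). The object and method names are hypothetical.

```scala
// Hypothetical helper reproducing the slot arithmetic from the text.
object SlotMath {
  // Total slots = TaskManagers × taskmanager.numberOfTaskSlots.
  def totalSlots(taskManagers: Int, slotsPerTaskManager: Int): Int =
    taskManagers * slotsPerTaskManager

  // Idle slots when a job runs with the given parallelism. A parallelism above
  // the total cannot be satisfied by the cluster; the cap only keeps this
  // sketch from returning a negative number.
  def idleSlots(taskManagers: Int, slotsPerTaskManager: Int, parallelism: Int): Int = {
    val total = totalSlots(taskManagers, slotsPerTaskManager)
    total - math.min(parallelism, total)
  }

  def main(args: Array[String]): Unit =
    // 3 TaskManagers × 3 slots with parallelism.default = 1
    println(s"total=${totalSlots(3, 3)}, idle=${idleSlots(3, 3, 1)}")
}
```

In a real deployment these numbers come from taskmanager.numberOfTaskSlots and parallelism.default in flink-conf.yaml; a job can also override the default parallelism in code with env.setParallelism(n).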
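Conceptually, operator chaining fuses consecutive operators into one function that runs in a single thread, instead of handing every record through a buffer between threads. The plain-Scala sketch below is an analogy, not Flink's runtime implementation, and its names are hypothetical: the unchained version materializes an intermediate collection between stages, while the chained version composes the operators and passes each record straight through both.

```scala
// Conceptual model only: Flink's real chaining happens inside its runtime.
object ChainingModel {
  val parse: String => Int = _.trim.toInt
  val double: Int => Int = _ * 2

  // Unchained: the first operator finishes and hands a full buffer to the next stage.
  def unchained(input: Seq[String]): Seq[Int] = {
    val buffer = input.map(parse) // intermediate "exchange" between operators
    buffer.map(double)
  }

  // Chained: both operators are composed into one function, so each record
  // flows through them in a single pass without an intermediate buffer.
  val chainedOp: String => Int = parse andThen double
  def chained(input: Seq[String]): Seq[Int] = input.map(chainedOp)

  def main(args: Array[String]): Unit =
    println(chained(Seq("1", " 2", "3")))
}
```

In the DataStream API itself, chaining can be adjusted per operator with startNewChain() and disableChaining(), or switched off for a whole job with env.disableOperatorChaining().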

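2.2 Unbounded and bounded data streams

Unbounded streams: an unbounded stream has a start but no defined end. It does not terminate and keeps providing data as it is generated, so it must be processed continuously, that is, events must be processed promptly after they are ingested. It is not possible to wait for all input to arrive, because the input is unbounded and is never complete at any point in time. Processing unbounded data often requires ingesting events in a specific order (for example, the order in which they occurred) in order to reason about the completeness of results.

Bounded streams: a bounded stream has a defined start and end. It can be processed by ingesting all the data before performing any computation. Ordered ingestion is not required, because a bounded data set can always be sorted. Processing bounded streams is also known as batch processing.

Flink takes a different view of stream and batch processing: both are stream processing, and the only difference is whether the input is bounded or unbounded. Flink fully supports stream processing, where the input stream is treated as unbounded; batch processing is treated as a special kind of stream processing whose input stream is defined as bounded. On top of a single runtime (the Flink Runtime), Flink provides separate APIs for stream and batch processing, and these APIs in turn are the foundation for higher-level frameworks for streaming and batch applications.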
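The "batch is just a bounded stream" view can be illustrated with an analogy in plain Scala (not Flink code; names are hypothetical): the same per-record operator runs unchanged over a finite iterator and over an infinite one. With bounded input the program can consume everything and even sort it; with unbounded input it can only ever consume results as they arrive.

```scala
object BoundedVsUnbounded {
  // One per-record transformation shared by both kinds of input.
  def operator(in: Iterator[Int]): Iterator[Int] = in.filter(_ % 2 == 0).map(_ * 10)

  // Bounded: all data is available, so the output can be fully consumed and sorted.
  def bounded(data: Seq[Int]): List[Int] = operator(data.iterator).toList.sorted

  // Unbounded: the source never ends, so results can only be consumed as they
  // arrive; this sketch stops after n results because the stream itself never finishes.
  def unboundedPrefix(n: Int): List[Int] =
    operator(Iterator.from(1)).take(n).toList

  def main(args: Array[String]): Unit = {
    println(bounded(Seq(4, 1, 2, 3)))
    println(unboundedPrefix(3))
  }
}
```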

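2.3 Flink programming model

- DataStream API: API for real-time (stream) computation

- DataSet API: API for offline (batch) computation

- Table API: DataStreams or DataSets with a schema, queried with DSL-style syntax

- SQL: SQL queries can be executed directly on tables defined with the Table API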

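3 Flink environment setup

3.1 Download the installation package (https://flink.apache.org/downloads.html)

3.2 Edit conf/flink-conf.yaml

3.3 Edit conf/slaves (the list of TaskManager hosts)

3.4 Open the web UI (http://47.111.185.251:8081; 8081 is the JobManager's default web UI port)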

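4 Flink quick start

4.1 Initialize the quickstart project

curl https://flink.apache.org/q/quickstart-scala.sh | bash -s 1.7.1

The argument after bash -s is the Flink version of the generated project; here it matches flink.version (1.7.1) in the pom.xml below.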

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.myorg.quickstart</groupId>

<artifactId>quickstart</artifactId>

<version>0.1</version>

<packaging>jar</packaging>

<name>Flink Quickstart Job</name>

<url>http://www.myorganization.org</url>

<repositories>

<repository>

<id>apache.snapshots</id>

<name>Apache Development Snapshot Repository</name>

<url>https://repository.apache.org/content/repositories/snapshots/</url>

<releases>

<enabled>false</enabled>

</releases>

<snapshots>

<enabled>true</enabled>

</snapshots>

</repository>

</repositories>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<flink.version>1.7.1</flink.version>

<scala.binary.version>2.11</scala.binary.version>

<scala.version>2.11.12</scala.version>

</properties>

<dependencies>

<!-- Apache Flink dependencies -->

<!-- These dependencies are provided, because they should not be packaged into the JAR file. -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<!-- Scala Library, provided by Flink as well. -->

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka-0.10_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-redis_${scala.binary.version}</artifactId>

<version>1.1.5</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.7</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.17</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>com.typesafe.akka</groupId>

<artifactId>akka-stream_2.11</artifactId>

<version>2.4.20</version>

</dependency>


</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>3.0.0</version>

<executions>

<!-- Run shade goal on package phase -->

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<artifactSet>

<excludes>

<exclude>org.apache.flink:force-shading</exclude>

<exclude>com.google.code.findbugs:jsr305</exclude>

<exclude>org.slf4j:*</exclude>

<exclude>log4j:*</exclude>

</excludes>

</artifactSet>

<filters>

<filter>

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

<transformers>

<transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">

<mainClass>org.myorg.quickstart.StreamingJob</mainClass>

</transformer>

</transformers>

</configuration>

</execution>

</executions>

</plugin>

<!-- Java Compiler -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.1</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

<!-- Scala Compiler -->

<plugin>

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

<version>3.2.2</version>

<executions>

<execution>

<goals>

<goal>compile</goal>

<goal>testCompile</goal>

</goals>

</execution>

</executions>

</plugin>

<!-- Eclipse Scala Integration -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-eclipse-plugin</artifactId>

<version>2.8</version>

<configuration>

<downloadSources>true</downloadSources>

<projectnatures>

<projectnature>org.scala-ide.sdt.core.scalanature</projectnature>

<projectnature>org.eclipse.jdt.core.javanature</projectnature>

</projectnatures>

<buildcommands>

<buildcommand>org.scala-ide.sdt.core.scalabuilder</buildcommand>

</buildcommands>

<classpathContainers>

<classpathContainer>org.scala-ide.sdt.launching.SCALA_CONTAINER</classpathContainer>

<classpathContainer>org.eclipse.jdt.launching.JRE_CONTAINER</classpathContainer>

</classpathContainers>

<excludes>

<exclude>org.scala-lang:scala-library</exclude>

<exclude>org.scala-lang:scala-compiler</exclude>

</excludes>

<sourceIncludes>

<sourceInclude>**/*.scala</sourceInclude>

<sourceInclude>**/*.java</sourceInclude>

</sourceIncludes>

</configuration>

</plugin>

<plugin>

<groupId>org.codehaus.mojo</groupId>

<artifactId>build-helper-maven-plugin</artifactId>

<version>1.7</version>

<executions>

<!-- Add src/main/scala to eclipse build path -->

<execution>

<id>add-source</id>

<phase>generate-sources</phase>

<goals>

<goal>add-source</goal>

</goals>

<configuration>

<sources>

<source>src/main/scala</source>

</sources>

</configuration>

</execution>

<!-- Add src/test/scala to eclipse build path -->

<execution>

<id>add-test-source</id>

<phase>generate-test-sources</phase>

<goals>

<goal>add-test-source</goal>

</goals>

<configuration>

<sources>

<source>src/test/scala</source>

</sources>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

<profiles>

<profile>

<id>add-dependencies-for-IDEA</id>

<activation>

<property>

<name>idea.version</name>

</property>

</activation>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

<scope>compile</scope>

</dependency>

</dependencies>

</profile>

</profiles>

</project>

Real-time (streaming) example:

package com.wedoctor.flink

import org.apache.flink.streaming.api.scala._
import org.apache.log4j.{Level, Logger}

/**
 * created by Liuzc
 */
object WordCount {
  // Set the log level
  Logger.getLogger("org").setLevel(Level.ERROR)

  def main(args: Array[String]): Unit = {
    // Entry point for Flink real-time (streaming) jobs
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    // Source: read lines from a socket (start a text server first, e.g. `nc -lk 8888` on that host)
    val lines: DataStream[String] = env.socketTextStream("192.168.99.156", 8888)
    // Transformations: split into words, pair each word with 1, group by word, sum the counts
    val words: DataStream[String] = lines.flatMap(_.split(" "))
    val wordAndOne: DataStream[(String, Int)] = words.map((_, 1))
    val groupedData: KeyedStream[(String, Int), String] = wordAndOne.keyBy(_._1)
    val reduced: DataStream[(String, Int)] = groupedData.reduce((t1, t2) => (t1._1, t1._2 + t2._2))
    // Sink: print the results
    reduced.print()
    // Streaming jobs are lazy: nothing runs until execute() is called
    env.execute("Flink WordCount")
  }
}

批量处理案例

package com.wedoctor.flink

import org.apache.flink.api.scala._

import org.apache.flink.core.fs.FileSystem.WriteMode

import org.apache.log4j.{Level, Logger}

object WordCount2 {

//设置日志级别

Logger.getLogger("org").setLevel(Level.ERROR)

def main(args: Array[String]): Unit = {

//Flink入口

val env: ExecutionEnvironment = ExecutionEnvironment.getExecutionEnvironment

//读取本地文件(数据源)

val localLines: DataSet[String] = env.readTextFile("D:\\word.txt")

val words: DataSet[String] = localLines.flatMap(_.split(" "))

val wordWithOne: DataSet[(String, Int)] = words.map((_,1))

val counts: AggregateDataSet[(String, Int)] = wordWithOne.groupBy(0).sum(1)

//输出结果

counts.print()

//保存结果到txt文件

counts.writeAsText("D:\\output\\wordcount.txt", WriteMode.OVERWRITE).setParallelism(1)

env.execute("Scala WordCount Example")

}

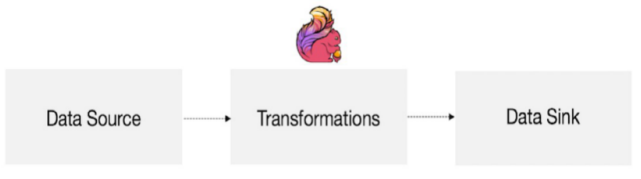

}4.2 Flink运行模型

A Flink program consists of three parts: Source, Transformation, and Sink. The Source reads the data, the Transformation transforms it, and the Sink outputs the final results.
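The three parts can be mirrored in a plain-Scala sketch (names are hypothetical, and this is not the Flink API): the source produces records, the transformation is an ordinary function over them, and the sink consumes the results, here by appending them to a buffer.

```scala
import scala.collection.mutable.ListBuffer

object PipelineModel {
  // Source: produces records (here from a fixed collection).
  def source(): Iterator[String] = Iterator("hello flink", "hello scala")

  // Transformation: split lines into words and pair each word with 1.
  def transformation(lines: Iterator[String]): Iterator[(String, Int)] =
    lines.flatMap(_.split(" ").iterator).map(w => (w, 1))

  // Sink: consumes the final records; this one appends them to a buffer.
  def run(sink: ListBuffer[(String, Int)]): Unit =
    transformation(source()).foreach(sink += _)

  def main(args: Array[String]): Unit = {
    val out = ListBuffer.empty[(String, Int)]
    run(out)
    out.foreach(println)
  }
}
```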

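5. Flink Source

In Flink, a Source is responsible for reading data.

5.1 File-based sources

Reads text files that follow the TextInputFormat specification line by line and returns each line as a String.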

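- readTextFile

import org.apache.flink.streaming.api.scala._

val env = StreamExecutionEnvironment.getExecutionEnvironment
// args(0) is the input path, e.g. file:///path/to/file or hdfs://host:port/path
val inputStream = env.readTextFile(args(0))
inputStream.print()
env.execute("hello-world")

5.2 Socket-based sources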

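Reads data from a socket; elements can be separated by a delimiter.

- socketTextStream

// Elements are separated by '\n' unless a delimiter argument is passed
val inputStream = env.socketTextStream("localhost", 8888)

5.3 Collection-based sources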

Creates a data stream from a collection; all elements of the collection must have the same type.

- fromCollection(seq)

package com.wedoctor.flink

import org.apache.flink.streaming.api.scala._
import org.apache.log4j.{Level, Logger}

object SourceDemo {
  Logger.getLogger("org").setLevel(Level.ERROR)

  def main(args: Array[String]): Unit = {
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    val list = List(1, 2, 3, 4, 5, 6, 7, 8, 9)
    // Source: a stream built from a local Scala collection
    val ds: DataStream[Int] = env.fromCollection(list)
    // Keep only the even numbers
    val filtered: DataStream[Int] = ds.filter(_ % 2 == 0)
    filtered.print()
    env.execute("SourceDemo")
  }
}

- fromCollection(Iterator)

val iterator = Iterator(1, 2, 3, 4)
// Creates a non-parallel source (parallelism 1) from the iterator
val inputStream = env.fromCollection(iterator)

- fromElements(elements: _*)

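Creates a data stream from a given sequence of objects; all objects must be of the same type.

val lst1 = List(1, 2, 3, 4, 5)
val lst2 = List(6, 7, 8, 9, 10)
// fromElements takes varargs, so the combined list is expanded with `: _*`;
// passing lst1 and lst2 directly would create a stream of two List[Int] elements
val inputStream = env.fromElements(lst1 ++ lst2: _*)

- generateSequence(from, to)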

Generates a sequence of numbers in the given interval, in parallel.

val inputStream = env.generateSequence(1, 10)

6. Flink Transformation

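In Flink, a Transformation converts data; each Transformation call produces a new DataStream.

6.1 map

import org.apache.flink.streaming.api.scala._

val env = StreamExecutionEnvironment.getExecutionEnvironment
val inputStream = env.generateSequence(1, 10)
// map turns each element into exactly one new element: 1..10 becomes 2, 4, ..., 20
val mappedStream = inputStream.map(x => x * 2)

6.2 keyBy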

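DataStream → KeyedStream: logically partitions a stream into disjoint partitions, each containing the elements with the same key; internally this is implemented by hashing. When the key is given as a field position, e.g. keyBy(0), the elements must be tuples; a key selector function, such as keyBy(_._1) in the WordCount example, works for other element types as well.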
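A simplified model of hash partitioning, assuming a plain hashCode modulo the parallelism (Flink itself applies a further hash and maps keys through key groups, so its actual partition numbers differ). What it shows is the property described above: every element with the same key lands in the same partition, and partitions are disjoint. The names are hypothetical.

```scala
object KeyByModel {
  // Simplified: partition = non-negative hashCode modulo parallelism.
  def partitionFor(key: Any, parallelism: Int): Int =
    Math.floorMod(key.hashCode, parallelism)

  // Split (key, value) records into disjoint partitions by key.
  def partition[K, V](records: Seq[(K, V)], parallelism: Int): Map[Int, Seq[(K, V)]] =
    records.groupBy { case (k, _) => partitionFor(k, parallelism) }

  def main(args: Array[String]): Unit = {
    val parts = partition(Seq(("a", 1), ("b", 1), ("a", 1), ("c", 1)), 2)
    parts.toSeq.sortBy(_._1).foreach { case (p, recs) => println(s"partition $p: $recs") }
  }
}
```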

6.3 reduce

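KeyedStream → DataStream: a rolling aggregation on a keyed (grouped) stream. It combines the current element with the last reduced value and emits the new value, so the resulting stream contains the result of every aggregation step, not only the final result.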
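The "one result per element" behavior is easy to miss. Below is a plain-Scala model of it (hypothetical names; a per-key mutable map stands in for Flink's keyed state) that uses the same reduce function as the WordCount example.

```scala
object RollingReduceModel {
  type WC = (String, Int)

  // Same reduce function as the WordCount example: keep the key, add the counts.
  val reduceFn: (WC, WC) => WC = (t1, t2) => (t1._1, t1._2 + t2._2)

  // Emits one result per input element, keyed by the first tuple field.
  def rollingReduce(input: Seq[WC]): Seq[WC] = {
    val state = scala.collection.mutable.Map.empty[String, WC]
    input.map { t =>
      val updated = state.get(t._1).map(prev => reduceFn(prev, t)).getOrElse(t)
      state(t._1) = updated
      updated
    }
  }

  def main(args: Array[String]): Unit =
    rollingReduce(Seq(("a", 1), ("b", 1), ("a", 1))).foreach(println)
}
```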

6.4 connect

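DataStream, DataStream → ConnectedStreams: connects two data streams while retaining their types. After connect, the two streams are merely placed in the same stream; internally each keeps its own data and form unchanged, and the two remain independent of each other.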
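One way to picture ConnectedStreams in plain Scala (a model, not Flink's implementation; names are hypothetical) is as a single sequence of Either values: Left elements come from the first stream, Right elements from the second, and a co-map applies a separate function to each side, so each side keeps its own type until that point.

```scala
object ConnectModel {
  // Put two typed streams into one sequence without changing either side's type.
  // (Flink interleaves the inputs in arrival order; this model simply concatenates them.)
  def connect[A, B](s1: Seq[A], s2: Seq[B]): Seq[Either[A, B]] =
    s1.map(a => Left(a): Either[A, B]) ++ s2.map(b => Right(b): Either[A, B])

  // Co-map: one function per input type, both producing a common result type R.
  def coMap[A, B, R](connected: Seq[Either[A, B]])(f1: A => R, f2: B => R): Seq[R] =
    connected.map(_.fold(f1, f2))

  def main(args: Array[String]): Unit = {
    val connected = connect(Seq("a", "b"), Seq(1, 2))
    println(coMap(connected)(_.toUpperCase, n => (n * 10).toString))
  }
}
```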

package com.wedoctor.flink

import org.apache.flink.streaming.api.scala._
import org.apache.log4j.{Level, Logger}

object TranformationDemo {
  Logger.getLogger("org").setLevel(Level.ERROR)

  def main(args: Array[String]): Unit = {
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    val s1: DataStream[String] = env.fromCollection(List("a", "b", "c", "d"))
    val s2: DataStream[Int] = env.fromCollection(List(1, 2, 3, 4))
    // connect keeps both element types: String from s1, Int from s2
    val streamConnect: ConnectedStreams[String, Int] = s1.connect(s2)
    // CoMap: the first function handles s1's elements, the second handles s2's;
    // the results share one type (Any here, because String and Int differ)
    val s3: DataStream[Any] = streamConnect.map(a => a.toUpperCase, b => b * 10)
    s3.print()
    env.execute("aa")
  }
}

6.5 split

DataStream → SplitStream: splits a DataStream into two or more DataStreams according to some characteristic of the elements.

6.6 select

SplitStream → DataStream: obtains one or more DataStreams from a SplitStream.
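split/select can be modeled in plain Scala as tagging each element with one or more output names and then filtering by name. In the Flink 1.7 Scala API the corresponding calls are split, with a function returning the names, and select on the resulting SplitStream (later Flink versions deprecate them in favor of side outputs). The helper names below are hypothetical and the code does not use Flink.

```scala
object SplitSelectModel {
  // Like the function passed to split: returns the output name(s) for an element.
  val selector: Int => Seq[String] = n => if (n % 2 == 0) Seq("even") else Seq("odd")

  // Tag every element with its output names (the "SplitStream").
  def split(input: Seq[Int]): Seq[(Int, Seq[String])] = input.map(n => (n, selector(n)))

  // select: keep the elements tagged with any of the requested names.
  def select(tagged: Seq[(Int, Seq[String])], names: String*): Seq[Int] =
    tagged.collect { case (n, tags) if tags.exists(t => names.contains(t)) => n }

  def main(args: Array[String]): Unit = {
    val tagged = split(1 to 6)
    println(select(tagged, "even"))
    println(select(tagged, "odd", "even"))
  }
}
```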

7 Flink Sink

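In Flink, a Sink outputs the final data.

7.1 print

Prints the value of each element's toString() method to standard output or standard error. Optionally, a prefix can be added to the output, which helps distinguish different print calls. If the parallelism is greater than 1, each output line is also tagged with an identifier of the task that produced it.

7.2 writeAsText

Writes elements line by line as strings (TextOutputFormat); the strings are obtained by calling each element's toString() method.

7.3 writeAsCsv

Writes tuples to a file as comma-separated values (CsvOutputFormat). The row and field delimiters are configurable. Each field's value comes from the object's toString() method.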
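What writeAsCsv produces for tuples can be sketched in plain Scala: each field's toString joined by a field delimiter, one row per line. The defaults here (comma and newline) are assumptions of this sketch, and the function names are hypothetical; it does not call Flink's CsvOutputFormat.

```scala
object CsvModel {
  // Render one tuple: every field's toString, joined by the field delimiter.
  def row(tuple: Product, fieldDelimiter: String = ","): String =
    tuple.productIterator.map(_.toString).mkString(fieldDelimiter)

  // Render several tuples, one row per line, with configurable delimiters.
  def rows(tuples: Seq[Product], rowDelimiter: String = "\n", fieldDelimiter: String = ","): String =
    tuples.map(row(_, fieldDelimiter)).mkString(rowDelimiter)

  def main(args: Array[String]): Unit =
    println(rows(Seq(("hello", 2), ("flink", 1))))
}
```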

7.4 writeUsingOutputFormat

Method and base class (FileOutputFormat) for custom file output; supports custom conversion of objects to bytes.

7.5 writeToSocket

Writes elements to a socket according to a SerializationSchema.

8 Integrating Flink with Kafka and Redis

8.1 Integrating Flink with Kafka

package com.wedoctor.flink

import org.apache.flink.api.common.restartstrategy.RestartStrategies
import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.api.java.utils.ParameterTool
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.kafka.{FlinkKafkaConsumer010, FlinkKafkaProducer010}
import org.apache.log4j.{Level, Logger}

/**
 * Example arguments:
 * --input-topic test_in --output-topic test_out --bootstrap.servers wedoctor1:9092,wedoctor2:9092,wedoctor3:9092 --auto.offset.reset earliest --group.id liuzc-test
 */
object KafkaConnectorDemo {
  Logger.getLogger("org").setLevel(Level.ERROR)

  def main(args: Array[String]): Unit = {
    // Parse the command-line arguments
    val params: ParameterTool = ParameterTool.fromArgs(args)
    if (params.getNumberOfParameters < 4) {
      println("Missing parameters!\n"
        + "Usage: Kafka --input-topic <topic> "
        + "--output-topic <topic> "
        + "--bootstrap.servers <kafka brokers> "
        + "--group.id <some id> [--prefix <prefix>]")
      return
    }
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    env.getConfig.disableSysoutLogging
    // Restart strategy: up to 4 restart attempts, 1000 ms apart
    env.getConfig.setRestartStrategy(RestartStrategies.fixedDelayRestart(4, 1000))
    // Create a checkpoint every 5 seconds
    env.enableCheckpointing(5000)
    // Make the parameters available in the web interface
    env.getConfig.setGlobalJobParameters(params)
    // Source: a Kafka consumer for Kafka 0.10.x; all parameters
    // (bootstrap.servers, group.id, ...) are passed on as Kafka properties
    val kafkaConsumer: FlinkKafkaConsumer010[String] = new FlinkKafkaConsumer010(
      params.getRequired("input-topic"),
      new SimpleStringSchema,
      params.getProperties)
    val lines: DataStream[String] = env.addSource(kafkaConsumer)
    // Transformation: drop lines that start with "hello"
    val filtered: DataStream[String] = lines.filter(!_.startsWith("hello"))
    //filtered.print()
    // Sink: a Kafka producer for Kafka 0.10.x that writes the data back to Kafka
    val kafkaProducer = new FlinkKafkaProducer010(
      params.getRequired("output-topic"),
      new SimpleStringSchema,
      params.getProperties)
    filtered.addSink(kafkaProducer)
    // Run the job
    env.execute("Kafka 0.10 Example")
  }
}

8.2 Integrating Flink with Redis

package com.wedoctor.flink

import org.apache.flink.api.common.restartstrategy.RestartStrategies
import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.api.java.utils.ParameterTool
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010
import org.apache.flink.streaming.connectors.redis.RedisSink
import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisPoolConfig
import org.apache.flink.streaming.connectors.redis.common.mapper.{RedisCommand, RedisCommandDescription, RedisMapper}
import org.apache.log4j.{Level, Logger}

/**
 * Example arguments:
 * --input-topic test_in --output-topic test_out --bootstrap.servers wedoctor1:9092,wedoctor2:9092,wedoctor3:9092 --auto.offset.reset earliest --group.id liuzc-test
 */
object RedisConnectorsDemo {
  Logger.getLogger("org").setLevel(Level.ERROR)

  def main(args: Array[String]): Unit = {
    // Parse the input arguments
    val params = ParameterTool.fromArgs(args)
    if (params.getNumberOfParameters < 4) {
      println("Missing parameters!\n"
        + "Usage: Kafka --input-topic <topic> "
        + "--output-topic <topic> "
        + "--bootstrap.servers <kafka brokers> "
        + "--group.id <some id> [--prefix <prefix>]")
      return
    }
    val env = StreamExecutionEnvironment.getExecutionEnvironment
    // Job configuration
    // Disable sysout logging
    env.getConfig.disableSysoutLogging
    // Restart strategy: up to 4 restart attempts, 10000 ms apart
    env.getConfig.setRestartStrategy(RestartStrategies.fixedDelayRestart(4, 10000))
    // Create a checkpoint every 5 seconds
    env.enableCheckpointing(5000)
    // Make the parameters available in the web interface
    env.getConfig.setGlobalJobParameters(params)
    // Source: read data from Kafka
    val kafkaSource = new FlinkKafkaConsumer010(
      params.getRequired("input-topic"),
      new SimpleStringSchema,
      params.getProperties)
    val lines: DataStream[String] = env.addSource(kafkaSource)
    // Word count: split, pair with 1, group by word (field 0), sum the counts (field 1)
    val result: DataStream[(String, Int)] = lines.flatMap(_.split(" ")).map((_, 1)).keyBy(0).sum(1)
    // Sink: write the counts into the Redis hash "WC" (field = word, value = count)
    val jedisConf = new FlinkJedisPoolConfig.Builder().setHost("192.168.163.10").setPort(6379).build()
    result.addSink(new RedisSink[(String, Int)](jedisConf, new RedisMapper[(String, Int)] {
      // HSET WC <word> <count>, e.g. WC -> {(zc, 21), (zx, 10)}
      override def getCommandDescription = new RedisCommandDescription(RedisCommand.HSET, "WC")
      override def getKeyFromData(t: (String, Int)) = t._1
      override def getValueFromData(t: (String, Int)) = t._2.toString
    }))
    // Run the job
    env.execute(this.getClass.getSimpleName)
  }
}
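Because sum(1) emits an updated count for every incoming word, the Redis sink receives many HSET commands for the same field; since HSET overwrites the field, the hash ends up holding the latest count per word. A plain-Scala model of that effect, with a mutable map standing in for the Redis hash "WC" (no Redis client is involved; the names are hypothetical):

```scala
import scala.collection.mutable

object RedisHsetModel {
  // Stand-in for the Redis hash "WC": field -> value, both strings.
  val wc: mutable.Map[String, String] = mutable.Map.empty

  // Mirrors the RedisMapper above: field = word, value = count as a string.
  // Like HSET, writing an existing field overwrites its old value.
  def hset(record: (String, Int)): Unit = wc(record._1) = record._2.toString

  // Running word count as emitted by keyBy(0).sum(1): one update per incoming word.
  def runningCounts(words: Seq[String]): Seq[(String, Int)] = {
    val counts = mutable.Map.empty[String, Int]
    words.map { w =>
      counts(w) = counts.getOrElse(w, 0) + 1
      (w, counts(w))
    }
  }

  def main(args: Array[String]): Unit = {
    runningCounts(Seq("zc", "zx", "zc")).foreach(hset)
    println(wc)
  }
}
```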