Dataset operations:

scala> case class Customer(id:Int,firstName:String,lastName:String,homePhone:String,workPhone:String,address:String,city:String,state:String,zipCode:String)

defined class Customer

scala> val rddCustomers=sc.textFile("file:///home/data/customers.csv").map(line => line.split(",",-1).map(y =>y.replace("\"","")))

rddCustomers: org.apache.spark.rdd.RDD[Array[String]] = MapPartitionsRDD[2] at map at <console>:24

scala> rddCustomers.take(3)

res0: Array[Array[String]] = Array(Array(1, Richard, Hernandez, XXXXXXXXX, XXXXXXXXX, 6303 Heather Plaza, Brownsville, TX, 78521), Array(2, Mary, Barrett, XXXXXXXXX, XXXXXXXXX, 9526 Noble Embers Ridge, Littleton, CO, 80126), Array(3, Ann, Smith, XXXXXXXXX, XXXXXXXXX, 3422 Blue Pioneer Bend, Caguas, PR, 00725))

scala> val rddCustomers=sc.textFile("file:///home/data/customers.csv").map(line => line.split(",",-1).map(y =>y.replace("\"",""))).map(x => Customer(x(0).toInt,x(1),x(2),x(3),x(4),x(5),x(6),x(7),x(8)))

rddCustomers: org.apache.spark.rdd.RDD[Customer] = MapPartitionsRDD[6] at map at <console>:26

scala> rddCustomers.take(2)

res1: Array[Customer] = Array(Customer(1,Richard,Hernandez,XXXXXXXXX,XXXXXXXXX,6303 Heather Plaza,Brownsville,TX,78521), Customer(2,Mary,Barrett,XXXXXXXXX,XXXXXXXXX,9526 Noble Embers Ridge,Littleton,CO,80126))
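The parsing in the two REPL steps above (split on commas, strip the double quotes, build a `Customer`) can also be factored into a plain Scala function. This is a minimal sketch, assuming the nine-column layout seen in `customers.csv`; `CustomerParser` is a hypothetical name. Unlike the one-liner, it returns `None` for a header or malformed line instead of throwing from `toInt` or an out-of-range index. Like the one-liner, it does not handle commas inside quoted fields.

```scala
import scala.util.Try

case class Customer(id: Int, firstName: String, lastName: String,
                    homePhone: String, workPhone: String, address: String,
                    city: String, state: String, zipCode: String)

// Hypothetical helper mirroring the REPL pipeline: split(",", -1) keeps
// trailing empty fields, and every double quote is removed from each field.
object CustomerParser {
  val FieldCount = 9

  def parse(line: String): Option[Customer] = {
    val f = line.split(",", -1).map(_.replace("\"", ""))
    if (f.length != FieldCount) None // wrong column count: skip the line
    else
      Try(f(0).trim.toInt).toOption.map { id => // non-numeric id (e.g. a header): skip
        Customer(id, f(1), f(2), f(3), f(4), f(5), f(6), f(7), f(8))
      }
  }
}
```

In spark-shell the definitions can be entered with `:paste`, and the RDD built with `sc.textFile("file:///home/data/customers.csv").flatMap(line => CustomerParser.parse(line).toList)`, so bad lines are dropped instead of failing the job.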

1. Create a Dataset:

scala> val dsCustomers=spark.createDataset(rddCustomers)

dsCustomers: org.apache.spark.sql.Dataset[Customer] = [id: int, firstName: string ... 7 more fields]

scala> dsCustomers.printSchema

root
 |-- id: integer (nullable = false)
 |-- firstName: string (nullable = true)
 |-- lastName: string (nullable = true)
 |-- homePhone: string (nullable = true)
 |-- workPhone: string (nullable = true)
 |-- address: string (nullable = true)
 |-- city: string (nullable = true)
 |-- state: string (nullable = true)
 |-- zipCode: string (nullable = true)
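Instead of splitting lines by hand, Spark's built-in CSV reader can load the file straight into a `Dataset[Customer]`. It strips the surrounding double quotes itself (its default quote character is `"`) and, unlike the manual split, keeps commas that appear inside quoted fields. This is a sketch, assuming the file has no header row and its columns follow the `Customer` field order; `LoadCustomers` is a hypothetical name. The schema is taken from the case class with `Encoders.product`, so `zipCode` is read as a string and a value such as `00725` keeps its leading zeros, which schema inference could lose by guessing an integer type.

```scala
import org.apache.spark.sql.{Dataset, Encoder, Encoders, SparkSession}

case class Customer(id: Int, firstName: String, lastName: String,
                    homePhone: String, workPhone: String, address: String,
                    city: String, state: String, zipCode: String)

object LoadCustomers {
  private implicit val customerEncoder: Encoder[Customer] = Encoders.product[Customer]

  // Assumes a headerless CSV whose columns are in Customer's field order.
  def load(spark: SparkSession, path: String): Dataset[Customer] =
    spark.read
      .schema(customerEncoder.schema)  // explicit schema: no inference pass, zipCode stays a string
      .option("header", "false")
      .option("mode", "DROPMALFORMED") // drop rows whose id cannot be parsed as an integer
      .csv(path)
      .as[Customer]

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("load-customers").getOrCreate()
    val ds = load(spark, "file:///home/data/customers.csv")
    ds.printSchema()
    ds.show(3)
    spark.stop()
  }
}
```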

2. Operate on a Dataset (filter rows):

scala> dsCustomers.filter(x =>x.lastName =="Smith").show

scala> dsCustomers.filter($"city" ===lit("Caguas")).show
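The two filters above use the two styles a `Dataset` supports: a typed lambda over `Customer` objects, and an untyped `Column` expression. The self-contained sketch below shows both side by side; the object name is hypothetical and the sample rows are taken from the `take` output above. A misspelled field in the lambda is a compile error, while a misspelled name in `col(...)` only fails when Spark analyzes the query. In exchange, Catalyst can inspect a `Column` predicate (for example to push it down to the data source), whereas the lambda is opaque to it. Since `===` wraps a plain value in a literal, `lit(...)` is optional there.

```scala
import org.apache.spark.sql.{Dataset, SparkSession}
import org.apache.spark.sql.functions.col

case class Customer(id: Int, firstName: String, lastName: String,
                    homePhone: String, workPhone: String, address: String,
                    city: String, state: String, zipCode: String)

object FilterStyles {
  // Typed: the lambda receives a Customer, so field names are checked by the compiler.
  def byLastName(ds: Dataset[Customer], name: String): Dataset[Customer] =
    ds.filter(c => c.lastName == name)

  // Untyped: a Column predicate; === turns the String into a literal, so lit(...) is not needed.
  def byCity(ds: Dataset[Customer], city: String): Dataset[Customer] =
    ds.filter(col("city") === city)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("filter-styles").getOrCreate()
    import spark.implicits._
    val ds = Seq(
      Customer(1, "Richard", "Hernandez", "XXXXXXXXX", "XXXXXXXXX", "6303 Heather Plaza", "Brownsville", "TX", "78521"),
      Customer(3, "Ann", "Smith", "XXXXXXXXX", "XXXXXXXXX", "3422 Blue Pioneer Bend", "Caguas", "PR", "00725")
    ).toDS()
    byLastName(ds, "Smith").show()
    byCity(ds, "Caguas").show()
    spark.stop()
  }
}
```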

3. Run a join query:

scala> dsCustomers.alias("c").join(dfOrders.alias("o"),col("c.id")===col("o.OrderCustomerID"),"inner").show
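The `join` above returns an untyped `DataFrame` holding the columns of both sides. When both inputs are Datasets, `joinWith` keeps the element types and returns pairs instead. This is a sketch, assuming an `Order` case class with the fields that appear in `dfOrders` (the same definition as in step 5); `TypedJoin` is a hypothetical name.

```scala
import org.apache.spark.sql.Dataset

case class Customer(id: Int, firstName: String, lastName: String,
                    homePhone: String, workPhone: String, address: String,
                    city: String, state: String, zipCode: String)

case class Order(OrderID: Int, OrderDate: String, OrderCustomerID: Int, OrderStatus: String)

object TypedJoin {
  // Inner join on the customer id; each result element is a (Customer, Order) pair,
  // so downstream code can use c.lastName, o.OrderStatus, etc. with compile-time checks.
  def customerOrders(customers: Dataset[Customer], orders: Dataset[Order]): Dataset[(Customer, Order)] =
    customers.joinWith(orders, customers("id") === orders("OrderCustomerID"), "inner")
}
```

In the shell session this corresponds to `dsCustomers.joinWith(dsOrder, dsCustomers("id") === dsOrder("OrderCustomerID"), "inner").show`, once `dsOrder` has been created as in step 5.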

4. A Dataset can be converted to a DataFrame:

scala> val dfc=dsCustomers.toDF

dfc: org.apache.spark.sql.DataFrame = [id: int, firstName: string ... 7 more fields]

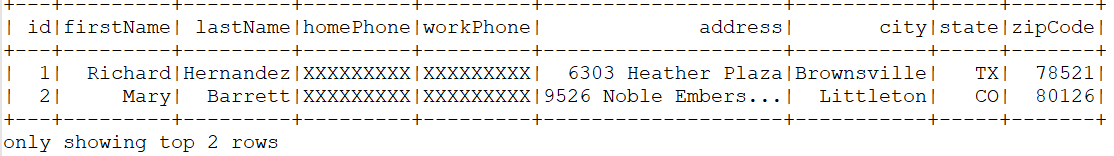

scala> dfc.show(2)
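`toDF` does not convert or copy the data; it only drops the static element type, since Spark defines `DataFrame` as `Dataset[Row]`. After the conversion, fields are read from generic `Row` objects by column name rather than through `Customer` accessors. A sketch with hypothetical object and method names:

```scala
import org.apache.spark.sql.{DataFrame, Dataset, Row}

case class Customer(id: Int, firstName: String, lastName: String,
                    homePhone: String, workPhone: String, address: String,
                    city: String, state: String, zipCode: String)

object ToDFExample {
  // Typed access: each element is a Customer.
  def lastNamesTyped(ds: Dataset[Customer]): Seq[String] =
    ds.collect().map(_.lastName).toSeq

  // Untyped access: after toDF() each element is a Row, read by column name.
  def lastNamesUntyped(ds: Dataset[Customer]): Seq[String] = {
    val df: DataFrame = ds.toDF() // DataFrame is an alias for Dataset[Row]
    df.collect().map((r: Row) => r.getAs[String]("lastName")).toSeq
  }
}
```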

5. Convert a DataFrame to a Dataset:

scala> dfOrders.printSchema

root
 |-- OrderDate: string (nullable = true)
 |-- OrderStatus: string (nullable = true)
 |-- OrderID: integer (nullable = true)
 |-- OrderCustomerID: integer (nullable = true)

scala> case class Order(OrderID:Int,OrderDate:String,OrderCustomerID:Int,OrderStatus:String)

defined class Order

scala> val dsOrder=dfOrders.as[Order]

dsOrder: org.apache.spark.sql.Dataset[Order] = [OrderDate: string, OrderStatus: string ... 2 more fields]
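`as[Order]` matches DataFrame columns to case-class fields by name, not by position, which is why it works even though `dfOrders` lists `OrderDate` first and `OrderID` third. A column the case class needs but the DataFrame lacks makes the conversion fail instead. This is a sketch with hypothetical placeholder rows; `AsByName` is a hypothetical name.

```scala
import org.apache.spark.sql.{DataFrame, Dataset, Encoder, Encoders, SparkSession}
import scala.util.Try

case class Order(OrderID: Int, OrderDate: String, OrderCustomerID: Int, OrderStatus: String)

object AsByName {
  private implicit val orderEncoder: Encoder[Order] = Encoders.product[Order]

  // Columns are bound to fields by name, so the DataFrame's column order does not matter.
  def toOrders(df: DataFrame): Dataset[Order] = df.as[Order]

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("as-by-name").getOrCreate()
    import spark.implicits._
    // Hypothetical row, with columns in the same order as dfOrders (not the case-class order).
    val df = Seq(("2020-01-01", "CLOSED", 1, 3))
      .toDF("OrderDate", "OrderStatus", "OrderID", "OrderCustomerID")
    toOrders(df).show()
    // Dropping a field the case class needs makes the conversion fail.
    println(Try(toOrders(df.drop("OrderStatus")).collect()).isFailure)
    spark.stop()
  }
}
```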

6. Compare the types:

scala> dsOrder

res9: org.apache.spark.sql.Dataset[Order] = [OrderDate: string, OrderStatus: string ... 2 more fields]

scala> dfOrders

res10: org.apache.spark.sql.DataFrame = [OrderDate: string, OrderStatus: string ... 2 more fields]

scala> dsOrder.printSchema

root
 |-- OrderDate: string (nullable = true)
 |-- OrderStatus: string (nullable = true)
 |-- OrderID: integer (nullable = true)
 |-- OrderCustomerID: integer (nullable = true)
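As the `printSchema` output shows, `as[Order]` changes only the static type: the columns keep the DataFrame's order (`OrderDate` first) rather than the case-class order. If field order matters, for example before writing the data out, one option is to select the columns in the order the encoder reports and then convert. A sketch; `AlignColumns` is a hypothetical name.

```scala
import org.apache.spark.sql.{DataFrame, Dataset, Encoder, Encoders}
import org.apache.spark.sql.functions.col

case class Order(OrderID: Int, OrderDate: String, OrderCustomerID: Int, OrderStatus: String)

object AlignColumns {
  private implicit val orderEncoder: Encoder[Order] = Encoders.product[Order]

  // Project the columns into case-class field order first, then attach the type.
  def toAlignedOrders(df: DataFrame): Dataset[Order] = {
    val fieldOrder = orderEncoder.schema.fieldNames // OrderID, OrderDate, OrderCustomerID, OrderStatus
    df.select(fieldOrder.map(name => col(name)): _*).as[Order]
  }
}
```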

7. Look up the data types (schema) of a case class:

scala> import org.apache.spark.sql.catalyst.ScalaReflection

import org.apache.spark.sql.catalyst.ScalaReflection


scala> import org.apache.spark.sql.types.StructType

import org.apache.spark.sql.types.StructType

scala> val schema=ScalaReflection.schemaFor[Order].dataType.asInstanceOf[StructType]

schema: org.apache.spark.sql.types.StructType = StructType(StructField(OrderID,IntegerType,false), StructField(OrderDate,StringType,true), StructField(OrderCustomerID,IntegerType,false), StructField(OrderStatus,StringType,true))

scala> schema

res12: org.apache.spark.sql.types.StructType = StructType(StructField(OrderID,IntegerType,false), StructField(OrderDate,StringType,true), StructField(OrderCustomerID,IntegerType,false), StructField(OrderStatus,StringType,true))
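`ScalaReflection` lives in Spark's internal `catalyst` package, which carries no compatibility guarantees between releases. The public `Encoders.product` API exposes the same case-class-derived schema. A sketch, assuming the `Order` class above; `OrderSchema` is a hypothetical name. As in the `ScalaReflection` output, `OrderID` and `OrderCustomerID` come out non-nullable because Scala `Int` is a primitive, whereas `dfOrders` reports them as nullable; a null in either column would be expected to make converting that row to an `Order` fail at runtime.

```scala
import org.apache.spark.sql.Encoders
import org.apache.spark.sql.types.StructType

case class Order(OrderID: Int, OrderDate: String, OrderCustomerID: Int, OrderStatus: String)

object OrderSchema {
  // Schema Spark derives for Order, obtained through a public API instead of catalyst internals.
  val schema: StructType = Encoders.product[Order].schema

  def main(args: Array[String]): Unit = {
    schema.printTreeString()
    // Primitive Int fields are reported as non-nullable; String fields as nullable.
    schema.fields.foreach(f => println(s"${f.name}: ${f.dataType.simpleString}, nullable=${f.nullable}"))
  }
}
```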