For background on Kafka, see:

Kafka concepts: https://blog.51cto.com/mapengfei/1926063

Installing Kafka: https://blog.51cto.com/mapengfei/1926065

Consumer and producer demo: https://blog.51cto.com/mapengfei/1926068

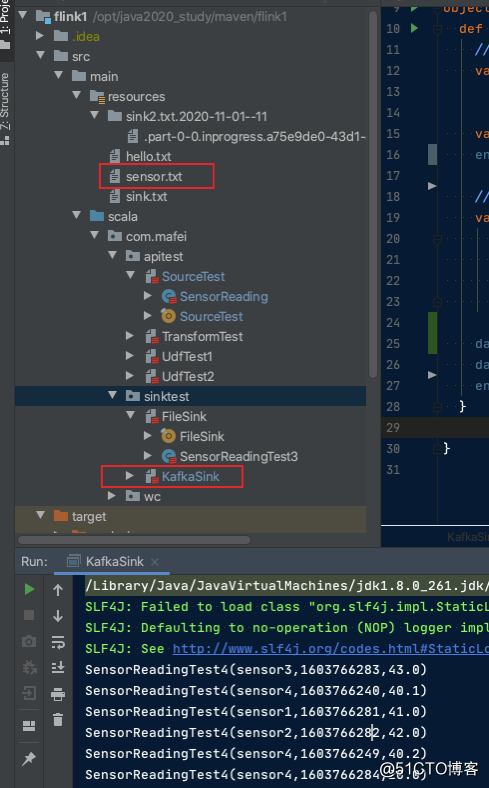

This Flink-to-Kafka sink example reads records from a txt file and writes them to Kafka.
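Judging by the consumer output shown further down, each line of the input file holds one comma-separated reading: id, timestamp, temperature. As a minimal sketch, assuming that format, the parsing step can be tried on its own without Flink or Kafka. `SensorLine`, `ParseSketch` and `parseLine` are hypothetical names introduced only for illustration; they are not part of the sample project.

```scala
import scala.util.Try

// Hypothetical stand-in for the SensorReadingTest4 case class used in the job.
case class SensorLine(id: String, timestamp: Long, temperature: Double)

object ParseSketch {
  // Parse "id,timestamp,temperature"; return None for malformed lines
  // (wrong number of fields, or fields that are not valid numbers).
  def parseLine(line: String): Option[SensorLine] =
    line.split(",").map(_.trim) match {
      case Array(id, ts, temp) =>
        Try(SensorLine(id, ts.toLong, temp.toDouble)).toOption
      case _ => None
    }

  def main(args: Array[String]): Unit = {
    // Assumed sample input: one well-formed line and one malformed line.
    val lines = Seq("sensor1,1603766281,41.0", "bad line")
    // Only the well-formed line survives flatMap and is printed.
    lines.flatMap(parseLine).map(_.toString).foreach(println)
  }
}
```

The job below performs the same split inline and does not guard against malformed lines, so a bad record in the file would fail the job; returning an `Option` is one possible way to skip such records instead.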

    package com.mafei.sinktest

    import org.apache.flink.api.common.serialization.SimpleStringSchema
    import org.apache.flink.streaming.api.scala.{StreamExecutionEnvironment, createTypeInformation}
    import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer011

    case class SensorReadingTest4(id: String, timestamp: Long, temperature: Double)

    object KafkaSink {
      def main(args: Array[String]): Unit = {
        // Create the execution environment
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val inputStream = env.readTextFile("/opt/java2020_study/maven/flink1/src/main/resources/sensor.txt")
        env.setParallelism(1)

        // First convert each line to the case class type
        val dataStream = inputStream
          .map(data => {
            val arr = data.split(",") // Split the line on commas
            // Build a sensor reading; toLong and toDouble are needed because split returns strings.
            // toString turns the reading back into a String so it matches SimpleStringSchema.
            SensorReadingTest4(arr(0), arr(1).toLong, arr(2).toDouble).toString
          })

        // Write each string to the "sensor" topic on the local broker
        dataStream.addSink(new FlinkKafkaProducer011[String]("localhost:9092", "sensor", new SimpleStringSchema()))
        dataStream.print()

        env.execute()
      }
    }

You can start a console consumer from the command line on the server to consume the data:

    [root@localhost ~]# sh /opt/kafka_2.11-0.10.2.0/bin/kafka-console-consumer.sh --bootstrap-server 127.0.0.1:9092 --topic sensor --consumer.config /opt/kafka_2.11-0.10.2.0/config/consumer.properties
    [2020-11-02 20:08:51,679] WARN The configuration 'zookeeper.connect' was supplied but isn't a known config. (org.apache.kafka.clients.consumer.ConsumerConfig)
    [2020-11-02 20:08:51,683] WARN The configuration 'zookeeper.connection.timeout.ms' was supplied but isn't a known config. (org.apache.kafka.clients.consumer.ConsumerConfig)
    SensorReadingTest4(sensor3,1603766283,43.0)
    SensorReadingTest4(sensor4,1603766240,40.1)
    SensorReadingTest4(sensor1,1603766281,41.0)
    SensorReadingTest4(sensor2,1603766282,42.0)
    SensorReadingTest4(sensor4,1603766249,40.2)
    SensorReadingTest4(sensor4,1603766284,20.0)

Code structure