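I recently looked into Kubernetes (K8s) resource quotas and went through a lot of material. Here is a summary:

By default, pods run with no memory or CPU limits, so any pod can consume as much CPU and memory as it wants, which leads to uneven resource allocation.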

Resource quotas fall into three categories:

1) Compute resource quotas

2) Object count quotas

3) Storage resource quotas

Verification steps:
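To make the three categories concrete, the sketch below writes a single ResourceQuota manifest with a few keys from each category. The file name `quota-categories.yaml`, the quota name `quota-categories-demo`, and all of the values are assumptions chosen for illustration. Only the resource key names are standard Kubernetes quota keys.

```shell
# Illustrative only: file name, quota name, and values are assumptions.
cat << 'EOF' > quota-categories.yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: quota-categories-demo
  namespace: quota-example003
spec:
  hard:
    # 1) Compute resources: totals across all pods in the namespace
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi
    # 2) Object counts: how many objects of each kind may exist
    pods: "10"
    services: "5"
    configmaps: "20"
    # 3) Storage resources: total requested storage and number of claims
    requests.storage: 10Gi
    persistentvolumeclaims: "5"
EOF
```

A file like this could be applied with `kubectl apply -f quota-categories.yaml`. One quota object may mix keys from all three categories, as here, or each category may live in its own object.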

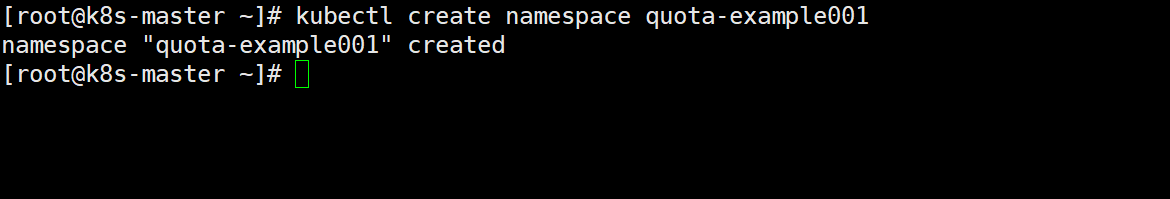

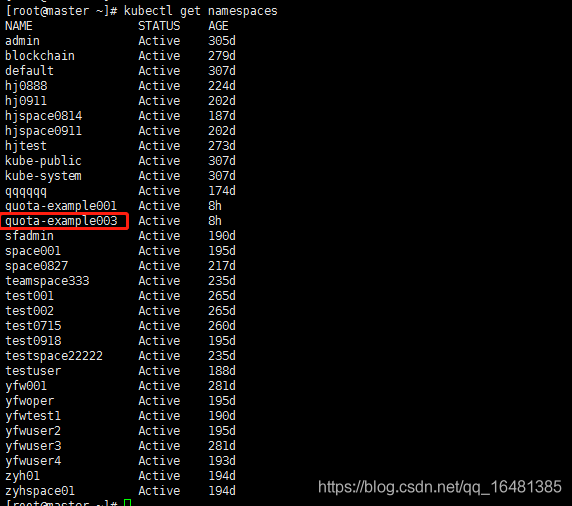

1. Create the namespace

kubectl create namespace quota-example003

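2. Define the quota for the namespace (compute resources such as memory and CPU, plus object counts)

If resourcequota.yaml was already applied in an earlier run, delete the old quota first:

kubectl delete -f resourcequota.yaml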

cat << EOF > resourcequota.yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  namespace: quota-example003
  name: quota-example003
  labels:
    project: admin
    app: resourcequota
    version: v1
spec:
  hard:
    pods: 5
    requests.cpu: 0.5
    requests.memory: 128Mi
    limits.cpu: 5
    limits.memory: 1Gi
    configmaps: 20
    persistentvolumeclaims: 20
    replicationcontrollers: 20
    secrets: 20
    services: 5
EOF

3. Apply the quota to the namespace

kubectl create -f resourcequota.yaml

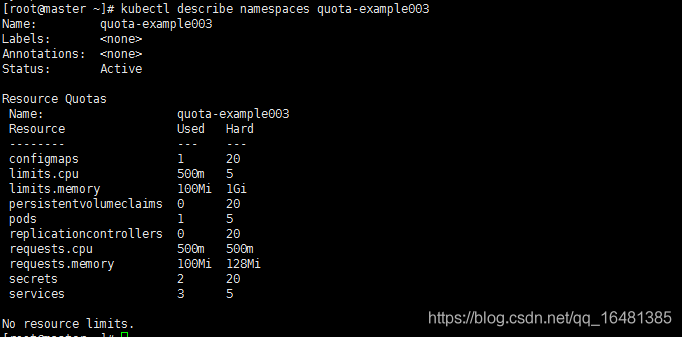
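To confirm that the quota was created, its hard limits and current usage can be inspected with the standard `kubectl describe resourcequota` command. The sketch below wraps that command in a small helper. The function name `show_quota` is hypothetical, and a working `kubectl` context pointing at the cluster is assumed.

```shell
# show_quota NAMESPACE
# Prints the hard limits and current usage of every ResourceQuota in the
# given namespace. The helper name is illustrative, not part of kubectl.
show_quota() {
  ns="$1"
  if [ -z "$ns" ]; then
    echo "usage: show_quota NAMESPACE" >&2
    return 2
  fi
  kubectl describe resourcequota --namespace "$ns"
}
```

For this walkthrough the call would be `show_quota quota-example003`. The output lists each resource with a "Used" and a "Hard" column, which makes it easy to see how much of the quota remains.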

4. View the file contents:

cat resourcequota.yaml

apiVersion: v1
kind: ResourceQuota
metadata:
  namespace: quota-example003
  name: quota-example003
  labels:
    project: admin
    app: resourcequota
    version: v1
spec:
  hard:
    pods: 5
    requests.cpu: 0.5
    requests.memory: 128Mi
    limits.cpu: 5
    limits.memory: 1Gi
    configmaps: 20
    persistentvolumeclaims: 20
    replicationcontrollers: 20
    secrets: 20
    services: 5

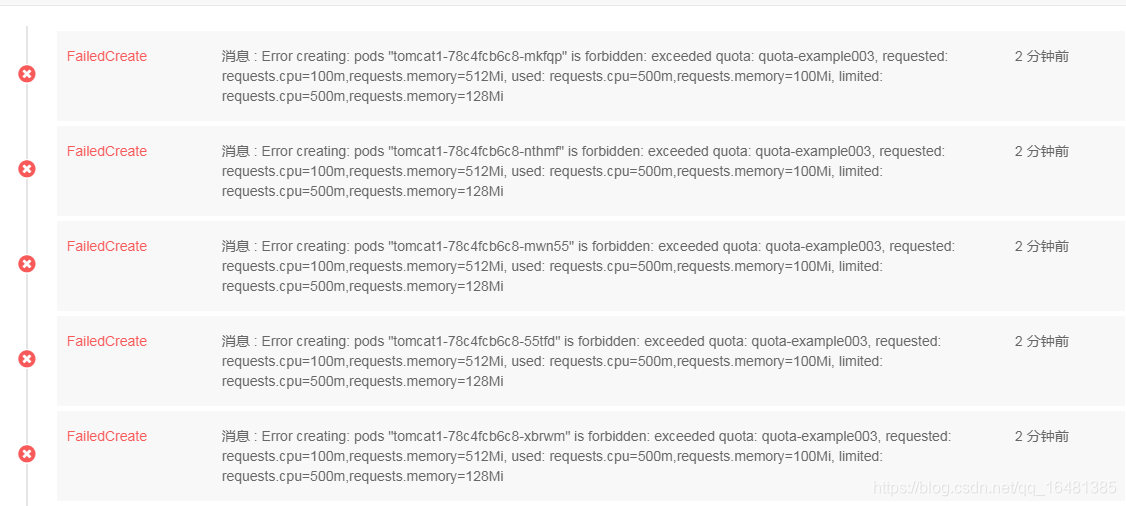

5. Deploy Tomcat with its memory setting above 128Mi and check the pod startup information

The resource quota has taken effect.
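The Tomcat manifest used for this last step is not shown, so the following is only a minimal sketch of what such a test could look like. It assumes that "above 128Mi" refers to the container's memory request. The file name `tomcat-deployment.yaml`, the Deployment name, the labels, the image tag `tomcat:9.0`, and all resource values are assumptions.

```shell
# Illustrative only: names, image tag, and resource values are assumptions.
cat << 'EOF' > tomcat-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat
  namespace: quota-example003
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      containers:
      - name: tomcat
        image: tomcat:9.0
        resources:
          requests:
            cpu: 100m
            memory: 256Mi   # deliberately above the 128Mi requests.memory quota
          limits:
            cpu: 500m
            memory: 512Mi
EOF
```

After `kubectl apply -f tomcat-deployment.yaml`, the Deployment object itself should be created, but its pod should not start. The rejection usually shows up as an "exceeded quota" message in `kubectl get events -n quota-example003` or in `kubectl describe replicaset -n quota-example003`. Because the quota tracks CPU and memory requests and limits, each container in the namespace has to declare all four values, otherwise pod creation is refused for that reason instead.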