1、首选创建项目,创建方法请看:https://blog.csdn.net/sunxiaoju/article/details/101229620

2、添加远程同步目录(或者叫远程映射),首选选择Tools->Deployment->Configuration...,如下图所示:

3、然后选择+号,选择SFTP,如下图所示:

4、输入名称,如下图所示:

5、输入要远程执行spark的host,用户名和密码,如下图所示:

6、输入之后进行测试SFTP是否成功,如果不成功请检查远程主机是否支持SFTP,如下图所示:

7、然后输入远程目录,如下图所示:

8、选择Mappings选项,配置本地目录和远程的二级目录,如下图所示:

9、配置远程目录时,可以选择后边的文件夹图标,可以列出二级目录,如下图所示:

10、如果没有要选择的目录,可以新建一个,然后在选择,如下图所示:

11、选择之后就会自动填到路径中,如下图所示:

12、开始配置编译好之后自动上传至远程服务器上,选择Tools->Deployment->Options...如下图所示:

13、在Upload changed files automatiically to the default server中选择Always,如下图所示:

14、此时在选择Tools->Deployment就会看到Automatic Upload(always)打上了对勾,并且可以选择Browse Remote Host浏览全程目录,如下图所示:

15、在Browse Remote Host就可以看到远程目录了,如下图所示:

16、修改代码,代码如下:

-

package com.spark -

import org.apache.spark.{SparkConf, SparkContext} -

object WordCount { -

def main(args: Array[String]) { -

val conf = new SparkConf().setAppName("WorkCount") -

.setMaster("spark://master:7077") -

val sc = new SparkContext(conf) -

//sc.addJar("E:\\sunxj\\idea\\spark\\out\\artifacts\\spark_jar\\spark.jar") -

//val line = sc.textFile(args(0)) -

val file=sc.textFile("hdfs://master:9000/user_data/worldcount.txt") -

val rdd = file.flatMap(line => line.split(" ")).map(word => (word,1)).reduceByKey(_+_) -

rdd.collect() -

rdd.foreach(println) -

rdd.collectAsMap().foreach(println) -

} -

}

如下图所示:

17、pom.xml文件内容如下:

-

<?xml version="1.0" encoding="UTF-8"?> -

<project xmlns="http://maven.apache.org/POM/4.0.0" -

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" -

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd"> -

<modelVersion>4.0.0</modelVersion> -

<groupId>sparktest</groupId> -

<artifactId>spark</artifactId> -

<version>1.0-SNAPSHOT</version> -

<dependencies> -

<dependency> -

<groupId>org.apache.spark</groupId> -

<artifactId>spark-core_2.10</artifactId> -

<version>1.6.3</version> -

</dependency> -

<dependency> -

<groupId>org.apache.hadoop</groupId> -

<artifactId>hadoop-client</artifactId> -

<version>2.6.5</version> -

</dependency> -

</dependencies> -

<build> -

<plugins> -

<plugin> -

<groupId>org.scala-tools</groupId> -

<artifactId>maven-scala-plugin</artifactId> -

<version>2.15.2</version> -

<executions> -

<execution> -

<goals> -

<goal>compile</goal> -

<goal>testCompile</goal> -

</goals> -

</execution> -

</executions> -

</plugin> -

<plugin> -

<groupId>org.apache.maven.plugins</groupId> -

<artifactId>maven-assembly-plugin</artifactId> -

<version>3.1.1</version> -

<configuration> -

<archive> -

<manifest> -

<mainClass>com.spark.WordCount</mainClass> -

</manifest> -

</archive> -

<descriptorRefs> -

<descriptorRef>jar-with-dependencies</descriptorRef> -

</descriptorRefs> -

</configuration> -

<executions> -

<execution> -

<id>make-assembly</id> -

<phase>package</phase> -

<goals> -

<goal>single</goal> -

</goals> -

</execution> -

</executions> -

</plugin> -

</plugins> -

</build> -

</project>

注意:如果没有以下内容会出现无法将scala文件打包到jar的

-

<plugin> -

<groupId>org.scala-tools</groupId> -

<artifactId>maven-scala-plugin</artifactId> -

<version>2.15.2</version> -

<executions> -

<execution> -

<goals> -

<goal>compile</goal> -

<goal>testCompile</goal> -

</goals> -

</execution> -

</executions> -

</plugin>

在执行时有可能会出现

Listening for transport dt_socket at address: 8888

java.lang.ClassNotFoundException: WordCount

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:348)

at org.apache.spark.util.Utils$.classForName(Utils.scala:175)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:689)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:181)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:206)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:121)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

如下图所示:

18、然后执行mvn package命令进行打包,如下图所示:

19、此时会在项目目录中生成一个target目录,如下图所示:

20、然后查看远程目录中是否有该目录,如下图所示:

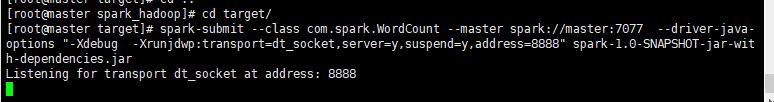

21、然后在远程进入到该目录执行:

spark-submit --class com.spark.WordCount --master spark://master:7077 --driver-java-options "-Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=y,address=8888" spark-1.0-SNAPSHOT-jar-with-dependencies.jar

命令解释:

-Xdebug 启用调试特性

-Xrunjdwp 启用JDWP实现,包含若干子选项:

transport=dt_socket JPDA front-end和back-end之间的传输方法。dt_socket表示使用套接字传输。

address=8888 JVM在8888端口上监听请求,这个设定为一个不冲突的端口即可。

server=y y表示启动的JVM是被调试者。如果为n,则表示启动的JVM是调试器。

suspend=y y表示启动的JVM会暂停等待,直到调试器连接上才继续执行。suspend=n,则JVM不会暂停等待。

如下图所示:

22、然后在idea中配置远程调试,首先选择WordCount,然后选择Edit Configurations,如下图所示::

23、然后点击+号,选择Remote,如下图所示:

24、输入名称,然后输入远程的主机名和端口,刚才spark-submit启动监听的端口是8888,因此这里也要设置8888,也可以换做其他端口,如下图所示:

25、然后启动调试,如下图所示:

26、此时远程就出现了调试内容,如下图所示:

27、此时查看web页面就可以查看结果,如下图所示:

在调试过程中出现的问题:

1、

-

19/09/24 20:41:28 WARN scheduler.TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources -

19/09/24 20:41:43 WARN scheduler.TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources -

19/09/24 20:41:58 WARN scheduler.TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources -

19/09/24 20:42:13 WARN scheduler.TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources -

19/09/24 20:42:28 WARN scheduler.TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources -

19/09/24 20:42:43 WARN scheduler.TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources -

19/09/24 20:42:58 WARN scheduler.TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources -

19/09/24 20:43:13 WARN scheduler.TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources -

19/09/24 20:43:28 WARN scheduler.TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources -

^C19/09/24 20:43:30 INFO spark.SparkContext: Invoking stop() from shutdown hook -

19/09/24 20:43:30 INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/metrics/json,null}

重启spark集群即可。

2、使用maven打包scala的jar出现找不到主类,如:

-

java.lang.ClassNotFoundException: WordCount -

at java.net.URLClassLoader.findClass(URLClassLoader.java:382) -

at java.lang.ClassLoader.loadClass(ClassLoader.java:424) -

at java.lang.ClassLoader.loadClass(ClassLoader.java:357) -

at java.lang.Class.forName0(Native Method) -

at java.lang.Class.forName(Class.java:348) -

at org.apache.spark.util.Utils$.classForName(Utils.scala:175) -

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:689) -

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:181) -

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:206) -

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:121) -

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

这是由于没有加入scala插件,如下图所示:

参考了:https://note.youdao.com/ynoteshare1/index.html?id=2b9655588b6a22738081c80ec5cd094e&type=note