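Today management asked me to use Zabbix to monitor the error and alert logs of all our servers in real time.

Because there are many servers and the log volume is large, running a separate log-analysis script on each one to report errors would probably consume a lot of resources. I therefore used our existing ELK deployment to collect the error logs and report them. After searching online I found material on deploying Zabbix together with ELK, and after more than ten hours of trial and testing, error and alert logs are now monitored successfully. I hit many pitfalls along the way, so I have written up the whole process in this post for others to reference.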

Install the JDK:

// tar xf jdk-8u131-linux-x64.tar.gz -C /usr/local/

// mv /usr/local/jdk1.8.0_131/ /usr/local/jdk1.8.0

Register the Java commands with alternatives:

// alternatives --install /usr/bin/java java /usr/local/jdk1.8.0/jre/bin/java 3000

// alternatives --install /usr/bin/jar jar /usr/local/jdk1.8.0/bin/jar 3000

// alternatives --install /usr/bin/javac javac /usr/local/jdk1.8.0/bin/javac 3000

// alternatives --install /usr/bin/javaws javaws /usr/local/jdk1.8.0/jre/bin/javaws 3000

// alternatives --set java /usr/local/jdk1.8.0/jre/bin/java

// alternatives --set jar /usr/local/jdk1.8.0/bin/jar

// alternatives --set javac /usr/local/jdk1.8.0/bin/javac

// alternatives --set javaws /usr/local/jdk1.8.0/jre/bin/javaws

Check the Java version:

// java -version

java version "1.8.0_131"

Java(TM) SE Runtime Environment (build 1.8.0_131-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.131-b11, mixed mode)

Install Logstash:

Download the archive from the official site, then unpack and install it: https://www.elastic.co/cn/downloads/logstash

// unzip logstash-7.9.2.zip

// mv logstash-7.9.2 /usr/local/logstash

Install the logstash-integration-jdbc, logstash-output-zabbix, and logstash-input-beats-master plugins:

// /usr/local/logstash/bin/logstash-plugin install logstash-integration-jdbc

Validating logstash-integration-jdbc

Installing logstash-integration-jdbc

Installation successful

// /usr/local/logstash/bin/logstash-plugin install logstash-output-zabbix

Validating logstash-output-zabbix

Installing logstash-output-zabbix

Installation successful

// wget https://github.com/logstash-plugins/logstash-input-beats/archive/master.zip -O /opt/master.zip

// unzip -d /usr/local/logstash /opt/master.zip

Install Elasticsearch:

// yum install elasticsearch-6.6.2.rpm

Edit the main configuration file:

// vim /etc/elasticsearch/elasticsearch.yml

cluster.name: my-application # line 17

node.name: node-1 # line 23

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

network.host: 192.168.191.130 # line 55

http.port: 9200 # line 59

Start the Elasticsearch service:

// systemctl enable elasticsearch

// systemctl start elasticsearch

Verify the service:

// netstat -lptnu|grep java

tcp6 0 0 192.168.191.130:9200 :::* LISTEN 14671/java

tcp6 0 0 192.168.191.130:9300 :::* LISTEN 14671/java

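The approach: Logstash reads the system log files, picks out the entries that contain error keywords, and sends them to Zabbix, where Zabbix's alerting mechanism fires the alert. In short, Logstash pulls and filters the logs and outputs the matches to Zabbix.

Add a configuration file (this one is for testing only):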
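The keyword-filtering idea can be illustrated outside Logstash with a few lines of Python. This is only a minimal sketch: the keyword list and the sample log lines are invented for the example, and the real filtering in this setup happens in Logstash and in the Zabbix trigger.

```python
import re

# Hypothetical keyword list; adjust it to the messages you want to alert on.
ALERT_PATTERN = re.compile(r"error|fail|denied|invalid", re.IGNORECASE)


def filter_alerts(lines):
    """Return only the lines that contain one of the alert keywords."""
    return [line for line in lines if ALERT_PATTERN.search(line)]


# Made-up lines in the style of /var/log/secure.
sample = [
    "Oct  8 20:41:01 host sshd[1001]: Accepted password for root from 10.0.0.5",
    "Oct  8 20:41:09 host sshd[1002]: Failed password for root from 10.0.0.9",
    "Oct  8 20:41:15 host sshd[1003]: Invalid user admin from 10.0.0.9",
]

alerts = filter_alerts(sample)
for line in alerts:
    print(line)
```

Only the matching lines would be worth forwarding to Zabbix, which keeps the volume sent to the monitoring server small.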

// vim /usr/local/logstash/config/from_beat.conf

input {

beats {

port => 5044

}

}

filter {

# Filter access logs

if ( [source] =~ "localhost_access_log" ) {

grok {

match => {

message => [ "%{COMMONAPACHELOG}" ]

}

}

date {

match => [ "request_time", "ISO8601" ]

locale => "cn"

target => "request_time"

}

}

else if ( [source] =~ "catalina" ) {

# Filter Tomcat (catalina) logs: use a regular expression to extract the fields

grok {

match => {

message => [ "(?<webapp_name>\[\w+\])\s+(?<request_time>\d{4}\-\d{2}\-\d{2}\s+\w{2}\:\w{2}\:\w{2}\,\w{3})\s+(?<log_level>\w+)\s+(?<class_package>[^.^\s]+(?:\.[^.\s]+)+)\.(?<class_name>[^\s]+)\s+(?<message_content>.+)" ]

}

}

# Parse the request time

date {

match => [ "request_time", "ISO8601" ]

locale => "cn"

target => "request_time"

}

}

else {

drop {

}

}

}

output {

if ( [source] =~ "localhost_access_log" ) {

elasticsearch {

hosts => ["192.168.132.129:9200"]

index => "access_log"

}

}

else {

elasticsearch {

hosts => ["192.168.132.129:9200"]

index => "tomcat_log"

}

}

stdout {

codec => rubydebug }

}
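Before starting Logstash, it can help to try the catalina regular expression against a sample line. The sketch below ports the pattern to Python, which writes named groups as `(?P<name>...)`. The sample log line is hypothetical, and Python's `re` is not the engine grok uses, so treat the result as a rough check rather than proof that grok behaves identically.

```python
import re
from datetime import datetime

# Same structure as the grok pattern above, with Python-style named groups.
CATALINA_PATTERN = re.compile(
    r"(?P<webapp_name>\[\w+\])\s+"
    r"(?P<request_time>\d{4}-\d{2}-\d{2}\s+\w{2}:\w{2}:\w{2},\w{3})\s+"
    r"(?P<log_level>\w+)\s+"
    r"(?P<class_package>[^.^\s]+(?:\.[^.\s]+)+)\.(?P<class_name>[^\s]+)\s+"
    r"(?P<message_content>.+)"
)

# Hypothetical log line, made up for this example.
SAMPLE = (
    "[myapp] 2020-10-08 20:37:47,334 ERROR "
    "com.example.service.UserService Failed to load user 42"
)


def parse_catalina(line):
    """Return the captured fields as a dict, or None if the line does not match."""
    match = CATALINA_PATTERN.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    # %f accepts the three millisecond digits that follow the comma.
    fields["request_time"] = datetime.strptime(
        fields["request_time"], "%Y-%m-%d %H:%M:%S,%f"
    )
    return fields


fields = parse_catalina(SAMPLE)
print(fields)
```

If a real line from your catalina log returns `None` here, the grok filter will most likely tag the event with a parse failure as well.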

Start Logstash in the foreground to check whether it receives the log data sent by Filebeat:

// /usr/local/logstash/bin/logstash -f /usr/local/logstash/config/from_beat.conf

On a successful start, the following log output appears:

Sending Logstash logs to /usr/local/logstash/logs which is now configured via log4j2.properties

[2020-10-08T20:37:47,334][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"7.9.2", "jruby.version"=>"jruby 9.2.13.0 (2.5.7) 2020-08-03 9a89c94bcc Java HotSpot(TM) 64-Bit Server VM 25.131-b11 on 1.8.0_131-b11 +indy +jit [linux-x86_64]"}

[2020-10-08T20:37:47,923][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2020-10-08T20:37:50,204][INFO ][org.reflections.Reflections] Reflections took 42 ms to scan 1 urls, producing 22 keys and 45 values

[2020-10-08T20:37:51,436][INFO ][logstash.javapipeline ][main] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>250, "pipeline.sources"=>["/etc/logstash/conf.d/zabbix.conf"], :thread=>"#<Thread:0x2ef7b133 run>"}

[2020-10-08T20:37:52,520][INFO ][logstash.javapipeline ][main] Pipeline Java execution initialization time {"seconds"=>1.06}

[2020-10-08T20:37:52,766][INFO ][logstash.inputs.file ][main] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/usr/local/logstash/data/plugins/inputs/file/.sincedb_730aea1d074d4636ec2eacfacc10f882", :path=>["/var/log/secure"]}

[2020-10-08T20:37:52,830][INFO ][logstash.javapipeline ][main] Pipeline started {"pipeline.id"=>"main"}

[2020-10-08T20:37:52,921][INFO ][filewatch.observingtail ][main][5ffcc74b3b6be0e4daa892ae39a07dc20fdbc1d05bd5cedc4b4290930274f61e] START, creating Discoverer, Watch with file and sincedb collections

[2020-10-08T20:37:52,963][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2020-10-08T20:37:53,369][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

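If no errors appear after startup, wait for Logstash to finish initializing. This can take quite a while.

Pitfalls I ran into

Errors that appeared in the log: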

[2020-10-08T09:06:42,311][WARN ][logstash.outputs.zabbix ][main][630c433ba0be0739e8ee72ca91d03f00695f05873b64e12c7488b8f2c32a8e05] Zabbix server at 192.168.132.130 rejected all items sent. {:zabbix_host=>"192.168.132.129"}

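This means the Zabbix server rejected every item sent to it.

Solution:

Adjust the firewall rules to allow the IP of the Logstash host being tested. Do not turn the firewall off!

Also check the Zabbix address and the Logstash address in the configuration file. This warning can also appear when the server is reachable but the host name or key does not match a Zabbix trapper item, so those are worth checking too.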

[2020-10-08T20:41:45,154][WARN ][logstash.outputs.zabbix ][main][cf6b448e829beca8b4ffbd64e71c6e510108015eec5933f7b4675d79d5f09f03] Field referenced by 192.168.132.130 is missing

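A referenced field is missing:

Solution:

Append an entry to the secure log so that an event carrying a message field is sent. Note also that the zabbix_host and zabbix_key options of logstash-output-zabbix expect the name of an event field rather than a literal value, so a warning like the one above, which names an IP address as the field, suggests an IP was entered directly in one of those options.

Problems caused by missing plugins:

At one point Logstash could not load the configuration file and got stuck in an endless restart loop (for reference only).

Add a configuration file under /usr/local/logstash/config:

// vim /usr/local/logstash/config/file_to_zabbix.conf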

input {

file {

path => ["/var/log/secure"]

type => "system"

start_position => "beginning"

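add_field => [ "[zabbix_key]", "oslogs" ] # adds a new field named zabbix_key with the value oslogs

add_field => [ "[zabbix_host]", "192.168.132.129" ] # adds a new field named zabbix_host; its value is set directly here to the IP of the local machine the logs come from, and it must match the "Host name" configured in the Zabbix web UI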

}

}

output {

zabbix {

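zabbix_host => "[zabbix_host]" # name of the field holding the host; resolves to the IP address set in the input above

zabbix_key => "[zabbix_key]" # name of the field holding the item key; resolves to oslogs set in the input above

zabbix_server_host => "192.168.132.130" # IP address of the Zabbix server (the monitoring side)

zabbix_server_port => "10051" # port the Zabbix server listens on

zabbix_value => "message" # important: the field whose value is sent to the Zabbix item (oslogs); zabbix_value defaults to the "message" field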

}

# Uncomment the next line for the first test run; disable it again once everything works

# stdout { codec => rubydebug }

}

// /usr/local/logstash/bin/logstash -f /usr/local/logstash/config/file_to_zabbix.conf &>/dev/null &

Appending &>/dev/null & runs Logstash in the background and discards its output.
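When Logstash reports that items were rejected, it can be useful to send one test value to the Zabbix server without Logstash in the way. The sketch below builds a packet in the Zabbix sender protocol (the `ZBXD` header, a flags byte, an 8-byte little-endian length, then a JSON body) and reads the reply. The function names are made up for this example, and the addresses, host name, and key in the commented usage line are simply the ones used in this post.

```python
import json
import socket
import struct

HEADER = b"ZBXD\x01"  # protocol signature plus the flags byte


def build_packet(host, key, value):
    """Wrap one item value in a 'sender data' request."""
    body = json.dumps(
        {"request": "sender data",
         "data": [{"host": host, "key": key, "value": value}]}
    ).encode("utf-8")
    return HEADER + struct.pack("<Q", len(body)) + body


def parse_response(raw):
    """Decode the JSON body that follows the 13-byte header."""
    length = struct.unpack("<Q", raw[5:13])[0]
    return json.loads(raw[13:13 + length].decode("utf-8"))


def send_value(server, port, host, key, value, timeout=5.0):
    """Send one value to a Zabbix trapper item and return the server's reply."""
    with socket.create_connection((server, port), timeout=timeout) as sock:
        sock.sendall(build_packet(host, key, value))
        chunks = []
        while True:
            chunk = sock.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
    return parse_response(b"".join(chunks))


# Example call (needs a reachable Zabbix server, so it is left commented out):
# print(send_value("192.168.132.130", 10051, "192.168.132.129", "oslogs", "test message"))
```

The `info` string in the reply lists processed and failed counts. A non-zero failed count with a working connection usually points at a host name, key, or item type mismatch rather than at the firewall.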

Install zabbix-agent on the local machine:

// yum install zabbix-agent

Edit the zabbix-agent configuration file:

// vim /etc/zabbix/zabbix_agentd.conf

Server=192.168.132.130 # line 98: IP of the Zabbix server

ServerActive=192.168.132.130 # line 139: IP of the Zabbix server

Create the required template in the Zabbix web interface:

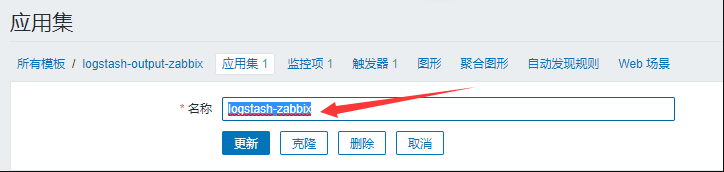

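Create an application under the template.

Create an item: the item type must be Zabbix trapper, and the key must match the key set in the Logstash configuration file (oslogs here).

Create a trigger.

Link the finished template to the client, that is, the host 192.168.132.129 whose log data is being monitored. An alert is raised when abnormal log entries are found.

Under Monitoring > Latest data we can see the client's most recent log entries.

Open the history to see the full log content.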