1. Overview

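CDH stands for Cloudera’s Distribution, including Apache Hadoop. It is one of the many Hadoop distributions, maintained by Cloudera and built on stable releases of Apache Hadoop. It provides the Hadoop core (scalable storage and distributed computing) and, most importantly, a web-based user interface.

Advantages of CDH: clear versioning, fast updates, support for Kerberos authentication, and several installation methods (such as Yum and rpm).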

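2. Deployment Planning

A CDH deployment consists of the Cloudera Manager management platform and the CDH parcel (the parcel bundles the installation packages for the individual components).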

2.1 Cloudera Manager

Cloudera Manager URL: https://archive.cloudera.com/cm6/6.3.1/redhat7/yum/RPMS/x86_64/

ASC file: http://archive.cloudera.com/cm6/6.3.1/allkeys.asc

Save the downloaded files in the “cloudera-repos” directory.

2.2 CDH parcel

CDH parcel URL: https://archive.cloudera.com/cdh6/6.3.2/parcels/

2.3 Software Directory

├── cdh

│ ├── allkeys.asc

│ ├── cloudera-manager-agent-6.3.1-1466458.el7.x86_64.rpm

│ ├── cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm

│ ├── cloudera-manager-server-6.3.1-1466458.el7.x86_64.rpm

│ ├── cloudera-manager-server-db-2-6.3.1-1466458.el7.x86_64.rpm

│ ├── enterprise-debuginfo-6.3.1-1466458.el7.x86_64.rpm

│ └── oracle-j2sdk1.8-1.8.0+update181-1.x86_64.rpm

├── mysql

│ ├── mysql-5.7.32-el7-x86_64.tar

│ └── mysql-connector-java-5.7.32-1.el7.noarch.rpm

└── parcel

    ├── CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel

    ├── CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel.sha1

    ├── CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel.sha256

    └── manifest.json

3 directories, 13 files

3. Environment Configuration

3.1 Configure Host Mappings

cat>>/etc/hosts <<EOF

192.168.137.129 db01

192.168.137.130 db02

192.168.137.131 db03

EOF
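
Because `>>` appends unconditionally, running the snippet above a second time leaves duplicate entries in /etc/hosts. A minimal sketch of an idempotent variant follows; the function name `add_host_entry` is an illustrative assumption, not part of the original procedure.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not mapped yet.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # Look for NAME as a whole word on any non-comment line.
    if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage (as root, on every node):
# add_host_entry /etc/hosts 192.168.137.129 db01
# add_host_entry /etc/hosts 192.168.137.130 db02
# add_host_entry /etc/hosts 192.168.137.131 db03
```

The check is a plain pattern match, so it suits simple host names such as db01 and would need escaping for names that contain regex metacharacters.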

3.2 Disable the Firewall and SELinux

systemctl stop firewalld

systemctl disable firewalld

setenforce 0 && sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
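
The sed expression above only matches `SELINUX=enforcing`, so a host that is currently set to `permissive` keeps that value after a reboot. A small sketch that rewrites the setting whatever its current value is shown below; `set_selinux_disabled` is a hypothetical name and GNU sed is assumed.

```shell
# Hypothetical helper: force SELINUX=disabled regardless of the current value.
set_selinux_disabled() {
    local conf="$1"
    # Anchored on "SELINUX=" so the SELINUXTYPE= line is left alone.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
    # Print the resulting line so the change can be checked by eye.
    grep '^SELINUX=' "$conf"
}

# Usage (as root):
# set_selinux_disabled /etc/selinux/config
```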

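3.3 Configure SSH Mutual Trust

# Run on every node

ssh-keygen -t rsa # press Enter at every prompt

# Append the public key to the authorized_keys file

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

# Set the permissions on authorized_keys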

chmod 600 ~/.ssh/authorized_keys

# Run on one node only

ssh db02 cat ~/.ssh/id_rsa.pub >>~/.ssh/authorized_keys

ssh db03 cat ~/.ssh/id_rsa.pub >>~/.ssh/authorized_keys

# Distribute the merged file to the other nodes

scp ~/.ssh/authorized_keys db02:~/.ssh/

scp ~/.ssh/authorized_keys db03:~/.ssh/

# Run on every node to verify

ssh db01 date

ssh db02 date

ssh db03 date
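
The three `ssh … date` checks above will stop and ask for a password if trust is not set up, which is easy to miss in a script. The sketch below runs the same check non-interactively; `check_ssh_trust` and the `SSH_BIN` override are assumptions added for illustration, while `BatchMode` and `ConnectTimeout` are standard OpenSSH options.

```shell
# Hypothetical helper: report which hosts accept key-based login without prompting.
# SSH_BIN is an assumption that lets the ssh command be swapped out for a dry run.
check_ssh_trust() {
    local host failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of asking for a password.
        if "${SSH_BIN:-ssh}" -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    return "$failed"
}

# Usage (on every node):
# check_ssh_trust db01 db02 db03
```

The function returns non-zero if any host fails, so it can gate the later installation steps.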

3.4 Create the User

groupadd hadoop

useradd hadoop -g hadoop

id hadoop

3.5 Install the JDK

[root@db01 cdh]# rpm -ivh oracle-j2sdk1.8-1.8.0+update181-1.x86_64.rpm

warning: oracle-j2sdk1.8-1.8.0+update181-1.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID b0b19c9f: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:oracle-j2sdk1.8-1.8.0+update181-1################################# [100%]

cat>>/etc/profile <<'EOF'

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

export PATH=$PATH:$JAVA_HOME/bin:$JAVA_HOME/jre/bin

export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

EOF

source /etc/profile
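
Whether the heredoc delimiter is quoted matters for this step: with a plain `EOF` the shell expands `$PATH` and `$JAVA_HOME` while writing the file, and `$JAVA_HOME` is still empty at that moment. The sketch below writes the same three lines with a quoted delimiter; the function name and the `/etc/profile.d/java.sh` target are assumptions, not part of the original procedure.

```shell
# Hypothetical helper: write the Java environment to a profile file.
# The quoted delimiter ('EOF') keeps $PATH and $JAVA_HOME literal, so they are
# expanded at login time rather than at the moment the file is written.
write_java_profile() {
    local target="$1"
    cat > "$target" <<'EOF'
export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera
export PATH=$PATH:$JAVA_HOME/bin:$JAVA_HOME/jre/bin
export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib
EOF
}

# Usage (as root); /etc/profile.d/java.sh is an assumed location:
# write_java_profile /etc/profile.d/java.sh
```

Writing to a separate file with `>` also makes the step safe to repeat, since the file is replaced rather than appended to.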

3.6 Configure NTP

Set the time zone

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

Install ntp

yum install ntp -y

- Manager node

Edit /etc/ntp.conf

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server ntp.aliyun.com

- Worker nodes

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server db01

Start ntp

systemctl enable ntpd.service

systemctl restart ntpd.service

ntpdc -c loopinfo # show the offset from the time server

ntpq -p # show the time servers currently in use

ntpstat # show the synchronization status
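
The manual edit of /etc/ntp.conf described in this section (comment out the default pool servers, add one upstream) can be scripted. This is a sketch under stated assumptions: `set_ntp_server` is a hypothetical name, GNU sed is assumed, and the upstream on the worker nodes is taken to be the manager node db01.

```shell
# Hypothetical helper: comment out every active "server" line and add one upstream.
set_ntp_server() {
    local conf="$1" upstream="$2"
    # Prefix active server lines with '#'; already-commented lines are untouched.
    sed -i -E 's/^[[:space:]]*server[[:space:]]/#&/' "$conf"
    printf 'server %s\n' "$upstream" >> "$conf"
}

# Usage (as root):
# set_ntp_server /etc/ntp.conf ntp.aliyun.com   # manager node
# set_ntp_server /etc/ntp.conf db01             # worker nodes (assumed manager host)
```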

3.7 Install the MySQL Driver

The driver version must be later than 5.1.26; otherwise installing Hive fails with the error: org.apache.hadoop.hive.metastore.HiveMetaException: Failed to retrieve schema tables from Hive Metastore DB,Not supported

mv ~/mysql-connector-java-5.1.49/mysql-connector-java-5.1.49.jar /usr/share/java/mysql-connector-java.jar
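
The version requirement can be checked before the jar is moved into place. The sketch below compares dotted version strings with GNU `sort -V`; `version_gt` is a hypothetical helper, and reading the version from the jar file name is an assumption about how the file is named.

```shell
# Hypothetical helper: succeed when version $1 is strictly newer than version $2.
version_gt() {
    [ "$1" != "$2" ] && [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | tail -n 1)" = "$1" ]
}

# Usage: the version is taken from the jar file name.
# jar=mysql-connector-java-5.1.49.jar
# ver=${jar#mysql-connector-java-}; ver=${ver%.jar}
# version_gt "$ver" 5.1.26 && echo "driver $ver is new enough"
```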

4. Install MySQL

Note: MySQL 5.7 is required; 8.0 is not yet supported. GTID must stay disabled, because the create table as select … syntax is used.

4.1 Create the MySQL Group and User

groupadd mysql

useradd -g mysql mysql -s /sbin/nologin

id mysql

4.2 Extract the Software and Set Up Directories

tar xf mysql-5.7.32-el7-x86_64.tar

tar xf mysql-5.7.32-el7-x86_64.tar.gz -C /usr/local

tar xf mysql-test-5.7.32-el7-x86_64.tar.gz -C /usr/local

ln -s /usr/local/mysql-5.7.32-el7-x86_64/ /usr/local/mysql

mkdir -pv /data/mysql/{data,logs,tmp,binlog}

chown -R mysql.mysql /data/mysql/

chown -R mysql.mysql /usr/local/mysql/

4.3 Configure Environment Variables

cat>>/etc/profile <<'EOF'

export PATH=$PATH:/usr/local/mysql/bin

EOF

source /etc/profile

4.4 Edit the Configuration File

cat>>/etc/my.cnf <<EOF

[client]

port = 3306

socket = /data/mysql/data/mysql.sock

[mysql]

prompt="\u@mysql \R:\m:\s [\d]> "

no-auto-rehash

[mysqld]

federated

secure_file_priv=/tmp

user=mysql

port=3306

basedir=/usr/local/mysql

datadir=/data/mysql/data

tmpdir =/data/mysql/tmp

socket=/data/mysql/data/mysql.sock

pid-file=mysql.pid

character-set-server = utf8mb4

skip_name_resolve = 1

default_time_zone = "+8:00"

open_files_limit= 65535

back_log = 1024

max_connections = 256

max_user_connections = 64

max_connect_errors = 10000

autocommit = 1

table_open_cache = 1024

table_definition_cache = 1024

table_open_cache_instances = 64

thread_stack = 512K

external-locking = FALSE

max_allowed_packet = 32M

sort_buffer_size = 4M

join_buffer_size = 4M

innodb_sort_buffer_size = 64M

thread_cache_size = 384

interactive_timeout = 600

wait_timeout = 600

tmp_table_size = 32M

max_heap_table_size = 32M

slow_query_log = 1

log_timestamps = SYSTEM

slow_query_log_file = /data/mysql/logs/slow.log

log-error = /data/mysql/logs/error.log

long_query_time = 1

log_queries_not_using_indexes =1

log_throttle_queries_not_using_indexes = 60

min_examined_row_limit = 0

log_slow_admin_statements = 1

log_slow_slave_statements = 1

server-id = 330601

log-bin = /data/mysql/binlog/binlog

sync_binlog = 1

binlog_cache_size = 4M

max_binlog_cache_size = 2G

max_binlog_size = 1G

auto_increment_offset=1

auto_increment_increment=1

expire_logs_days = 7

master_info_repository = TABLE

relay_log_info_repository = TABLE

#gtid_mode = on

#enforce_gtid_consistency = 1

log_slave_updates

slave-rows-search-algorithms = 'INDEX_SCAN,HASH_SCAN'

binlog_format = row

relay_log = /data/mysql/logs/relaylog

relay_log_recovery = 1

relay-log-purge = 1

key_buffer_size = 32M

read_buffer_size = 8M

read_rnd_buffer_size = 4M

bulk_insert_buffer_size = 64M

myisam_sort_buffer_size = 128M

myisam_max_sort_file_size = 10G

myisam_repair_threads = 1

lock_wait_timeout = 3600

explicit_defaults_for_timestamp = 1

innodb_thread_concurrency = 0

innodb_sync_spin_loops = 100

innodb_spin_wait_delay = 30

transaction_isolation = READ-COMMITTED

innodb_buffer_pool_size = 1000M

innodb_buffer_pool_instances = 4

innodb_buffer_pool_load_at_startup = 1

innodb_buffer_pool_dump_at_shutdown = 1

innodb_data_file_path = ibdata1:500M:autoextend

innodb_temp_data_file_path=ibtmp1:500M:autoextend

innodb_flush_log_at_trx_commit = 1

innodb_log_buffer_size = 32M

innodb_log_file_size = 1G

innodb_log_files_in_group = 2

innodb_max_undo_log_size = 2G

innodb_undo_directory = /data/mysql/data

innodb_undo_tablespaces = 2

innodb_io_capacity = 4000

innodb_io_capacity_max = 8000

innodb_flush_sync = 0

innodb_flush_neighbors = 0

innodb_write_io_threads = 8

innodb_read_io_threads = 8

innodb_purge_threads = 4

innodb_page_cleaners = 4

innodb_open_files = 65535

innodb_max_dirty_pages_pct = 50

innodb_flush_method = O_DIRECT

innodb_lru_scan_depth = 4000

innodb_checksum_algorithm = crc32

innodb_lock_wait_timeout = 10

innodb_rollback_on_timeout = 1

innodb_print_all_deadlocks = 1

innodb_file_per_table = 1

innodb_online_alter_log_max_size = 4G

innodb_stats_on_metadata = 0

innodb_undo_log_truncate = 1

performance_schema = 1

performance_schema_instrument = '%memory%=on'

performance_schema_instrument = '%lock%=on'

innodb_monitor_enable="module_innodb"

innodb_monitor_enable="module_server"

innodb_monitor_enable="module_dml"

innodb_monitor_enable="module_ddl"

innodb_monitor_enable="module_trx"

innodb_monitor_enable="module_os"

innodb_monitor_enable="module_purge"

innodb_monitor_enable="module_log"

innodb_monitor_enable="module_lock"

innodb_monitor_enable="module_buffer"

innodb_monitor_enable="module_index"

innodb_monitor_enable="module_ibuf_system"

innodb_monitor_enable="module_buffer_page"

innodb_monitor_enable="module_adaptive_hash"

[mysqldump]

quick

max_allowed_packet = 32M

EOF

4.5 Initialize and Start the Database

mysqld --defaults-file=/etc/my.cnf --initialize-insecure

mysqld_safe --defaults-file=/etc/my.cnf &
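
Since mysqld_safe is started in the background, the following steps can run before the server accepts connections. One way to guard against that is to wait for the socket file configured in my.cnf to appear; the sketch below does this with a hypothetical `wait_for_path` helper and an assumed 60-second limit.

```shell
# Hypothetical helper: wait until a path (for example the MySQL socket) exists.
wait_for_path() {
    local path="$1" timeout="${2:-60}" waited=0
    while [ ! -e "$path" ]; do
        # Give up once the timeout (in seconds) has been reached.
        [ "$waited" -ge "$timeout" ] && return 1
        sleep 1
        waited=$((waited + 1))
    done
    return 0
}

# Usage: block until mysqld has created its socket, then connect.
# wait_for_path /data/mysql/data/mysql.sock 60 && mysql -e 'select version();'
```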

4.6 Create the Databases and Users

| Service | Database | User |
|---|---|---|
| Cloudera Manager Server | scm | scm |
| Activity Monitor | amon | amon |
| Reports Manager | rman | rman |
| Hue | hue | hue |
| Hive Metastore Server | metastore | hive |
| Sentry Server | sentry | sentry |
| Cloudera Navigator Audit Server | nav | nav |
| Cloudera Navigator Metadata Server | navms | navms |
| Oozie | oozie | oozie |

mysql

alter user root@'localhost' identified by '970125';

create database scm;

create database amon;

create database rman;

create database hue;

create database metastore;

create database sentry;

create database nav;

create database navms;

create database oozie;

create user scm@'%' identified by 'scm';

create user amon@'%' identified by 'amon';

create user rman@'%' identified by 'rman';

create user hue@'%' identified by 'hue';

create user hive@'%' identified by 'hive';

create user sentry@'%' identified by 'sentry';

create user nav@'%' identified by 'nav';

create user navms@'%' identified by 'navms';

create user oozie@'%' identified by 'oozie';

grant all on scm.* to scm@'%';

grant all on amon.* to amon@'%';

grant all on rman.* to rman@'%';

grant all on hue.* to hue@'%';

grant all on metastore.* to hive@'%';

grant all on sentry.* to sentry@'%';

grant all on nav.* to nav@'%';

grant all on navms.* to navms@'%';

grant all on oozie.* to oozie@'%';
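
The statements above repeat one pattern per row of the service table, so they can also be generated from a list of database:user pairs. This is only a sketch: `gen_cm_sql` is a hypothetical name, and, as in the statements above, each password equals the user name, which should be replaced with real passwords outside a lab setup.

```shell
# Hypothetical helper: print the SQL for every database:user pair from the table.
# As in the original statements, each password equals the user name.
gen_cm_sql() {
    local pair db user
    for pair in scm:scm amon:amon rman:rman hue:hue metastore:hive \
                sentry:sentry nav:nav navms:navms oozie:oozie; do
        db=${pair%%:*}      # text before the colon
        user=${pair##*:}    # text after the colon
        printf "create database %s;\n" "$db"
        printf "create user %s@'%%' identified by '%s';\n" "$user" "$user"
        printf "grant all on %s.* to %s@'%%';\n" "$db" "$user"
    done
}

# Usage: review the output, then pipe it into the client.
# gen_cm_sql | mysql -uroot -p
```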

5. Deploy cloudera-manager-agent

5.1 Install the Packages

yum localinstall -y cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm

yum localinstall -y cloudera-manager-agent-6.3.1-1466458.el7.x86_64.rpm

5.2 Edit the Configuration File

sed -i 's/server_host=localhost/server_host=db01/g' /etc/cloudera-scm-agent/config.ini

5.3 Start the Service

systemctl start cloudera-scm-agent

systemctl status cloudera-scm-agent

systemctl enable cloudera-scm-agent

6. Deploy Cloudera Manager

6.1 Install the httpd Service

yum install -y httpd createrepo

systemctl start httpd.service

systemctl enable httpd.service

6.2 Configure the Repository

mkdir -pv /var/www/html/cloudera-repos/

cp -ar /var/soft/cdh/* /var/www/html/cloudera-repos/

cd /var/www/html/cloudera-repos/

createrepo .

chmod -R a+rx /var/www/html/cloudera-repos/

6.3 Configure the yum Repository

cat>>/etc/yum.repos.d/cloudera-manager.repo <<EOF

[cloudera-manager]

name=Cloudera Manager 6.3.1

baseurl=http://db01/cloudera-repos/

gpgcheck=0

enabled=1

EOF

yum clean all

yum makecache

6.4 Install Cloudera Manager Server

yum localinstall -y cloudera-manager-server-6.3.1-1466458.el7.x86_64.rpm

cp /var/soft/parcel/* /opt/cloudera/parcel-repo/

cd /opt/cloudera/parcel-repo/

sha1sum CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel|awk '{ print $1 }' > CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel.sha

chown -R cloudera-scm:cloudera-scm /opt/cloudera/parcel-repo/*
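
Before the parcel is handed to Cloudera Manager, it can be checked against the `.sha256` file that was downloaded with it. The sketch below assumes that this file stores the hex digest as its first whitespace-separated field; `verify_sha256` is a hypothetical helper, not a Cloudera tool.

```shell
# Hypothetical helper: compare a file's SHA-256 digest with the one stored in a
# companion file. Assumes the digest is the first whitespace-separated field.
verify_sha256() {
    local file="$1" digest_file="$2" expected actual
    expected=$(awk 'NR == 1 { print $1 }' "$digest_file")
    actual=$(sha256sum "$file" | awk '{ print $1 }')
    # Fail on an empty digest file as well as on a mismatch.
    [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Usage:
# cd /opt/cloudera/parcel-repo/
# p=CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel
# verify_sha256 "$p" "$p.sha256" && echo "parcel OK"
```

A mismatch usually points to an incomplete download, in which case the parcel should be fetched again.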

6.5 Configure the Database

[root@db01 schema]# sh scm_prepare_database.sh mysql scm scm

Enter SCM password:

JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

Verifying that we can write to /etc/cloudera-scm-server

Creating SCM configuration file in /etc/cloudera-scm-server

Executing: /usr/java/jdk1.8.0_181-cloudera/bin/java -cp /usr/share/java/mysql-connector-java.jar:/usr/share/java/oracle-connector-java.jar:/usr/share/java/postgresql-connector-java.jar:/opt/cloudera/cm/schema/../lib/* com.cloudera.enterprise.dbutil.DbCommandExecutor /etc/cloudera-scm-server/db.properties com.cloudera.cmf.db.

[ main] DbCommandExecutor INFO Successfully connected to database.

All done, your SCM database is configured correctly!

6.6 Start the Service

systemctl start cloudera-scm-server

systemctl enable cloudera-scm-server

systemctl status cloudera-scm-server

tail -f /var/log/cloudera-scm-server/cloudera-scm-server.log

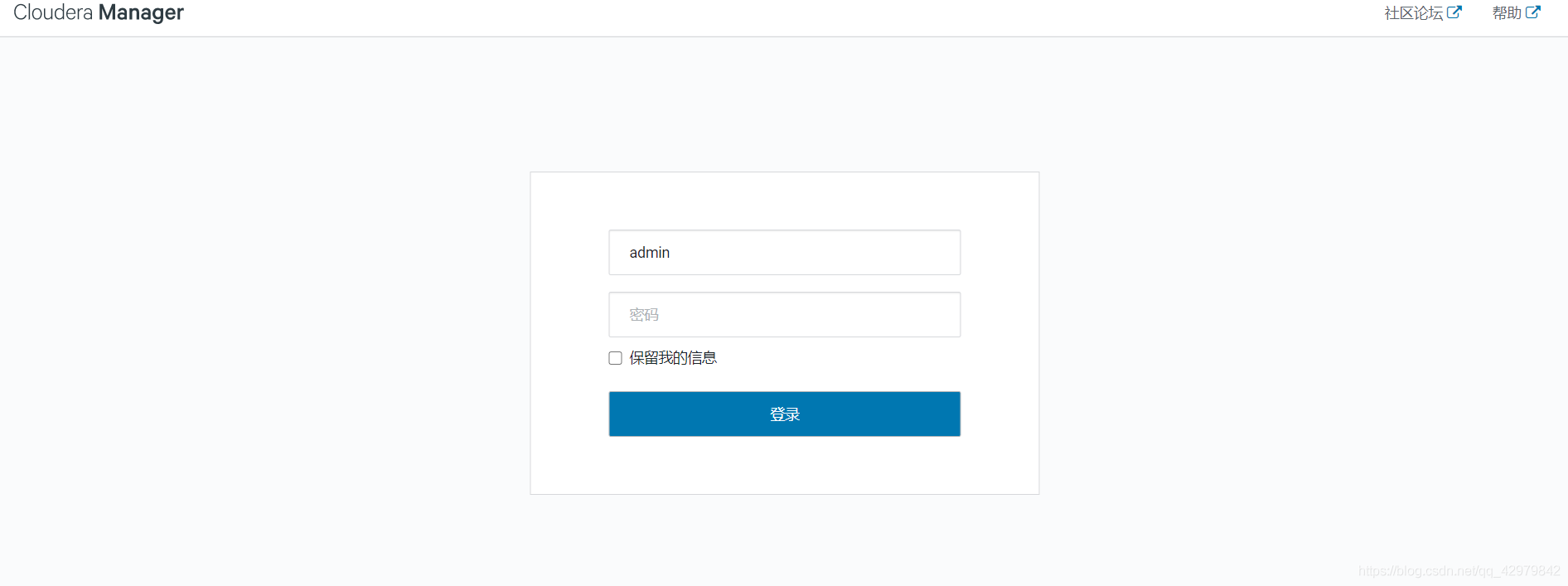

7. Install Services

Address: 192.168.137.129:7180

User: admin

Password: admin

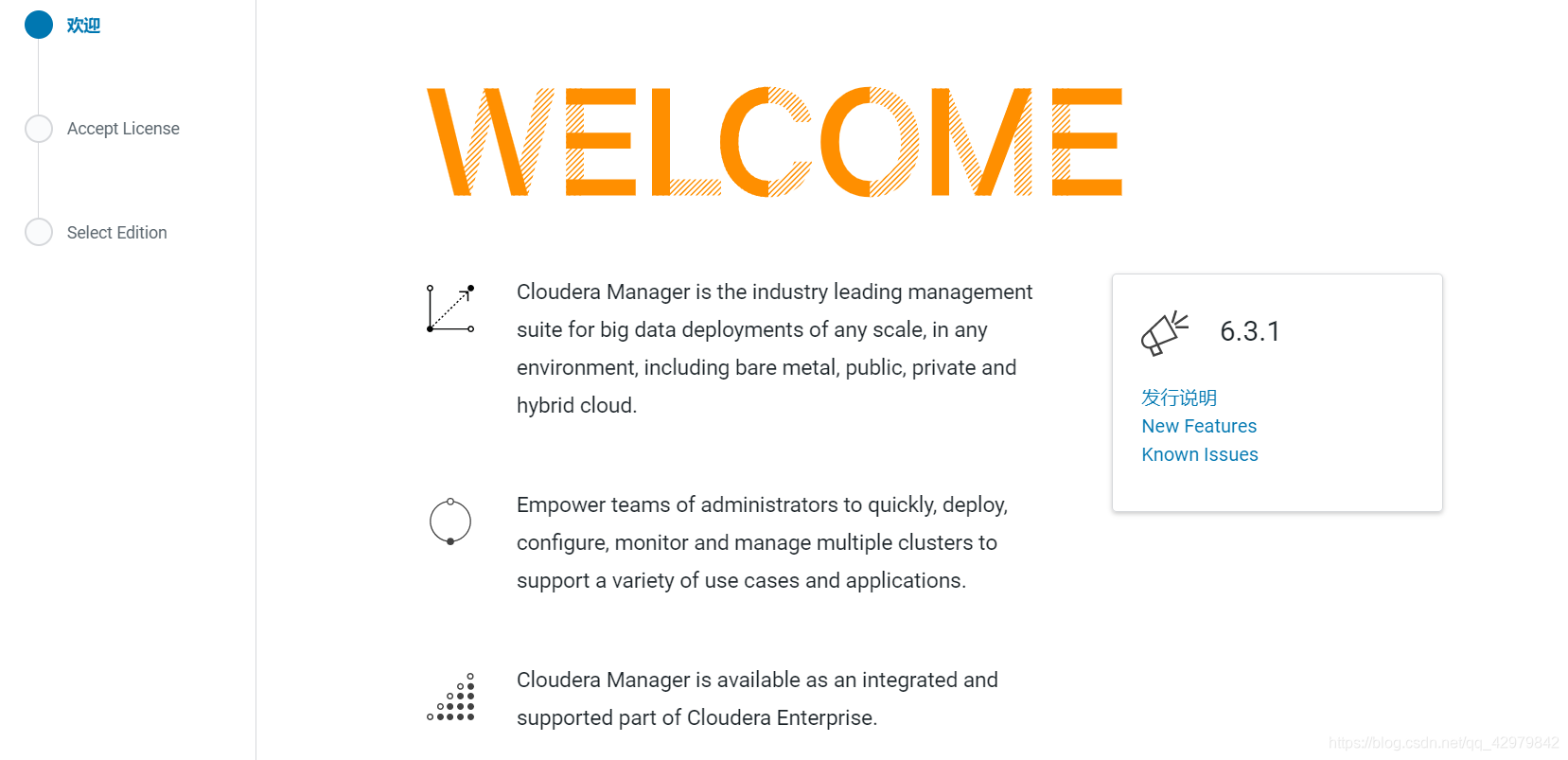

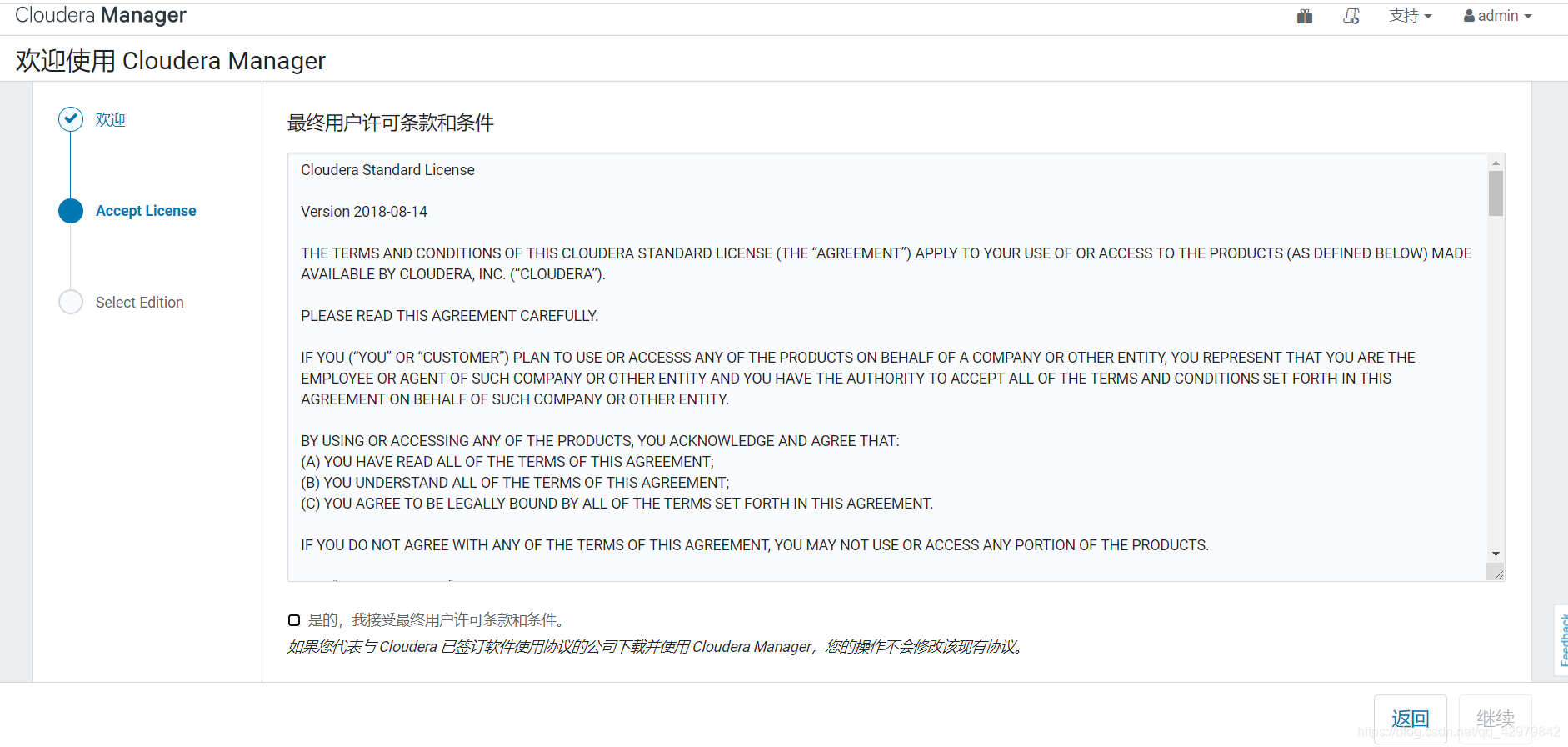

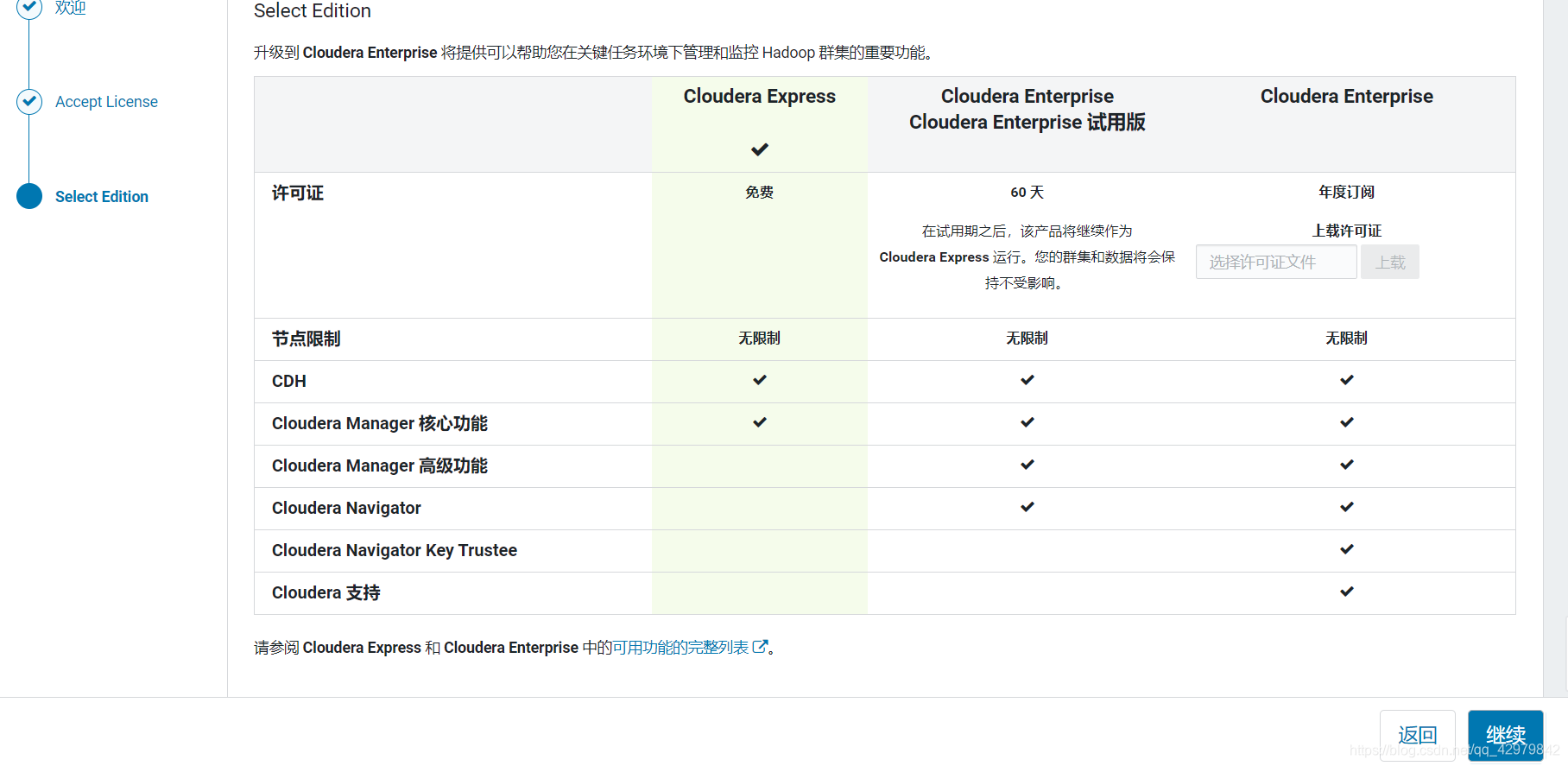

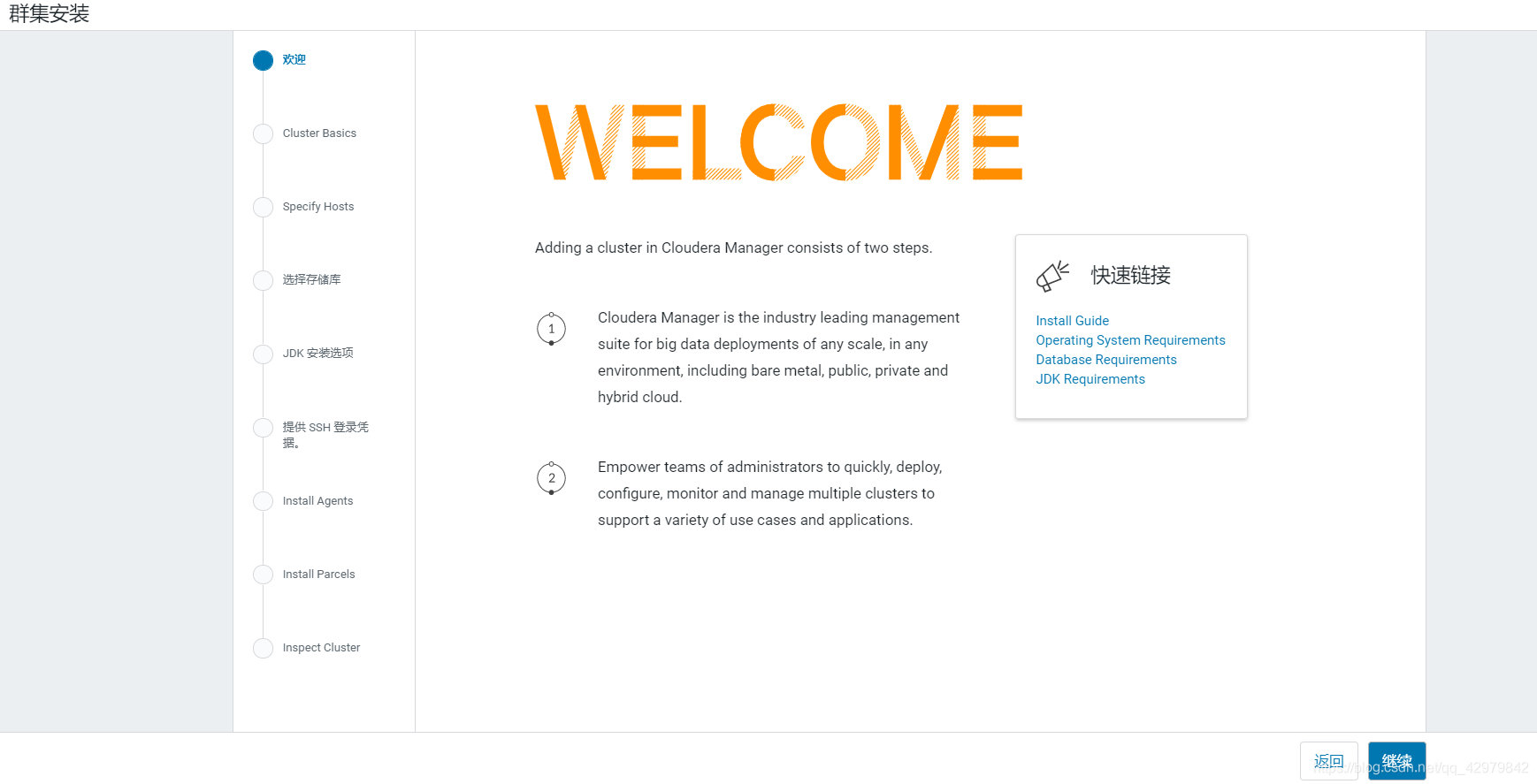

7.1 Welcome Screen

Continue

Continue

Select the version that suits your situation

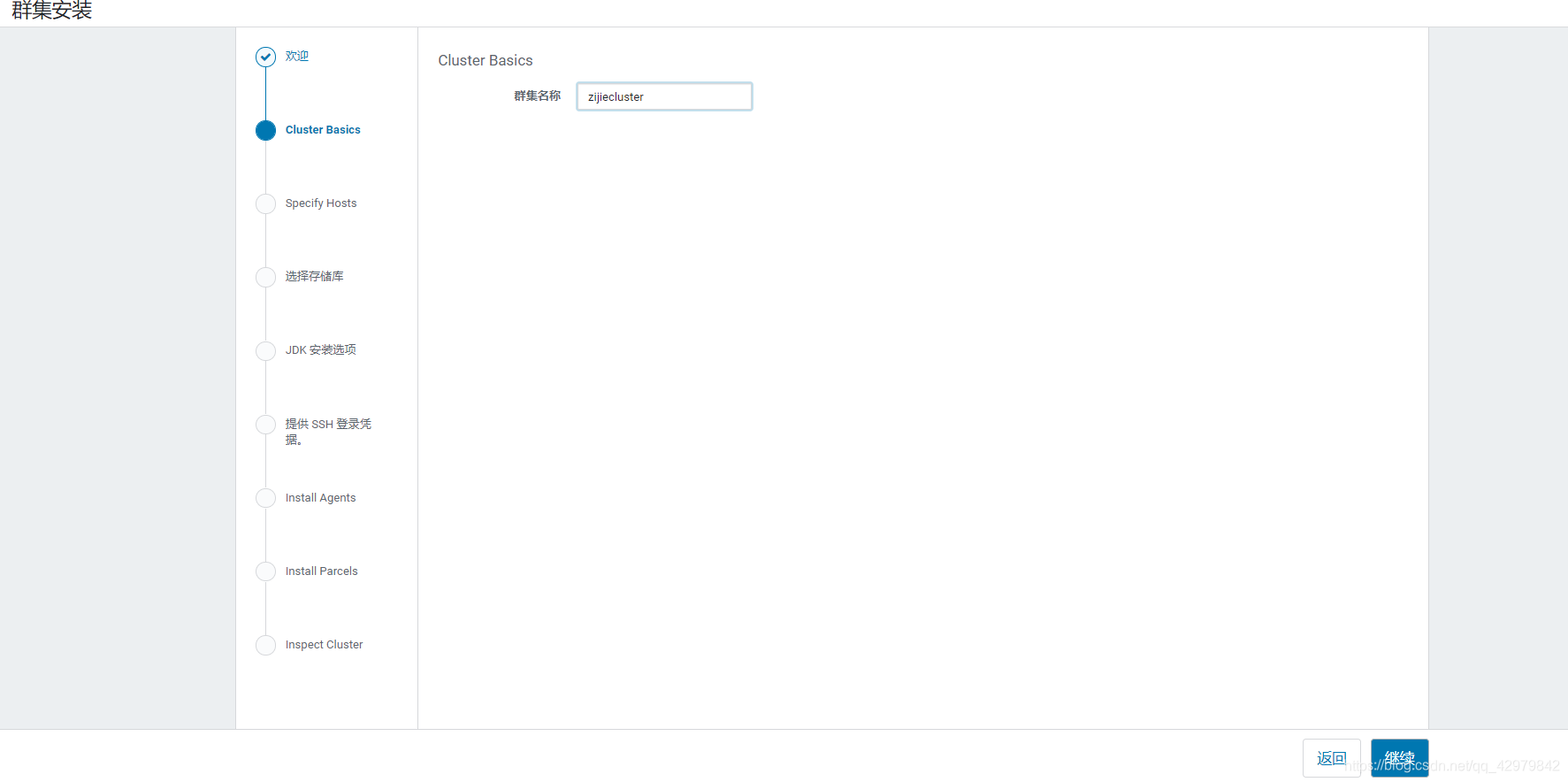

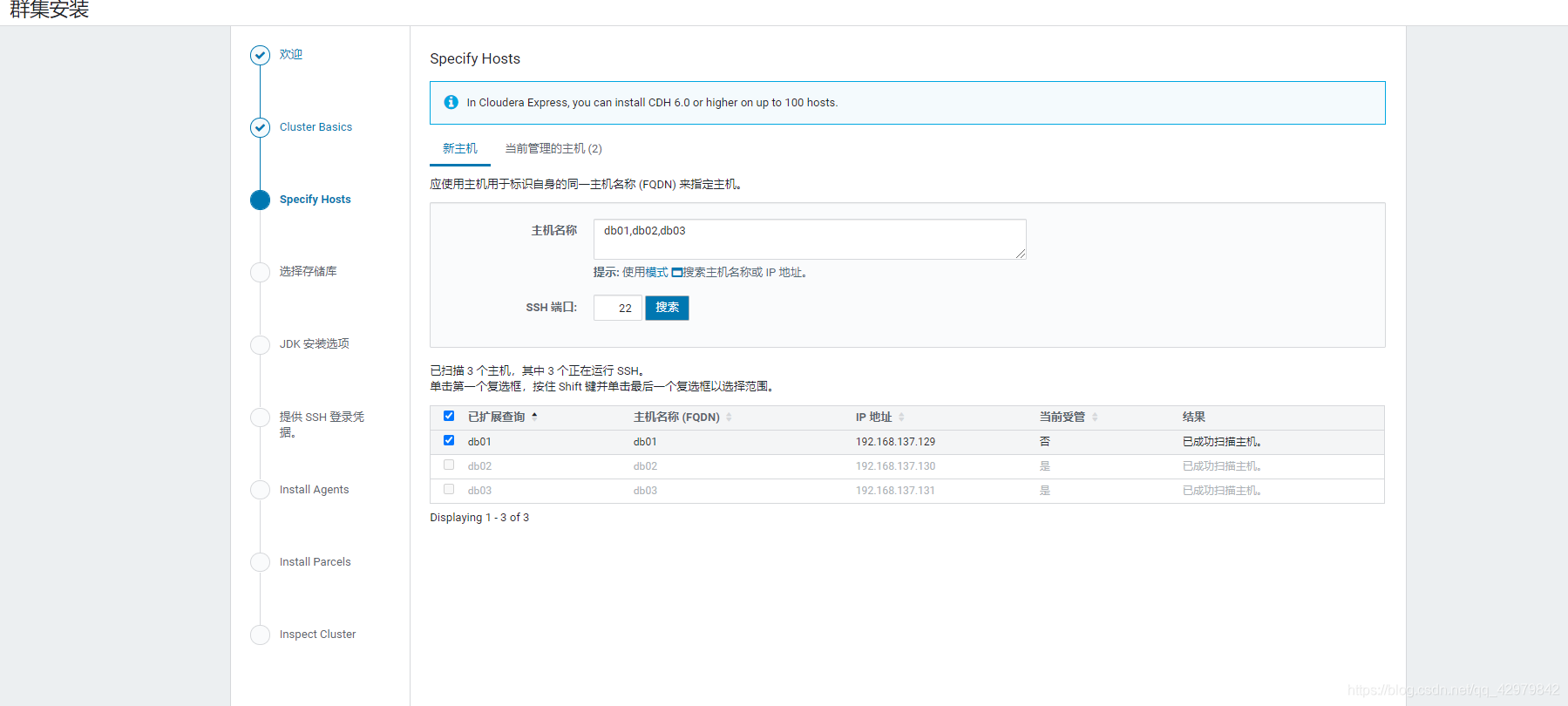

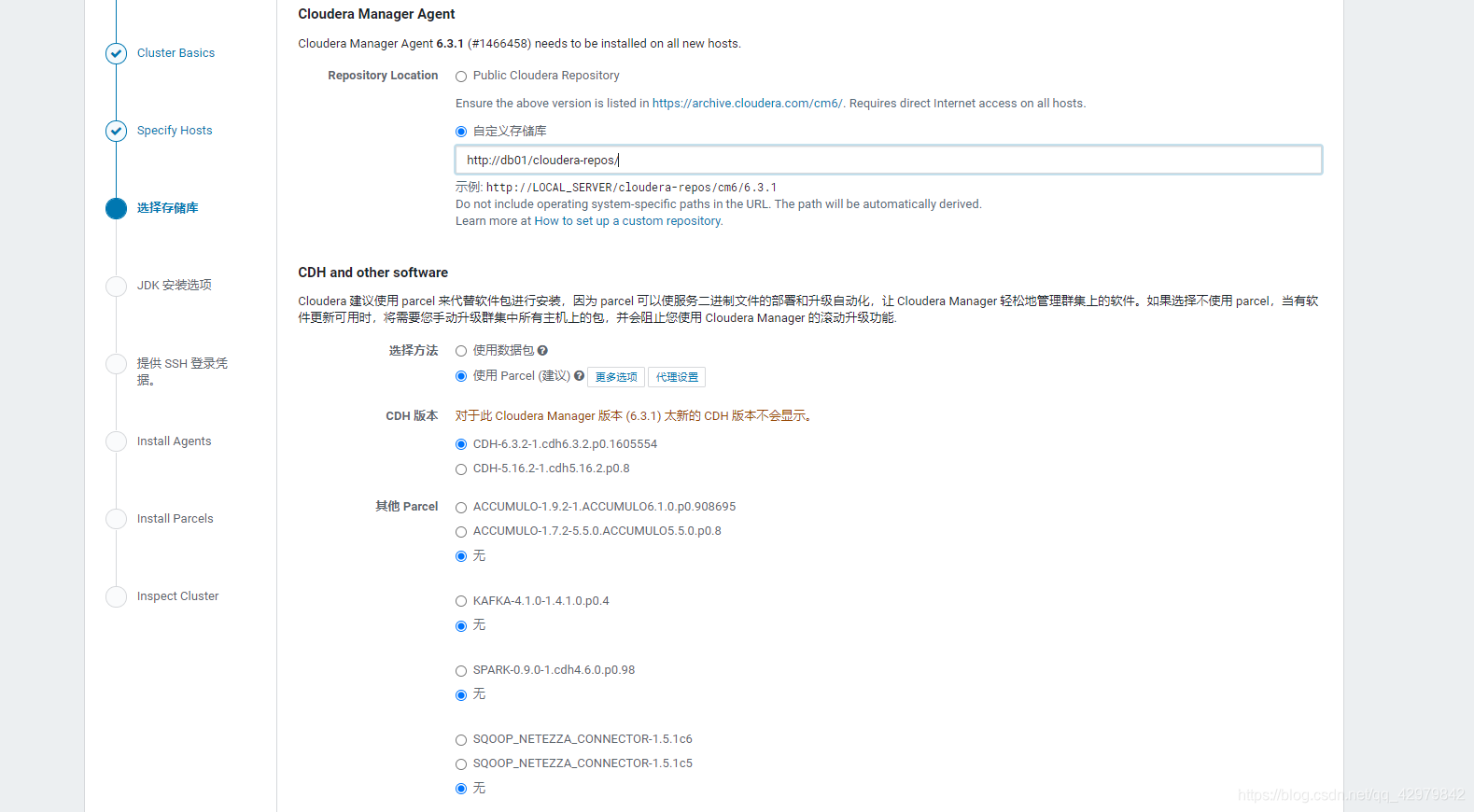

7.2 Cluster Installation

Continue

Set the cluster name

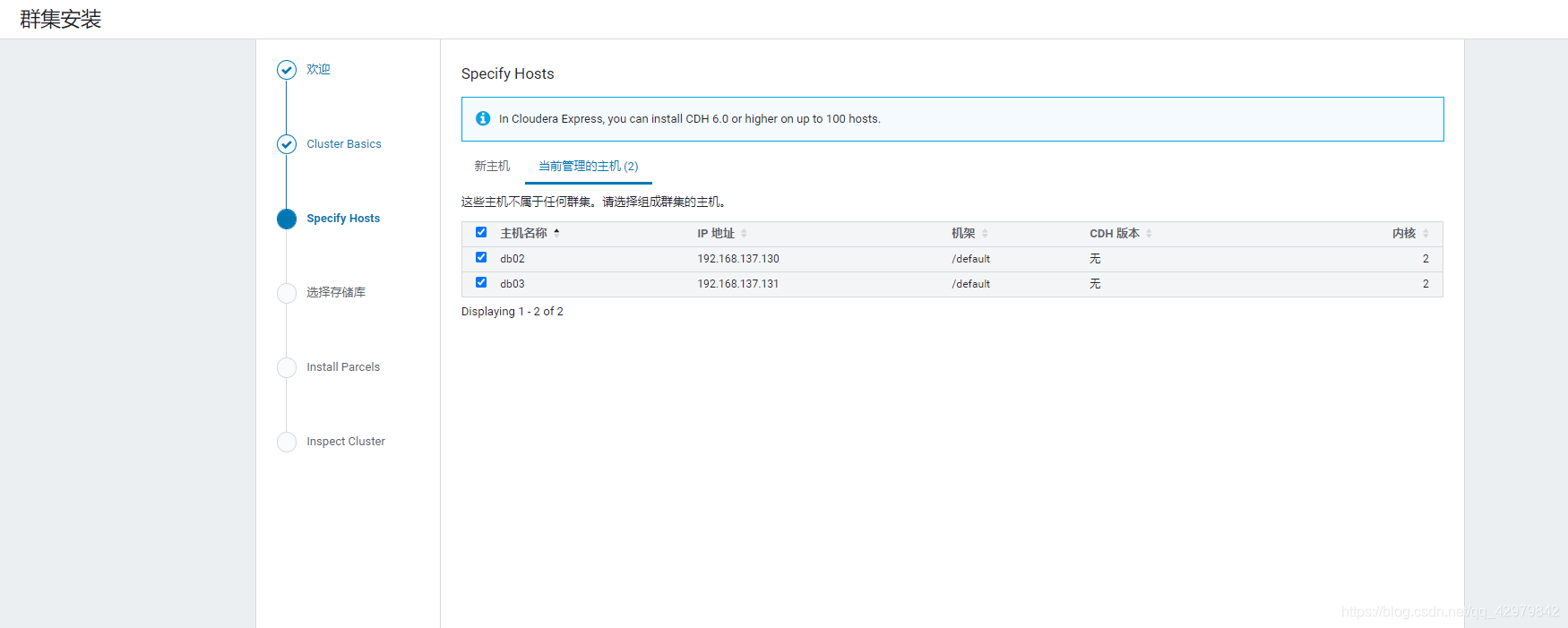

Select hosts

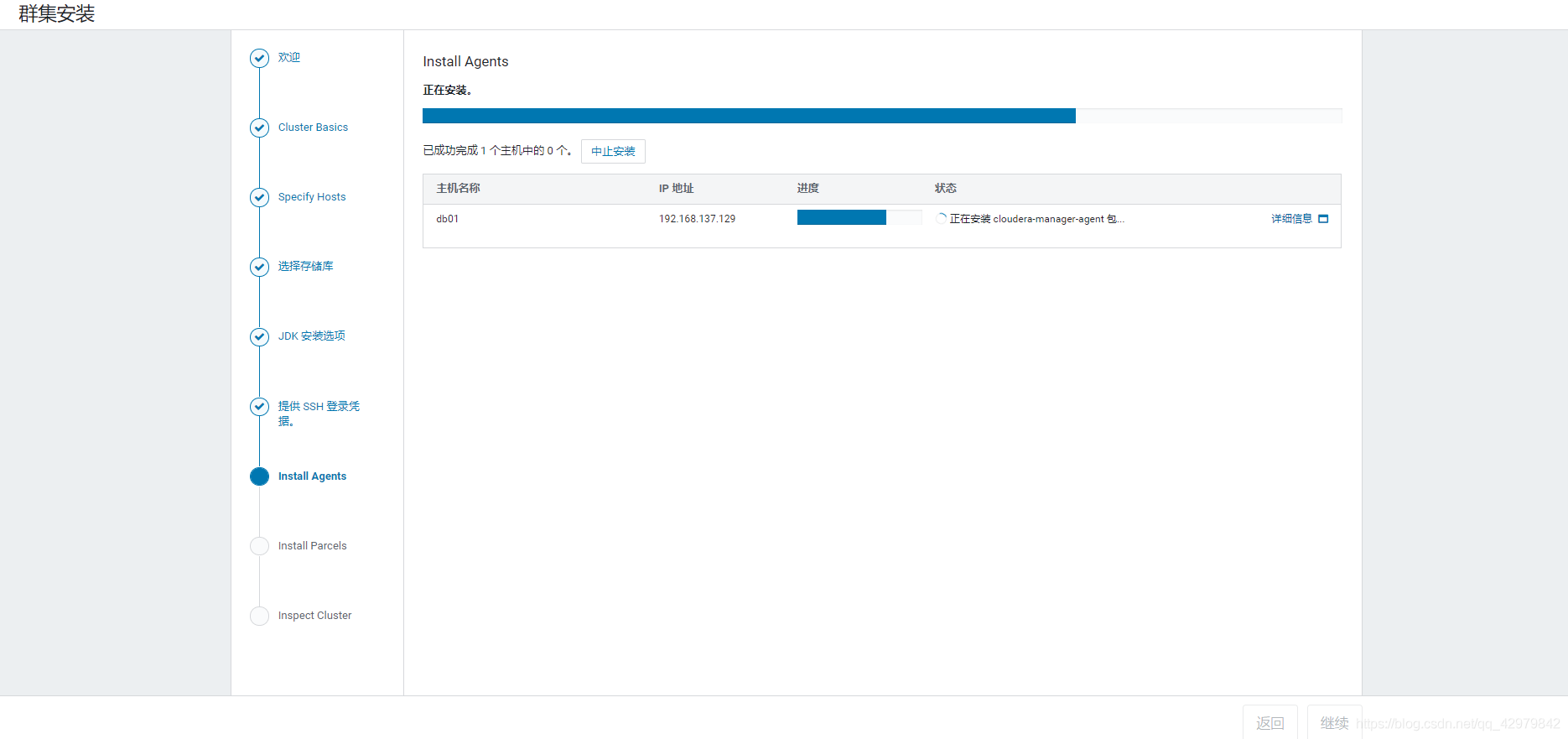

Without agents

With existing agents

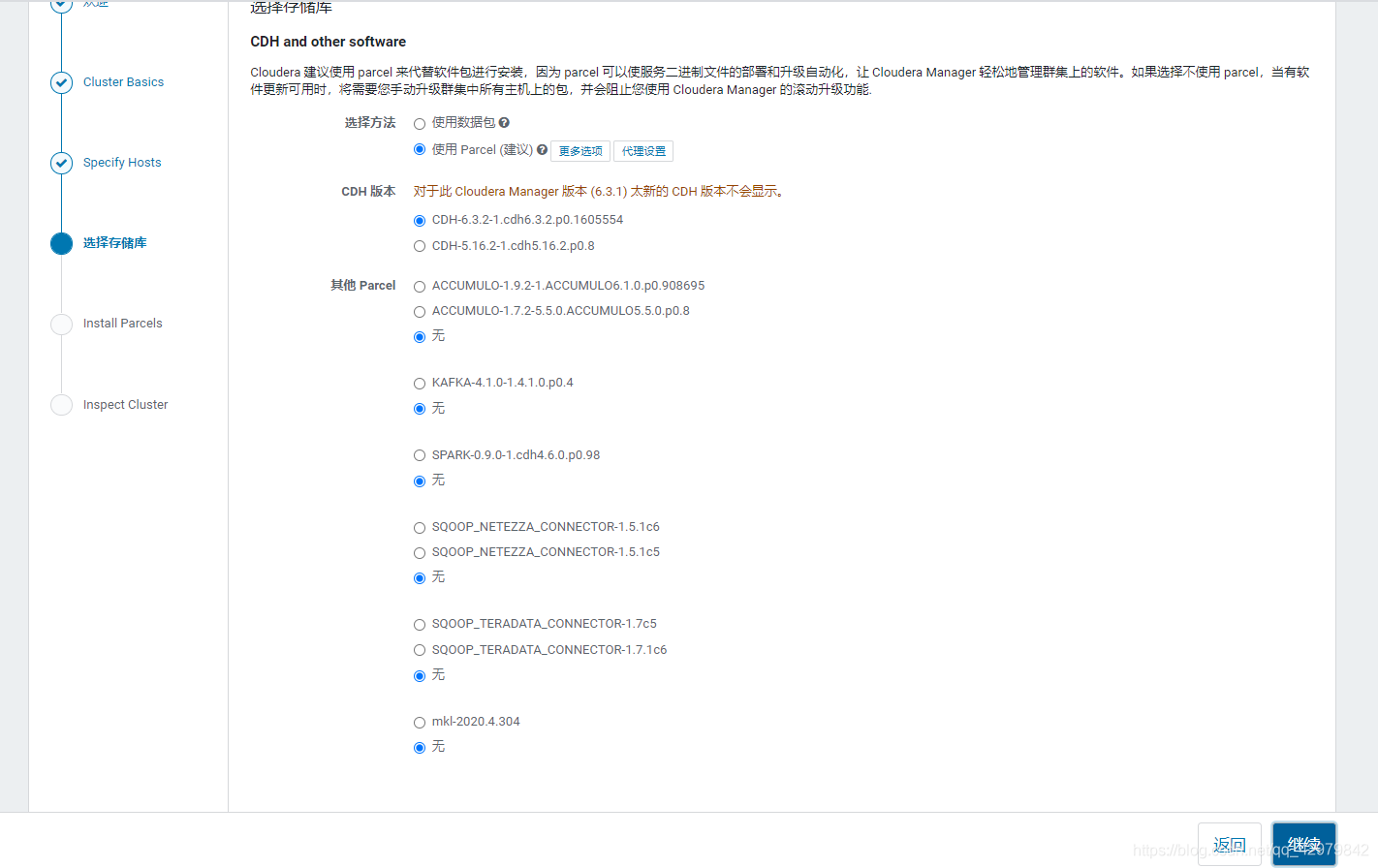

Select the repository

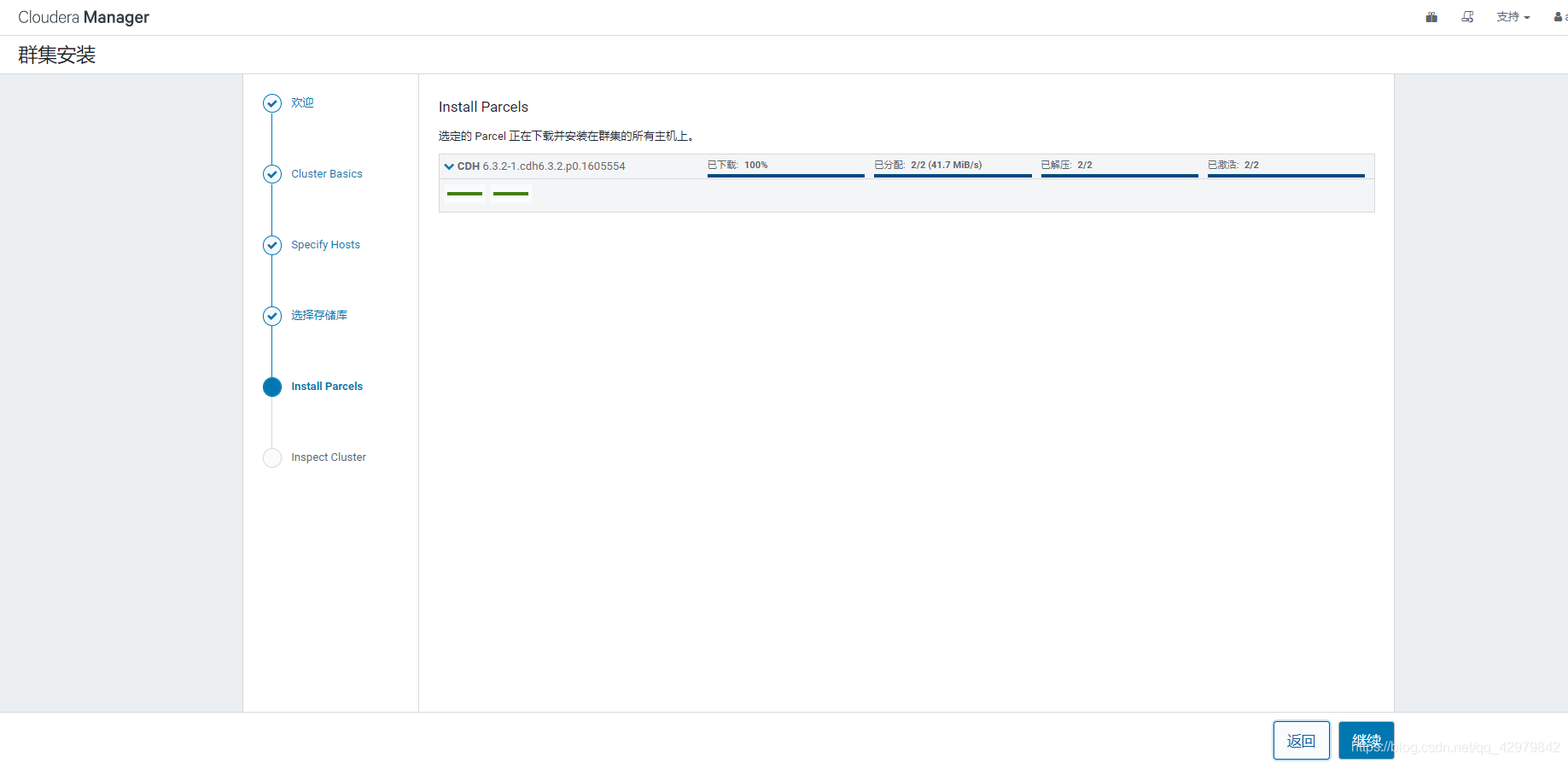

Wait for the installation

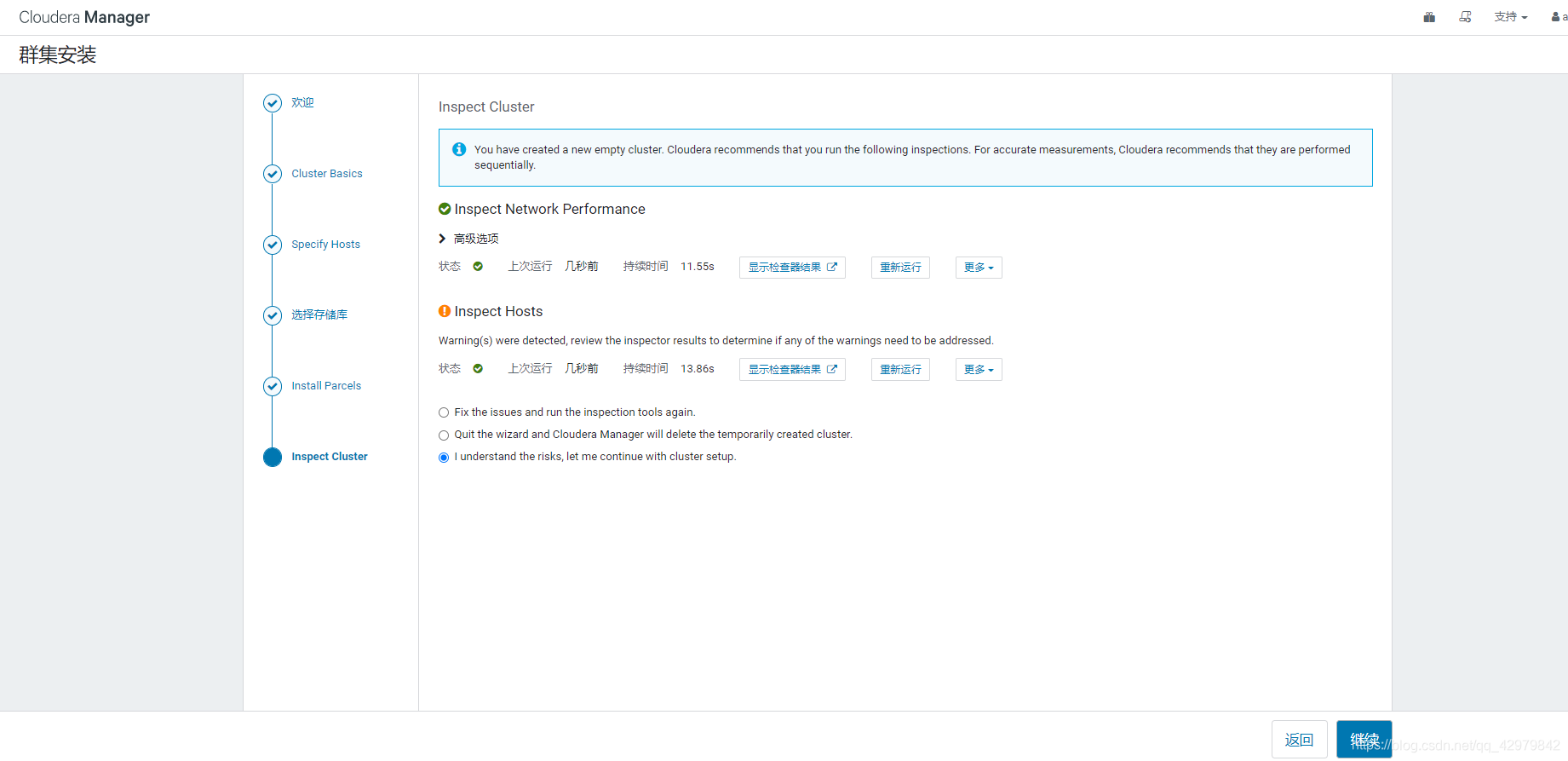

Run the inspections

Select the services to install

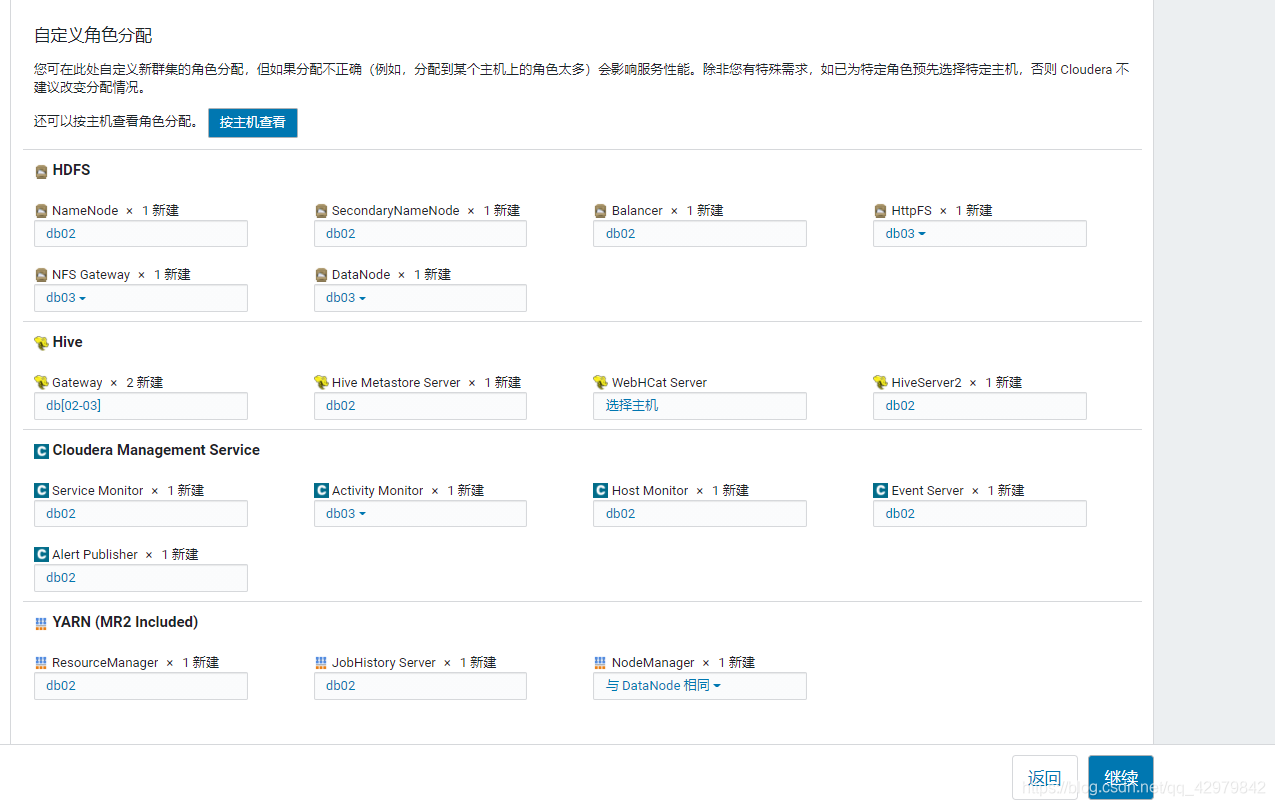

Assign roles

Database setup

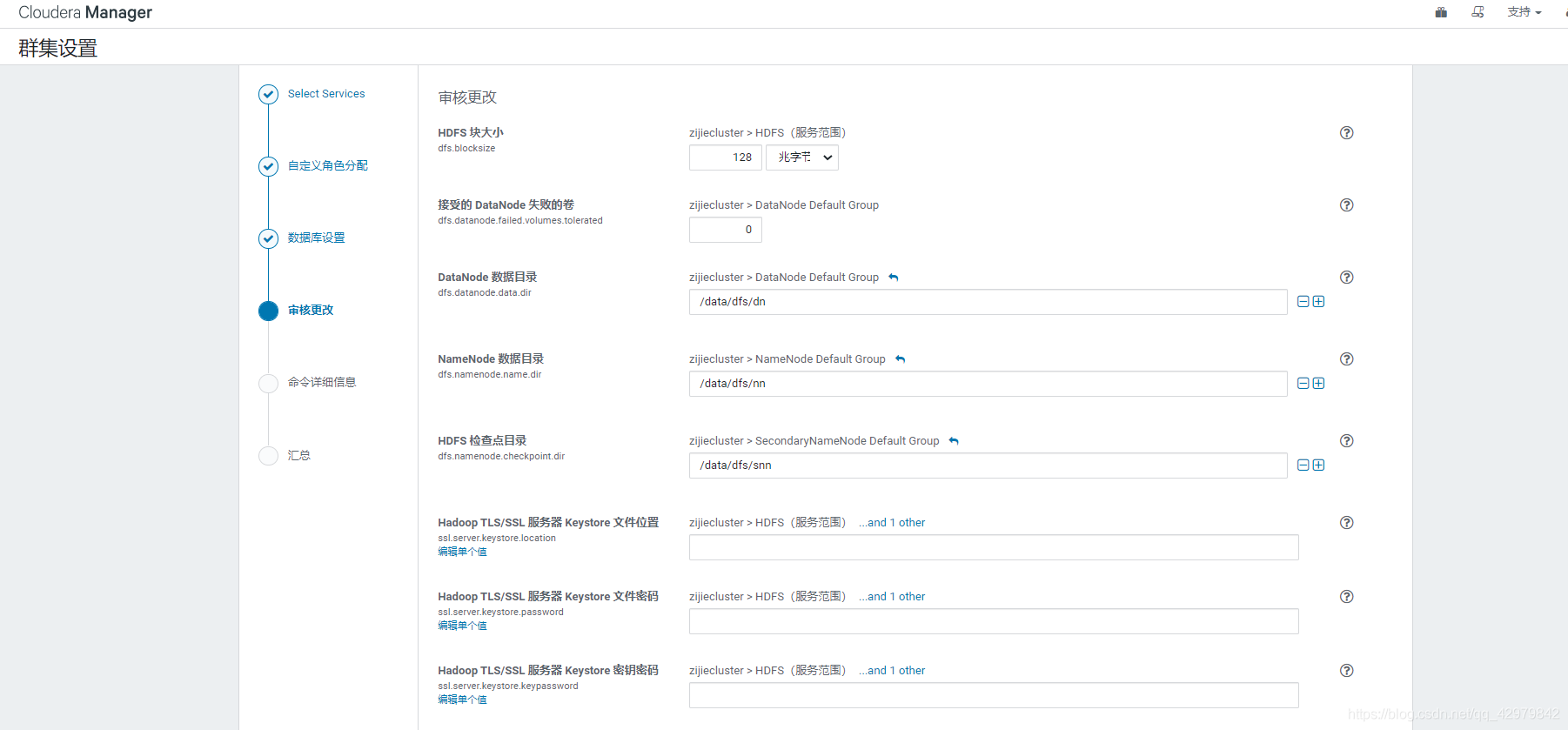

Review

Detailed output

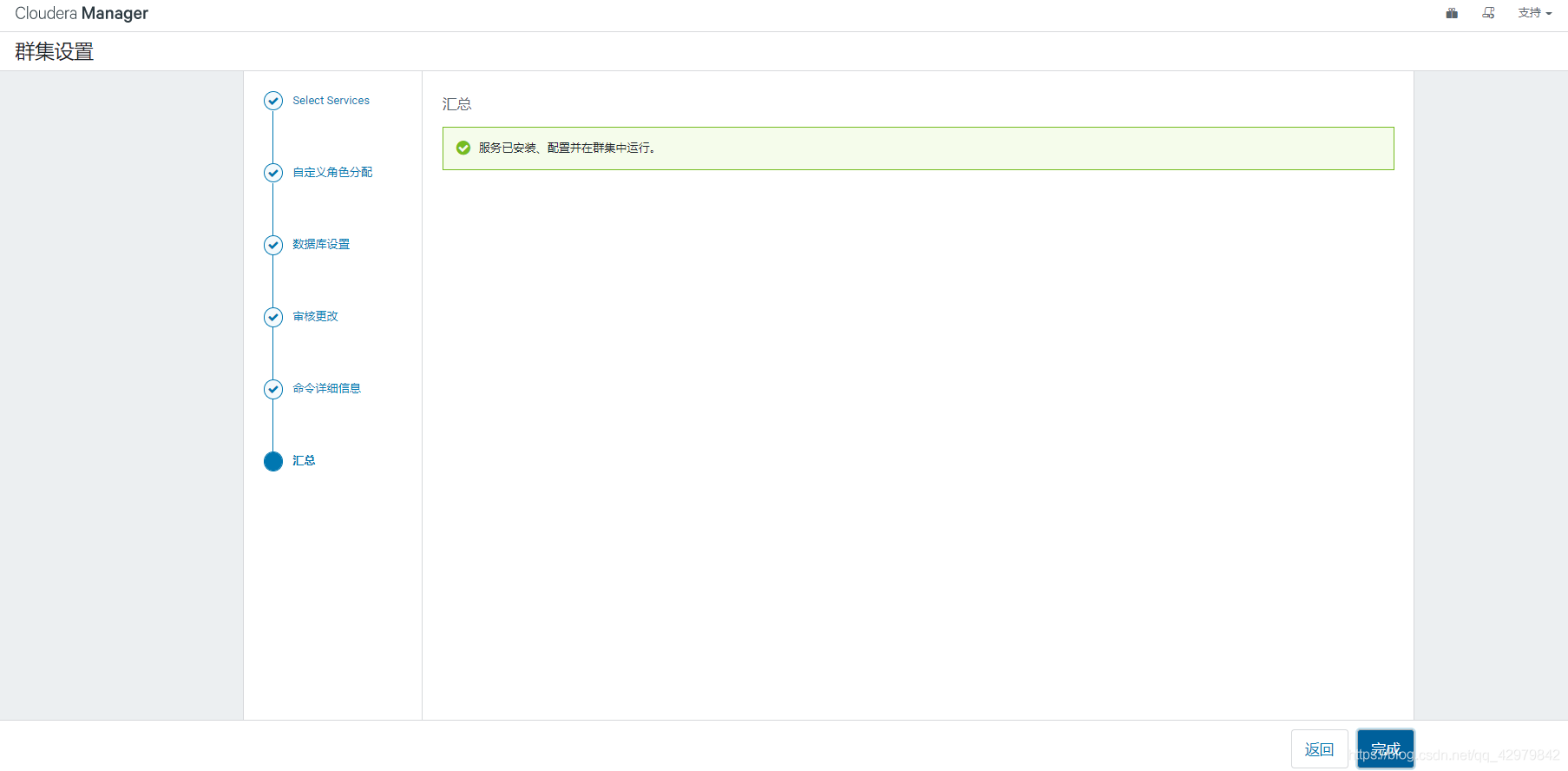

Finish

CDH home page