0 Resource requirements

0.1 Software versions

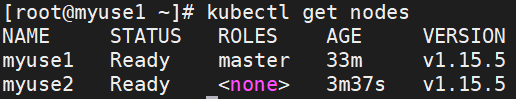

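Kubernetes must be version v1.15.5.

10.23.241.142 myuse2 (worker node)

10.23.241.97 myuse1 (master node)

Kubeflow and Kubernetes versions must be compatible with each other.

0.2 Image files

(1) Get the images from Alibaba Cloud

In the Alibaba Cloud console, open Container Registry, go to image search, and enter the image name in the search box.

(2) Retag the images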

#pull ml-pipeline images

docker pull gcr.io/ml-pipeline/viewer-crd-controller:0.2.5

docker pull gcr.io/ml-pipeline/api-server:0.2.5

docker pull gcr.io/ml-pipeline/frontend:0.2.5

docker pull gcr.io/ml-pipeline/visualization-server:0.2.5

docker pull gcr.io/ml-pipeline/scheduledworkflow:0.2.5

docker pull gcr.io/ml-pipeline/persistenceagent:0.2.5

docker pull gcr.io/ml-pipeline/envoy:metadata-grpc


#!/bin/bash
# Pull each ml-pipeline image from the Alibaba Cloud mirror (namespace pigeonw),
# retag it with its original gcr.io name, then remove the mirror tag.
images1=(
  viewer-crd-controller:0.2.5
  api-server:0.2.5
  frontend:0.2.5
  visualization-server:0.2.5
  scheduledworkflow:0.2.5
  persistenceagent:0.2.5
  envoy:metadata-grpc
)

for imageName in "${images1[@]}"; do
  docker pull "registry.cn-hangzhou.aliyuncs.com/pigeonw/$imageName"
  docker tag "registry.cn-hangzhou.aliyuncs.com/pigeonw/$imageName" "gcr.io/ml-pipeline/$imageName"
  docker rmi "registry.cn-hangzhou.aliyuncs.com/pigeonw/$imageName"
done

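1 Install Kubernetes

1.1 Install kubelet, kubeadm, kubectl and docker

Requirement 1: kubeadm, for initializing and managing the cluster; version 1.15.5

Requirement 2: kubelet, which receives instructions from the API server and manages the Pod lifecycle; version 1.15.5

Requirement 3: kubectl, the cluster command-line tool; version 1.15.5

Requirement 4: docker-ce, version 18.06.3

Basic environment configuration is not covered here.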

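#kubeadm reset (only needed if Kubernetes was installed before)

#yum remove -y kubectl kubeadm kubelet (uninstall the old versions)

#yum install -y kubeadm-1.15.5-0 kubelet-1.15.5-0 kubectl-1.15.5-0

#kubelet --version (expected: 1.15.5)

#kubeadm version (expected: 1.15.5)

#kubectl version --client (expected: 1.15.5; the cluster is not running yet)

#docker version (expected: 18.06.3)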
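The four version checks above can be bundled into one script. A minimal sketch; `check_version` and `check_all_versions` are hypothetical helper names, and the version commands assume the output formats of these releases (for example `kubeadm version -o short` printing `v1.15.5`).

```shell
#!/bin/sh
# check_version NAME EXPECTED OUTPUT
# Succeeds when EXPECTED occurs in OUTPUT (the tool's version string).
check_version() {
  case "$3" in
    *"$2"*) echo "OK   $1: $2"; return 0 ;;
    *) echo "FAIL $1: expected $2, got: $3"; return 1 ;;
  esac
}

# Run the four checks listed above; returns non-zero if any of them fails.
check_all_versions() {
  rc=0
  check_version kubelet 1.15.5 "$(kubelet --version 2>&1)" || rc=1
  check_version kubeadm 1.15.5 "$(kubeadm version -o short 2>&1)" || rc=1
  check_version kubectl 1.15.5 "$(kubectl version --client --short 2>&1)" || rc=1
  check_version docker 18.06.3 "$(docker version --format '{{.Server.Version}}' 2>&1)" || rc=1
  return $rc
}
```

Source the file on each node and run `check_all_versions`; each line reports OK or FAIL with the version actually found.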

#vi /etc/sysconfig/kubelet

Add the following line:

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

#systemctl enable kubelet (enabling it to start at boot is enough for now)

1.2 Prepare the Kubernetes images

#kubeadm config images list (lists the container images the cluster needs)

The output differs between kubeadm versions; for 1.15.5 it is:

k8s.gcr.io/kube-apiserver:v1.15.5

k8s.gcr.io/kube-controller-manager:v1.15.5

k8s.gcr.io/kube-scheduler:v1.15.5

k8s.gcr.io/kube-proxy:v1.15.5

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.3.10

k8s.gcr.io/coredns:1.3.1

Load the image files (on each node)

docker load -i k8s.gcr.io_coredns_1.3.1.tar

docker load -i k8s.gcr.io_etcd_3.3.10.tar

docker load -i k8s.gcr.io_kube-apiserver_v1.15.5.tar

docker load -i k8s.gcr.io_kube-controller-manager_v1.15.5.tar

docker load -i k8s.gcr.io_kube-proxy_v1.15.5.tar

docker load -i k8s.gcr.io_kube-scheduler_v1.15.5.tar

docker load -i k8s.gcr.io_pause_3.1.tar

Save the image files (on a machine that already has the images)

docker save -o k8s.gcr.io_kube-apiserver_v1.15.5.tar k8s.gcr.io/kube-apiserver:v1.15.5

docker save -o k8s.gcr.io_kube-controller-manager_v1.15.5.tar k8s.gcr.io/kube-controller-manager:v1.15.5

docker save -o k8s.gcr.io_kube-scheduler_v1.15.5.tar k8s.gcr.io/kube-scheduler:v1.15.5

docker save -o k8s.gcr.io_kube-proxy_v1.15.5.tar k8s.gcr.io/kube-proxy:v1.15.5

docker save -o k8s.gcr.io_pause_3.1.tar k8s.gcr.io/pause:3.1

docker save -o k8s.gcr.io_etcd_3.3.10.tar k8s.gcr.io/etcd:3.3.10

docker save -o k8s.gcr.io_coredns_1.3.1.tar k8s.gcr.io/coredns:1.3.1
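The save and load lists above follow one naming rule: the tar file name is the image name with `/` and `:` replaced by `_`. A sketch that derives the names and loops over the seven images; `image_to_tar`, `transfer_images` and the `DRY_RUN` switch are hypothetical helpers added for illustration.

```shell
#!/bin/sh
# Derive the tar file name used above: "/" and ":" become "_",
# e.g. k8s.gcr.io/pause:3.1 -> k8s.gcr.io_pause_3.1.tar
image_to_tar() {
  printf '%s.tar\n' "$(printf '%s' "$1" | tr '/:' '__')"
}

IMAGES="k8s.gcr.io/kube-apiserver:v1.15.5
k8s.gcr.io/kube-controller-manager:v1.15.5
k8s.gcr.io/kube-scheduler:v1.15.5
k8s.gcr.io/kube-proxy:v1.15.5
k8s.gcr.io/pause:3.1
k8s.gcr.io/etcd:3.3.10
k8s.gcr.io/coredns:1.3.1"

# With DRY_RUN=1 the docker commands are printed instead of executed.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# transfer_images save|load
transfer_images() {
  for img in $IMAGES; do
    tar=$(image_to_tar "$img")
    case "$1" in
      save) run docker save -o "$tar" "$img" ;;
      load) run docker load -i "$tar" ;;
      *) echo "usage: transfer_images save|load" >&2; return 1 ;;
    esac
  done
}
```

Run `transfer_images save` on the machine that has the images, copy the tar files, then run `transfer_images load` on each node.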

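1.3 Initialize Kubernetes

(1) Initialize the master node (the version must match the v1.15.5 images prepared in 1.2)

[root@myuse1 ~]# kubeadm init --kubernetes-version=v1.15.5 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=10.23.241.97

(2) Configure access credentials (kubeconfig)

Only needed on the master.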

[root@myuse1 ~]#mkdir -p /root/.kube

[root@myuse1 ~]#cp -i /etc/kubernetes/admin.conf /root/.kube/config

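(3) Install the network plugin

The flannel image is needed on both the master and the worker: export it with docker save as below, then import it on the other node with docker load -i quay.io_coreos_flannel_v0.12.0-amd64.tar.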

[root@myuse1 ~]#docker save -o quay.io_coreos_flannel_v0.12.0-amd64.tar quay.io/coreos/flannel:v0.12.0-amd64

[root@myuse1 ~]#kubectl apply -f kube-flannel.yml

(4) Join the worker node

[root@myuse2 ~]#kubeadm join 10.23.241.97:6443 --token 68qggn.diljfxlg6ooy9j7h \
    --discovery-token-ca-cert-hash sha256:0b42332af1355b3e22022ec38dad65a63e22697ad967bd3cd9b77aed29bf22bb
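If the `kubeadm join` line printed by `kubeadm init` is lost, the `--discovery-token-ca-cert-hash` value can be recomputed on the master from the cluster CA certificate. A sketch based on the openssl pipeline from the kubeadm documentation, assuming an RSA CA key (the kubeadm default); `ca_cert_hash` is a hypothetical name.

```shell
#!/bin/sh
# Print the SHA-256 hash of the CA's public key (DER-encoded), which is the
# value kubeadm expects after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  ca=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the master, `kubeadm token create --print-join-command` prints a complete join command with a new token. Bootstrap tokens expire after 24 hours by default, so the token shown above may no longer be valid.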

1.4 Install the dashboard

#kubectl apply -f kubernetes-dashboard.yaml

1.5 Install local storage for PVs and PVCs

#kubectl create -f local-path-storage.yaml

#kubectl get sc --all-namespaces

1.6 Set up a local private Docker registry

#docker pull registry

#docker run -id --name=r1 -p 5000:5000 registry

Open http://<registry-server-IP>:5000/v2/_catalog in a browser, here:

http://10.23.241.97:5000/v2/_catalog

#vi /etc/docker/daemon.json

{"exec-opts":["native.cgroupdriver=systemd"],

"registry-mirrors":["https://ung2thfc.mirror.aliyuncs.com"],

"insecure-registries":["10.23.241.97:5000"]}

#systemctl restart docker

#docker start r1
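The daemon.json above can be written from a script so that every node gets the same content. A sketch; `write_daemon_json` is a hypothetical helper, it overwrites the target file, and its defaults are the registry and mirror addresses used in this guide. `native.cgroupdriver=systemd` must match the `--cgroup-driver=systemd` set for the kubelet in 1.1.

```shell
#!/bin/sh
# write_daemon_json [FILE] [REGISTRY] [MIRROR]
# Overwrites FILE with the Docker daemon settings used in this guide.
write_daemon_json() {
  out=${1:-/etc/docker/daemon.json}
  registry=${2:-10.23.241.97:5000}
  mirror=${3:-https://ung2thfc.mirror.aliyuncs.com}
  cat > "$out" <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["$mirror"],
  "insecure-registries": ["$registry"]
}
EOF
}
```

For example `write_daemon_json && systemctl restart docker && docker start r1`; afterwards `curl http://10.23.241.97:5000/v2/_catalog` should return a JSON object with a `repositories` list.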

2 Install Kubeflow 1.0.2 (dex version)

2.1 Install kfctl and kubectl, and preliminary configuration

(1) Install kfctl

#tar -xzvf kfctl_v1.0.2-0-ga476281_linux.tar.gz

#mv kfctl /usr/bin/

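#kfctl version (expected: v1.0.2-0-ga476281)

(2) Install kubectl

Already installed together with Kubernetes.

(3) Configuration required before installing Kubeflow: add the following two flags to the kube-apiserver command in its static pod manifest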

#vi /etc/kubernetes/manifests/kube-apiserver.yaml

- --service-account-issuer=kubernetes.default.svc

- --service-account-signing-key-file=/etc/kubernetes/pki/sa.key
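kube-apiserver runs as a static pod, so the kubelet restarts it automatically after the manifest is saved. A sketch to confirm both flags are present; `check_apiserver_flags` is a hypothetical helper that only reads the file.

```shell
#!/bin/sh
# Report each required flag as present or missing in the kube-apiserver
# static pod manifest; returns 1 if any flag is missing.
check_apiserver_flags() {
  manifest=${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}
  missing=0
  for flag in --service-account-issuer=kubernetes.default.svc \
              --service-account-signing-key-file=/etc/kubernetes/pki/sa.key; do
    if grep -qF -- "- $flag" "$manifest"; then
      echo "present: $flag"
    else
      echo "missing: $flag"
      missing=1
    fi
  done
  return $missing
}
```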

2.2 The file kfctl_istio_dex.v1.0.2.yaml

(1) Download kfctl_istio_dex.v1.0.2.yaml (use the file's Raw view to save it) and manifests-1.0.2.tar.gz

https://github.com/kubeflow/manifests/blob/master/kfdef/kfctl_istio_dex.v1.0.2.yaml

https://github.com/kubeflow/manifests/archive/v1.0.2.tar.gz

The v1.0.2.tar.gz link downloads the archive manifests-1.0.2.tar.gz.

(2) Modify the configuration file

repos:
- name: manifests
  uri: https://github.com/kubeflow/manifests/archive/v1.0.2.tar.gz

Change it to

repos:
- name: manifests
  uri: file:///root/your102dex/manifests-1.0.2.tar.gz
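The same `uri` change can be made with sed instead of an editor. A sketch; `use_local_manifests` is a hypothetical helper, it edits the file in place and keeps a `.bak` copy.

```shell
#!/bin/sh
# use_local_manifests KFDEF [ARCHIVE]
# Replace the GitHub manifests URI with a file:// URI pointing at ARCHIVE.
use_local_manifests() {
  kfdef=$1
  archive=${2:-/root/your102dex/manifests-1.0.2.tar.gz}
  sed -i.bak "s#uri: https://github.com/kubeflow/manifests/archive/v1.0.2.tar.gz#uri: file://$archive#" "$kfdef"
}
```

For example `use_local_manifests kfctl_istio_dex.v1.0.2.yaml`.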

#mkdir -p /root/your102dex

Then place manifests-1.0.2.tar.gz in that directory. The complete modified file:

apiVersion: kfdef.apps.kubeflow.org/v1
kind: KfDef
metadata:
  clusterName: kubernetes
  creationTimestamp: null
  name: your102dex
  namespace: kubeflow
spec:
  applications:
  - kustomizeConfig:
      repoRef:
        name: manifests
        path: application/application-crds
    name: application-crds
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: application/application
    name: application
  - kustomizeConfig:
      parameters:
      - name: namespace
        value: istio-system
      repoRef:
        name: manifests
        path: istio-1-3-1/istio-crds-1-3-1
    name: istio-crds
  - kustomizeConfig:
      parameters:
      - name: namespace
        value: istio-system
      repoRef:
        name: manifests
        path: istio-1-3-1/istio-install-1-3-1
    name: istio-install
  - kustomizeConfig:
      parameters:
      - name: namespace
        value: istio-system
      repoRef:
        name: manifests
        path: istio-1-3-1/cluster-local-gateway-1-3-1
    name: cluster-local-gateway
  - kustomizeConfig:
      parameters:
      - name: clusterRbacConfig
        value: "ON"
      repoRef:
        name: manifests
        path: istio/istio
    name: istio
  - kustomizeConfig:
      parameters:
      - name: namespace
        value: cert-manager
      repoRef:
        name: manifests
        path: cert-manager/cert-manager-crds
    name: cert-manager-crds
  - kustomizeConfig:
      parameters:
      - name: namespace
        value: kube-system
      repoRef:
        name: manifests
        path: cert-manager/cert-manager-kube-system-resources
    name: cert-manager-kube-system-resources
  - kustomizeConfig:
      overlays:
      - self-signed
      - application
      parameters:
      - name: namespace
        value: cert-manager
      repoRef:
        name: manifests
        path: cert-manager/cert-manager
    name: cert-manager
  - kustomizeConfig:
      overlays:
      - application
      parameters:
      - name: namespace
        value: istio-system
      - name: userid-header
        value: kubeflow-userid
      - name: oidc_provider
        value: http://dex.auth.svc.cluster.local:5556/dex
      - name: oidc_redirect_uri
        value: /login/oidc
      - name: oidc_auth_url
        value: /dex/auth
      - name: skip_auth_uri
        value: /dex
      - name: client_id
        value: kubeflow-oidc-authservice
      repoRef:
        name: manifests
        path: istio/oidc-authservice
    name: oidc-authservice
  - kustomizeConfig:
      overlays:
      - istio
      parameters:
      - name: namespace
        value: auth
      - name: issuer
        value: http://dex.auth.svc.cluster.local:5556/dex
      - name: client_id
        value: kubeflow-oidc-authservice
      - name: oidc_redirect_uris
        value: '["/login/oidc"]'
      - name: static_email
        value: [email protected]
      - name: static_password_hash
        value: $2y$12$ruoM7FqXrpVgaol44eRZW.4HWS8SAvg6KYVVSCIwKQPBmTpCm.EeO
      repoRef:
        name: manifests
        path: dex-auth/dex-crds
    name: dex
  - kustomizeConfig:
      overlays:
      - istio
      - application
      repoRef:
        name: manifests
        path: argo
    name: argo
  - kustomizeConfig:
      repoRef:
        name: manifests
        path: kubeflow-roles
    name: kubeflow-roles
  - kustomizeConfig:
      overlays:
      - istio
      - application
      parameters:
      - name: userid-header
        value: kubeflow-userid
      repoRef:
        name: manifests
        path: common/centraldashboard
    name: centraldashboard
  - kustomizeConfig:
      overlays:
      - cert-manager
      - application
      repoRef:
        name: manifests
        path: admission-webhook/webhook
    name: webhook
  - kustomizeConfig:
      overlays:
      - istio
      - application
      parameters:
      - name: userid-header
        value: kubeflow-userid
      repoRef:
        name: manifests
        path: jupyter/jupyter-web-app
    name: jupyter-web-app
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: spark/spark-operator
    name: spark-operator
  - kustomizeConfig:
      overlays:
      - istio
      - application
      - db
      repoRef:
        name: manifests
        path: metadata
    name: metadata
  - kustomizeConfig:
      overlays:
      - istio
      - application
      repoRef:
        name: manifests
        path: jupyter/notebook-controller
    name: notebook-controller
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: pytorch-job/pytorch-job-crds
    name: pytorch-job-crds
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: pytorch-job/pytorch-operator
    name: pytorch-operator
  - kustomizeConfig:
      overlays:
      - application
      parameters:
      - name: namespace
        value: knative-serving
      repoRef:
        name: manifests
        path: knative/knative-serving-crds
    name: knative-crds
  - kustomizeConfig:
      overlays:
      - application
      parameters:
      - name: namespace
        value: knative-serving
      repoRef:
        name: manifests
        path: knative/knative-serving-install
    name: knative-install
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: kfserving/kfserving-crds
    name: kfserving-crds
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: kfserving/kfserving-install
    name: kfserving-install
  - kustomizeConfig:
      overlays:
      - application
      parameters:
      - name: usageId
        value: <randomly-generated-id>
      - name: reportUsage
        value: "true"
      repoRef:
        name: manifests
        path: common/spartakus
    name: spartakus
  - kustomizeConfig:
      overlays:
      - istio
      repoRef:
        name: manifests
        path: tensorboard
    name: tensorboard
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: tf-training/tf-job-crds
    name: tf-job-crds
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: tf-training/tf-job-operator
    name: tf-job-operator
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: katib/katib-crds
    name: katib-crds
  - kustomizeConfig:
      overlays:
      - application
      - istio
      repoRef:
        name: manifests
        path: katib/katib-controller
    name: katib-controller
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: pipeline/api-service
    name: api-service
  - kustomizeConfig:
      overlays:
      - application
      parameters:
      - name: minioPvcName
        value: minio-pv-claim
      repoRef:
        name: manifests
        path: pipeline/minio
    name: minio
  - kustomizeConfig:
      overlays:
      - application
      parameters:
      - name: mysqlPvcName
        value: mysql-pv-claim
      repoRef:
        name: manifests
        path: pipeline/mysql
    name: mysql
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: pipeline/persistent-agent
    name: persistent-agent
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: pipeline/pipelines-runner
    name: pipelines-runner
  - kustomizeConfig:
      overlays:
      - istio
      - application
      repoRef:
        name: manifests
        path: pipeline/pipelines-ui
    name: pipelines-ui
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: pipeline/pipelines-viewer
    name: pipelines-viewer
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: pipeline/scheduledworkflow
    name: scheduledworkflow
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: pipeline/pipeline-visualization-service
    name: pipeline-visualization-service
  - kustomizeConfig:
      overlays:
      - application
      - istio
      parameters:
      - name: userid-header
        value: kubeflow-userid
      repoRef:
        name: manifests
        path: profiles
    name: profiles
  - kustomizeConfig:
      overlays:
      - application
      repoRef:
        name: manifests
        path: seldon/seldon-core-operator
    name: seldon-core-operator
  repos:
  - name: manifests
    uri: file:///root/your102dex/manifests-1.0.2.tar.gz
  version: v1.0.2
status:
  reposCache:
  - localPath: '".cache/manifests/manifests-1.0.2"'
    name: manifests

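(3) List the components to be installed

#grep "path" kfctl_k8s_istio.v1.0.2.yaml

The output below comes from kfctl_k8s_istio.v1.0.2.yaml; kfctl_istio_dex.v1.0.2.yaml above contains a similar but not identical set (for example, its Istio entries use istio-1-3-1/... paths).

path: istio/istio-crds

path: istio/istio-install (run kubectl create ns kubeflow first)

path: istio/istio

path: istio/cluster-local-gateway (the configMapGenerator in its kustomization.yaml must be modified)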

path: istio/kfserving-gateway

path: istio/add-anonymous-user-filter

path: application/application-crds

path: application/application

path: cert-manager/cert-manager-crds

path: cert-manager/cert-manager-kube-system-resources

path: cert-manager/cert-manager

path: metacontroller

path: argo

path: kubeflow-roles

path: common/centraldashboard

path: admission-webhook/bootstrap

path: admission-webhook/webhook

path: jupyter/jupyter-web-app

path: spark/spark-operator

path: metadata

path: jupyter/notebook-controller

path: pytorch-job/pytorch-job-crds

path: pytorch-job/pytorch-operator

path: knative/knative-serving-crds

path: knative/knative-serving-install

path: kfserving/kfserving-crds

path: kfserving/kfserving-install

path: common/spartakus

path: tensorboard

path: tf-training/tf-job-crds

path: tf-training/tf-job-operator

path: katib/katib-crds

path: katib/katib-controller

path: pipeline/api-service

path: pipeline/minio

path: pipeline/mysql

path: pipeline/persistent-agent

path: pipeline/pipelines-runner

path: pipeline/pipelines-ui

path: pipeline/pipelines-viewer

path: pipeline/scheduledworkflow

path: pipeline/pipeline-visualization-service

path: profiles

path: seldon/seldon-core-operator
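To print only the component paths of a KfDef file, for example to compare kfctl_istio_dex.v1.0.2.yaml with kfctl_k8s_istio.v1.0.2.yaml, a short helper is enough. A sketch; `list_kfdef_paths` is a hypothetical name. Unlike a bare grep for "path", the anchored pattern skips the `localPath:` line under `status`.

```shell
#!/bin/sh
# Print the repoRef path of every application in a KfDef file, one per line.
list_kfdef_paths() {
  grep -E '^[[:space:]]*path:' "$1" | awk '{ print $2 }'
}
```

In bash, `diff <(list_kfdef_paths kfctl_istio_dex.v1.0.2.yaml) <(list_kfdef_paths kfctl_k8s_istio.v1.0.2.yaml)` shows which components differ between the two files.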

2.3 Install Kubeflow

#cp kfctl_istio_dex.v1.0.2.yaml /root/your102dex

#cp manifests-1.0.2.tar.gz /root/your102dex

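#tar -xzvf kustomizefile.tar.gz -C /root/your102dex/ (only when reusing the kustomize directory archived in 2.3.2 step (4))

#cd /root/your102dex

#kfctl apply -V -f kfctl_istio_dex.v1.0.2.yaml

#kfctl apply -V -f kfctl_istio_dex.v1.0.2.yaml (run it a second time)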
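The repeated `kfctl apply` above can be automated with a retry loop. A sketch; `retry` is a hypothetical helper, and the attempt count and delay in the example are arbitrary.

```shell
#!/bin/sh
# retry N DELAY CMD...: run CMD up to N times, sleeping DELAY seconds
# between failed attempts; returns 0 on the first success, 1 otherwise.
retry() {
  attempts=$1; delay=$2; shift 2
  i=1
  while :; do
    "$@" && return 0
    [ "$i" -ge "$attempts" ] && return 1
    echo "attempt $i failed, retrying in ${delay}s" >&2
    i=$((i + 1))
    sleep "$delay"
  done
}
```

For example `cd /root/your102dex && retry 3 60 kfctl apply -V -f kfctl_istio_dex.v1.0.2.yaml`.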

After installation, pull the images listed below and change the image pull policy (imagePullPolicy).
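One way to change the pull policy is to rewrite the generated kustomize files before applying them again. A sketch, assuming the manifests spell the field exactly `imagePullPolicy: Always` and that GNU sed is available (as on CentOS); `set_pull_policy` is a hypothetical helper. With `IfNotPresent`, the kubelet uses an image already present on the node instead of pulling it.

```shell
#!/bin/sh
# Change every "imagePullPolicy: Always" under DIR to IfNotPresent
# and print the name of each file that was edited (GNU sed -i).
set_pull_policy() {
  dir=${1:-/root/your102dex/kustomize}
  grep -rl 'imagePullPolicy: Always' "$dir" | while IFS= read -r f; do
    sed -i 's/imagePullPolicy: Always/imagePullPolicy: IfNotPresent/' "$f"
    echo "updated $f"
  done
}
```

Afterwards re-run `kfctl apply` as in 2.3.2 step (3).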

2.3.1 Image files (grouped by namespace)

(1)cert-manager

quay.io/jetstack/cert-manager-webhook:v0.11.0

(2)istio-system

gcr.io/istio-release/citadel:release-1.3-latest-daily

gcr.io/istio-release/galley:release-1.3-latest-daily

gcr.io/istio-release/proxyv2:release-1.3-latest-daily

gcr.io/istio-release/node-agent-k8s:release-1.3-latest-daily

gcr.io/istio-release/pilot:release-1.3-latest-daily

gcr.io/istio-release/mixer:release-1.3-latest-daily

gcr.io/istio-release/kubectl:release-1.3-latest-daily

gcr.io/istio-release/sidecar_injector:release-1.3-latest-daily

gcr.io/arrikto/kubeflow/oidc-authservice:28c59ef

(3)kubeflow

gcr.io/kubeflow-images-public/kubernetes-sigs/application:1.0-beta

gcr.io/kubeflow-images-public/admission-webhook:v1.0.0-gaf96e4e3

gcr.io/kubeflow-images-public/centraldashboard:v1.0.0-g3ec0de71

gcr.io/kubeflow-images-public/jupyter-web-app:v1.0.0-g2bd63238

gcr.io/kubeflow-images-public/katib/v1alpha3/katib-controller:v0.8.0

gcr.io/kubeflow-images-public/katib/v1alpha3/katib-db-manager:v0.8.0

mysql:8

mysql:8.0.3

gcr.io/kubeflow-images-public/katib/v1alpha3/katib-ui:v0.8.0

gcr.io/kfserving/kfserving-controller:0.2.2

gcr.io/kubebuilder/kube-rbac-proxy:v0.4.0

gcr.io/kubeflow-images-public/metadata:v0.1.11

gcr.io/ml-pipeline/envoy:metadata-grpc

gcr.io/tfx-oss-public/ml_metadata_store_server:v0.21.1

gcr.io/kubeflow-images-public/metadata-frontend:v0.1.8

gcr.io/ml-pipeline/api-server:0.2.5

gcr.io/ml-pipeline/visualization-server:0.2.5

gcr.io/ml-pipeline/persistenceagent:0.2.5

gcr.io/ml-pipeline/scheduledworkflow:0.2.5

gcr.io/ml-pipeline/frontend:0.2.5

gcr.io/ml-pipeline/viewer-crd-controller:0.2.5

gcr.io/kubeflow-images-public/notebook-controller:v1.0.0-gcd65ce25

gcr.io/kubeflow-images-public/profile-controller:v1.0.0-ge50a8531

gcr.io/kubeflow-images-public/kfam:v1.0.0-gf3e09203

gcr.io/kubeflow-images-public/pytorch-operator:v1.0.0-g047cf0f

gcr.io/spark-operator/spark-operator:v1beta2-1.0.0-2.4.4

gcr.io/google_containers/spartakus-amd64:v1.1.0

gcr.io/kubeflow-images-public/tf_operator:v1.0.0-g92389064

(4)auth

gcr.io/arrikto/dexidp/dex:4bede5eb80822fc3a7fc9edca0ed2605cd339d17

(5)knative-serving

gcr.io/istio-release/proxy_init:release-1.3-latest-daily

gcr.io/knative-releases/knative.dev/serving/cmd/networking/istio:latest

gcr.io/knative-releases/knative.dev/serving/cmd/autoscaler-hpa:latest

gcr.io/knative-releases/knative.dev/serving/cmd/controller:0.14.0

gcr.io/knative-releases/knative.dev/serving/cmd/webhook:0.14.0

gcr.io/knative-releases/knative.dev/serving/cmd/autoscaler:0.14.0

gcr.io/knative-releases/knative.dev/serving/cmd/activator:0.14.0
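The lists above can be regenerated from a running install by asking Kubernetes which images each namespace's pods reference. A sketch built on the jsonpath query from the Kubernetes documentation; `list_ns_images` is a hypothetical name, and init containers are not included.

```shell
#!/bin/sh
# Print each distinct container image used by the pods in namespace $1.
list_ns_images() {
  kubectl get pods -n "$1" -o jsonpath='{.items[*].spec.containers[*].image}' \
    | tr -s '[:space:]' '\n' | sort -u
}
```

For example `for ns in cert-manager istio-system kubeflow auth knative-serving; do echo "($ns)"; list_ns_images "$ns"; done`.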

2.3.2 Local private registry for knative-serving

The following images must be served from the private registry:

gcr.io/knative-releases/knative.dev/serving/cmd/networking/istio:latest

gcr.io/knative-releases/knative.dev/serving/cmd/autoscaler-hpa:latest

gcr.io/knative-releases/knative.dev/serving/cmd/controller:0.14.0

gcr.io/knative-releases/knative.dev/serving/cmd/webhook:0.14.0

gcr.io/knative-releases/knative.dev/serving/cmd/autoscaler:0.14.0

gcr.io/knative-releases/knative.dev/serving/cmd/activator:0.14.0

(1) Retag the images for the private registry

docker tag gcr.io/knative-releases/knative.dev/serving/cmd/activator:0.14.0 10.23.241.97:5000/knative/serving/activator:0.14.0

docker tag gcr.io/knative-releases/knative.dev/serving/cmd/autoscaler:0.14.0 10.23.241.97:5000/knative/serving/autoscaler:0.14.0

docker tag gcr.io/knative-releases/knative.dev/serving/cmd/webhook:0.14.0 10.23.241.97:5000/knative/serving/webhook:0.14.0

docker tag gcr.io/knative-releases/knative.dev/serving/cmd/controller:0.14.0 10.23.241.97:5000/knative/serving/controller:0.14.0

docker tag gcr.io/knative-releases/knative.dev/serving/cmd/autoscaler-hpa:latest 10.23.241.97:5000/knative/serving/autoscaler-hpa:latest

docker tag gcr.io/knative-releases/knative.dev/serving/cmd/networking/istio:latest 10.23.241.97:5000/knative/serving/istio:latest

(2) Push the images

docker push 10.23.241.97:5000/knative/serving/activator:0.14.0

docker push 10.23.241.97:5000/knative/serving/autoscaler:0.14.0

docker push 10.23.241.97:5000/knative/serving/webhook:0.14.0

docker push 10.23.241.97:5000/knative/serving/controller:0.14.0

docker push 10.23.241.97:5000/knative/serving/autoscaler-hpa:latest

docker push 10.23.241.97:5000/knative/serving/istio:latest
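The six tag and push commands above follow one pattern: keep only the last path component of the gcr.io name and prefix it with the private registry. A sketch of the same steps as a loop; `REGISTRY`, `private_name`, `push_knative_images` and the `DRY_RUN` switch are hypothetical names.

```shell
#!/bin/sh
# Private name keeps only the last path component, e.g.
#   gcr.io/knative-releases/knative.dev/serving/cmd/networking/istio:latest
#   -> 10.23.241.97:5000/knative/serving/istio:latest
REGISTRY=${REGISTRY:-10.23.241.97:5000}
SRC=gcr.io/knative-releases/knative.dev/serving/cmd
KNATIVE_IMAGES="activator:0.14.0 autoscaler:0.14.0 webhook:0.14.0 controller:0.14.0 autoscaler-hpa:latest networking/istio:latest"

# With DRY_RUN=1 the docker commands are printed instead of executed.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

private_name() { echo "$REGISTRY/knative/serving/${1##*/}"; }

push_knative_images() {
  for img in $KNATIVE_IMAGES; do
    dst=$(private_name "$img")
    run docker tag "$SRC/$img" "$dst" && run docker push "$dst"
  done
}
```

Running `DRY_RUN=1 push_knative_images` first prints the twelve commands for review.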

Then edit /root/your102dex/kustomize/knative-install/base/deployment.yaml so that its image fields point to the images pushed to the private registry.
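The edit to deployment.yaml can be scripted with sed. A sketch, assuming each knative image line starts with `image: gcr.io/knative-releases/knative.dev/serving/cmd/<name>` followed by a tag (`:`) or digest (`@`); check the file before running it. `point_to_registry` is a hypothetical helper and needs GNU sed.

```shell
#!/bin/sh
SRC=gcr.io/knative-releases/knative.dev/serving/cmd

# point_to_registry FILE [REGISTRY]
# Rewrite each knative image line to REGISTRY/knative/serving/<name>:<tag>.
# "[@:]" after the name keeps "autoscaler" from matching "autoscaler-hpa".
point_to_registry() {
  file=$1; registry=${2:-10.23.241.97:5000}
  for pair in activator:0.14.0 autoscaler:0.14.0 webhook:0.14.0 \
              controller:0.14.0 autoscaler-hpa:latest networking/istio:latest; do
    name=${pair%:*}; tag=${pair##*:}
    sed -i "s#image: $SRC/$name[@:].*#image: $registry/knative/serving/${name##*/}:$tag#" "$file"
  done
}
```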

(3) Run the installation again

#kfctl apply -V -f kfctl_istio_dex.v1.0.2.yaml

(4) Archive the generated kustomize directory for later reuse

#tar -czf kustomizefile.tar.gz kustomize/

3 Using Kubeflow 1.0.2 (dex version)

(1) Log in to the Kubeflow UI

#kubectl get svc --all-namespaces (look up the NodePort of istio-ingressgateway, 31380 here)

http://10.23.241.97:31380/

Default username: [email protected]

Default password: 12341234

4 Troubleshooting

4.1 Deleting a namespace stuck in Terminating

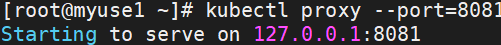

(1) Open a second terminal and run kubectl proxy, which serves the API locally on port 8081:

#kubectl proxy --port=8081

(2) Write the namespace definition to a file in JSON format.
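The notes stop after step (2). A commonly used way to finish is to clear `spec.finalizers` in the saved JSON and PUT it to the namespace's `finalize` subresource through the proxy from step (1). A sketch; `force_finalize_ns` and `strip_finalizers` are hypothetical helpers, and the sed expression assumes kubectl's pretty-printed JSON with `"kubernetes"` on its own line. Clearing finalizers skips cleanup of anything still left in the namespace, so use it only when the namespace is truly stuck.

```shell
#!/bin/sh
# Delete the "kubernetes" entry inside "finalizers": [ ... ] from JSON on stdin.
strip_finalizers() {
  sed '/"finalizers": \[/,/]/{/"kubernetes"/d;}'
}

# Dump namespace $1 to $1.json, write a copy without finalizers to
# $1.final.json, and PUT it to the finalize endpoint via the local proxy.
force_finalize_ns() {
  ns=$1
  kubectl get namespace "$ns" -o json > "$ns.json" || return 1
  strip_finalizers < "$ns.json" > "$ns.final.json"
  curl -s -H "Content-Type: application/json" -X PUT \
    --data-binary @"$ns.final.json" \
    "http://127.0.0.1:8081/api/v1/namespaces/$ns/finalize"
}
```

For example `force_finalize_ns kubeflow`, then check with `kubectl get ns`.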

4.2 Errors in a pod's YAML

After updating the YAML files under /root/your102dex/kustomize, delete the affected pod, for example:

#kubectl delete pod activator-7b47d44c6b-lf5xw -n knative-serving

The pod is then recreated automatically.

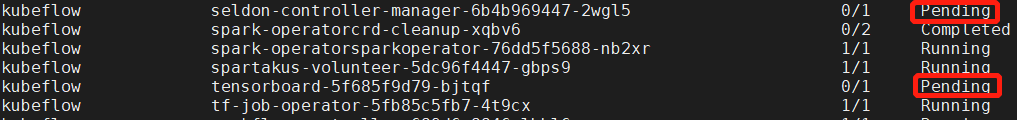

4.3 Pod stuck in Pending

Check the pod's status and events:

#kubectl describe pod tensorboard-5f685f9d79-bjtqf -n kubeflow

The Events section shows:

Warning FailedScheduling 76s (x199 over 5h21m) default-scheduler 0/2 nodes are available: 1 Insufficient cpu, 1 node(s) had taints that the pod didn't tolerate.
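The message reads as: one node lacks the CPU the pod requests, and the other node (the master) carries a taint the pod does not tolerate. A sketch of follow-up commands; on a small test cluster one option is to remove the master's NoSchedule taint so ordinary pods can run there. `show_scheduling_info`, `untaint_master` and the `DRY_RUN` switch are hypothetical; the default node name myuse1 is this guide's master.

```shell
#!/bin/sh
# With DRY_RUN=1 the kubectl commands are printed instead of executed.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Taints and requested CPU/memory appear in the "Taints:" and
# "Allocated resources:" sections of this output.
show_scheduling_info() {
  run kubectl describe nodes
}

# Test clusters only: remove the kubeadm master taint
# (the trailing "-" means "remove this taint").
untaint_master() {
  run kubectl taint nodes "${1:-myuse1}" node-role.kubernetes.io/master-
}
```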

4.4 Pod Evicted

Kubernetes limits the storage space used by containers through the ephemeral-storage resource.

(1) Details

The node was low on resource: ephemeral-storage. Container ml-pipeline-api-server was using 37158112Ki, which exceeds its request of 0.

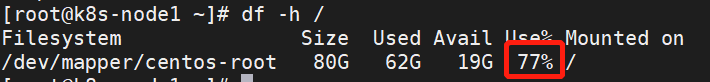

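Checking disk usage with df -h showed 77% remaining on the root filesystem; after some files were deleted, the pod started normally.

The likely cause was insufficient disk space on the root filesystem; freeing some space resolves it.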
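A quick way to watch for this condition is to check the usage of the filesystems behind /, /var/lib/docker and /var/lib/kubelet. A sketch; `disk_usage_pct`, `check_disk` and the 85% threshold are hypothetical choices. For reference, the kubelet's default hard eviction thresholds include `nodefs.available<10%` and `imagefs.available<15%`.

```shell
#!/bin/sh
# Print the used percentage (digits only) of the filesystem holding PATH,
# using POSIX df output so that each filesystem stays on one line.
disk_usage_pct() {
  df -P "${1:-/}" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# check_disk [LIMIT]: print "ok" or "WARN" per path against LIMIT percent.
check_disk() {
  limit=${1:-85}
  for p in / /var/lib/docker /var/lib/kubelet; do
    [ -e "$p" ] || continue
    pct=$(disk_usage_pct "$p")
    if [ "$pct" -ge "$limit" ]; then echo "WARN $p ${pct}%"; else echo "ok   $p ${pct}%"; fi
  done
}
```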

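(2) Analysis

What ephemeral-storage is and what it does.

ephemeral-storage exists to manage and schedule the short-lived (ephemeral) storage used by applications running in Kubernetes.

On every Kubernetes node, the kubelet root directory (/var/lib/kubelet by default) and the log directory (/var/log) reside on the node's primary partition. This partition is also consumed by Pods' emptyDir volumes, container logs, image layers and container writable layers. ephemeral-storage manages this primary partition: based on the requests and limits that applications declare, it schedules and constrains how much of the partition each application on the node consumes.

Running df -h shows many container-related mount points under /var/lib; all of these count as ephemeral-storage:

Docker Root Dir: /var/lib/docker (images pulled with docker are stored here)

kubelet --root-dir: /var/lib/kubelet by default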
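Requests and limits for ephemeral-storage are declared per container, like CPU and memory. A minimal Pod manifest written from a shell heredoc; the pod name, image and sizes are placeholders, and `write_pod_manifest` is a hypothetical helper. The scheduler uses the request to pick a node with enough allocatable ephemeral storage, and the kubelet evicts the pod if its usage exceeds the limit. The eviction message in (1) reports a request of 0 because ml-pipeline-api-server declared none.

```shell
#!/bin/sh
# Write a Pod manifest with ephemeral-storage requests and limits to $1.
write_pod_manifest() {
  cat > "${1:-ephemeral-demo.yaml}" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: ephemeral-demo
spec:
  containers:
  - name: app
    image: busybox
    command: ["sh", "-c", "sleep 3600"]
    resources:
      requests:
        ephemeral-storage: "1Gi"
      limits:
        ephemeral-storage: "2Gi"
EOF
}
```

For example `write_pod_manifest && kubectl apply -f ephemeral-demo.yaml`.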

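(3) Summary of causes

Root cause: the system disk used by Kubernetes ran out of capacity. Triggers:

1. Too many images, consuming too much ephemeral-storage;

2. All local PV storage is mounted in directories on the system disk;

3. Some containers do not use persistent storage, so logs and other output all go to ephemeral-storage.

(4) Solutions

Principle: ephemeral-storage should hold only the logs of the Kubernetes system itself, and the system disk must have ample capacity.

1. Store image layers on a non-system disk;

2. Mount local PV storage on a non-system disk (in production, do not use local disks; use cloud storage);

3. All containers must use persistent storage; do not write logs inside the container.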
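To verify where image layers and kubelet data actually live, compare the filesystems behind the Docker root dir and the kubelet root dir. A sketch; `show_storage_layout` is a hypothetical helper. Relocating them uses Docker's `data-root` option in /etc/docker/daemon.json and the kubelet `--root-dir` flag (for example via KUBELET_EXTRA_ARGS in /etc/sysconfig/kubelet); the target paths depend on your data disk.

```shell
#!/bin/sh
# Print "<path> -> <mount point> (<filesystem>)" for the system root, the
# Docker root dir and the kubelet root dir, to see which disk each uses.
show_storage_layout() {
  docker_root=$(docker info --format '{{.DockerRootDir}}' 2>/dev/null) || docker_root=/var/lib/docker
  for p in / "$docker_root" /var/lib/kubelet; do
    if [ -e "$p" ]; then
      df -P "$p" | awk -v p="$p" 'NR == 2 { print p " -> " $6 " (" $1 ")" }'
    fi
  done
}
```

If the Docker root dir and / report the same mount point, image layers are still on the system disk.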

5 Uninstall Kubeflow

#cd /root/your102dex

#kfctl delete -f kfctl_istio_dex.v1.0.2.yaml

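The kubeflow namespace then enters the Terminating state.

After about 3 minutes, the kubeflow namespace disappears.

The namespaces that remain belong to the other peripheral components installed for Kubeflow.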
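Instead of watching for the namespace by hand, the wait can be scripted. A sketch; `wait_ns_deleted` is a hypothetical helper, and the 300-second timeout and 10-second interval are arbitrary defaults. If the namespace stays in Terminating, see 4.1.

```shell
#!/bin/sh
# wait_ns_deleted NS [TIMEOUT] [INTERVAL]
# Poll until namespace NS no longer exists; fail after TIMEOUT seconds.
wait_ns_deleted() {
  ns=$1; timeout=${2:-300}; interval=${3:-10}
  elapsed=0
  while kubectl get namespace "$ns" >/dev/null 2>&1; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "namespace $ns still present after ${timeout}s" >&2
      return 1
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  echo "namespace $ns deleted"
}
```

For example `kfctl delete -f kfctl_istio_dex.v1.0.2.yaml && wait_ns_deleted kubeflow`.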