Set up Flink, version flink-1.9.2.

Set up Kafka, version kafka_2.11-2.0.0.

Adjust the versions in the pom file to match your own environment.

pom file

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.htdata</groupId>

<artifactId>kafka-mysql</artifactId>

<version>1.0-SNAPSHOT</version>

<packaging>jar</packaging>

<name>kafka-mysql</name>

<url>http://maven.apache.org</url>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<flink.version>1.9.2</flink.version>

</properties>

<dependencies>

<!--mysql-->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.47</version>

</dependency>

<!--flink-->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>3.0.0</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<artifactSet>

<excludes>

<exclude>com.google.code.findbugs:jsr305</exclude>

<exclude>org.slf4j:*</exclude>

<exclude>log4j:*</exclude>

</excludes>

</artifactSet>

<filters>

<filter>

<!-- Do not copy the signatures in the META-INF folder.

Otherwise, this might cause SecurityExceptions when using the JAR. -->

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

<transformers>

<transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">

<mainClass>com.htdata.flink.ReadFromKafka</mainClass>

</transformer>

</transformers>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

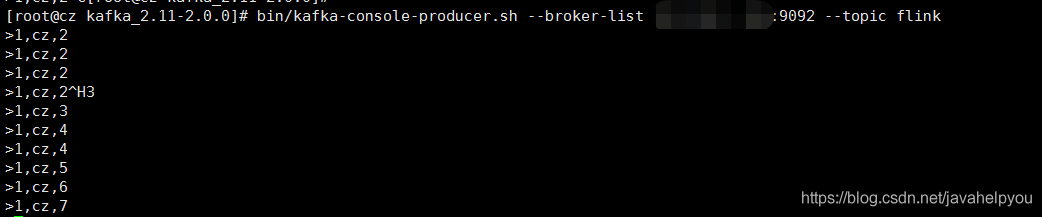

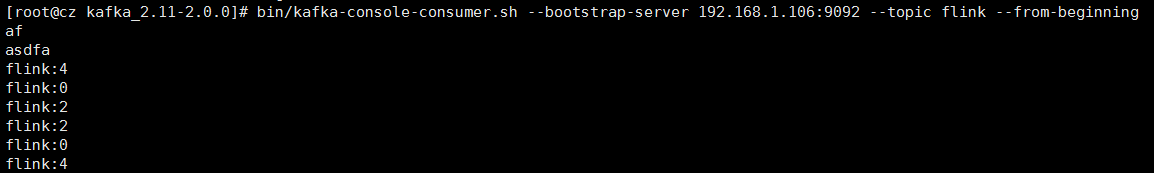

Write to Kafka

package com.htdata.flink;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.SourceFunction;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer;

import java.util.Properties;

public class WriteToKafka {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

Properties properties = new Properties();

properties.setProperty("bootstrap.servers", "your-ip:9092");

DataStream<String> stream = env.addSource(new SimpleStringGenerator());

stream.addSink(new FlinkKafkaProducer<String>("flink", new SimpleStringSchema(), properties)); // "flink" is the topic name

env.execute();

}

public static class SimpleStringGenerator implements SourceFunction<String> {

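private volatile boolean running = true;

@Override

public void run(SourceContext<String> ctx) throws Exception {

// Emit five messages, "flink:0" through "flink:4", stopping early if the job is cancelled.

for (int k = 0; k < 5 && running; k++) {

ctx.collect("flink:" + k);

}

}

@Override

public void cancel() {

running = false;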

}

}

}
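
One thing to watch: ReadFromKafka, shown further down, splits every message on commas and expects three fields (card, name, phone), so the "flink:N" strings produced above would not parse there. Below is a minimal sketch of producing lines in that shape. The class name PersonLines, its methods, and the sample names and phone values are all hypothetical and not part of the original project.

```java
import java.util.ArrayList;
import java.util.List;

public class PersonLines {

    // Hypothetical helper: joins the three fields into the comma-separated
    // form that the reader job splits on.
    public static String format(int card, String name, int phone) {
        return card + "," + name + "," + phone;
    }

    // Builds `count` sample lines with made-up names and phone values.
    public static List<String> samples(int count) {
        List<String> lines = new ArrayList<>();
        for (int k = 0; k < count; k++) {
            lines.add(format(k, "user" + k, 1000 + k));
        }
        return lines;
    }

    public static void main(String[] args) {
        for (String line : samples(5)) {
            System.out.println(line);
        }
    }
}
```

Inside SimpleStringGenerator.run, each of these lines could be passed to ctx.collect in place of the "flink:" strings.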

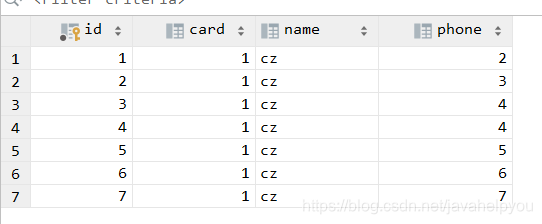

Read data from Kafka and write it to MySQL

Custom sink

package com.htdata.flink;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

public class MysqlSink extends

RichSinkFunction<Tuple3<Integer, String, Integer>> {

String username = "root";

String password = "123456";

String drivername = "com.mysql.jdbc.Driver";

String dburl = "jdbc:mysql://your-mysql-ip:3306/flink";

@Override

public void invoke(Tuple3<Integer, String, Integer> value) throws Exception {

Class.forName(drivername);

// The person table must already exist in MySQL with three columns: card, name, phone.

String sql = "replace into person(card,name,phone) values(?,?,?)";

// Opening a connection per record keeps the example short; a busier job would open it once in open() and release it in close().

// try-with-resources closes the statement and the connection even when the update throws.

try (Connection connection = DriverManager.getConnection(dburl, username, password);

PreparedStatement preparedStatement = connection.prepareStatement(sql)) {

preparedStatement.setInt(1, value.f0);

preparedStatement.setString(2, value.f1);

preparedStatement.setInt(3, value.f2);

preparedStatement.executeUpdate();

}

}

}

package com.htdata.flink;

import org.apache.commons.lang3.StringUtils;

import org.apache.flink.api.common.functions.FilterFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import java.util.Properties;

public class ReadFromKafka {

public static void main(String[] args) throws Exception {

// Set up the execution environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

Properties properties = new Properties();

// Address of the Kafka broker

properties.setProperty("bootstrap.servers", "your-ip:9092");

properties.setProperty("group.id", "flink_consumer");

// The first argument is the topic name

DataStream<String> stream=env.addSource(new FlinkKafkaConsumer<String>("flink", new SimpleStringSchema(), properties));

DataStream<Tuple3<Integer, String, Integer>> sourceStreamTra = stream.filter(new FilterFunction<String>() {

@Override

public boolean filter(String value) throws Exception {

return StringUtils.isNotBlank(value);

}

}).map(new MapFunction<String, Tuple3<Integer, String, Integer>>() {

private static final long serialVersionUID = 1L;

@Override

public Tuple3<Integer, String, Integer> map(String value)

throws Exception {

String[] fields = value.split(",");

return new Tuple3<Integer, String, Integer>(

Integer.valueOf(fields[0]), fields[1], Integer.valueOf(fields[2]));

}

});

sourceStreamTra.addSink(new MysqlSink());

env.execute("data to mysql start");

}

}
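
The map step above calls Integer.valueOf on the split fields directly, so a single malformed message (wrong field count, or a non-numeric card or phone) throws an exception and fails the job. Below is a sketch of a more defensive parse in plain Java. The names PersonLineParser and Person are hypothetical, and the trimming and skip-on-error behavior are assumptions, not something the original code does.

```java
import java.util.Optional;

public class PersonLineParser {

    // Hypothetical holder mirroring the (card, name, phone) columns.
    public static final class Person {
        public final int card;
        public final String name;
        public final int phone;

        Person(int card, String name, int phone) {
            this.card = card;
            this.name = name;
            this.phone = phone;
        }
    }

    // Returns the parsed record, or Optional.empty() when the line is null,
    // does not have exactly three comma-separated fields, or has a
    // non-numeric card or phone.
    public static Optional<Person> parse(String line) {
        if (line == null) {
            return Optional.empty();
        }
        // limit -1 keeps trailing empty fields so "1,tom," still counts as three.
        String[] fields = line.split(",", -1);
        if (fields.length != 3) {
            return Optional.empty();
        }
        try {
            int card = Integer.parseInt(fields[0].trim());
            int phone = Integer.parseInt(fields[2].trim());
            return Optional.of(new Person(card, fields[1].trim(), phone));
        } catch (NumberFormatException e) {
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        System.out.println(parse("1,tom,10086").isPresent()); // prints true
        System.out.println(parse("flink:0").isPresent());     // prints false
    }
}
```

In the job, a flatMap could call parse and emit a Tuple3 only when the result is present, so bad messages are skipped instead of stopping the stream.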