Contents

- 1. `torch.randn((dim1, dim2))`

- 2. `torch.linspace(start, end, steps=100, out=None)`

- 3. `torch.meshgrid(x, y)`

- 4. `torch.Tensor.expand(*sizes) → Tensor`

- 5. `torch.cat(tensors, dim=0, out=None) → Tensor` and `torch.stack(tensors, dim=0, out=None) → Tensor`

- 6. `torch.nn.functional.interpolate(input, scale_factor=..., mode=...)`

- 7. `torch.sum()`, `torch.ones()`

- 8. `torch.nonzero()`: find the indices of elements that meet a condition

- 9. `torch.clamp(input, min, max, *, out=None) → Tensor`

- 10. `torch.squeeze(input, dim)` and `torch.unsqueeze(input, dim)`

- 11. `torch.nn.functional.max_pool2d`, plus comparisons between tensors and converting bool values

- 12. `torch.Tensor.view(*shape) → Tensor`

- 13. `torch.cumsum(input, dim, *, dtype=None, out=None) → Tensor`

- 14. `torch.argsort(input, dim=-1, descending=False) → LongTensor`

- 14. `torch.mm(input, mat2, *, out=None) → Tensor`

- 15. `torch.triu(input, diagonal=0, *, out=None) → Tensor`

- 16. `torch.nn.functional.interpolate(input, size=None, scale_factor=None, mode='nearest', align_corners=None, recompute_scale_factor=None)`

- 17. `torch.zeros(*size, *, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False) → Tensor`

- 18. Enlarging a tensor directly by indexing in PyTorch

- 19. `torch.Tensor.repeat(*sizes) → Tensor`

- 20. Broadcasting, and drawing a mask while debugging in pdb

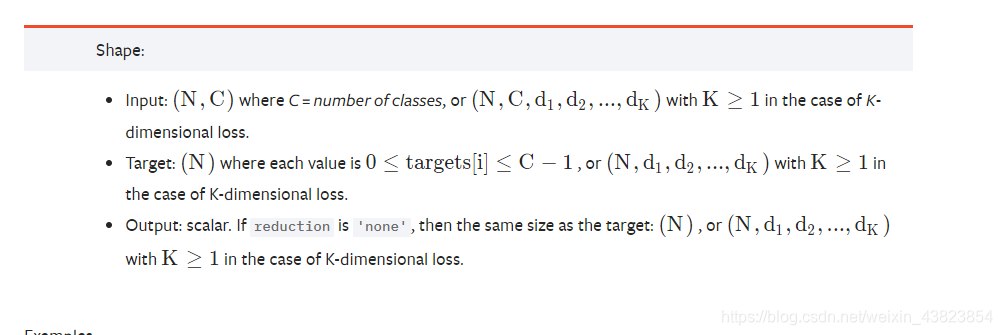

- 21. torch.nn.CrossEntropyLoss()

1. torch.randn((dim1, dim2))

import torch

# 1.torch.randn

s = torch.randn((5,2,3))
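`torch.randn` draws samples from the standard normal distribution (mean 0, variance 1). A minimal sketch, assuming a fixed seed via `torch.manual_seed` so that runs are reproducible; the shape can be passed either as one tuple or as separate integers:

```python
import torch

torch.manual_seed(0)  # fix the RNG so repeated runs give the same numbers

s1 = torch.randn((5, 2, 3))  # shape passed as a tuple
s2 = torch.randn(5, 2, 3)    # shape passed as separate ints: same resulting shape
print(s1.shape, s2.shape)    # torch.Size([5, 2, 3]) torch.Size([5, 2, 3])

# With many samples, the empirical mean and std approach 0 and 1
big = torch.randn(100_000)
print(big.mean().item(), big.std().item())
```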

2. torch.linspace(start, end, steps=100, out=None)

# 2.torch.linspace

a = torch.linspace(-1, 1, s.shape[-1]) # s.shape[-1] = 3 evenly spaced points on [-1, 1], endpoints included

b = torch.linspace(-1, 1, s.shape[-2]) # s.shape[-2] = 2 points: just the two endpoints

print('a:', a)

print('b:', b)

print('-'* 100)

a: tensor([-1., 0., 1.])

b: tensor([-1., 1.])
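Because `linspace` includes both endpoints, the spacing is `(end - start) / (steps - 1)`. A small check with 5 points on [0, 1]:

```python
import torch

# 5 evenly spaced points from 0 to 1 inclusive: step = (1 - 0) / (5 - 1) = 0.25
pts = torch.linspace(0, 1, steps=5)
print(pts)  # tensor([0.0000, 0.2500, 0.5000, 0.7500, 1.0000])

step = (pts[1] - pts[0]).item()
print(step)  # 0.25
```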

3. torch.meshgrid(x, y)

# a: tensor([-1., 0., 1.])

# b: tensor([-1., 1.])

# 3.torch.meshgrid

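a, b = torch.meshgrid(a, b, indexing='ij') # both results have shape [3, 2] (len(a) x len(b)) but hold different values; 'ij' is the default, passing it explicitly needs PyTorch >= 1.10

# i.e. a and b are each expanded to len(a) x len(b). This turns 1-D coordinates into 2-D grid coordinates: the first output is constant along dim 1, the second is constant along dim 0.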

print(a.size(), b.size())

print('meshgrid a:', a)

print('meshgrid b:', b)

print('-'* 100)

torch.Size([3, 2]) torch.Size([3, 2])

meshgrid a: tensor([[-1., -1.],

[ 0., 0.],

[ 1., 1.]])

meshgrid b: tensor([[-1., 1.],

[-1., 1.],

[-1., 1.]])
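Since PyTorch 1.10, `torch.meshgrid` accepts an `indexing` argument: `'ij'` (matrix indexing, the behavior shown above) and `'xy'` (Cartesian indexing, NumPy's default) produce transposed grids, and passing it explicitly also silences the warning newer versions print when it is omitted. A sketch comparing the two and stacking the grids into coordinate pairs:

```python
import torch

x = torch.linspace(-1, 1, 3)  # 3 values
y = torch.linspace(-1, 1, 2)  # 2 values

# 'ij': output shape [len(x), len(y)]; xx varies along dim 0, yy along dim 1
xx, yy = torch.meshgrid(x, y, indexing="ij")
print(xx.shape)  # torch.Size([3, 2])

# 'xy': output shape [len(y), len(x)]; these grids are the transposes of the 'ij' ones
gx, gy = torch.meshgrid(x, y, indexing="xy")
print(gx.shape)  # torch.Size([2, 3])

# Stack into an [H, W, 2] grid of (x, y) coordinate pairs
coords = torch.stack((xx, yy), dim=-1)
print(coords.shape)  # torch.Size([3, 2, 2])
```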

4. torch.Tensor.expand(*sizes) → Tensor

# 4.torch.expand

a = a.expand(s.shape[0], 1, -1, -1) # -1 for the 3rd and 4th sizes keeps a's own dims (3, 2); the new leading dims 5 and 1 are prepended

b = b.expand(s.shape[0], 1, -1, -1) # [5, 1, 3, 2], same as above

print(a.size())

print(b.size())

torch.Size([5, 1, 3, 2])

torch.Size([5, 1, 3, 2])
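`expand` does not copy data: it returns a view whose expanded dimensions have stride 0, and only size-1 dimensions (or new leading dimensions) can be expanded. A short check:

```python
import torch

base = torch.tensor([[1.0], [2.0], [3.0]])  # shape [3, 1]
e = base.expand(3, 4)                        # size-1 dim 1 expanded to 4

print(e.shape)                          # torch.Size([3, 4])
print(e.stride())                       # (1, 0): stride 0 along the expanded dimension
print(e.data_ptr() == base.data_ptr())  # True: same storage, no copy

raised = False
try:
    base.expand(6, 4)
except RuntimeError:
    raised = True  # dim 0 has size 3, not 1, so it cannot be expanded
print(raised)      # True

# For an independent, writable copy, clone the expanded view
e_copy = e.clone()
```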

5. torch.cat(tensors, dim=0, out=None) → Tensor and torch.stack(tensors, dim=0, out=None) → Tensor

# 5.torch.cat()

cat_ab = torch.cat((a,b), dim=1) # cat requires all other dimensions to match; concatenate along dim=1

print("cat_ab:", cat_ab.size()) # [5, 2, 3, 2]

>>> a = torch.rand((2, 3))

>>> b = torch.rand((2, 3))

>>> c = torch.cat((a, b))

>>> a.size(), b.size(), c.size()

(torch.Size([2, 3]), torch.Size([2, 3]), torch.Size([4, 3]))

>>> a = torch.rand((2, 3))

>>> b = torch.rand((2, 3))

>>> c = torch.stack((a, b))

>>> a.size(), b.size(), c.size()

(torch.Size([2, 3]), torch.Size([2, 3]), torch.Size([2, 2, 3]))
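The difference in one line: `cat` joins along an existing dimension, while `stack` inserts a new one. `stack` is equivalent to unsqueezing each input at `dim` and then concatenating:

```python
import torch

a = torch.rand((2, 3))
b = torch.rand((2, 3))

stacked = torch.stack((a, b), dim=0)                          # [2, 2, 3]
via_cat = torch.cat((a.unsqueeze(0), b.unsqueeze(0)), dim=0)  # same result
print(torch.equal(stacked, via_cat))  # True

# Along dim=1: cat gives [2, 6], stack gives [2, 2, 3]
print(torch.cat((a, b), dim=1).shape)    # torch.Size([2, 6])
print(torch.stack((a, b), dim=1).shape)  # torch.Size([2, 2, 3])
```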

6. torch.nn.functional.interpolate(input, scale_factor=..., mode=...)

# 6.torch.nn.functional.interpolate

cat_ab = torch.nn.functional.interpolate(cat_ab, scale_factor=2, mode="bilinear") # [N, C, H, W] --> [N, C, 2H, 2W]

print("interpolate cat_ab:", cat_ab.size()) # [5, 2, 6, 4]

cat_ab: torch.Size([5, 2, 3, 2])

interpolate cat_ab: torch.Size([5, 2, 6, 4])
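With `mode='nearest'`, each input pixel is simply copied into a `scale_factor × scale_factor` block, which makes the effect easy to verify on a tiny input. As above, `interpolate` expects batch and channel dimensions, i.e. a 4-D `[N, C, H, W]` tensor for 2-D resizing:

```python
import torch
import torch.nn.functional as F

x = torch.tensor([[1.0, 2.0],
                  [3.0, 4.0]]).view(1, 1, 2, 2)  # [N=1, C=1, H=2, W=2]

up = F.interpolate(x, scale_factor=2, mode="nearest")  # -> [1, 1, 4, 4]
print(up[0, 0])
# tensor([[1., 1., 2., 2.],
#         [1., 1., 2., 2.],
#         [3., 3., 4., 4.],
#         [3., 3., 4., 4.]])
```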

7. torch.sum(), torch.ones()

import torch

a = torch.ones((3,2)) # torch.Size([3, 2])

b = a.sum(dim=0) # sum over dim 0 (down the rows)

b.size() # torch.Size([2])

c = a.sum(dim=1) # sum over dim 1 (across the columns)

c.size() # torch.Size([3])
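`keepdim=True` keeps the reduced dimension with size 1, which is handy when the result has to broadcast back against the original tensor, for example when normalizing each row:

```python
import torch

a = torch.ones((3, 2))

print(a.sum(dim=0).shape)                # torch.Size([2])
print(a.sum(dim=1, keepdim=True).shape)  # torch.Size([3, 1])
print(a.sum())                           # tensor(6.): sum over all elements

# Normalize each row to sum to 1; [3, 2] / [3, 1] broadcasts
row_norm = a / a.sum(dim=1, keepdim=True)
print(row_norm)  # every entry is 0.5
```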

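8. torch.nonzero(): find the indices of elements that meet a condition

Pattern: (condition on x).nonzero() returns an [n, x.dim()] tensor with one row of indices for each element where the condition is True.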

x=torch.arange(12).view(3,4)

x

tensor([[ 0, 1, 2, 3],

[ 4, 5, 6, 7],

[ 8, 9, 10, 11]])

(x>4).nonzero()

tensor([[1, 1],

[1, 2],

[1, 3],

[2, 0],

[2, 1],

[2, 2],

[2, 3]])
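`nonzero(as_tuple=True)` returns one index tensor per dimension instead of an `[n, ndim]` matrix, and that tuple can index the original tensor directly. `torch.where(cond)` with a single argument returns the same tuple:

```python
import torch

x = torch.arange(12).view(3, 4)

rows, cols = (x > 4).nonzero(as_tuple=True)
print(rows)           # tensor([1, 1, 1, 2, 2, 2, 2])
print(cols)           # tensor([1, 2, 3, 0, 1, 2, 3])
print(x[rows, cols])  # the matching values 5 through 11

# torch.where with only a condition is equivalent to nonzero(as_tuple=True)
r2, c2 = torch.where(x > 4)
print(torch.equal(rows, r2) and torch.equal(cols, c2))  # True
```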

9. torch.clamp(input, min, max, *, out=None) → Tensor

>>> a = torch.randn(4)

>>> a

tensor([-1.7120, 0.1734, -0.0478, -0.0922])

>>> torch.clamp(a, min=-0.5, max=0.5)

tensor([-0.5000, 0.1734, -0.0478, -0.0922])

>>> a = torch.randn(4)

>>> a

tensor([-0.0299, -2.3184, 2.1593, -0.8883])

>>> torch.clamp(a, min=0.5)

tensor([ 0.5000, 0.5000, 2.1593, 0.5000])

>>> a = torch.randn(4)

>>> a

tensor([ 0.7753, -0.4702, -0.4599, 1.1899])

>>> torch.clamp(a, max=0.5)

tensor([ 0.5000, -0.4702, -0.4599, 0.5000])
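Clamping with only `min=0` is the same as ReLU, and the in-place variant `clamp_` modifies the tensor itself instead of returning a new one. A quick check:

```python
import torch

x = torch.tensor([-2.0, -0.5, 0.0, 0.5, 2.0])

print(torch.equal(torch.clamp(x, min=0), torch.relu(x)))  # True

y = x.clone()
y.clamp_(-1, 1)  # in place: values outside [-1, 1] are clipped to the bounds
print(y)         # -1, -0.5, 0, 0.5, 1
```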

10. torch.squeeze(input, dim) and torch.unsqueeze(input, dim)

Adds or removes dimensions of size 1.

>>> x = torch.zeros(2, 1, 2, 1, 2)

>>> x.size()

torch.Size([2, 1, 2, 1, 2])

>>> y = torch.squeeze(x)

>>> y.size()

torch.Size([2, 2, 2])

>>> y = torch.squeeze(x, 0)

>>> y.size()

torch.Size([2, 1, 2, 1, 2])

>>> y = torch.squeeze(x, 1)

>>> y.size()

torch.Size([2, 2, 1, 2])

>>> x = torch.tensor([1, 2, 3, 4])

>>> torch.unsqueeze(x, 0)

tensor([[ 1, 2, 3, 4]])

>>> torch.unsqueeze(x, 1)

tensor([[ 1],

[ 2],

[ 3],

[ 4]])
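A common use is adding or removing a batch dimension around a single sample. Negative dims count from the end, and `squeeze(dim)` leaves the tensor unchanged when that dimension is not of size 1 (as with `squeeze(x, 0)` above). A short sketch:

```python
import torch

img = torch.zeros(3, 32, 32)   # one image: [C, H, W]
batch = img.unsqueeze(0)       # add a batch dim: [1, 3, 32, 32]
print(batch.shape)

back = batch.squeeze(0)        # remove it again: [3, 32, 32]
print(back.shape)

col = torch.tensor([1, 2, 3]).unsqueeze(-1)  # -1 appends a dim at the end: [3, 1]
print(col.shape)               # torch.Size([3, 1])
print(col.squeeze(-1).shape)   # torch.Size([3])
```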

11. torch.nn.functional.max_pool2d, plus comparisons between tensors and converting bool values

import torch

import torch.nn as nn

heat = torch.tensor([[[[-0.9752, -2.1402],

[-0.67, -1.0918],

[ -1.5324, 0.7099]],

[[-0.3685, -0.1001],

[-0.8646, -1.4367],

[ 0.9132, -1.2989]]]])

kernel = 2

ans = nn.functional.max_pool2d(

heat, (kernel, kernel), stride=1, padding=1)

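ans.size() # torch.Size([1, 2, 4, 3]): with padding=1, a 2x2 window produces one extra row and one extra column

#1. Crop ans back to the shape of heat, [1, 2, 3, 2]

ans[:, :, :-1, :-1]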

'''

tensor([[[[-0.9752, -0.9752],

[-0.6700, -0.6700],

[-0.6700, 0.7099]],

[[-0.3685, -0.1001],

[-0.3685, -0.1001],

[ 0.9132, 0.9132]]]])

'''

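t1 = (ans[:, :, :-1, :-1] == heat) # True where heat equals the max of its 2x2 window (the pixel plus its neighbours above and to the left)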

'''

tensor([[[[ True, False],

[ True, False],

[False, True]],

[[ True, True],

[False, False],

[ True, False]]]])

'''

#2.

t2 = (ans[:, :, :-1, :-1] == heat).float() # bool -> float: True becomes 1., False becomes 0.

'''

tensor([[[[1., 0.],

[1., 0.],

[0., 1.]],

[[1., 1.],

[0., 0.],

[1., 0.]]]])

'''
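The comparison above is the basis of a common heat-map trick (CenterNet-style decoders use it, for example): max-pool with a window centered on each pixel, then keep only the positions whose value equals the pooled value, i.e. the local maxima. A minimal sketch, assuming a 3x3 window (kernel 3, stride 1, padding 1 keeps H and W unchanged, so no cropping is needed); the helper name `local_peaks` is hypothetical:

```python
import torch
import torch.nn.functional as F

def local_peaks(heat: torch.Tensor, kernel: int = 3) -> torch.Tensor:
    """Hypothetical helper: zero out every value that is not the maximum
    of its kernel x kernel neighbourhood. heat has shape [N, C, H, W]."""
    pad = (kernel - 1) // 2  # an odd kernel with this padding keeps H and W unchanged
    hmax = F.max_pool2d(heat, kernel, stride=1, padding=pad)
    keep = (hmax == heat).float()  # 1.0 at local maxima, 0.0 elsewhere
    return heat * keep

heat = torch.tensor([[[[0.9, 0.1, 0.0, 0.1, 0.2],
                       [0.1, 0.2, 0.1, 0.3, 0.1],
                       [0.0, 0.1, 0.2, 0.1, 0.8]]]])
out = local_peaks(heat)
print(out[0, 0])  # only 0.9 at (0, 0) and 0.8 at (2, 4) survive
```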

Indexing with the result of a condition (a boolean mask)

a = torch.tensor([[2, 1, 0], [1, 2, 2]])

ind = (a > 0.1)

'''

tensor([[ True, True, False],

[ True, True, True]])

'''

b = torch.ones((2,3))

c = b[ind] # elements of b where ind is True, returned as a 1-D tensor

'''

tensor([1., 1., 1., 1., 1.])

'''
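A boolean mask can also appear on the left-hand side of an assignment, which writes only the selected positions; the mask must have the same shape as the dimensions it indexes. A small sketch:

```python
import torch

a = torch.tensor([[2, 1, 0], [1, 2, 2]])
b = torch.ones((2, 3))

mask = a > 1            # True where a > 1
print(b[mask].shape)    # torch.Size([3]): one entry per True
b[mask] = 0.0           # in-place write at the True positions only
print(b)
# tensor([[0., 1., 1.],
#         [1., 0., 0.]])

# A mask over only the first dimension selects whole rows
rows = torch.tensor([True, False])
print(b[rows].shape)    # torch.Size([1, 3])
```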

12. torch.Tensor.view(*shape) → Tensor

>>> x = torch.randn(4, 4)

>>> x.size()

torch.Size([4, 4])

>>> y = x.view(16)

>>> y.size()

torch.Size([16])

>>> z = x.view(-1, 8) # the size -1 is inferred from other dimensions

>>> z.size()

torch.Size([2, 8])

>>> a = torch.randn(1, 2, 3, 4)

>>> a.size()

torch.Size([1, 2, 3, 4])

>>> b = a.transpose(1, 2) # Swaps 2nd and 3rd dimension

>>> b.size()

torch.Size([1, 3, 2, 4])

>>> c = a.view(1, 3, 2, 4) # Does not change tensor layout in memory

>>> c.size()

torch.Size([1, 3, 2, 4])

>>> torch.equal(b, c)

False
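`view` never copies, so it needs a memory layout compatible with the new shape. A transposed tensor such as `b` above is not contiguous, and calling `view` on it to merge dimensions fails; `reshape` (or `.contiguous().view(...)`) handles that case, copying only when necessary:

```python
import torch

a = torch.randn(1, 2, 3, 4)
b = a.transpose(1, 2)           # [1, 3, 2, 4], a non-contiguous view of a
print(b.is_contiguous())        # False

failed = False
try:
    b.view(1, 24)
except RuntimeError:
    failed = True               # view cannot merge these dims without a copy
print(failed)                   # True

flat1 = b.reshape(1, 24)             # works: copies because b is non-contiguous
flat2 = b.contiguous().view(1, 24)   # explicit copy, then view
print(torch.equal(flat1, flat2))     # True
```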

13. torch.cumsum(input, dim, *, dtype=None, out=None) → Tensor

a = torch.randn((5,2))

a

tensor([[-0.9153, 0.7133],

[ 0.0106, 1.4720],

[ 0.5626, -0.4366],

[ 0.3931, -0.4698],

[-0.5168, 0.0720]])

a.cumsum(0)

tensor([[-0.9153, 0.7133],

[-0.9047, 2.1853],

[-0.3421, 1.7487],

[ 0.0510, 1.2789],

[-0.4658, 1.3510]])

a.cumsum(1)

tensor([[-0.9153, -0.2020],

[ 0.0106, 1.4827],

[ 0.5626, 0.1259],

[ 0.3931, -0.0766],

[-0.5168, -0.4448]])
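With integers, the running total is easy to check by hand: each output element is the sum of all inputs up to and including that position along `dim`.

```python
import torch

v = torch.tensor([1, 2, 3, 4])
print(v.cumsum(0))  # running sum: 1, 3, 6, 10

m = torch.tensor([[1, 2, 3],
                  [4, 5, 6]])
print(m.cumsum(dim=0))  # column-wise running sum: [[1, 2, 3], [5, 7, 9]]
print(m.cumsum(dim=1))  # row-wise running sum:    [[1, 3, 6], [4, 9, 15]]
```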

14. torch.argsort(input, dim=-1, descending=False) → LongTensor

Example:

>>> a = torch.randn(4, 4)

>>> a

tensor([[ 0.0785, 1.5267, -0.8521, 0.4065],

[ 0.1598, 0.0788, -0.0745, -1.2700],

[ 1.2208, 1.0722, -0.7064, 1.2564],

[ 0.0669, -0.2318, -0.8229, -0.9280]])

>>> torch.argsort(a, dim=1)

tensor([[2, 0, 3, 1],

[3, 2, 1, 0],

[2, 1, 0, 3],

[3, 2, 1, 0]])
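`argsort` returns indices, not values. With `descending=True` the order is reversed, and `torch.gather` with those indices recovers the sorted values, the same ones `torch.sort` returns:

```python
import torch

a = torch.tensor([[0.3, 0.1, 0.2],
                  [0.5, 0.9, 0.7]])

idx = torch.argsort(a, dim=1, descending=True)
print(idx)  # tensor([[0, 2, 1], [1, 2, 0]])

sorted_vals = torch.gather(a, 1, idx)  # pick values in the sorted order
values, indices = torch.sort(a, dim=1, descending=True)
print(torch.equal(sorted_vals, values), torch.equal(idx, indices))  # True True
```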

14. torch.mm(input, mat2, *, out=None) → Tensor

>>> mat1 = torch.randn(2, 3)

>>> mat2 = torch.randn(3, 3)

>>> torch.mm(mat1, mat2)

tensor([[ 0.4851, 0.5037, -0.3633],

[-0.0760, -3.6705, 2.4784]])
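`torch.mm` only accepts 2-D matrices and does not broadcast. For batched inputs, `torch.bmm` (3-D inputs with equal batch sizes) or `torch.matmul` / the `@` operator (which broadcasts batch dimensions) are the usual choices:

```python
import torch

mat1 = torch.randn(2, 3)
mat2 = torch.randn(3, 3)
print(torch.mm(mat1, mat2).shape)                         # [n, m] x [m, p] -> [n, p]: torch.Size([2, 3])
print(torch.allclose(torch.mm(mat1, mat2), mat1 @ mat2))  # True

batch1 = torch.randn(4, 2, 3)
batch2 = torch.randn(4, 3, 5)
print(torch.bmm(batch1, batch2).shape)  # torch.Size([4, 2, 5])

# matmul broadcasts: one [3, 5] matrix is applied to every item in the batch
shared = torch.randn(3, 5)
print(torch.matmul(batch1, shared).shape)  # torch.Size([4, 2, 5])
```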

15. torch.triu(input, diagonal=0, *, out=None) → Tensor

>>> a = torch.randn(4, 4)

>>> a

tensor([[ 0.5144, 0.5091, -0.3698, 0.3694],

[-1.1344, -0.2793, 1.6651, -1.3632],

[-0.3397, -0.1468, -0.0300, -1.1186],

[-2.1449, 1.3087, -0.1409, 2.4678]])

>>>

>>> torch.triu(a)

tensor([[ 0.5144, 0.5091, -0.3698, 0.3694],

[ 0.0000, -0.2793, 1.6651, -1.3632],

[ 0.0000, 0.0000, -0.0300, -1.1186],

[ 0.0000, 0.0000, 0.0000, 2.4678]])

>>>

>>> torch.triu(a, diagonal=1)

tensor([[ 0.0000, 0.5091, -0.3698, 0.3694],

[ 0.0000, 0.0000, 1.6651, -1.3632],

[ 0.0000, 0.0000, 0.0000, -1.1186],

[ 0.0000, 0.0000, 0.0000, 0.0000]])

>>>

>>> torch.triu(a, diagonal=-1)

tensor([[ 0.5144, 0.5091, -0.3698, 0.3694],

[-1.1344, -0.2793, 1.6651, -1.3632],

[ 0.0000, -0.1468, -0.0300, -1.1186],

[ 0.0000, 0.0000, -0.1409, 2.4678]])

>>>

>>> torch.triu(a, diagonal=-2)

tensor([[ 0.5144, 0.5091, -0.3698, 0.3694],

[-1.1344, -0.2793, 1.6651, -1.3632],

[-0.3397, -0.1468, -0.0300, -1.1186],

[ 0.0000, 1.3087, -0.1409, 2.4678]])

>>>

>>> torch.triu(a, diagonal=-3)

tensor([[ 0.5144, 0.5091, -0.3698, 0.3694],

[-1.1344, -0.2793, 1.6651, -1.3632],

[-0.3397, -0.1468, -0.0300, -1.1186],

[-2.1449, 1.3087, -0.1409, 2.4678]])
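A frequent use of `triu` is building a causal (look-ahead) mask for attention: with `diagonal=1` it marks every position above the main diagonal, i.e. the later positions each row must not see. A sketch, assuming a sequence length of 4 and dummy all-zero scores that are masked with `-inf` before a softmax:

```python
import torch

seq_len = 4
# 1s above the main diagonal mark positions j > i that row i must not attend to
causal = torch.triu(torch.ones(seq_len, seq_len), diagonal=1).bool()
print(causal.int())  # row i is 0 up to column i and 1 after it

scores = torch.zeros(seq_len, seq_len)  # dummy attention scores
scores = scores.masked_fill(causal, float("-inf"))
weights = scores.softmax(dim=-1)  # row i spreads its weight evenly over positions 0..i
print(weights[1])                 # 0.5 on positions 0 and 1, 0 elsewhere
```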

16. torch.nn.functional.interpolate(input, size=None, scale_factor=None, mode='nearest', align_corners=None, recompute_scale_factor=None)

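import torch.nn.functional as F

# Excerpt from a model's forward pass: self.seg_num_grids, idx, sa_h and sa_w are defined in that code; size= gives the exact output (H, W)

ins_feat = F.interpolate(ins_feat, size=(self.seg_num_grids[idx] * sa_h, self.seg_num_grids[idx] * sa_w), mode='bilinear')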
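The snippet above passes an explicit output `size` instead of a `scale_factor`; the two are alternatives, and passing both is rejected. A self-contained sketch, assuming an arbitrary `[2, 8, 12, 12]` feature map resized to 5 x 7:

```python
import torch
import torch.nn.functional as F

feat = torch.randn(2, 8, 12, 12)  # [N, C, H, W]

# Resize to an exact (H, W); the target need not be an integer multiple of the input
resized = F.interpolate(feat, size=(5, 7), mode="bilinear", align_corners=False)
print(resized.shape)  # torch.Size([2, 8, 5, 7])

rejected = False
try:
    F.interpolate(feat, size=(5, 7), scale_factor=2)
except ValueError:
    rejected = True  # only one of size / scale_factor may be given
print(rejected)      # True
```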

17. torch.zeros(*size, *, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False) → Tensor

>>> torch.zeros(2, 3)

tensor([[ 0., 0., 0.],

[ 0., 0., 0.]])

>>> torch.zeros(5)

tensor([ 0., 0., 0., 0., 0.])
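`torch.zeros` takes the shape as separate ints or as a tuple, plus an optional `dtype` and `device`. `torch.zeros_like` copies shape, dtype and device from an existing tensor, which avoids mismatches later:

```python
import torch

z = torch.zeros((2, 3), dtype=torch.long)  # tuple form, integer dtype
print(z.dtype)                             # torch.int64

ref = torch.randn(4, 5, dtype=torch.float64)
z2 = torch.zeros_like(ref)                 # same shape/dtype/device as ref
print(z2.shape, z2.dtype)                  # torch.Size([4, 5]) torch.float64
```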

18. Enlarging a tensor directly by indexing in PyTorch

a = torch.randn((4,5,5))

b = torch.ones(10, dtype=torch.long) # note: an index tensor must have an integer dtype (e.g. long) or be bool; a float tensor cannot be used as an index

c = a[b]

c.size()

------------------------------------------------------------------------

Output (a):

tensor([[[-1.0732, -0.7315, -0.4887, 1.3547, -0.8984],

[-1.3814, 0.7787, 0.4166, -0.4355, -0.4207],

[-0.1047, 2.1872, 0.3320, -0.4274, -0.2006],

[-0.1602, 0.1737, 1.0411, 0.8609, 0.3690],

[ 0.2870, -0.4992, -0.4487, 1.0585, 0.2058]],

[[ 0.4890, -0.0888, -0.2893, -0.3513, 2.1535],

[ 0.2390, -1.3600, 0.2401, 1.2936, -1.7246],

[ 0.3906, 0.9688, -0.1664, 1.9916, -0.5115],

[ 0.4018, 0.0544, -1.4237, -0.3007, 0.1592],

[ 1.2119, 1.0150, 2.2753, 0.2863, -2.0256]],

[[ 0.1236, 0.5212, -0.2304, -0.0847, 0.7568],

[ 0.3000, -0.2914, -0.9238, 0.2331, -0.3673],

[ 0.0475, -0.8758, 0.8781, 0.4415, 0.2434],

[ 0.4388, -2.3196, 1.0323, 0.8560, -1.8282],

[-2.3422, -0.0589, 0.7717, -0.1974, 0.2317]],

[[ 1.5203, -0.4977, -1.0504, -0.0240, -0.1234],

[ 0.4833, -0.4155, 1.3780, 0.2443, 0.8457],

[-0.4753, -0.3182, 0.1281, -0.1161, 0.8857],

[ 1.8742, 0.5412, -0.8276, 1.2642, 0.3902],

[ 2.4383, 0.9594, 0.0306, -0.1631, 0.8616]]])

------------------------------------------------------------------------

b:

tensor([1, 1, 1, 1, 1, 1, 1, 1, 1, 1])

------------------------------------------------------------------------

c.size()

torch.Size([10, 5, 5])

Every entry of b is 1, so c[i] equals a[1] for every i: c consists of 10 copies of the second 5 x 5 block of a printed above.
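Indexing with an integer tensor gathers slices along dim 0, so any index pattern (not only all ones) can enlarge or reorder a tensor: `c[i]` is `a[idx[i]]`. A sketch with a hypothetical index `[0, 0, 3, 1]`; `torch.index_select` along dim 0 gives the same result:

```python
import torch

a = torch.randn(4, 5, 5)
idx = torch.tensor([0, 0, 3, 1])  # long indices; repeats are allowed

c = a[idx]                        # shape [4, 5, 5]: c[i] == a[idx[i]]
print(c.shape)
print(torch.equal(c[1], a[0]), torch.equal(c[2], a[3]))  # True True

# Equivalent explicit form
print(torch.equal(c, torch.index_select(a, 0, idx)))     # True

float_rejected = False
try:
    a[torch.tensor([0.0, 1.0])]
except IndexError:
    float_rejected = True  # float tensors are not valid indices
print(float_rejected)      # True
```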

19. torch.Tensor.repeat(*sizes) → Tensor

>>> x = torch.tensor([1, 2, 3])

>>> x.repeat(4, 2) # x (shape [3]) is treated as [1, 3]; the result shape is [1*4, 3*2] = [4, 6]

tensor([[ 1, 2, 3, 1, 2, 3],

[ 1, 2, 3, 1, 2, 3],

[ 1, 2, 3, 1, 2, 3],

[ 1, 2, 3, 1, 2, 3]])

>>> x.repeat(4, 2, 1).size() # x is treated as [1, 1, 3]; the result shape is [4, 2, 3]

torch.Size([4, 2, 3])
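`repeat` copies data, whereas `expand` (section 4) only creates a view and works only on size-1 dimensions; `torch.repeat_interleave` repeats each element in place instead of tiling the whole tensor. A comparison:

```python
import torch

x = torch.tensor([1, 2, 3])

print(x.repeat(2))             # tile the whole tensor: 1, 2, 3, 1, 2, 3
print(x.repeat_interleave(2))  # repeat each element:   1, 1, 2, 2, 3, 3

col = x.view(3, 1)
tiled = col.repeat(1, 4)       # [3, 4], newly allocated memory
viewed = col.expand(3, 4)      # [3, 4], no copy (stride 0 along dim 1)
print(torch.equal(tiled, viewed))  # True: same values
print(tiled.data_ptr() == col.data_ptr(), viewed.data_ptr() == col.data_ptr())  # False True
```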

20. Broadcasting, and drawing a mask while debugging in pdb

# a: [5, 1], b: [1, 10] -> (a - b).size() is [5, 10]. This is broadcasting: size-1 dims are stretched to match the other operand.

a = torch.randn((5,1)) # last dim is 1

b = torch.randn((1,10)) # first dim is 1

print(a)

print(b)

c = a - b

print(c.size())

print(c)

tensor([[-0.7045],

[ 1.2742],

[ 0.4087],

[ 0.4675],

[ 0.2742]])

tensor([[ 0.0117, 1.2361, 0.8163, 0.1032, 1.3831, 1.3738, 1.4786, -0.4542,

0.1732, 3.4909]])

torch.Size([5, 10])

tensor([[-0.7162, -1.9407, -1.5209, -0.8078, -2.0877, -2.0783, -2.1831, -0.2504,

-0.8778, -4.1955],

[ 1.2625, 0.0380, 0.4578, 1.1709, -0.1090, -0.0996, -0.2044, 1.7283,

1.1009, -2.2168],

[ 0.3971, -0.8274, -0.4076, 0.3055, -0.9744, -0.9651, -1.0698, 0.8629,

0.2355, -3.0822],

[ 0.4558, -0.7687, -0.3488, 0.3643, -0.9156, -0.9063, -1.0111, 0.9216,

0.2943, -3.0235],

[ 0.2625, -0.9620, -0.5422, 0.1709, -1.1089, -1.0996, -1.2044, 0.7283,

0.1010, -3.2168]])

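# These also work:

a = torch.randn((2,5,1))

b = torch.randn((2,1,10))

c = a - b # [2, 5, 1] and [2, 1, 10] -> [2, 5, 10]

a = torch.randn((5,1))

b = torch.randn((5,10))

c = a - b # [5, 1] and [5, 10] -> [5, 10]

# These raise a RuntimeError when computing a - b:

a = torch.randn((5,2))

b = torch.randn((1,10)) # last dims 2 and 10 differ and neither is 1

a = torch.randn((5,1))

b = torch.randn((2,10)) # first dims 5 and 2 differ and neither is 1

a = torch.randn((5,1))

b = torch.randn((10,1)) # first dims 5 and 10 differ and neither is 1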
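The rule behind these cases: shapes are aligned from the last dimension, and each pair of sizes must be equal or one of them must be 1 (missing leading dimensions count as 1). `torch.broadcast_shapes` (PyTorch 1.8+) computes the result shape without allocating tensors, which makes it a quick way to check a pair before subtracting:

```python
import torch

print(torch.broadcast_shapes((5, 1), (1, 10)))        # torch.Size([5, 10])
print(torch.broadcast_shapes((2, 5, 1), (2, 1, 10)))  # torch.Size([2, 5, 10])
print(torch.broadcast_shapes((5, 1), (5, 10)))        # torch.Size([5, 10])
print(torch.broadcast_shapes((3,), (4, 3)))           # torch.Size([4, 3]): the missing dim counts as 1

mismatch_raised = False
try:
    torch.broadcast_shapes((5, 2), (1, 10))  # 2 vs 10: incompatible
except RuntimeError:
    mismatch_raised = True
print(mismatch_raised)  # True
```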

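# Save a mask as an image, e.g. from inside a pdb session (gt_masks is assumed to be a 2-D [H, W] tensor of 0/1 values in the current scope)

from PIL import Image

import numpy as np

img = Image.fromarray((gt_masks.cpu().numpy() * 255).astype('uint8')).convert('RGB')

img.save('picture.jpg')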

21. torch.nn.CrossEntropyLoss()

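Common pitfall: if the input is 2-D (N, C), the target is (N); if the input is 3-D (N, C, d_k), the target is (N, d_k), not (N, C)!

# 3-D input: (N, C, d_k) = (2, 92, 100), so the target is (N, d_k) = (2, 100)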

import torch

import torch.nn as nn

loss = nn.CrossEntropyLoss()

input = torch.randn(2,92, 100, requires_grad=True)

target = torch.empty(2,100, dtype=torch.long).random_(5)

print(input, target)

output = loss(input, target)

# 2-D input: (N, C) = (92, 100), so the target is (N,) = (92,)

import torch

import torch.nn as nn

loss = nn.CrossEntropyLoss()

input = torch.randn(92, 100, requires_grad=True)

# input = torch.randn(100, 92, requires_grad=True) would raise an error: its batch size N = 100 does not match the target's 92

target = torch.empty(92, dtype=torch.long).random_(5)

print(input, target)

output = loss(input, target)
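With class-index targets, `nn.CrossEntropyLoss` is `log_softmax` over the class dimension (dim 1) followed by the negative log-likelihood loss, which is why the class count C has to sit in dim 1 and the target omits it. A check of that equivalence for the 3-D case, plus the usual permute when a model outputs `[N, d, C]`:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

N, C, d = 2, 5, 7
logits = torch.randn(N, C, d)         # class scores in dim 1
target = torch.randint(0, C, (N, d))  # one class index per (sample, position)

ce = nn.CrossEntropyLoss()(logits, target)
manual = F.nll_loss(F.log_softmax(logits, dim=1), target)
print(torch.allclose(ce, manual))     # True

# Sequence models often produce [N, d, C]; move the classes to dim 1 first
seq_logits = torch.randn(N, d, C)
loss = nn.CrossEntropyLoss()(seq_logits.permute(0, 2, 1), target)
print(loss.shape)                     # torch.Size([]): a scalar with the default mean reduction
```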